If you use this code, please cite it as follows [and star me (0.0)]:

    @misc{Dang_2020_NAS_Attempt,
      author = {Dang, Anh-Chuong},
      title = {Attempt Neural Architecture Search on Visual Question Answering task},
      month = {May},
      year = {2020},
      publisher = {GitHub},
      journal = {GitHub repository},
      commit = {master}
    }

This repository contains a PyTorch implementation of my attempt at Neural Architecture Search (NAS) on vision-language models for the Visual Question Answering (VQA) task.

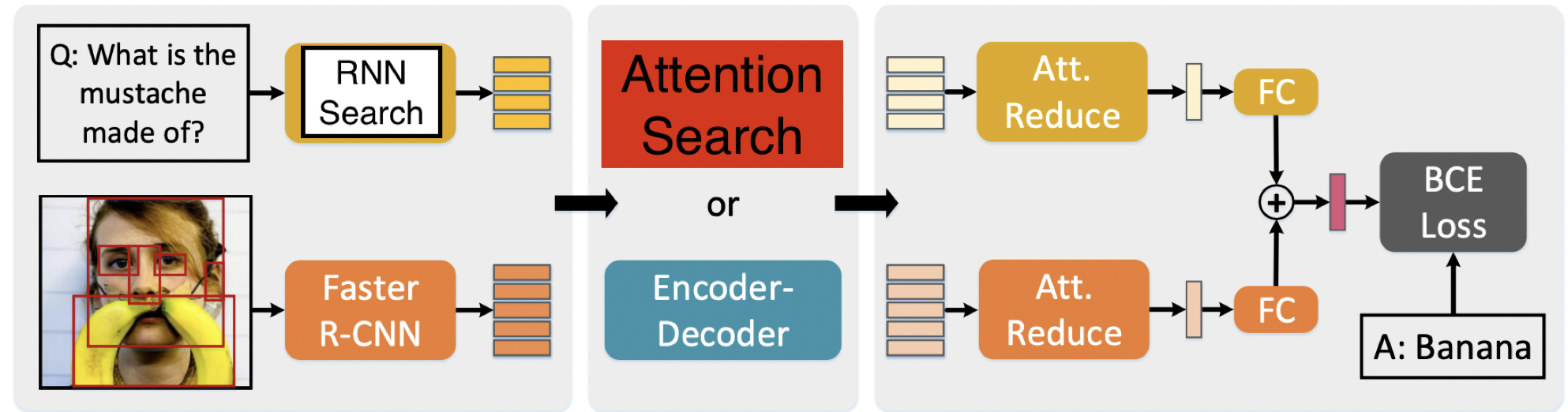

In this work, I took the MCAN-VQA model, factorized its operations, and then applied a search algorithm, namely SNAS (Stochastic Neural Architecture Search), to optimize the network's architecture.
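
To make the search idea concrete, here is a minimal, stdlib-only sketch of the relaxation SNAS is built on: each edge in the search space holds several candidate operations plus a vector of architecture logits, and its output is a weighted sum of the candidates, with weights drawn from a Gumbel-softmax (concrete) distribution so that, in a real autodiff framework, the choice of operation stays differentiable. This is an illustration under simplifying assumptions, not code from this repository: it uses scalar inputs instead of tensors, and the candidate operations are hypothetical stand-ins for the factorized MCAN-VQA operations.

```python
import math
import random


def gumbel_softmax(logits, temperature, rng):
    """Relaxed one-hot sample: softmax((logits + Gumbel(0, 1) noise) / temperature)."""
    noisy = []
    for logit in logits:
        # Clamp u away from 0 and 1 so both logs stay finite.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / temperature)
    # Numerically stable softmax: subtract the max before exponentiating.
    top = max(noisy)
    exps = [math.exp(v - top) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_op(x, ops, logits, temperature, rng):
    """Output of one searchable edge: Gumbel-softmax-weighted sum of candidate ops."""
    weights = gumbel_softmax(logits, temperature, rng)
    output = sum(w * op(x) for w, op in zip(weights, ops))
    return output, weights


# Hypothetical candidates for one edge: skip connection, zero op, and scaling.
ops = [lambda x: x, lambda x: 0.0, lambda x: 2.0 * x]
logits = [1.0, -1.0, 0.5]  # architecture parameters learned during search
rng = random.Random(0)

y, w = mixed_op(3.0, ops, logits, temperature=0.5, rng=rng)
print(y, w)
```

As `temperature` approaches zero, the sampled weights approach one-hot vectors; annealing it during the search is a common way to move from a soft mixture toward a single discrete operation per edge.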

For more details, please refer to the code as well as the summary report, `summary.pdf`.

First, install the necessary packages:

- Install Python >= 3.5
- Install PyTorch >= 1.x with CUDA
- Install spaCy and set up the GloVe word vectors as follows:

      $ pip install -r requirements.txt
      $ wget https://github.com/explosion/spacy-models/releases/download/en_vectors_web_lg-2.1.0/en_vectors_web_lg-2.1.0.tar.gz -O en_vectors_web_lg-2.1.0.tar.gz
      $ pip install en_vectors_web_lg-2.1.0.tar.gz
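
Before moving on, it can help to confirm that the environment is complete. The script below is a hypothetical helper (not part of this repository), written with only the standard library, that checks the Python version, whether `torch`, `spacy`, and `en_vectors_web_lg` can be imported, and whether PyTorch sees a CUDA device.

```python
import importlib
import importlib.util
import sys

# Packages this README asks for; "en_vectors_web_lg" is the spaCy package
# installed from the tarball downloaded above.
REQUIRED_MODULES = ("torch", "spacy", "en_vectors_web_lg")


def check_environment(modules=REQUIRED_MODULES):
    """Map 'python_ok' and each module name to True/False."""
    report = {"python_ok": sys.version_info >= (3, 5)}
    for name in modules:
        # find_spec returns None when a top-level module cannot be found.
        report[name] = importlib.util.find_spec(name) is not None
    return report


def cuda_available():
    """True only if PyTorch is installed and reports a usable CUDA device."""
    if importlib.util.find_spec("torch") is None:
        return False
    torch = importlib.import_module("torch")
    return bool(torch.cuda.is_available())


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print("{:20s} {}".format(name, "OK" if ok else "MISSING"))
    print("{:20s} {}".format("cuda", "OK" if cuda_available() else "MISSING"))
```

Saved under any name (for example a hypothetical `check_env.py`) and run with `python check_env.py`, each `MISSING` line points at the installation step that still needs attention. The `.format` calls are used instead of f-strings so the script itself still runs on Python 3.5.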

The image features are extracted using the bottom-up-attention strategy, with each image represented as a dynamic number (from 10 to 100) of 2048-D features. The features for each image are stored in a `.npz` file. You can prepare the visual features yourself or download the extracted features from OneDrive or BaiduYun. The download contains three files, `train2014.tar.gz`, `val2014.tar.gz`, and `test2015.tar.gz`, corresponding to the features of the train/val/test images for VQA-v2, respectively.
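
Because the number of regions varies per image, features are usually padded to a fixed size before batching. The sketch below assumes NumPy and assumes each `.npz` file stores its array under the key `"x"` with shape (2048, number of regions), which is how the MCAN-VQA loader appears to read them; check the key and layout against your downloaded files before relying on it. It zero-pads every image to 100 regions, the upper bound stated above.

```python
import os
import tempfile

import numpy as np

FEAT_DIM = 2048  # size of each region feature, as described above
MAX_BOXES = 100  # maximum number of regions per image, as described above


def load_and_pad(npz_path, key="x", max_boxes=MAX_BOXES):
    """Load one image's region features and zero-pad them to (max_boxes, FEAT_DIM).

    Assumption: the array is stored under `key` with shape (FEAT_DIM, num_boxes).
    """
    with np.load(npz_path) as data:
        feats = data[key].transpose((1, 0))  # -> (num_boxes, FEAT_DIM)
    feats = feats[:max_boxes]  # defensive truncation
    pad_rows = max_boxes - feats.shape[0]
    return np.pad(feats, ((0, pad_rows), (0, 0)), mode="constant")


# Write a fake feature file with 36 regions, then load it back.
tmp_dir = tempfile.mkdtemp()
path = os.path.join(tmp_dir, "example.npz")
np.savez(path, x=np.full((FEAT_DIM, 36), 0.5, dtype=np.float32))

padded = load_and_pad(path)
# Rows that are all zeros are padding and can be masked out in attention.
padding_mask = np.abs(padded).sum(axis=-1) == 0
print(padded.shape, int(padding_mask.sum()))  # (100, 2048) 64
```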

For more setup details, please refer to the MCAN-VQA repository (https://github.com/MILVLG/mcan-vqa).

For the search stage, run `run_search.py`:

    python run_search.py --RUN=str --GPU=str --SEED=int --PRELOAD=bool
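
Since the search is stochastic, it can be worth repeating it with several seeds. The helper below is a hypothetical convenience script (not part of this repository) that builds the command shown above once per seed; it only prints the commands unless `dry_run=False` is passed, and the flag values used are placeholders.

```python
import shlex
import subprocess
import sys


def build_command(script, **flags):
    """Turn keyword flags into [python, script, '--KEY=value', ...]."""
    cmd = [sys.executable, script]
    for key, value in flags.items():
        cmd.append("--{}={}".format(key, value))
    return cmd


def seed_sweep(seeds, dry_run=True, **flags):
    """Build (and, unless dry_run, launch) one search run per seed."""
    commands = [build_command("run_search.py", SEED=seed, **flags) for seed in seeds]
    for cmd in commands:
        print(" ".join(shlex.quote(part) for part in cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing run
    return commands


# Hypothetical values; dry_run=True only prints the commands.
cmds = seed_sweep([1, 2, 3], RUN="train", GPU="0", PRELOAD=False)
```

Passing the argument list to `subprocess.run` (rather than one shell string) avoids quoting problems with paths that contain spaces.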

- Once you have obtained the desired architecture, copy it into a `VQAGenotype` namedtuple in the `genotypes.py` file of the `model` folder.
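
How the search output maps onto `VQAGenotype` depends on the fields defined in `genotypes.py`, which are not described here. As an illustration only: a common way (used by DARTS-style methods) to turn learned architecture parameters into a discrete architecture is to keep, on each edge, the candidate operation with the largest parameter. The field names `encoder`/`decoder`, the operation names, and the numbers below are hypothetical.

```python
from collections import namedtuple

# Hypothetical layout; the real VQAGenotype fields are defined in model/genotypes.py.
VQAGenotype = namedtuple("VQAGenotype", "encoder decoder")

# Hypothetical candidate operations, one score per op on each edge.
OPS = ["self_att", "guided_att", "ffn", "skip"]


def derive_edges(logits_per_edge, op_names=OPS):
    """Keep the highest-scoring candidate operation on every edge."""
    chosen = []
    for logits in logits_per_edge:
        best = max(range(len(logits)), key=lambda k: logits[k])
        chosen.append(op_names[best])
    return chosen


# Example architecture parameters after search (made-up numbers).
encoder_logits = [[0.1, -0.3, 1.2, 0.0], [2.0, 0.1, 0.3, -1.0]]
decoder_logits = [[-0.5, 1.5, 0.2, 0.0]]

MY_ARCH = VQAGenotype(
    encoder=derive_edges(encoder_logits),
    decoder=derive_edges(decoder_logits),
)
print(MY_ARCH)
# VQAGenotype(encoder=['ffn', 'self_att'], decoder=['guided_att'])
```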

For the evaluation stage, run `run.py`:

    python run.py --RUN=str --ARCH_NAME=str --GPU=str --SEED=int --PRELOAD=bool
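
`--ARCH_NAME` presumably names an architecture saved in `model/genotypes.py`; that is an assumption, since `run.py` is not shown here. DARTS-style code often resolves such a name with `eval("genotypes.%s" % name)`; the sketch below shows the same lookup with `getattr`, which avoids evaluating arbitrary strings and lists the available names when the lookup fails. The stand-in module, its field names, and `MY_ARCH` are hypothetical.

```python
import types
from collections import namedtuple


def resolve_arch(genotypes_module, arch_name):
    """Return the genotype named arch_name, with a readable error if it is missing."""
    try:
        return getattr(genotypes_module, arch_name)
    except AttributeError:
        available = sorted(n for n in dir(genotypes_module) if not n.startswith("_"))
        raise ValueError(
            "Unknown architecture {!r}; available: {}".format(arch_name, available)
        ) from None


# Stand-in for model/genotypes.py with one saved architecture (hypothetical fields).
VQAGenotype = namedtuple("VQAGenotype", "encoder decoder")
genotypes = types.ModuleType("genotypes")
genotypes.VQAGenotype = VQAGenotype
genotypes.MY_ARCH = VQAGenotype(encoder=["ffn", "self_att"], decoder=["guided_att"])

arch = resolve_arch(genotypes, "MY_ARCH")  # e.g. --ARCH_NAME=MY_ARCH
print(arch.decoder)  # ['guided_att']
```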

where:

- `str` should be replaced with a string of your choice; e.g. for `--RUN`, the options are {'train', 'val'}.
- `int` is an integer of your choice.
- `bool` is a boolean, i.e. `True` or `False`.
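
One detail worth knowing about the `bool` placeholders: if a script parses such a flag with argparse's `type=bool`, any non-empty string, including `"False"`, becomes `True`, because `bool("False")` is `True`. The sketch below is a hypothetical parser for the flags listed above (not the repository's actual argument handling) that converts the string explicitly.

```python
import argparse


def str2bool(text):
    """Parse 'True'/'False' (case-insensitive) into a real bool."""
    lowered = text.strip().lower()
    if lowered in ("true", "1", "yes"):
        return True
    if lowered in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError("expected True or False, got {!r}".format(text))


def build_parser():
    """Hypothetical parser mirroring the flags above; not the repository's actual code."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--RUN", type=str, choices=["train", "val"], default="train")
    parser.add_argument("--ARCH_NAME", type=str)
    parser.add_argument("--GPU", type=str, default="0")  # kept as a string
    parser.add_argument("--SEED", type=int)
    parser.add_argument("--PRELOAD", type=str2bool, default=False)
    return parser


args = build_parser().parse_args(["--RUN=val", "--GPU=0", "--SEED=42", "--PRELOAD=False"])
print(args.RUN, args.SEED, args.PRELOAD)  # val 42 False
```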

The project was a work in progress. However, around the end of April 2020, a great paper taking a quite similar approach, with more favorable results, was published, so I unfortunately decided to stop this project.

Published paper (mentioned above): Deep Multimodal Neural Architecture Search

Out of personal curiosity, any further suggestions and advice are welcome.

https://github.com/MILVLG/mcan-vqa

https://github.com/cvlab-tohoku/Dense-CoAttention-Network