- Original Code available: https://github.com/aimagelab/meshed-memory-transformer
- Fixed the `/` vs `//` error in the original code following this discussion
- Fixed the `UserWarning: masked_fill_ received a mask with dtype torch.uint8, this behavior is now deprecated,please use a mask with dtype torch.bool instead.` warning in the attention mechanism
- Improved speed of the `Dataset` class
- Added `hpc` scripts and `setup.qsub`
- Added loss/eval training and validation plot functionality (runs automatically)
- Added a potential fix to the `<eos>` bug in SCST.
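To illustrate why the `/` vs `//` distinction matters, here is a minimal sketch in plain Python. It does not reproduce the actual code that was fixed. It assumes a typical case where a row index is recovered from a flattened index, and every name and size in it (`beam_size`, `vocab_size`, `scores`) is hypothetical.

```python
# Hypothetical sizes, chosen only for illustration (not taken from the repository).
beam_size = 3
vocab_size = 5

# Flattened scores for beam_size * vocab_size candidates.
scores = [i / 10 for i in range(beam_size * vocab_size)]

# Position of the best candidate in the flattened list.
flat_idx = max(range(len(scores)), key=scores.__getitem__)

# True division returns a float, which cannot be used as an index.
beam_float = flat_idx / vocab_size

# Floor division returns the integer row (beam) index.
beam_idx = flat_idx // vocab_size

# The remainder gives the column (word) index within that row.
word_idx = flat_idx % vocab_size

print(beam_float, beam_idx, word_idx)
```

In Python 3, `/` always performs true division, so code written with integer division in mind needs `//` wherever the result is used as an index or a size.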

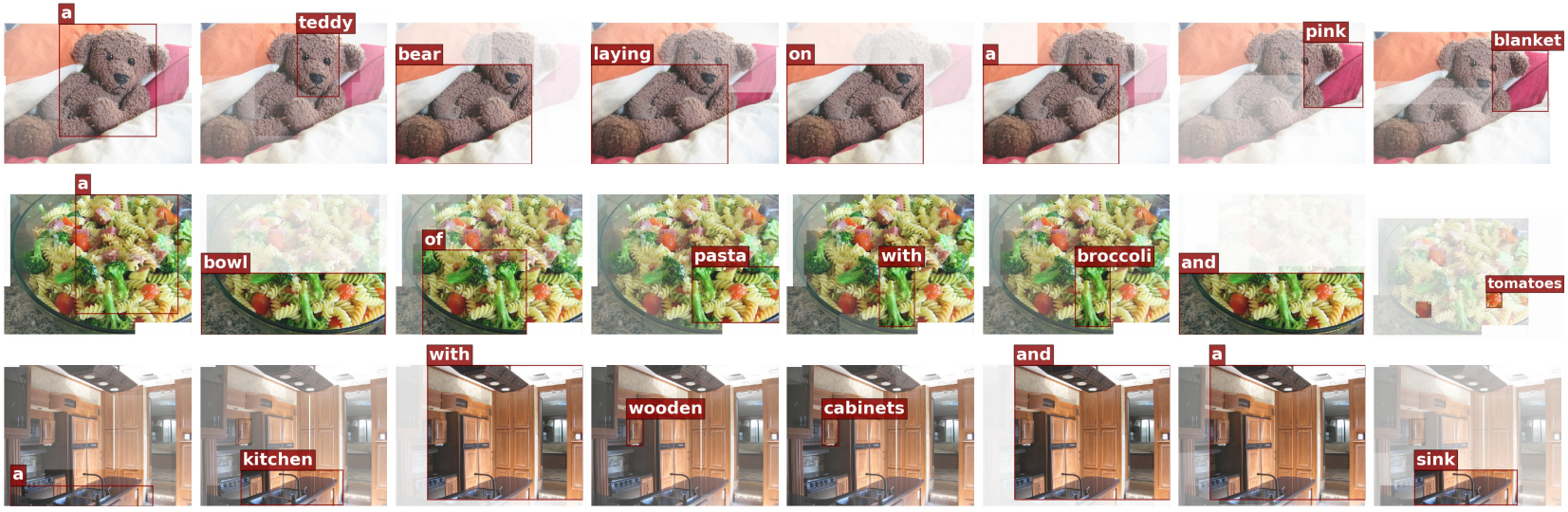

This repository contains a fork of the reference code for the paper Meshed-Memory Transformer for Image Captioning (CVPR 2020).

Please cite the original work using the following BibTeX entry:

    @inproceedings{cornia2020m2,
      title={{Meshed-Memory Transformer for Image Captioning}},
      author={Cornia, Marcella and Stefanini, Matteo and Baraldi, Lorenzo and Cucchiara, Rita},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      year={2020}
    }

Run the following:

    cd evaluations
    bash get_stanford_models.sh

See this post for more information.

See `setup.qsub`. On QMUL's Apocrita/Andrena HPC system, this job can be automated with the following steps:

- Check the directories are as expected
- Run `qsub setup.qsub`

See `train.py` for the complete list of arguments. An HPC job script is provided in `hpc/train.qsub`. Make sure the script is amended to match your username and directory structure, i.e. don't use `$USER$` in the header information. Submit the job with `qsub train.qsub` from within the `hpc` directory.
