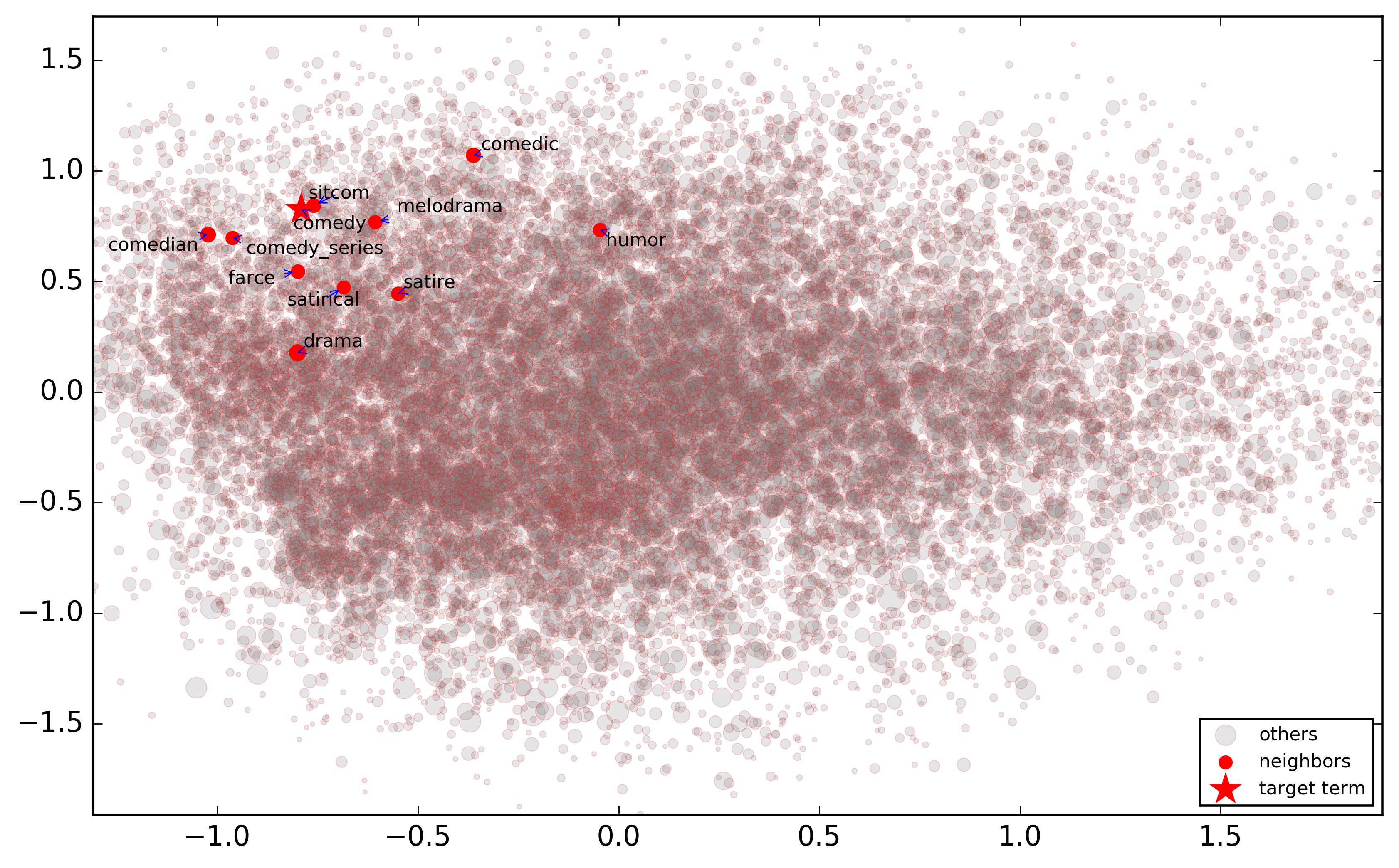

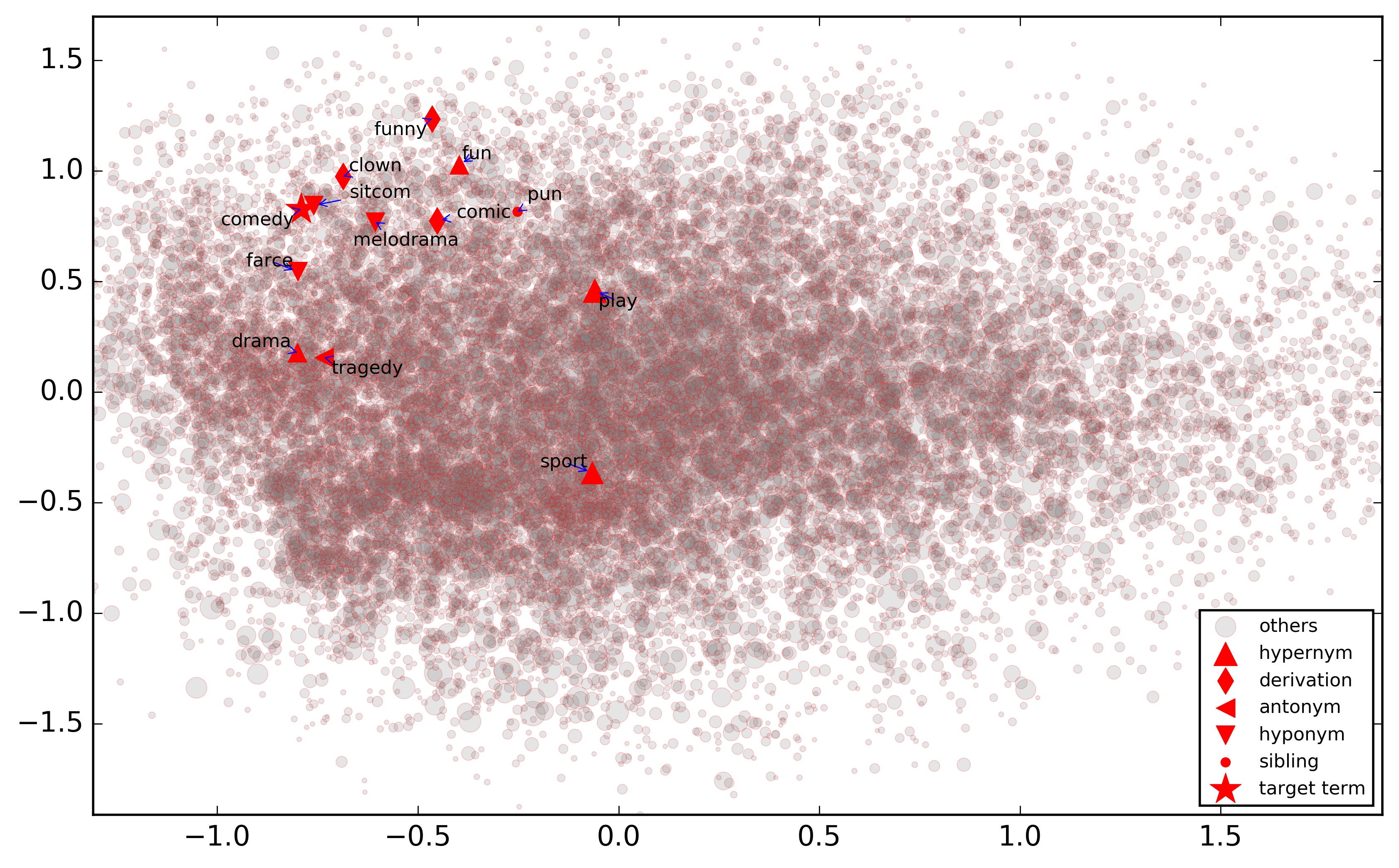

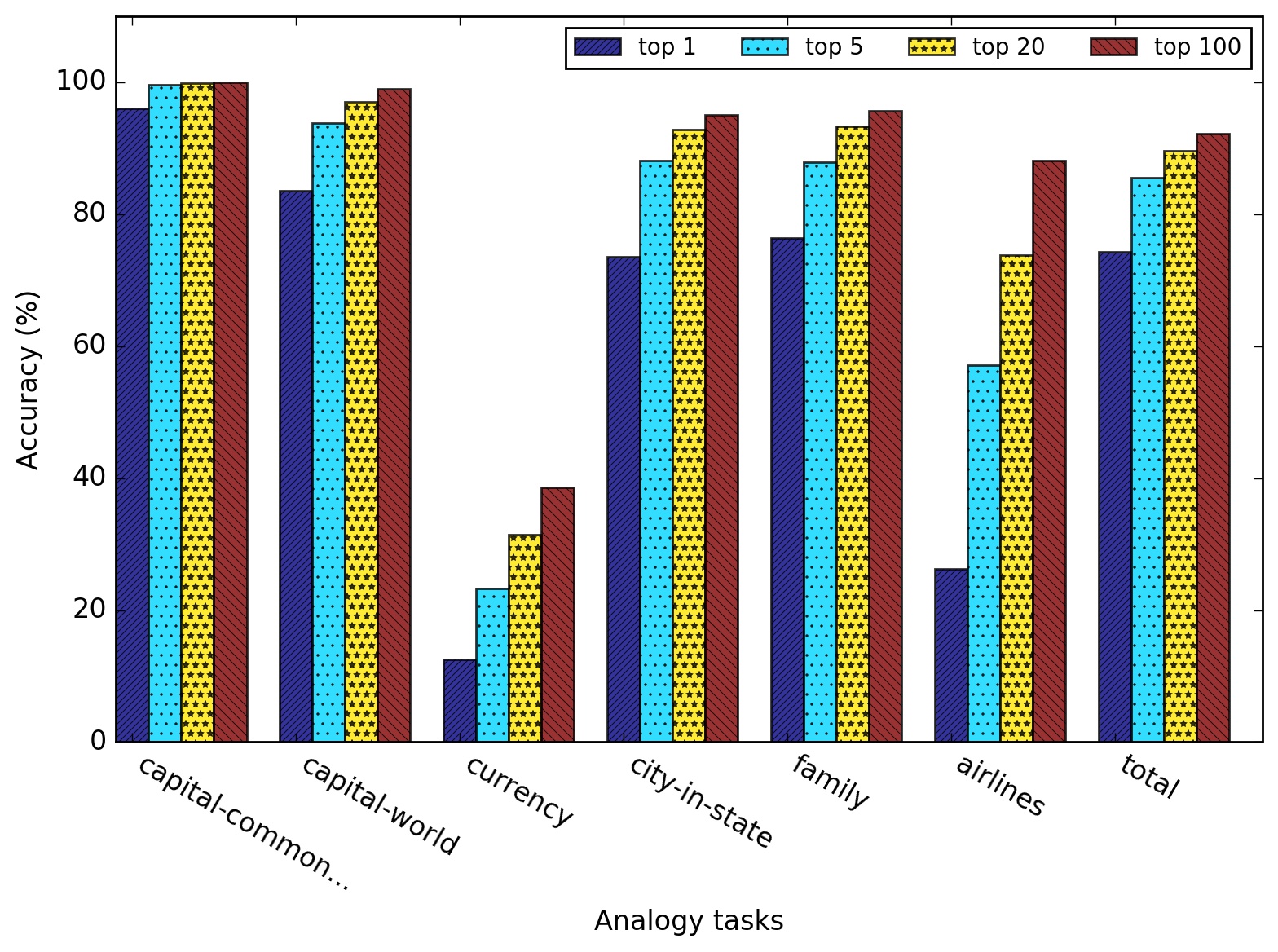

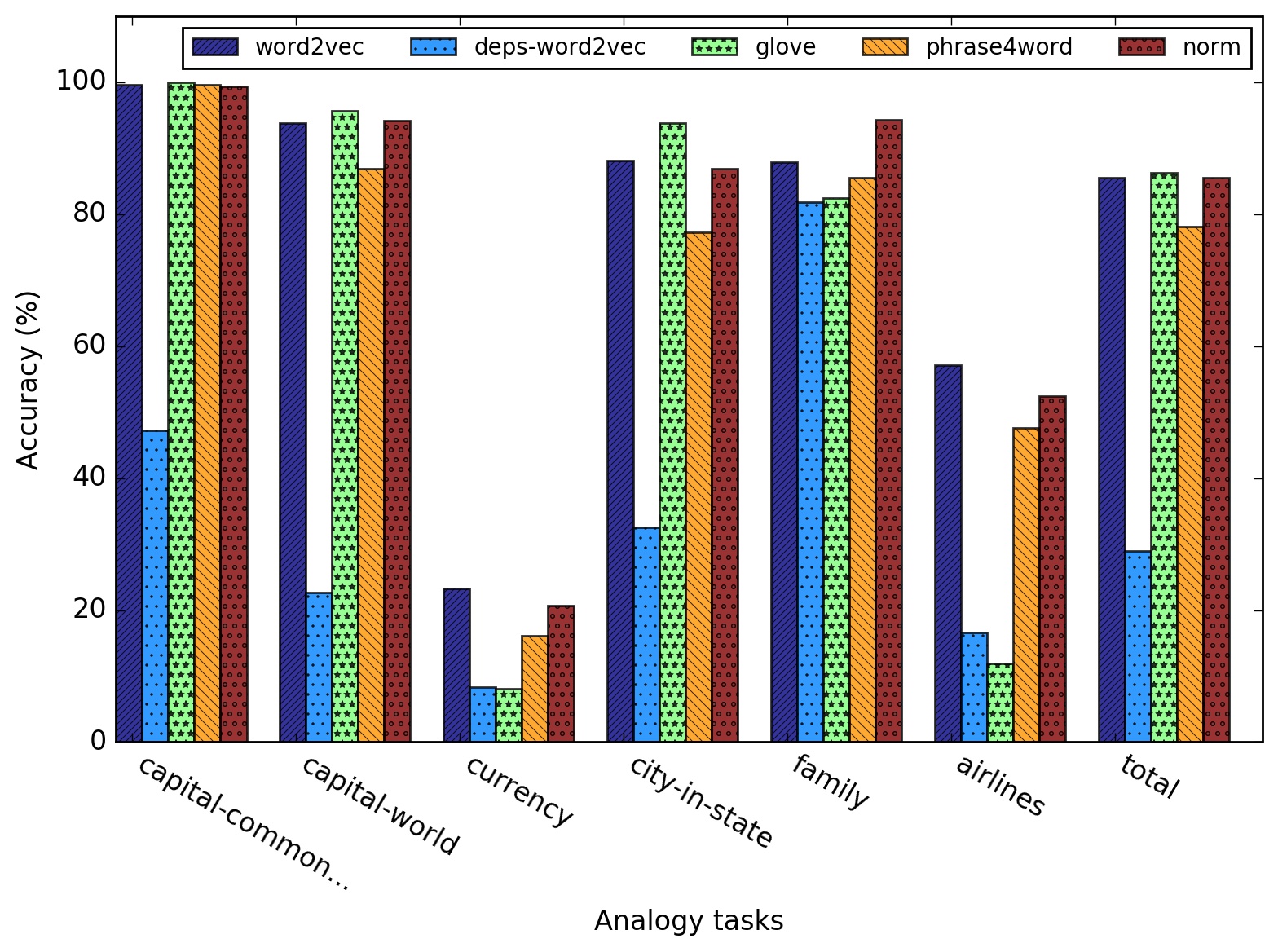

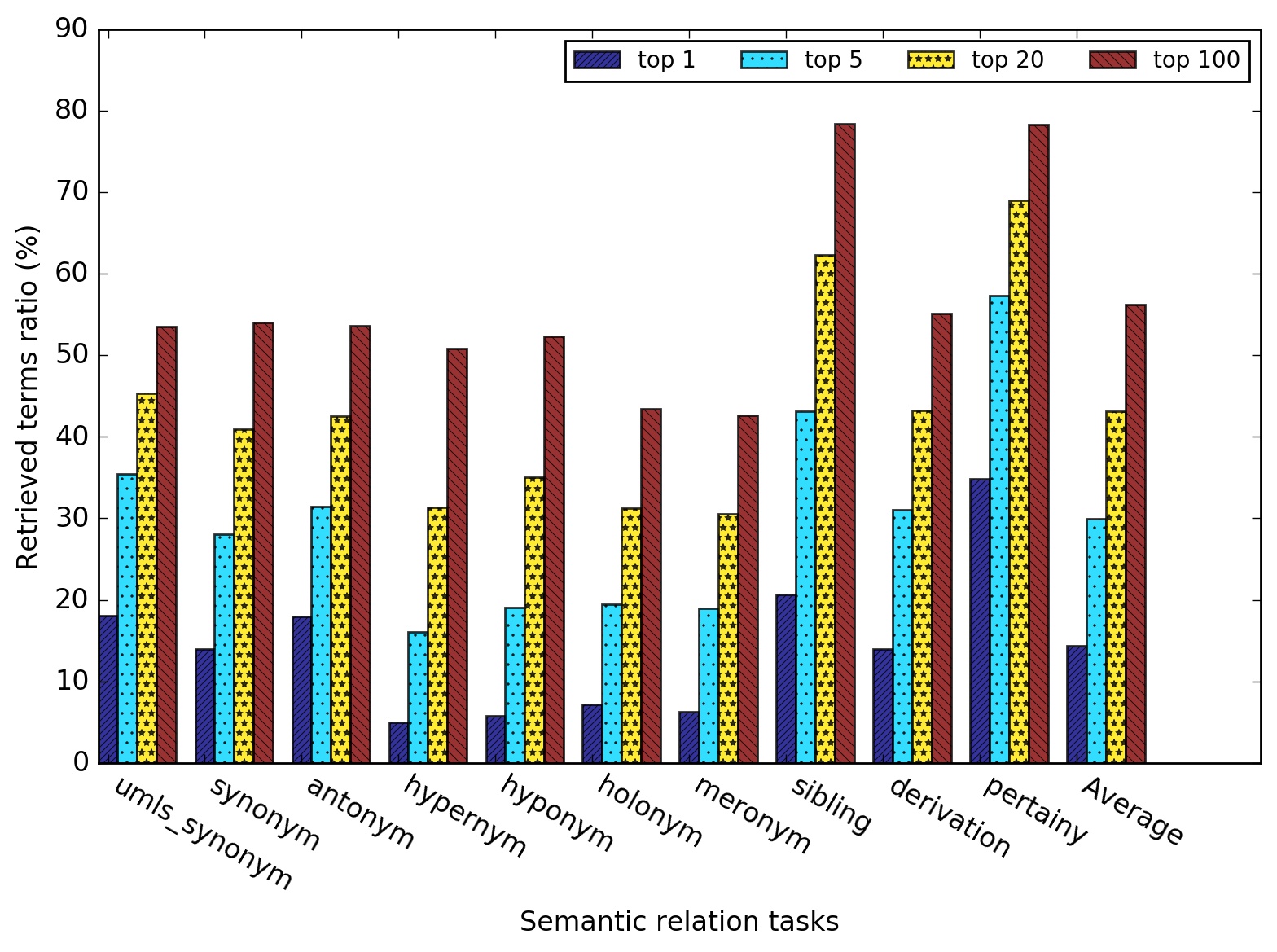

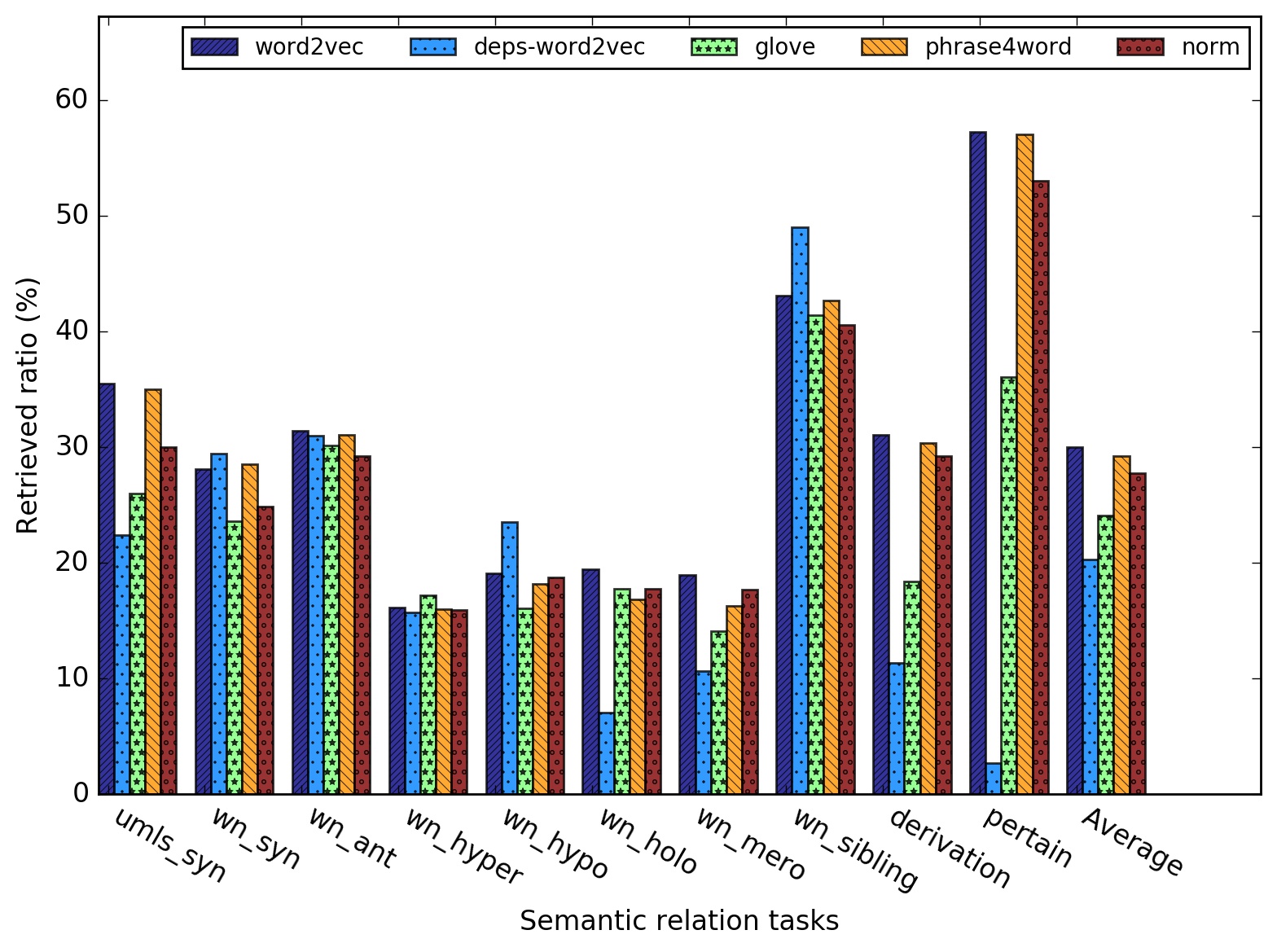

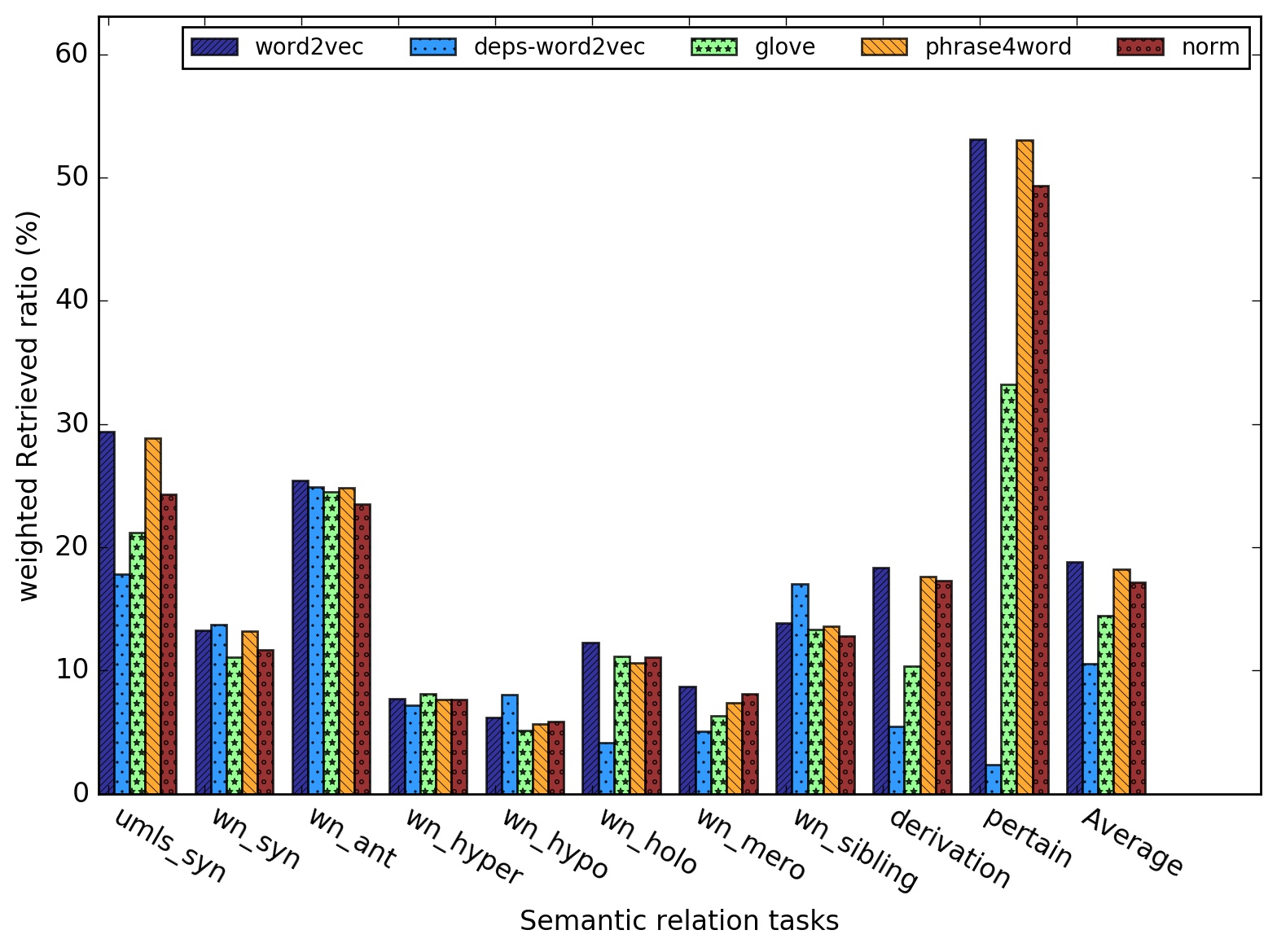

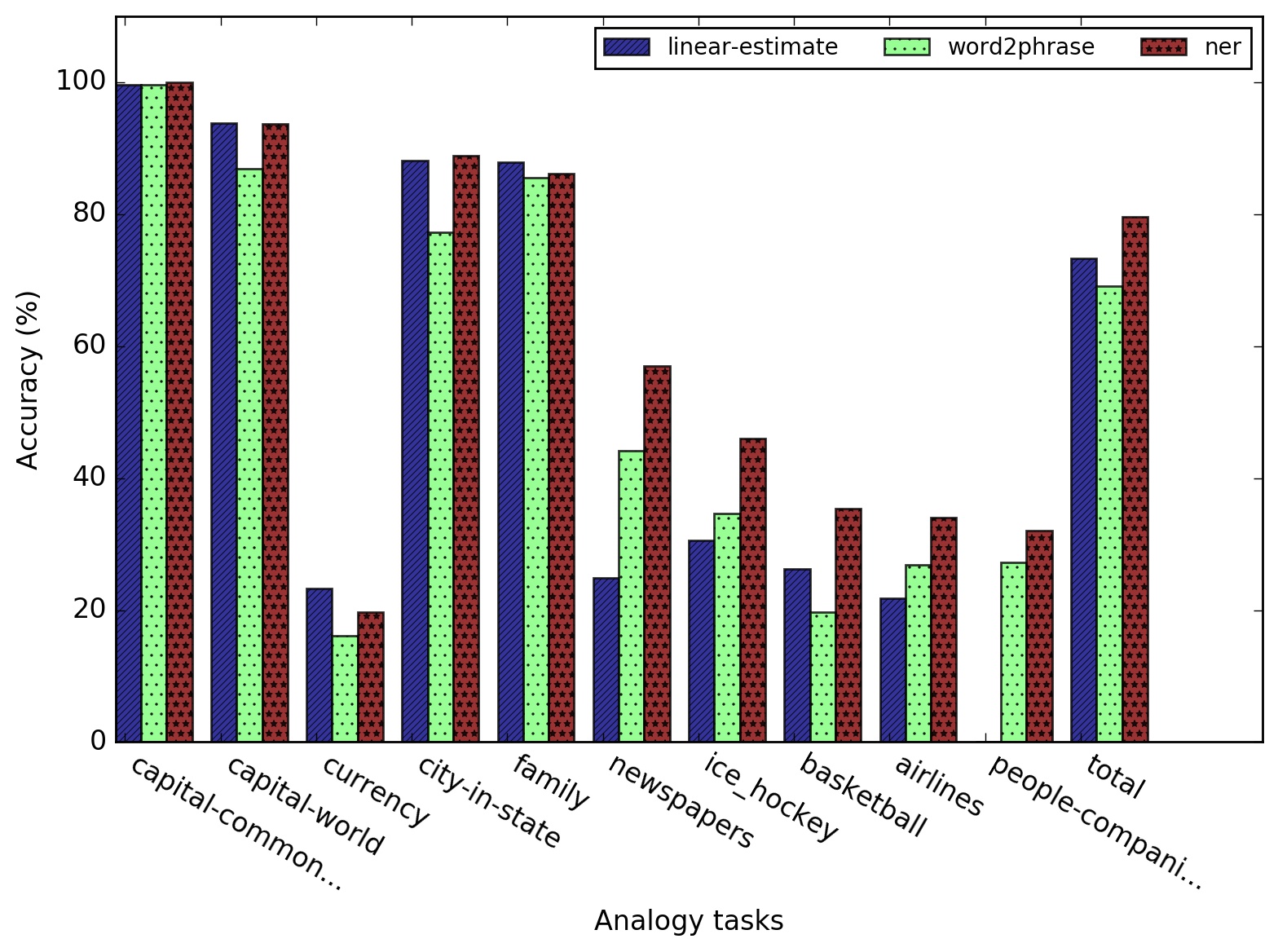

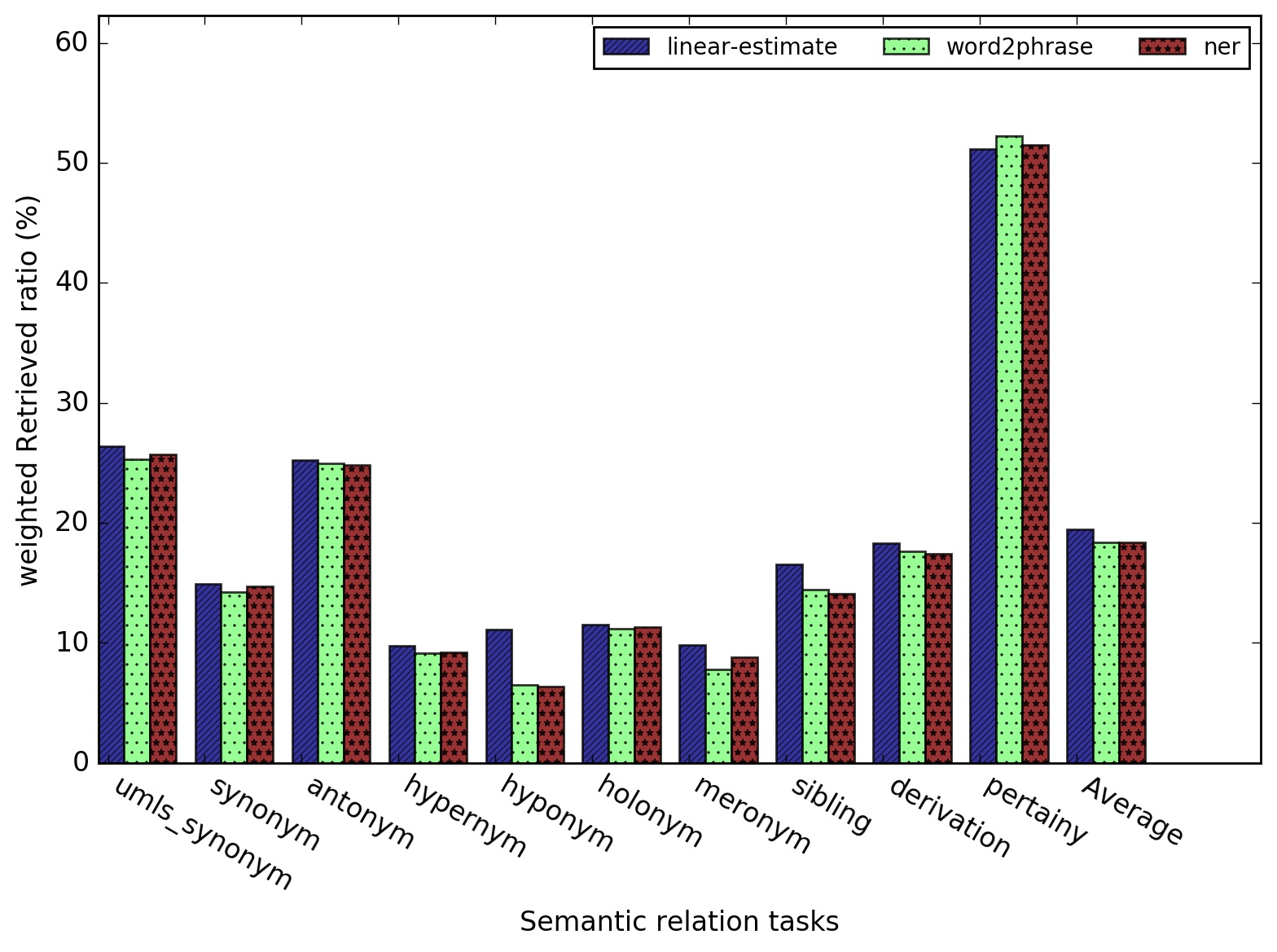

Neural-network-based word embeddings have demonstrated outstanding results in a variety of tasks and have become a standard input for NLP-related deep learning research. These representations can capture semantic regularities in language, e.g. analogy relations. However, the general question "what kinds of semantic relations does an embedding represent, and how can those relations be retrieved using the embedding model?" remains unclear, and little relevant work has explored it. In this study, we propose a new approach, based on WordNet and UMLS, to explore the semantic relations represented in neural embeddings. Our study demonstrates that neural embeddings favor some semantic relations while also representing a diverse range of them. We also found that NER-based phrase composition outperformed Word2phrase, and that word variants did not affect performance on the analogy and semantic relation tasks.
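To make the notion of an analogy relation concrete, here is a minimal sketch of the widely used vector-offset approach: for "a is to b as c is to d", look for the word whose vector is closest (by cosine similarity) to `b - a + c`. The vectors in `toy` are invented for illustration and do not come from this repository, the helper names `cosine` and `solve_analogy` are hypothetical, and this is not necessarily the evaluation procedure used in the study.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def solve_analogy(embeddings, a, b, c):
    """Return the word d that best completes 'a is to b as c is to d'."""
    # Offset vector: b - a + c, computed component by component.
    target = [
        vb - va + vc
        for va, vb, vc in zip(embeddings[a], embeddings[b], embeddings[c])
    ]
    # Exclude the three query words, as is conventional for analogy tasks.
    candidates = (w for w in embeddings if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine(embeddings[w], target))


# Toy 3-dimensional vectors, made up for this example only.
toy = {
    "man": [1.0, 0.0, 0.2],
    "woman": [1.0, 1.0, 0.2],
    "king": [1.0, 0.0, 1.0],
    "queen": [1.0, 1.0, 1.0],
    "apple": [0.0, 0.1, 0.0],
}

print(solve_analogy(toy, "man", "woman", "king"))
```

With real embeddings the same search runs over the whole vocabulary, so the result depends heavily on the training corpus and on how phrases and word variants are handled.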

forked from zwChan/Wordembedding-and-semantics


Evaluation and improvement of word embedding and semantics


Demon-JieHao/Wordembedding-and-semantics


Languages

- Python 100.0%