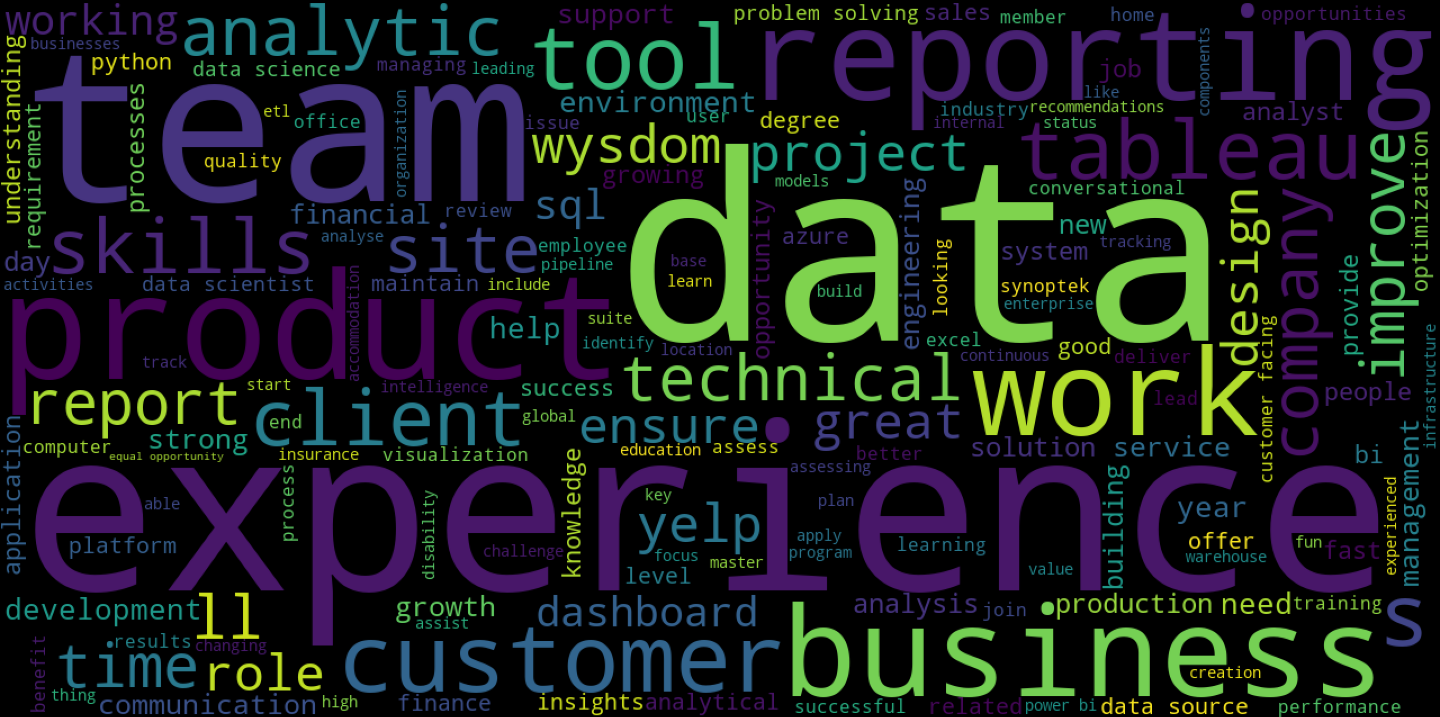

This Python script scrapes up to 100 of the most recent LinkedIn Job Postings for any job title and visualizes their sentiment as a word cloud. It is currently limited to Canada, but the code can easily be changed for any other location.

See the Jupyter notebook linkedsent.ipynb for a detailed presentation.

- LinkedIn jobs sentiment visualization and scraping - Python, JavaScript

It is crucial for job seekers to write resumes, cover letters, and LinkedIn profiles using the language of their potential employers. The task is even more difficult for recent graduates and those switching fields, who must read through dozens of Job Postings to pick up the lingo before they even start drafting a resume or a cover letter.

Instead of requiring you to read through Job Postings manually, this script scrapes up to 100 recent Job Postings in Canada and creates a Word Cloud visualization in which the size of each word represents how frequently it is used across all postings.

This script can also be useful for career exploration, comparison of different positions, or even for monitoring how employers' sentiment changes over time.

When starting as a Data Analyst, I was told by a Career Strategist that I should use 'employers' language' in my application documents... The challenge was accepted with the mindset of a Data Analyst :).

Throughout the project, multiple web parsing and scraping tools were explored, such as requests, selenium, and bs4. ChromeDriver, which is a tool designed for automated testing of web apps across many browsers, was adapted to navigate a webpage and load its hidden content.

Also, a few third-party libraries were discovered and used, such as wordcloud to generate the word cloud and advertools to filter out stop words in multiple languages.
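As a rough illustration of how word size can follow word frequency, the sketch below counts words across posting texts after dropping stop words. It is not the code from linkedsent.py: the names `word_frequencies`, `plot_word_cloud`, and `STOP_WORDS` are hypothetical, and the tiny stop-word set is only a stand-in for the English and French lists that advertools provides.

```python
import re
from collections import Counter

# Illustrative stand-in only; the script itself relies on advertools stop-word lists.
STOP_WORDS = {"the", "and", "a", "of", "to", "in", "le", "la", "les", "et", "de"}


def word_frequencies(texts, stop_words=STOP_WORDS):
    """Count how often each non-stop word appears across all texts."""
    counts = Counter()
    for text in texts:
        # Runs of letters only (digits and underscores excluded), lowercased
        words = re.findall(r"[^\W\d_]+", text.lower())
        counts.update(word for word in words if word not in stop_words)
    return counts


def plot_word_cloud(frequencies):
    """Draw a word cloud whose word sizes follow the given frequencies."""
    # Imported lazily; requires the wordcloud and matplotlib packages.
    from wordcloud import WordCloud
    import matplotlib.pyplot as plt

    cloud = WordCloud(width=800, height=400, background_color="white")
    cloud.generate_from_frequencies(frequencies)
    plt.imshow(cloud, interpolation="bilinear")
    plt.axis("off")
    plt.show()
```

Calling `plot_word_cloud(word_frequencies(posting_texts))` on a list of scraped descriptions would open a window with the image, assuming both packages are installed.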

Hidden webpage content

- Problem: The original LinkedIn web page only displays around 13 Job Postings by default. Up to 100 Job Postings are loaded as the user scrolls down the web page.

- Solution: Using `selenium` and `ChromeDriver` to open the webpage and scroll down to the bottom of the page.
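The scrolling approach can be sketched roughly as follows. This is an assumption about how such a loop might look, not the script's actual code: `scroll_to_bottom` and `load_full_page` are hypothetical names, and the pause and round limits are arbitrary defaults. The loop keeps scrolling until the page height stops growing.

```python
import time


def scroll_to_bottom(driver, pause=1.0, max_rounds=20):
    """Scroll until the page height stops growing; return the number of scrolls."""
    last_height = driver.execute_script("return document.body.scrollHeight")
    rounds = 0
    while rounds < max_rounds:
        # JavaScript executed in the browser to jump to the current page bottom
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(pause)  # give lazily loaded postings time to appear
        new_height = driver.execute_script("return document.body.scrollHeight")
        rounds += 1
        if new_height == last_height:
            break  # nothing new was loaded, so the bottom has been reached
        last_height = new_height
    return rounds


def load_full_page(url):
    """Open url in Chrome, scroll to the bottom, and return the page HTML."""
    # Imported lazily; requires selenium, Google Chrome, and ChromeDriver.
    from selenium import webdriver

    driver = webdriver.Chrome()
    try:
        driver.get(url)
        scroll_to_bottom(driver)
        return driver.page_source
    finally:
        driver.quit()
```

Stopping when the height no longer changes avoids hard-coding a scroll count, at the cost of one extra scroll at the end.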

- Problem: When scraping data from 100 links using a `for` loop, only about 20 would be scraped successfully. The rest would be blocked with HTTP error 429 (Too Many Requests) because the requests were sent too frequently.

- Solution: Using the `time` module to pause the execution for 1 second at the end of each loop iteration, and printing out the response code to ensure that all 100 links give response 200.
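A minimal sketch of this throttled loop is shown below. The function name `fetch_all` and its `get` parameter are hypothetical; passing the fetch function in keeps the example independent of any particular HTTP library, and in practice `requests.get` would be the natural argument.

```python
import time


def fetch_all(urls, get, delay=1.0):
    """Fetch each URL in turn, pausing after every request to avoid error 429."""
    responses = []
    for url in urls:
        response = get(url)
        # Print the status code so a 429 is noticed immediately
        print(response.status_code)
        responses.append(response)
        time.sleep(delay)  # pause at the end of each loop iteration
    return responses
```

With the requests module installed, `fetch_all(links, requests.get)` would fetch every link with a one-second pause between requests.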


- Problem: When scraping Job Postings with `bs4` and converting them to text, this error would be printed: `Error: 'NoneType' object has no attribute 'text'`

- Solution: Putting the `for` loop inside `try`/`except` solved the issue.
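The error appears because a Beautiful Soup lookup returns `None` when no tag matches, and `None` has no `.text` attribute. The sketch below is a variant of the fix described above, not the script's own code: it places the `try`/`except` inside the loop body so that one posting without a match is skipped while the rest are still collected. The names `safe_text` and `extract_descriptions`, and the default `tag` and `css_class` values, are hypothetical placeholders rather than LinkedIn's real markup.

```python
def safe_text(element):
    """Return the element's stripped text, or None when the lookup found nothing."""
    try:
        return element.text.strip()
    except AttributeError:
        # soup.find() returns None when no tag matches, and None has no .text
        return None


def extract_descriptions(pages, tag="div", css_class="description"):
    """Collect posting text from HTML strings, skipping pages without a match."""
    # Imported lazily; requires the bs4 package.
    from bs4 import BeautifulSoup

    descriptions = []
    for html in pages:
        soup = BeautifulSoup(html, "html.parser")
        text = safe_text(soup.find(tag, class_=css_class))
        if text is not None:
            descriptions.append(text)
    return descriptions
```

Catching only `AttributeError` keeps other problems, such as network failures, visible instead of silently swallowing them.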


- `Python 3.8.8` as a main language.

- `JavaScript` to scroll down and load the full length of web pages.

- `chromedriver` standalone server to automate opening and navigating webpages.

- `Google Chrome` web browser to run ChromeDriver.

- `time` module to pause between web queries to avoid HTTP error 429.

- `requests` module to fetch web content.

- `selenium` module to open and navigate through a webpage.

- `bs4` Beautiful Soup module to scrape content from web pages.

- `advertools` module to filter out English and French stop words.

- `wordcloud` module to generate a word cloud.

- `matplotlib` module to plot the word cloud.

- Install Python 3 on your system.

- Install all libraries/modules listed in Technologies Used.

  - On Mac OS open `Terminal.app`.

  - Type `pip install` and the name of the module you want to install, then press Enter.

- Download and install Google Chrome web browser.

- Download ChromeDriver, unzip it, and move it to the `/usr/local/bin` location on Mac OS.

- Download the linkedsent.py file from this GitHub repository.

- Open linkedsent.py with any text editor, such as `TextEdit.app` or `Atom.app`.

- Look for this code approximately in the first 30 lines.

```python
# specifying URL and number of job postings
postings_name = 'Data Analyst'
position_num = 10 # numbers 1 to 100
```

- Replace `Data Analyst` with a Job Position of your choice.

- Replace the number `10` with the number of jobs you want to scrape. The maximum number is 100.

- Open Terminal.app. Type `python`, add a space, then drag and drop `linkedsent.py` into the Terminal window and press `Return`. This will run the script, which opens the LinkedIn webpage in the Google Chrome browser and scrolls down it. You should then see multiple `<Response [200]>` lines, each representing a single fetched Job Posting. Eventually, it will open a separate window with the word cloud image.

- Click the save button to save the image locally.
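To illustrate how the two settings in the steps above might feed into the scraper, here is a hedged sketch. It is not taken from linkedsent.py: the helper names are hypothetical, and the search URL pattern with `keywords` and `location` query parameters is an assumption about LinkedIn's public job search page that may not match what the script uses.

```python
from urllib.parse import urlencode

MAX_POSTINGS = 100  # upper limit stated in this README


def build_search_url(postings_name, location="Canada"):
    """Build a job search URL for the given title (URL pattern is an assumption)."""
    query = urlencode({"keywords": postings_name, "location": location})
    return f"https://www.linkedin.com/jobs/search?{query}"


def clamp_position_num(position_num):
    """Keep the requested number of postings within the supported 1 to 100 range."""
    return max(1, min(MAX_POSTINGS, int(position_num)))
```

Under this assumption, changing the `location` argument would be one way to target a country other than Canada.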

The project is: complete

I am no longer working on it since I received the result I was looking for. But if you have some ideas or want me to modify something contact me and we should be able to collaborate.

- Tested on Mac OS only.

- The script only runs with `ChromeDriver` and all the modules listed in Technologies Used.

- The script only scrapes Job Postings in Canada (which can be easily changed inside the script).

- The maximum number of Job Postings to scrape is 100.

- Testing and logging the issues.

- Designing solution to avoid using ChromeDriver and Chrome Browser.

- Making an executable file without the need to install additional modules.

- Developing GUI to be able to specify:

- Job Title

- location

- number of Job Postings to scrape

- Image aspect ratio and quality

- the path where to save the image

- Building a web app.

This project is open-source and available under the GNU General Public License v3.0

Created by @DmytroNorth - feel free to contact me at [email protected]!