Unsupervised video summarization using ITL-Autoencoder

Many frames in a video are not needed to understand its content, and watching them wastes time. We can solve this problem by detecting the important objects in a video and automatically shortening the video to the parts that contain them. Object detection already performs well in computer vision, but it detects unimportant objects just as readily as important ones; detecting only the important objects remains a challenging problem. If we can detect only the important objects in a video, the technique can be applied in many fields.

For example, in underwater settings we can record a video to check what is happening, but it is difficult to sort out which parts of the recording are important and which are not. Checking every frame of a long video by hand to find the important parts is a waste of time.

This project uses an autoencoder to detect the important parts of each frame. With this model, we extract the important parts and produce a shorter video that contains only the important events. Because the approach is unsupervised, no human annotations are required to learn what an important event is. In the evaluation, the summarization process produces two summarized videos: one containing the important events and one containing the unimportant events.
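The final output described above, two summaries built from per-frame decisions, can be sketched as follows. This is a minimal sketch that assumes the model has already labeled every frame as important (1) or unimportant (0); the helper names `label_runs` and `split_summaries`, the label sequence, and the frame rate are hypothetical and not taken from this project. It only shows how per-frame labels become contiguous segments and how long each summary is.

```python
from typing import List, Optional, Tuple

Segment = Tuple[int, int]  # (first_frame, last_frame), both inclusive


def label_runs(labels: List[int], target: int) -> List[Segment]:
    """Merge consecutive frames whose label equals `target` into segments."""
    segments: List[Segment] = []
    start: Optional[int] = None
    for i, label in enumerate(labels):
        if label == target and start is None:
            start = i                          # a new run begins here
        elif label != target and start is not None:
            segments.append((start, i - 1))    # the run ended on the previous frame
            start = None
    if start is not None:
        segments.append((start, len(labels) - 1))  # the run lasts until the last frame
    return segments


def split_summaries(labels: List[int], fps: float):
    """Return the important and unimportant segments and their durations in seconds."""
    important = label_runs(labels, target=1)
    unimportant = label_runs(labels, target=0)

    def seconds(segments: List[Segment]) -> float:
        return sum(end - start + 1 for start, end in segments) / fps

    return important, unimportant, seconds(important), seconds(unimportant)


labels = [0, 0, 1, 1, 1, 0, 0, 0, 1, 1]   # hypothetical per-frame output, 1 = important
important, unimportant, t_imp, t_unimp = split_summaries(labels, fps=2.0)
print(important)       # [(2, 4), (8, 9)]
print(unimportant)     # [(0, 1), (5, 7)]
print(t_imp, t_unimp)  # 2.5 2.5
```

Working on segments rather than on individual frames makes it straightforward to cut the original video into the two summaries with any video tool that accepts start and end frames.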

Paper: Unsupervised video summarization using ITL-Autoencoder

- The first step is to extract the frames from a video

- The second step is to generate pseudo labels for the frames

- Information-Theoretic Learning Autoencoder (ITL-AE)

- The third step is to classify the actual frames using their reconstruction scores together with the pseudo labels

- The fourth step is to generate a summarized video
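Information-theoretic learning (ITL) replaces moment-based training criteria with quantities estimated directly from samples using Parzen windows. A typical example is the Cauchy-Schwarz divergence, which an ITL autoencoder can use to push the distribution of its latent codes toward a chosen prior. This README does not state which ITL criterion the ITL-AE here uses, so the following is an illustrative sketch of that one quantity only; the function names, the kernel width `sigma`, and the sample values are assumptions.

```python
import math
from typing import List, Sequence

Sample = Sequence[float]  # one latent code or one prior sample


def information_potential(xs: List[Sample], ys: List[Sample], sigma: float) -> float:
    """Parzen-window estimate of the integral of p(x) * q(x), up to a constant.

    Two Gaussian windows of width sigma convolve into a Gaussian with
    variance 2 * sigma**2, hence the 4 * sigma**2 in the exponent. The
    normalizing constant is omitted because it cancels in the divergence.
    """
    total = 0.0
    for x in xs:
        for y in ys:
            sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
            total += math.exp(-sq_dist / (4.0 * sigma ** 2))
    return total / (len(xs) * len(ys))


def cauchy_schwarz_divergence(xs: List[Sample], ys: List[Sample], sigma: float = 1.0) -> float:
    """D_CS(p, q) = -2 log V(p, q) + log V(p, p) + log V(q, q).

    Non-negative, and zero when both sample sets are identical. If the sets are
    very far apart relative to sigma, V(p, q) can underflow to 0; use a larger sigma.
    """
    cross = information_potential(xs, ys, sigma)
    return (-2.0 * math.log(cross)
            + math.log(information_potential(xs, xs, sigma))
            + math.log(information_potential(ys, ys, sigma)))


codes = [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.1]]        # hypothetical encoder outputs
prior = [[0.0, 0.0], [0.5, 0.5], [-0.5, -0.5]]        # hypothetical samples from a prior
print(cauchy_schwarz_divergence(codes, codes))         # 0.0
print(cauchy_schwarz_divergence(codes, prior) > 0.0)   # True
```

In an autoencoder trained this way, a term like this would typically be added to the reconstruction error with a weighting factor; the weight, `sigma`, and the choice of prior are design decisions that are not specified here.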

Results of video summarization

| Method | Accuracy |
|---|---|
| Pseudo labels | 65.33% |
| Pseudo labels + Reconstruction loss | 87.44% |

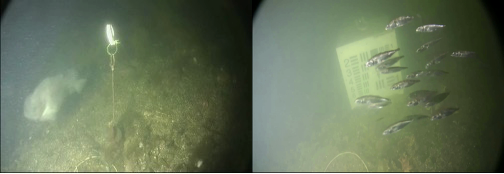
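How the accuracies in the table are measured is not stated here. Assuming they are frame-level accuracies, that is, the share of frames whose important/unimportant label agrees with a ground-truth annotation, the calculation would look like the sketch below; the function name `frame_accuracy` and the toy labels are hypothetical.

```python
from typing import Sequence


def frame_accuracy(predicted: Sequence[int], ground_truth: Sequence[int]) -> float:
    """Fraction of frames whose predicted label matches the annotation."""
    if len(predicted) != len(ground_truth):
        raise ValueError("label sequences must cover the same frames")
    if not predicted:
        raise ValueError("no frames to evaluate")
    matches = sum(p == g for p, g in zip(predicted, ground_truth))
    return matches / len(predicted)


truth = [0, 0, 1, 1, 1, 0, 0, 0]   # hypothetical annotation, 1 = important
pred = [0, 1, 1, 1, 0, 0, 0, 0]    # hypothetical model output
print(f"{frame_accuracy(pred, truth):.2%}")  # 75.00%
```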

- The Brackish Dataset contains 89 videos, provided with annotations in the AAU Bounding Box, YOLO Darknet, and MS COCO formats. The animals are annotated in six coarse categories: big fish, small fish, crab, shrimp, jellyfish, and starfish.
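Because the annotations are available in YOLO Darknet format, a short parser shows how to read them. In that format each line of a label file is `class_id x_center y_center width height`, with the coordinates normalized by the image width and height. The sketch below converts such a line into pixel corner coordinates. The `CATEGORIES` order simply follows the list above and is an assumption to check against the class-name file distributed with the dataset; the image size in the example is arbitrary.

```python
from typing import List, NamedTuple

# Hypothetical index-to-name mapping that follows the order listed above;
# check it against the class-name file shipped with the dataset.
CATEGORIES = ["Big fish", "Small fish", "Crab", "Shrimp", "Jellyfish", "Starfish"]


class Box(NamedTuple):
    category: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def parse_yolo_line(line: str, img_w: int, img_h: int) -> Box:
    """Convert 'class x_center y_center width height' (normalized) to pixel corners."""
    class_id, xc, yc, w, h = line.split()
    cx, bw = float(xc) * img_w, float(w) * img_w   # center and width in pixels
    cy, bh = float(yc) * img_h, float(h) * img_h   # center and height in pixels
    return Box(CATEGORIES[int(class_id)], cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2)


def parse_yolo_file(text: str, img_w: int, img_h: int) -> List[Box]:
    """Parse every non-empty line of a YOLO Darknet label file."""
    return [parse_yolo_line(row, img_w, img_h) for row in text.splitlines() if row.strip()]


box = parse_yolo_line("2 0.5 0.5 0.25 0.5", img_w=1920, img_h=1080)
print(box)  # Box(category='Crab', x_min=720.0, y_min=270.0, x_max=1200.0, y_max=810.0)
```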

- Paper: Detection of Marine Animals in a New Underwater Dataset with Varying Visibility

- Data: The Brackish Dataset