A vocoder that converts audio to a mel spectrogram and back to audio with WaveGlow, running on the GPU.

Most code is from Tacotron2 and WaveGlow.

pip install waveglow-vocoder

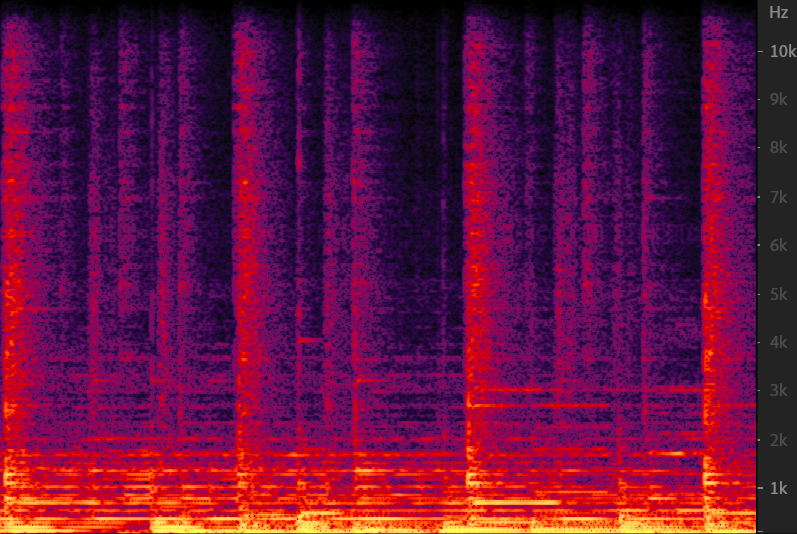

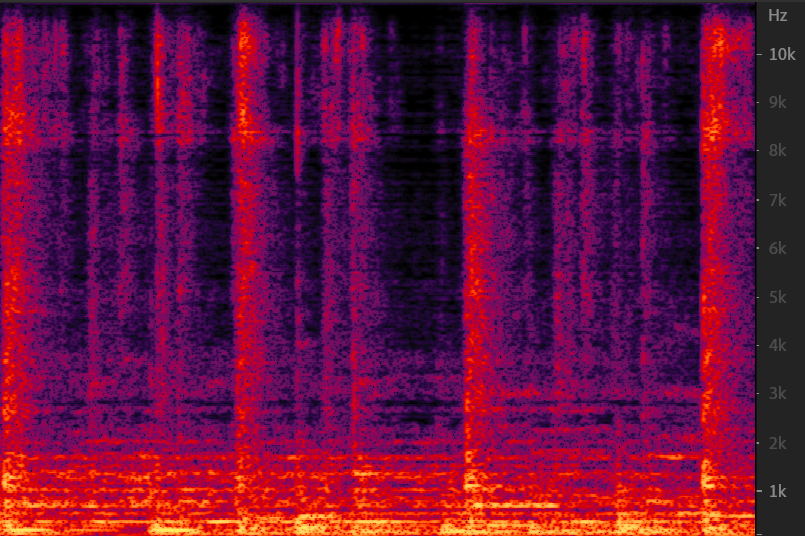

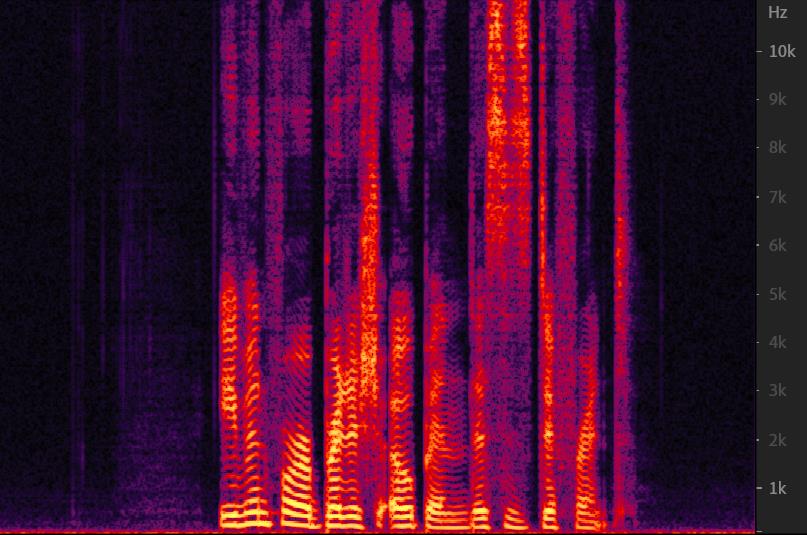

| Original | Vocoded |
|---|---|
| original music | vocoded music |
| original speech | vocoded speech |

Load a WAV file as a torch tensor on the GPU.

import torch

import librosa

y,sr = librosa.load(librosa.util.example_audio_file(), sr=22050, mono=True, duration=10, offset=30)

y_tensor = torch.from_numpy(y).to(device='cuda', dtype=torch.float32)

Apply the mel transform; this runs on the GPU if available.

from waveglow_vocoder import WaveGlowVocoder

WV = WaveGlowVocoder()

mel = WV.wav2mel(y_tensor)

Decode it with WaveGlow.

NOTE:

Because the hyperparameters of the pre-trained model are aligned with Tacotron2, you may get pure noise if the mel spectrogram comes from a function other than `wav2mel` (an alias for `TacotronSTFT.mel_spectrogram`).

Support for mel spectrograms from librosa and torchaudio is under development.
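To make the note above concrete, here is a minimal sketch that compares the settings of another mel pipeline against the Tacotron2 defaults. The values in `TACOTRON2_MEL_PARAMS` are, to my understanding, the defaults in NVIDIA's reference Tacotron2 and WaveGlow configs; verify them against the config of the model you actually load. `mismatched_params` and `expected_frames` are hypothetical helpers, not part of this package, and the frame-count formula assumes a centred STFT with half a window of padding on each side.

```python
# Assumed Tacotron2 / WaveGlow default STFT settings (check your model's config).
TACOTRON2_MEL_PARAMS = {
    "sampling_rate": 22050,
    "filter_length": 1024,
    "hop_length": 256,
    "win_length": 1024,
    "n_mel_channels": 80,
    "mel_fmin": 0.0,
    "mel_fmax": 8000.0,
}


def mismatched_params(candidate):
    """Return {name: (expected, got)} for every setting that differs."""
    return {
        name: (expected, candidate.get(name))
        for name, expected in TACOTRON2_MEL_PARAMS.items()
        if candidate.get(name) != expected
    }


def expected_frames(n_samples, hop_length=256):
    """Frame count for a centred STFT (assumption): one frame per hop, plus one."""
    return n_samples // hop_length + 1


# Hypothetical settings from a different mel pipeline, for illustration only.
other = dict(TACOTRON2_MEL_PARAMS, filter_length=2048, hop_length=512, n_mel_channels=128)

print(mismatched_params(other))     # lists the three settings that differ
print(expected_frames(10 * 22050))  # frames for a 10 second clip at 22050 Hz
```

If `mismatched_params` returns anything other than an empty dict, the spectrogram is unlikely to be decodable by the pre-trained model.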

wav = WV.mel2wav(mel)

The vocoder downloads the pre-trained model from PyTorch Hub the first time it is initialized.

You can also download the latest model from WaveGlow, or train one on your own data, and pass its path to the vocoder.

config_path = "your_config_of_model_training.json"

waveglow_path="your_model_path.pt"

WV = WaveGlowVocoder(waveglow_path=waveglow_path, config_path=config_path)

Then use it as usual.
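When passing custom paths, it can help to fail early with a clear message rather than deep inside model loading. The sketch below is one way to do that with the standard library only. `check_model_files` is a hypothetical helper, not part of this package, and it assumes the training config is a JSON file, as the `.json` placeholder above suggests.

```python
import json
from pathlib import Path


def check_model_files(config_path, waveglow_path):
    """Hypothetical helper: verify both files exist and return the parsed config."""
    config_file, model_file = Path(config_path), Path(waveglow_path)
    for label, path in (("config", config_file), ("checkpoint", model_file)):
        if not path.is_file():
            raise FileNotFoundError(f"{label} file not found: {path}")
    # Assumes a JSON config; raises json.JSONDecodeError if it is malformed.
    with config_file.open(encoding="utf-8") as fh:
        return json.load(fh)


# Intended use, with the placeholder paths from the example above:
#   config = check_model_files(config_path, waveglow_path)
#   WV = WaveGlowVocoder(waveglow_path=waveglow_path, config_path=config_path)
```

The returned dict can also be inspected to confirm that the model's audio settings match the input you plan to feed it.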

- WaveRNN Vocoder

- MelGAN Vocoder

- Performance

- Support librosa Mel input