Project Roadmap

In this project, we use multiple linear regression to estimate the market values of football players in European teams in the 2018-2019 season. We examine players from European leagues and identify the factors that most and least affect their market value.

Nowadays, FIFA is the most important association governing football federations around the world, and football attracts a huge number of fans. Because of this popularity, many football associations earn large incomes, so football clubs effectively operate as companies. These companies must make important decisions about which football players to sign. Player transfers have a significant impact on football clubs, cities, countries, and governments, and the market value of a player provides the basis for the budget of each transfer. The market value of a player therefore matters for the sales and profits of clubs, which pay close attention to analyzing and predicting player value. Our question is: “What kind of factors influence the market value of a player?”

- What kind of factors influence the market value of a player?

- How much does the market value of the players increase on average?

- Player performance and club rank can have a significant impact on player value.

Player personal information, player performance and club market value can have a significant impact on player value.

Develop an optimal model using linear regression to predict the market value of the players.

- Target Audience

- Sports statistics fans

- Enthusiasts of football

- Data Source

Name: “Football Data from Transfermarkt”

Description

Clean, structured and automatically-updated football data from Transfermarkt, including:

- 40,000+ games from many seasons on all major competitions

- 300+ clubs from those competitions

- 20,000+ players from those clubs

- 900,000+ player appearance records from all games

- Data Source

Name: “Top 250 Football transfers from 2000 to 2018”

Description

This dataset covers the 250 most expensive football transfers from season 2000-2001 until 2018-2019. It has 4,700 rows and 10 columns, which contain the following information: the name of each football player, the selling team and league, the buying team and league, the estimated market value of the player, the actual transfer fee, the position of the player, and the season in which the transfer took place.

Source: Kaggle: https://www.kaggle.com/vardan95ghazaryan/top-250-football-transfers-from-2000-to-2018

- Data Analysis

This dataset aims to present up-to-date football data, down to the level of individual player performance.

We selected 10 independent variables and one dependent variable from the player, game and club data of European football for the 2017 and 2018 seasons. The independent variables fall into three categories: physical data of the players, performance data of the players, and the market value of the players' clubs. The dependent variable is the players' market value. After importing the raw data into Python for data cleaning, we obtained a dataset of 348 rows and 11 columns.

- Club Market Value

- Red Cards

- Assists

- Age

- Hours Played

- Goals

- Transfer Fee

- Games

- Yellow Cards

- Player Position

We convert the categorical variable "player_position" to integer codes:

- Player position: Attack, Encode position: 0

- Player position: Defender, Encode position: 3

- Player position: Goalkeeper, Encode position: 2

- Player position: Midfield, Encode position: 1
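As a minimal sketch, assuming the data sits in a pandas DataFrame, the integer mapping listed above can be applied with Series.map. The sample rows and the column name encoded_position are made up for illustration.

```python
import pandas as pd

# Integer codes for each position, exactly as listed above
POSITION_CODES = {"Attack": 0, "Midfield": 1, "Goalkeeper": 2, "Defender": 3}

# Hypothetical sample rows; the real data comes from the cleaned player table
players = pd.DataFrame({"player_position": ["Attack", "Defender", "Goalkeeper", "Midfield"]})

# Series.map replaces each label with its code (unknown labels would become NaN)
players["encoded_position"] = players["player_position"].map(POSITION_CODES)
print(players)
```

Note that integer codes impose an artificial order on the positions, which a linear model will treat as meaningful; this is one reason to prefer one-hot encoding for a regression.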

To obtain the target values for our model, we needed to join multiple datasets containing information about the players' clubs, games and player market values. We joined these datasets in SQL according to our ERD (entity relationship diagram).
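The kind of join described above can be sketched with Python's built-in sqlite3 module standing in for PostgreSQL. The tables clubs, players and appearances and their columns are hypothetical simplifications, not the project's actual schema.

```python
import sqlite3

# In-memory SQLite database standing in for the project's PostgreSQL database.
# Table and column names are hypothetical and simplified.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.executescript("""
CREATE TABLE clubs (club_id INTEGER PRIMARY KEY, club_market_value REAL);
CREATE TABLE players (player_id INTEGER PRIMARY KEY, player_name TEXT,
                      club_id INTEGER REFERENCES clubs(club_id), market_value REAL);
CREATE TABLE appearances (game_id INTEGER, player_id INTEGER REFERENCES players(player_id),
                          goals INTEGER, assists INTEGER, minutes_played INTEGER);
INSERT INTO clubs VALUES (1, 500.0), (2, 120.0);
INSERT INTO players VALUES (10, 'Player A', 1, 40.0), (11, 'Player B', 2, 8.0);
INSERT INTO appearances VALUES (100, 10, 1, 0, 90), (101, 10, 0, 1, 75), (100, 11, 0, 0, 90);
""")

# One output row per appearance: the join repeats player and club data for every game
rows = cur.execute("""
SELECT p.player_name, a.game_id, a.goals, a.assists, a.minutes_played,
       p.market_value, c.club_market_value
FROM appearances AS a
JOIN players AS p ON p.player_id = a.player_id
JOIN clubs AS c ON c.club_id = p.club_id
ORDER BY p.player_name, a.game_id
""").fetchall()
conn.close()

for row in rows:
    print(row)
```

Because every appearance produces its own row, a player who played many games appears many times, which is why the joined table grows large.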

After joining the datasets, we obtained a table with 19,189 rows in which each player appears multiple times, once per appearance and game. To reduce the number of rows, we computed the performance of each player with the following operations:

- COUNT the number of games

- SUM the number of goals

- SUM the number of assists

- SUM the number of minutes_played and transform them into hours_played

- SUM of yellow_cards

- SUM of red_cards
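The same aggregation can be sketched in pandas with a named groupby aggregation (in SQL it would be a GROUP BY over the joined table). The sample appearance rows are hypothetical.

```python
import pandas as pd

# Hypothetical joined table: one row per player appearance
appearances = pd.DataFrame({
    "player_name": ["Player A", "Player A", "Player B"],
    "game_id": [100, 101, 100],
    "goals": [1, 0, 0],
    "assists": [0, 1, 0],
    "minutes_played": [90, 75, 90],
    "yellow_cards": [1, 0, 0],
    "red_cards": [0, 0, 1],
})

# Collapse appearances into one row of performance totals per player
performance = appearances.groupby("player_name").agg(
    games=("game_id", "count"),
    goals=("goals", "sum"),
    assists=("assists", "sum"),
    minutes_played=("minutes_played", "sum"),
    yellow_cards=("yellow_cards", "sum"),
    red_cards=("red_cards", "sum"),
)

# Convert total minutes to hours and drop the minutes column
performance["hours_played"] = performance["minutes_played"] / 60
performance = performance.drop(columns="minutes_played")
print(performance)
```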

After computing the performance of each player across all games of every season from 2014 to 2018, we obtained a database of 710 unique players across all available seasons. To make the data available in the cloud and easier to access, we connected our final PostgreSQL database to Heroku, as shown in the images below:

[Images: Heroku Connection | Database Credentials]

Because the data is loaded into our Heroku database, we can access it with the database credentials, including the password. The data can then be filtered with an SQL query, as shown in the code below:

```python
from getpass import getpass

import pandas as pd
import psycopg2

# Prompt for the password so it is not stored in the notebook
password = getpass('Enter database password')

connection = psycopg2.connect(user="azcaqpdjrciaow",
                              password=password,
                              host="ec2-34-226-18-183.compute-1.amazonaws.com",
                              port="5432",
                              database="d7m85rf8c5rhv5")
cursor = connection.cursor()

# Keep only the two most recent seasons
postgreSQL_select_Query = "select * from player_market_values where season = 2018 or season = 2017"
cursor.execute(postgreSQL_select_Query)
print("Loading data from Heroku")
data = cursor.fetchall()

# These names must follow the column order of the player_market_values table
df = pd.DataFrame(data, columns=['player_id', 'player_name', 'age', 'club_id',
                                 'team_from', 'league_from', 'team_to', 'country_of_birth',
                                 'country_of_citizenship', 'player_position', 'games', 'goals',
                                 'assists', 'hours_played', 'yellow_cards', 'red_cards',
                                 'transfer_fee', 'market_value', 'club_market_value', 'season'])

cursor.close()
connection.close()

# Drop identifiers and descriptive columns that are not model features
Football_df = df.drop(columns=['player_id', 'player_name', 'club_id', 'team_from', 'league_from',
                               'team_to', 'country_of_birth', 'country_of_citizenship', 'season'])
Football_df  # displays the table in a notebook
```

The data is filtered to the most recent seasons, 2017 and 2018, and the following columns are kept:

- age

- player_position

- games

- goals

- assists

- hours_played

- yellow_cards

- red_cards

- transfer_fee

- market_value

- club_market_value


We used multiple linear regression because the model not only lets us make predictions about the data but also helps us identify the variables that have a significant effect on the dependent variable (market_value), which makes it a suitable and reasonable choice here.
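To illustrate what fitting a multiple linear regression involves, here is a minimal ordinary least squares sketch in NumPy on synthetic, noise-free data. The two features are hypothetical; with real data, coefficients are only comparable across features if the features are on similar scales, and significance testing would need additional statistics not shown here.

```python
import numpy as np

# Synthetic, noise-free data with two hypothetical features; not project data
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 2))              # e.g. columns: goals, assists (illustrative)
y = 2.0 + 3.0 * X[:, 0] - 1.0 * X[:, 1]   # true intercept 2, slopes 3 and -1

# Prepend a column of ones so the first coefficient is the intercept
X_design = np.column_stack([np.ones(len(X)), X])

# Ordinary least squares: minimise ||X_design @ beta - y||^2
beta, *_ = np.linalg.lstsq(X_design, y, rcond=None)
intercept, slopes = beta[0], beta[1:]
print("intercept:", round(intercept, 3), "slopes:", np.round(slopes, 3))
```

The sign and size of each slope show how the target moves when that feature increases by one unit while the others stay fixed.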

PyCaret is an open-source, low-code machine learning library in Python that automates machine learning workflows. It is an end-to-end machine learning and model management tool that speeds up the experiment cycle and makes you more productive.

Extra Trees (Extremely Randomized Trees) is an ensemble machine learning algorithm that combines the predictions of many decision trees. It is related to the widely used random forest algorithm and can often perform as well as or better than it, even though it uses a simpler procedure to build the individual trees. For regression, the ensemble's prediction is the average of the predictions of its decision trees.
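The averaging step can be checked directly with scikit-learn's ExtraTreesRegressor, which draws split thresholds at random and by default trains every tree on the full sample (bootstrap=False). This sketch uses synthetic data, not the project's dataset.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesRegressor

# Synthetic regression data; not the project's dataset
rng = np.random.default_rng(42)
X = rng.uniform(size=(200, 3))
y = 10 * X[:, 0] + 5 * X[:, 1] ** 2 + rng.normal(scale=0.1, size=200)

model = ExtraTreesRegressor(n_estimators=50, random_state=0).fit(X, y)

# Each fitted tree lives in model.estimators_; average their predictions by hand
per_tree = np.stack([tree.predict(X[:5]) for tree in model.estimators_])
manual_average = per_tree.mean(axis=0)

# The ensemble's prediction matches that average (up to floating-point rounding)
print(np.allclose(model.predict(X[:5]), manual_average))
```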

- We can see that R-squared is 0.691, which means that the regression equation explains 69.1% of the variation in player value.
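R-squared is 1 minus the ratio of the residual sum of squares to the total sum of squares around the mean, so an R-squared of 0.691 means the residual variation is about 30.9% of the total. A minimal sketch of the calculation, with made-up values:

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # unexplained variation
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)             # total variation around the mean
    return 1 - ss_res / ss_tot

# Made-up market values (in millions) and predictions, for illustration only
actual = [10.0, 20.0, 30.0, 40.0]
predicted = [12.0, 18.0, 33.0, 37.0]
print(round(r_squared(actual, predicted), 3))  # 0.948
```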

After defining the variables for our model, we needed to encode the categorical variables in our data. We used OneHotEncoder from sklearn to encode the player_position variable, which gives each position a binary code, as shown below:

- Attack: 1000 (124 players)

- Defender: 0100 (106 players)

- Goalkeeper: 0010 (101 players)

- Midfield: 0001 (only 17 players)
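A minimal sketch of this encoding with scikit-learn's OneHotEncoder, on a few hypothetical rows. The encoder sorts the categories it finds, which is why Attack gets the first column and Midfield the last. The default output is a sparse matrix, so it is converted with toarray().

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# A few hypothetical rows of the player_position column (2-D input is required)
positions = np.array([["Attack"], ["Defender"], ["Goalkeeper"], ["Midfield"], ["Attack"]])

encoder = OneHotEncoder()  # categories are learned from the data and sorted
onehot = encoder.fit_transform(positions).toarray().astype(int)

print(encoder.categories_[0])
for label, row in zip(positions[:, 0], onehot):
    print(label, "".join(str(v) for v in row))
```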

Following this process, we created a correlation map to determine which variables are most strongly correlated with our target value (market_value).

The variables most strongly related to the players' market value are:

- transfer_fee

- club_market_value

- assists

- goals

- games

- hours_played
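The numbers behind a correlation map can be computed with pandas' DataFrame.corr and ranked by absolute value. This sketch uses a small synthetic stand-in for Football_df with only three hypothetical features, where market_value is generated to depend mostly on transfer_fee.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for Football_df; values are generated, not real player data
rng = np.random.default_rng(1)
n = 100
df = pd.DataFrame({
    "transfer_fee": rng.uniform(0, 100, n),
    "goals": rng.integers(0, 30, n),
    "red_cards": rng.integers(0, 3, n),
})
# Market value made to depend mostly on transfer_fee, a little on goals
df["market_value"] = 0.8 * df["transfer_fee"] + 0.5 * df["goals"] + rng.normal(0, 5, n)

# Pearson correlation of every feature with the target, strongest first
corr_with_target = (
    df.corr()["market_value"]
    .drop("market_value")
    .sort_values(key=abs, ascending=False)
)
print(corr_with_target)
```

Correlation only measures linear association between pairs of variables; it does not by itself show how much each variable affects the target once the others are accounted for.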

Afterwards, we used PyCaret to train multiple regression models on our data and compare their performance, as shown in the image below:

As we can see, the highest ranked model is the Orthogonal Matching Pursuit regression model, with a mean R2 of 0.6747, an RMSLE of 0.6583 and a MAPE of 0.7881. We then tuned this model to improve its performance, as shown below:

The tuned model obtained an R2 ranging from 0.4195 to 0.8618.

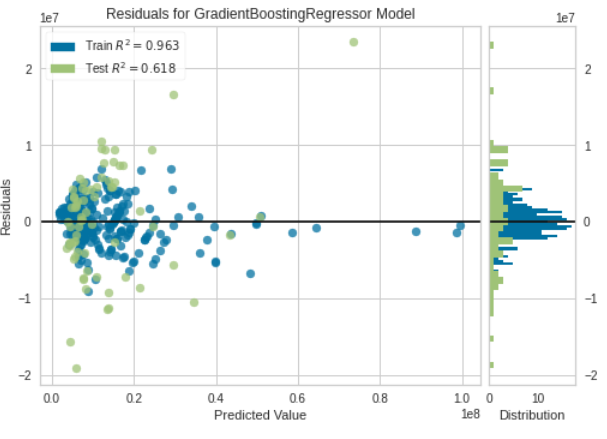
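For reference, the two error metrics quoted above can be sketched in plain Python. This assumes MAPE is reported as a fraction (so 0.7881 would mean an average error of roughly 79% of the true value); the sample values are made up.

```python
import math

def rmsle(y_true, y_pred):
    """Root mean squared logarithmic error (all values must be greater than -1)."""
    sq = [(math.log1p(p) - math.log1p(t)) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(sq) / len(sq))

def mape(y_true, y_pred):
    """Mean absolute percentage error, returned as a fraction (0.10 means 10%)."""
    errs = [abs(t - p) / abs(t) for t, p in zip(y_true, y_pred)]
    return sum(errs) / len(errs)

# Made-up market values and predictions, for illustration only
actual = [10.0, 20.0, 40.0]
predicted = [12.0, 15.0, 40.0]
print(round(mape(actual, predicted), 4))
print(round(rmsle(actual, predicted), 4))
```

RMSLE compares values on a log scale, so it penalizes relative rather than absolute errors, which suits market values that span several orders of magnitude.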

Other models were tested and compared; below are the tables for the Gradient Boosting Regressor and Linear Regression models.

[Tables (images): Gradient Boosting Regressor | Linear Regression]

[Figures (images): Gradient Boosting Regressor | Linear Regression | Orthogonal Matching Pursuit]

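PyCaret ranks candidate models by cross-validated scores. The idea can be reproduced in plain scikit-learn, as in this sketch, which is not PyCaret's code. The data is synthetic, shaped like the cleaned dataset (348 rows, 10 features), and all hyperparameters are library defaults.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.linear_model import LinearRegression, OrthogonalMatchingPursuit
from sklearn.model_selection import KFold, cross_val_score

# Synthetic data with 10 features, matching the number of predictors used here
X, y = make_regression(n_samples=348, n_features=10, n_informative=6,
                       noise=20.0, random_state=0)

models = {
    "Orthogonal Matching Pursuit": OrthogonalMatchingPursuit(),
    "Linear Regression": LinearRegression(),
    "Gradient Boosting Regressor": GradientBoostingRegressor(random_state=0),
}

# 10-fold cross-validation, scoring each model by R2 on the held-out folds
cv = KFold(n_splits=10, shuffle=True, random_state=0)
results = {}
for name, model in models.items():
    scores = cross_val_score(model, X, y, cv=cv, scoring="r2")
    results[name] = scores
    print(f"{name}: mean R2 = {scores.mean():.4f} "
          f"(min {scores.min():.4f}, max {scores.max():.4f})")

# Rank models by mean R2, best first
ranking = sorted(results, key=lambda name: results[name].mean(), reverse=True)
print("Best model:", ranking[0])
```

Reporting the minimum and maximum fold scores next to the mean shows how much a model's R2 varies from one data split to another.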

Possible next steps:

- Incorporate new data

- Divide the data by clusters (e.g. player position)

- Run the model for a more recent season and compare the results.

This week, each team member chose a shape (square, circle, triangle, or X), and each shape was responsible for a specific task. We chose our shapes according to our strengths, with the help of the documentation on Canvas.

We created a shared Deepnote notebook where we can all edit code at the same time, and this is how we started to work: https://deepnote.com/project/Untitled-Python-Project-eAGO52SQT5SsuFcbuPwvtw/%2FUntitled.ipynb. We also use other tools, such as WhatsApp, to keep communication smooth.

During this second segment, we continue to work together to draw a story out of our data. We perform more than one analysis on our data during this segment, and these analyses help bring together the larger picture and strengthen the final project.

In this third segment, we begin to really tie things together. At this point, the machine learning model and database are plugged into the rest of the project, and we see results. We answered the question we set out to answer.