An online code compiler supporting 11 languages (Java, Kotlin, C, C++, C#, Golang, Python, Scala, Ruby, Rust and Haskell) for competitive programming and coding interviews. This tool executes your code remotely, using docker containers to isolate the execution environments.

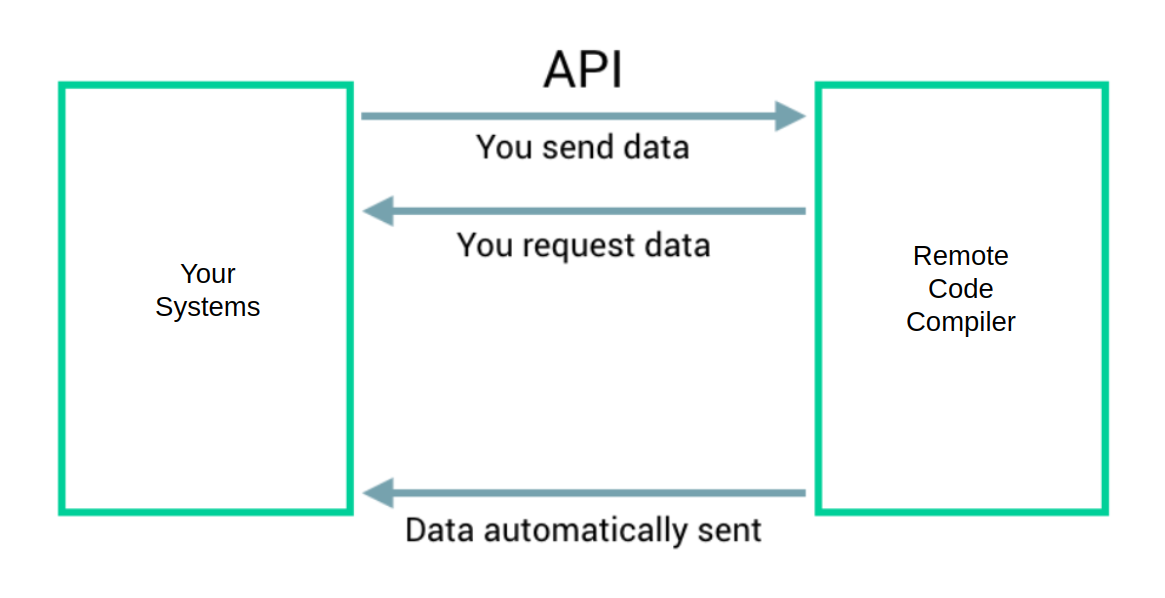

Supports REST calls (long polling and push notifications), Apache Kafka and Rabbit MQ messages.

Example of an input

{

"testCases": {

"test1": {

"input": "<YOUR_INPUT>",

"expectedOutput": "<YOUR_EXPECTED_OUTPUT>"

},

"test2": {

"input": "<YOUR_INPUT>",

"expectedOutput": "<YOUR_EXPECTED_OUTPUT>"

},

...

},

"sourceCode": "<YOUR_SOURCE_CODE>",

"language": "JAVA",

"timeLimit": 15,

"memoryLimit": 500

}

Example of an output

The compiler cleans up your output, which means having extra spaces or line breaks does not affect the status of the response.
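As a rough illustration of what this clean-up means for the verdict, the sketch below treats two outputs as equal once runs of whitespace are collapsed. The function names are hypothetical, and collapsing line breaks into single spaces is an assumption; the compiler's actual normalization rules may differ.

```python
def normalize(text: str) -> str:
    """Collapse every run of whitespace (spaces, tabs, line breaks) into one space."""
    # Assumption: line breaks and spaces are interchangeable after clean-up.
    return " ".join(text.split())


def outputs_match(actual: str, expected: str) -> bool:
    """Compare two program outputs while ignoring extra whitespace."""
    return normalize(actual) == normalize(expected)


if __name__ == "__main__":
    # Extra spaces and a trailing line break do not change the result.
    print(outputs_match("0 1 2  3 4 \n", "0 1 2 3 4"))
    # A different token still counts as a mismatch.
    print(outputs_match("9 8 7 1", "9 8 6 1"))
```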

{

"verdict": "Accepted",

"statusCode": 100,

"error": "",

"testCasesResult": {

"test1": {

"verdict": "Accepted",

"verdictStatusCode": 100,

"output": "0 1 2 3 4 5 6 7 8 9",

"error": "",

"expectedOutput": "0 1 2 3 4 5 6 7 8 9",

"executionDuration": 175

},

"test2": {

"verdict": "Accepted",

"verdictStatusCode": 100,

"output": "9 8 7 1",

"error": "" ,

"expectedOutput": "9 8 7 1",

"executionDuration": 273

},

...

},

"compilationDuration": 328,

"averageExecutionDuration": 183,

"timeLimit": 1500,

"memoryLimit": 500,

"language": "JAVA",

"dateTime": "2022-01-28T23:32:02.843465"

}

To run this project you need a docker engine running on your machine.

1- Build a docker image:

docker image build . -t compiler

2- Create a volume:

docker volume create compiler

3- Build the necessary docker images used by the compiler:

./environment/build.sh

4- Run the container:

docker container run -p 8080:8082 -v /var/run/docker.sock:/var/run/docker.sock -v compiler:/compiler -e DELETE_DOCKER_IMAGE=true -e EXECUTION_MEMORY_MAX=10000 -e EXECUTION_MEMORY_MIN=0 -e EXECUTION_TIME_MAX=15 -e EXECUTION_TIME_MIN=0 -e MAX_REQUESTS=1000 -e MAX_EXECUTION_CPUS=0.2 -e COMPILATION_CONTAINER_VOLUME=compiler -t compiler

- The value of the env variable DELETE_DOCKER_IMAGE is by default set to true, and that means that each docker image is deleted after the execution of the container.

- The value of the env variable EXECUTION_MEMORY_MAX is by default set to 10 000 MB, and represents the maximum value of memory limit that we can pass in the request. EXECUTION_MEMORY_MIN is by default set to 0.

- The value of the env variable EXECUTION_TIME_MAX is by default set to 15 sec, and represents the maximum value of time limit that we can pass in the request. EXECUTION_TIME_MIN is by default set to 0.

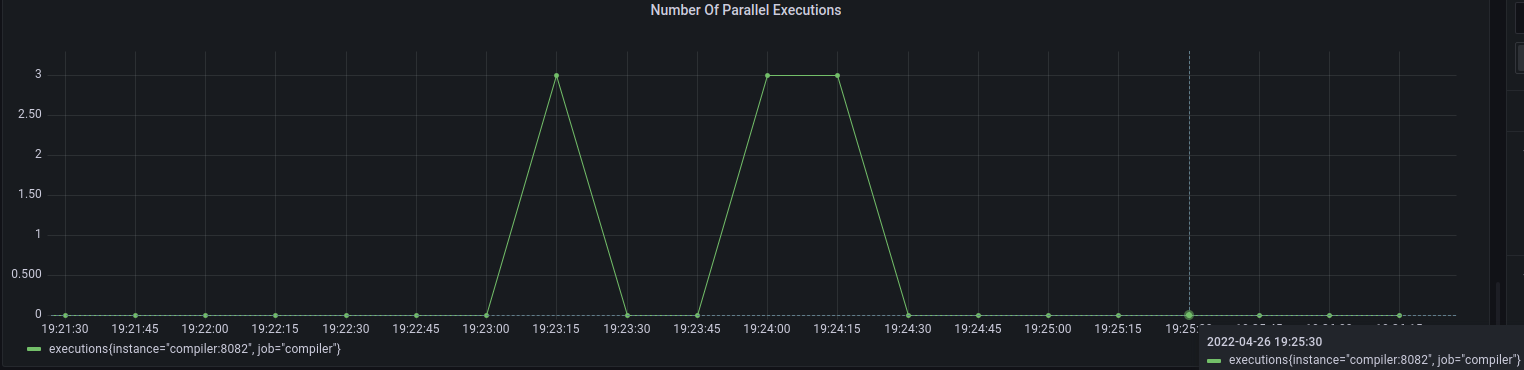

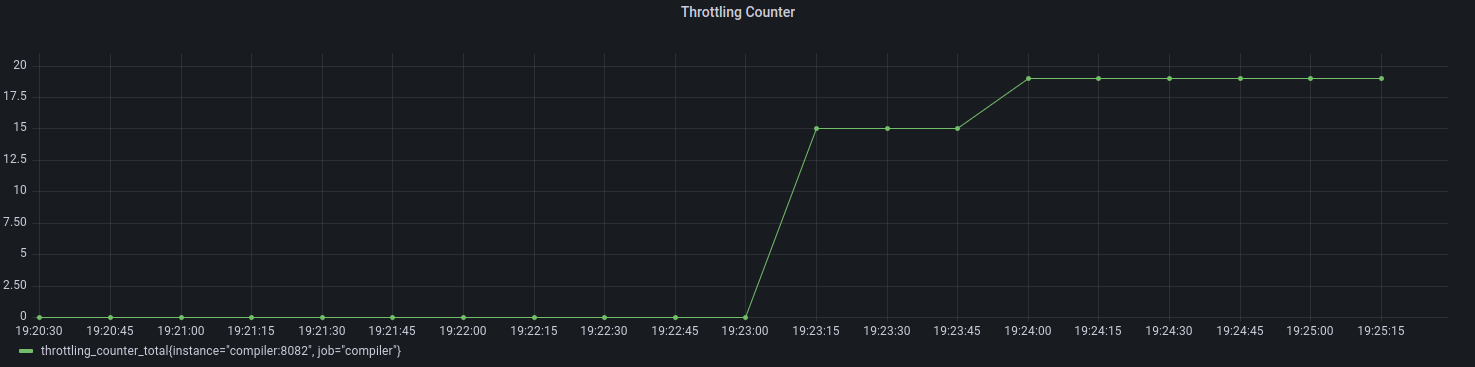

- MAX_REQUESTS represents the number of requests that can be executed in parallel. When this value is reached all incoming requests will be throttled, and the user will get 429 HTTP status code (there will be a retry in queue mode).

- MAX_EXECUTION_CPUS represents the maximum number of cpus to use for each execution (by default the maximum available cpus). If this value is set, then all requests will be throttled when the service reaches the maximum.

- COMPILATION_CONTAINER_VOLUME must be the same as the volume created in step 2.

- MAX_TEST_CASES represents the maximum number of test cases a single request can contain (by default it is set to 20).
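To make the role of these limits concrete, here is a minimal sketch of how a client could check a request against them before sending it. The `validate_request` helper is hypothetical and the bounds are assumed to be inclusive; the default values are the ones stated above, and the request shape follows the input example.

```python
# Defaults taken from the variable descriptions above; a deployment may override them.
LIMITS = {
    "EXECUTION_TIME_MIN": 0,
    "EXECUTION_TIME_MAX": 15,
    "EXECUTION_MEMORY_MIN": 0,
    "EXECUTION_MEMORY_MAX": 10000,
    "MAX_TEST_CASES": 20,
}


def validate_request(request: dict, limits: dict = LIMITS) -> list:
    """Return a list of problems; an empty list means the request fits the limits."""
    problems = []
    # Assumption: both bounds are inclusive.
    if not limits["EXECUTION_TIME_MIN"] <= request["timeLimit"] <= limits["EXECUTION_TIME_MAX"]:
        problems.append("timeLimit out of range")
    if not limits["EXECUTION_MEMORY_MIN"] <= request["memoryLimit"] <= limits["EXECUTION_MEMORY_MAX"]:
        problems.append("memoryLimit out of range")
    if len(request["testCases"]) > limits["MAX_TEST_CASES"]:
        problems.append("too many test cases")
    return problems


if __name__ == "__main__":
    request = {
        "testCases": {"test1": {"input": "1 2", "expectedOutput": "3"}},
        "sourceCode": "<YOUR_SOURCE_CODE>",
        "language": "JAVA",
        "timeLimit": 15,
        "memoryLimit": 500,
    }
    print(validate_request(request))                       # fits the defaults
    print(validate_request({**request, "timeLimit": 30}))  # exceeds EXECUTION_TIME_MAX
```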

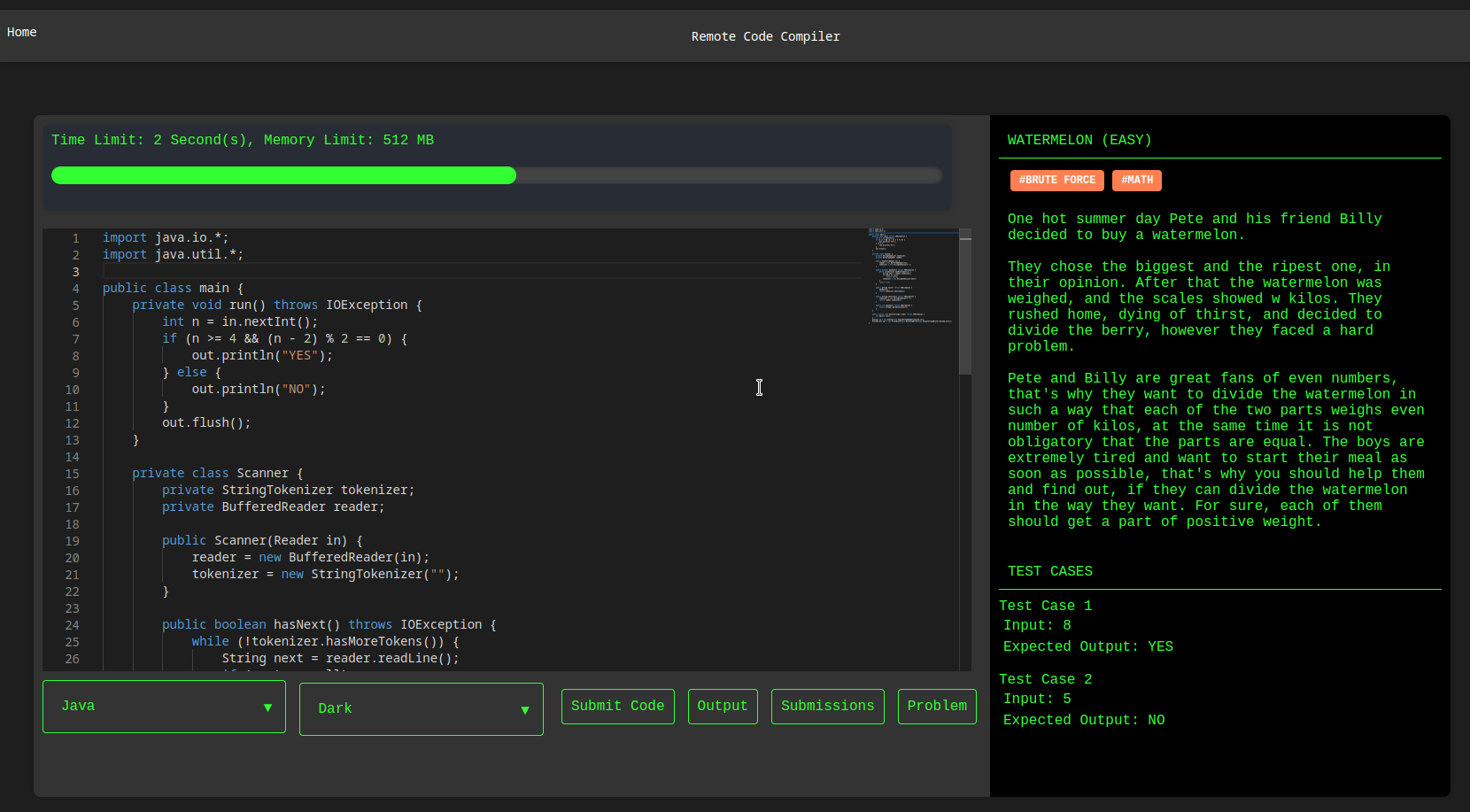

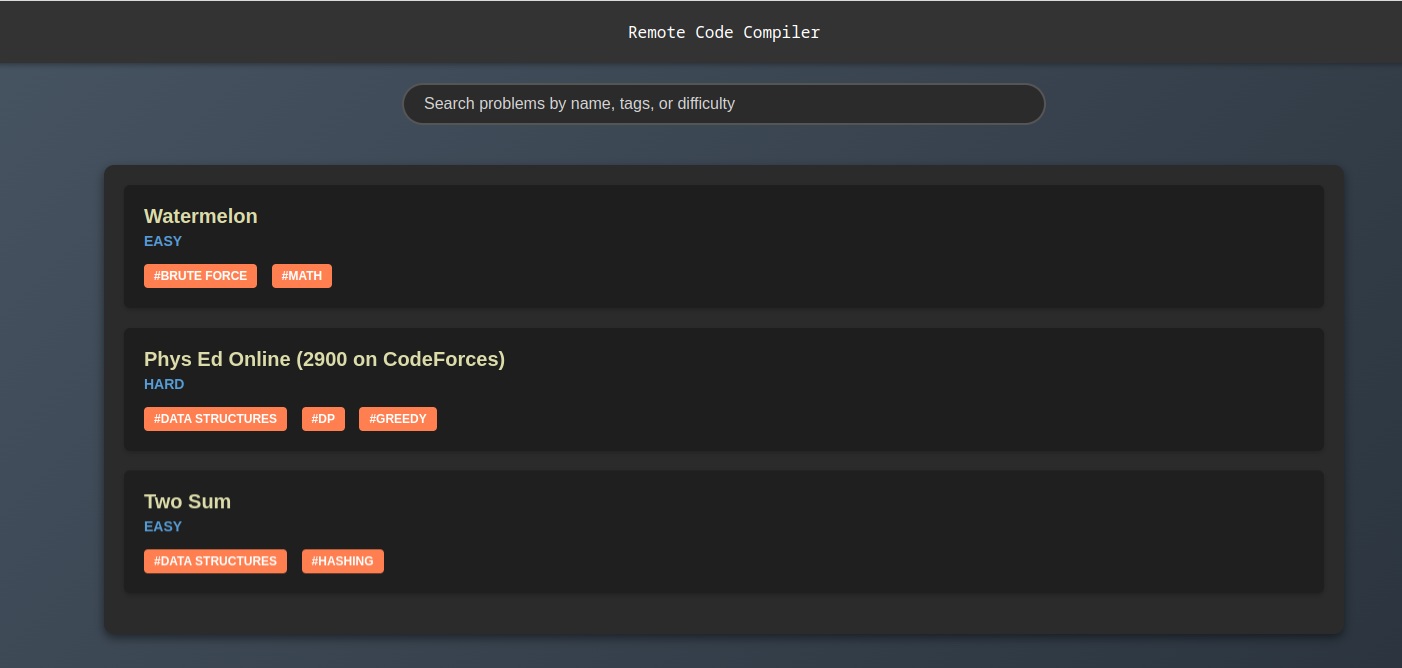

The compiler is equipped with some problems specified in the problems.json file located in the resource folder. These problem sets are automatically loaded upon project startup, granting you the opportunity to explore and test them through the /problems endpoint.

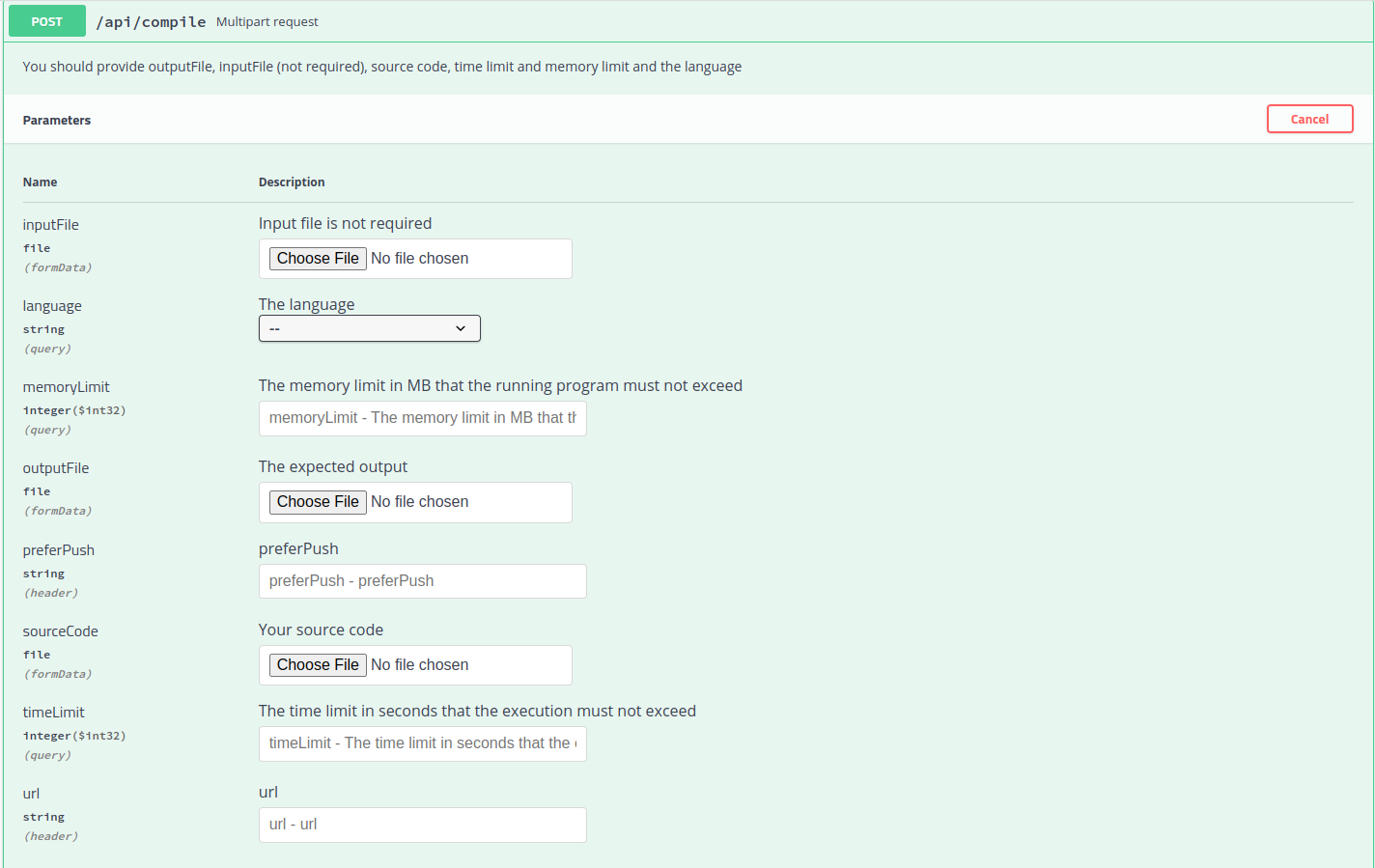

If you want to receive the response later and avoid HTTP timeouts, you can use push notifications. To do so, pass two header values: url (where you want to receive the response) and preferPush (set to prefer-push).

To enable push notifications, set the environment variable ENABLE_PUSH_NOTIFICATION to true.
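A minimal sketch of such a request, built with Python's standard library only. The endpoint path and the callback URL are placeholders (assumptions, not taken from this project); the Swagger page lists the real paths. Only the two header names, url and preferPush, come from the description above.

```python
import json
import urllib.request


def build_push_request(endpoint: str, callback_url: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that asks for a push notification."""
    body = json.dumps(payload).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "url": callback_url,          # where the response should be pushed
        "preferPush": "prefer-push",  # opt in to push notifications
    }
    return urllib.request.Request(endpoint, data=body, headers=headers, method="POST")


if __name__ == "__main__":
    payload = {
        "testCases": {"test1": {"input": "<YOUR_INPUT>", "expectedOutput": "<YOUR_EXPECTED_OUTPUT>"}},
        "sourceCode": "<YOUR_SOURCE_CODE>",
        "language": "JAVA",
        "timeLimit": 15,
        "memoryLimit": 500,
    }
    # Both URLs below are hypothetical placeholders.
    req = build_push_request("http://localhost:8080/compile", "http://localhost:9000/callback", payload)
    print(req.get_method(), req.full_url)
    print(req.header_items())
    # urllib.request.urlopen(req) would actually send it.
```

HTTP header names are case-insensitive, so the capitalization that `urllib` applies when storing them does not matter to the server.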

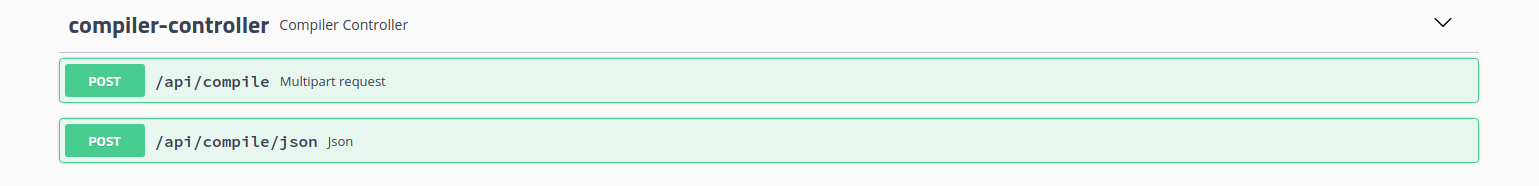

You can also use multipart requests, which are typically used for file uploads and for transferring data of several types in a single request. The only limitation is that you can specify only one test case.

See the documentation in the local folder, a docker-compose is provided.

docker-compose up --build

You can use the provided helm chart to deploy the project on k8s, see the documentation in the k8s folder.

helm install compiler ./k8s/compiler

We provide you with a script to provision an AKS cluster to ease your deployment experience. See the documentation in the provisioning folder.

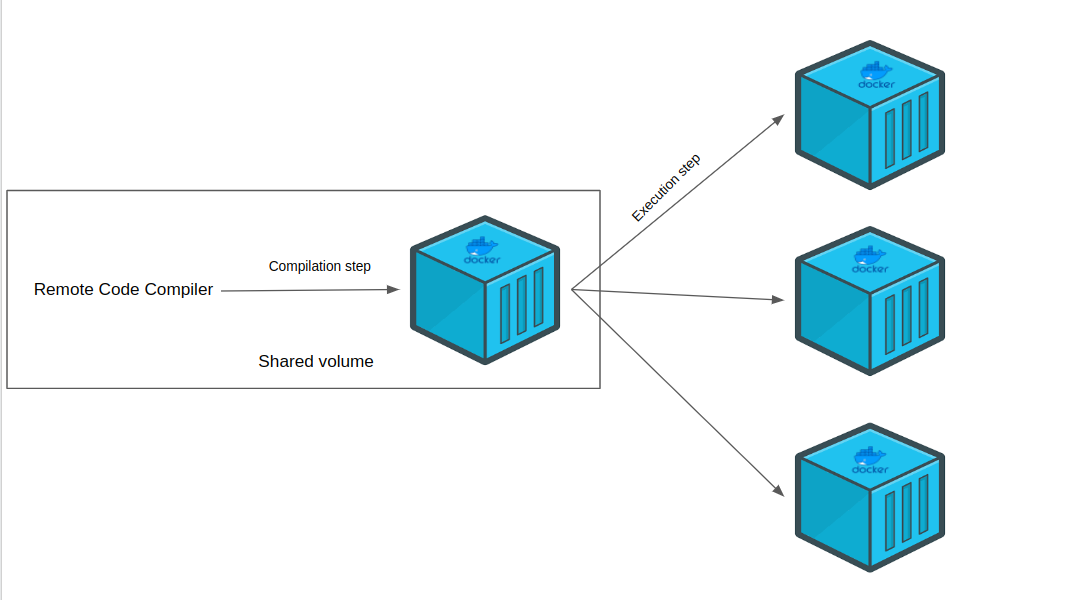

When a request comes in, the compiler creates a container responsible for compiling the given source code (this container shares the same volume as the main application). After a successful compilation, an execution container (with its own execution environment, totally isolated from other containers) is created for each test case.

In the execution step, each container is assigned a set number of CPUs (consistent across all containers, with a recommended value of 0.1 CPUs per execution), as well as limits on memory and execution time. When the container hits either the memory threshold or the maximum time allowed, it is automatically terminated, and a user-facing error message is generated to explain the termination cause.
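The following sketch shows one way such limits can be expressed as `docker run` arguments. It is not the project's implementation: the image name and program command are hypothetical, and enforcing the time limit with the coreutils `timeout` command inside the container is an assumption. The `--cpus`, `--memory` and `--rm` flags are standard Docker CLI options.

```python
def execution_container_args(image: str, cpus: float, memory_mb: int,
                             time_limit_sec: int, command: list) -> list:
    """Build the argument list for one isolated execution container."""
    return [
        "docker", "run", "--rm",     # remove the container once it exits
        f"--cpus={cpus}",            # CPU share for this execution
        f"--memory={memory_mb}m",    # hard memory limit in megabytes
        image,
        # Assumption: the image ships coreutils `timeout` to stop long runs.
        "timeout", f"{time_limit_sec}s", *command,
    ]


if __name__ == "__main__":
    # "execution-image" and "./main" are placeholder names.
    args = execution_container_args("execution-image", 0.1, 500, 15, ["./main"])
    print(" ".join(args))
    # subprocess.run(args) would start the container on a machine with Docker.
```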

For the documentation visit the swagger page at the following url : http://IP:PORT/swagger-ui.html

Here is a list of Verdicts that can be returned by the compiler:

🎉 Accepted

{

"verdict": "Accepted",

"statusCode": 100,

"error": "",

"testCasesResult": {

"test1": {

"verdict": "Accepted",

"verdictStatusCode": 100,

"output": "0 1 2 3 4 5 6 7 8 9",

"error": "",

"expectedOutput": "0 1 2 3 4 5 6 7 8 9",

"executionDuration": 175

},

"test2": {

"verdict": "Accepted",

"verdictStatusCode": 100,

"output": "9 8 7 1",

"error": "" ,

"expectedOutput": "9 8 7 1",

"executionDuration": 273

},

...

},

"compilationDuration": 328,

"averageExecutionDuration": 183,

"timeLimit": 1500,

"memoryLimit": 500,

"language": "JAVA",

"dateTime": "2022-01-28T23:32:02.843465"

}

❌ Wrong Answer

{

"verdict": "Wrong Answer",

"statusCode": 200,

"error": "",

"testCasesResult": {

"test1": {

"verdict": "Accepted",

"verdictStatusCode": 100,

"output": "0 1 2 3 4 5 6 7 8 9",

"error": "",

"expectedOutput": "0 1 2 3 4 5 6 7 8 9",

"executionDuration": 175

},

"test2": {

"verdict": "Wrong Answer",

"verdictStatusCode": 200,

"output": "9 8 7 1",

"error": "" ,

"expectedOutput": "9 8 6 1",

"executionDuration": 273

}

},

"compilationDuration": 328,

"averageExecutionDuration": 183,

"timeLimit": 1500,

"memoryLimit": 500,

"language": "JAVA",

"dateTime": "2022-01-28T23:32:02.843465"

}

💩 Compilation Error

{

"verdict": "Compilation Error",

"statusCode": 300,

"error": "# command-line-arguments\n./main.go:5:10: undefined: i\n./main.go:6:21: undefined: i\n./main.go:7:9: undefined: i\n",

"testCasesResult": {},

"compilationDuration": 118,

"averageExecutionDuration": 0,

"timeLimit": 1500,

"memoryLimit": 500,

"language": "GO",

"dateTime": "2022-01-28T23:32:02.843465"

}

🕜 Time Limit Exceeded

{

"verdict": "Time Limit Exceeded",

"statusCode": 500,

"error": "Execution exceeded 15sec",

"testCasesResult": {

"test1": {

"verdict": "Accepted",

"verdictStatusCode": 100,

"output": "0 1 2 3 4 5 6 7 8 9",

"error": "",

"expectedOutput": "0 1 2 3 4 5 6 7 8 9",

"executionDuration": 175

},

"test2": {

"verdict": "Time Limit Exceeded",

"verdictStatusCode": 500,

"output": "",

"error": "Execution exceeded 15sec" ,

"expectedOutput": "9 8 7 1",

"executionDuration": 1501

}

},

"compilationDuration": 328,

"averageExecutionDuration": 838,

"timeLimit": 1500,

"memoryLimit": 500,

"language": "JAVA",

"dateTime": "2022-01-28T23:32:02.843465"

}

💥 Runtime Error

{

"verdict": "Runtime Error",

"statusCode": 600,

"error": "panic: runtime error: integer divide by zero\n\ngoroutine 1 [running]:\nmain.main()\n\t/app/main.go:11 +0x9b\n",

"testCasesResult": {

"test1": {

"verdict": "Accepted",

"verdictStatusCode": 100,

"output": "0 1 2 3 4 5 6 7 8 9",

"error": "",

"expectedOutput": "0 1 2 3 4 5 6 7 8 9",

"executionDuration": 175

},

"test2": {

"verdict": "Runtime Error",

"verdictStatusCode": 600,

"output": "",

"error": "panic: runtime error: integer divide by zero\n\ngoroutine 1 [running]:\nmain.main()\n\t/app/main.go:11 +0x9b\n" ,

"expectedOutput": "9 8 7 1",

"executionDuration": 0

}

},

"compilationDuration": 328,

"averageExecutionDuration": 175,

"timeLimit": 1500,

"memoryLimit": 500,

"language": "GO",

"dateTime": "2022-01-28T23:32:02.843465"

}

💽 Out Of Memory

{

"verdict": "Out Of Memory",

"statusCode": 400,

"error": "fatal error: runtime: out of memory\n\nruntime stack:\nruntime.throw({0x497d72?, 0x17487800000?})\n\t/usr/local/go/src/runtime/panic.go:992 +0x71\nruntime.sysMap(0xc000400000, 0x7ffccb36b0d0?, 0x7ffccb36b13...",

"testCasesResult": {

"test1": {

"verdict": "Accepted",

"verdictStatusCode": 100,

"output": "0 1 2 3 4 5 6 7 8 9",

"error": "",

"expectedOutput": "0 1 2 3 4 5 6 7 8 9",

"executionDuration": 175

},

"test2": {

"verdict": "Out Of Memory",

"verdictStatusCode": 400,

"output": "",

"error": "fatal error: runtime: out of memory\n\nruntime stack:\nruntime.throw({0x497d72?, 0x17487800000?})\n\t/usr/local/go/src/runtime/panic.go:992 +0x71\nruntime.sysMap(0xc000400000, 0x7ffccb36b0d0?, 0x7ffccb36b13..." ,

"expectedOutput": "9 8 7 1",

"executionDuration": 0

}

},

"compilationDuration": 328,

"averageExecutionDuration": 175,

"timeLimit": 1500,

"memoryLimit": 500,

"language": "GO",

"dateTime": "2022-01-28T23:32:02.843465"

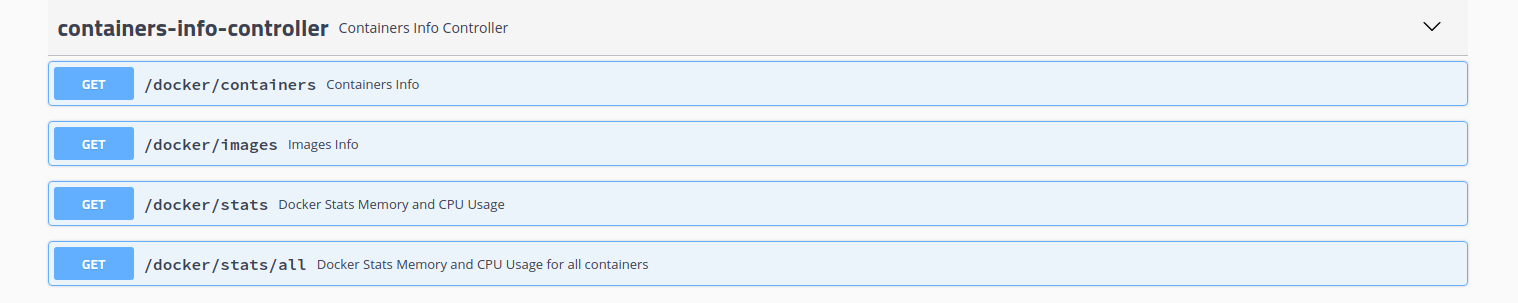

}It is also possible to visualize information about the images and docker containers that are currently running using these endpoints

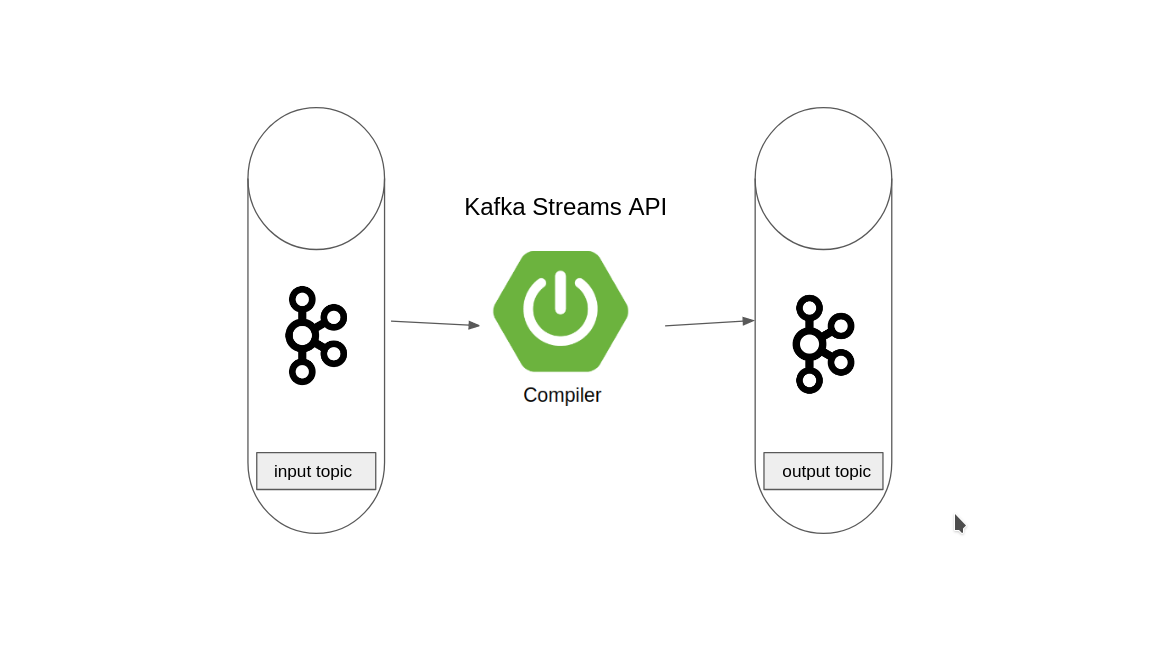

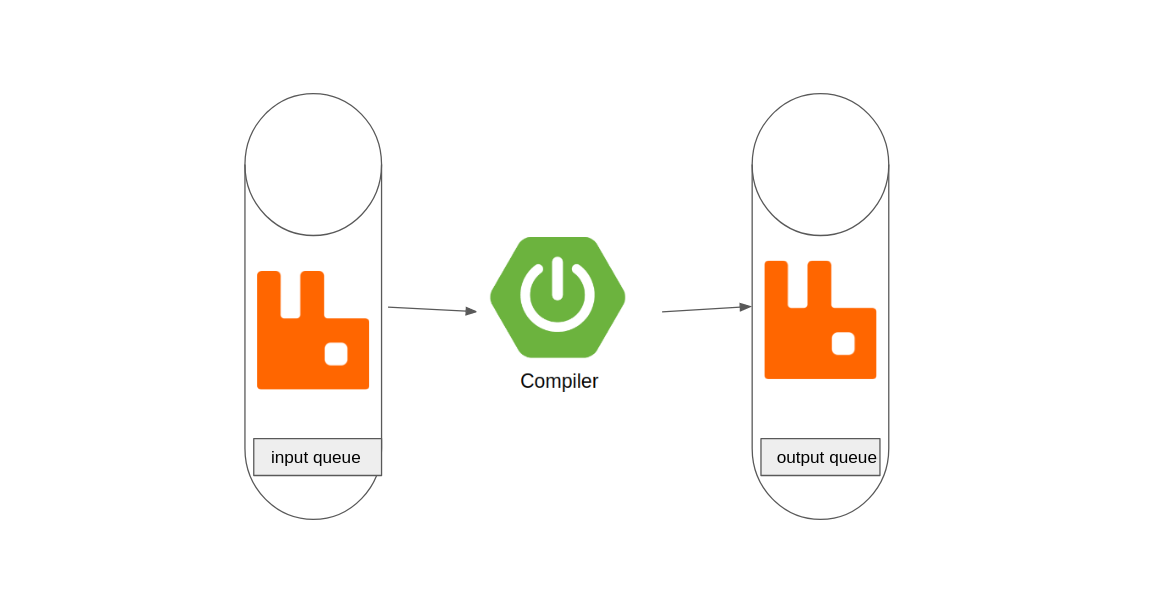

You can use the compiler with an event-driven architecture. To enable Kafka mode, pass the following env variables to the container:

- ENABLE_KAFKA_MODE : True or False

- KAFKA_INPUT_TOPIC : Input topic, json request

- KAFKA_OUTPUT_TOPIC : Output topic, json response

- KAFKA_CONSUMER_GROUP_ID : Consumer group

- KAFKA_HOSTS : List of brokers

- CLUSTER_API_KEY : API key

- CLUSTER_API_SECRET : API Secret

- KAFKA_THROTTLING_DURATION : Throttling duration, by default set to 10000ms (when the number of running docker containers reaches MAX_REQUESTS, the request is not lost; it is retried after this duration)
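The throttling behaviour described for KAFKA_THROTTLING_DURATION can be pictured with the small simulation below. It is only a sketch under stated assumptions: `handle_with_throttling`, its parameters and the retry cap are hypothetical, and no Kafka client is involved. The message is kept and retried after the throttling duration while the service is saturated.

```python
import time


def handle_with_throttling(message, running_containers, max_requests,
                           throttling_ms, process, sleep=time.sleep, max_attempts=5):
    """Process a message, waiting and retrying while the service is saturated.

    running_containers is a callable returning the current number of containers.
    """
    for _ in range(max_attempts):
        if running_containers() < max_requests:
            return process(message)
        # Saturated: keep the message and retry after the throttling duration.
        sleep(throttling_ms / 1000)
    raise RuntimeError("service still saturated after retries")


if __name__ == "__main__":
    counts = iter([2, 2, 1])  # saturated twice, then one slot frees up
    waits = []                # record waits instead of really sleeping
    result = handle_with_throttling(
        "request-1", lambda: next(counts), max_requests=2,
        throttling_ms=10000, process=str.upper, sleep=waits.append,
    )
    print(result, waits)
```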

Note:

Having More partitions => More Parallelism => Better performance

docker container run -p 8080:8082 -v /var/run/docker.sock:/var/run/docker.sock -e DELETE_DOCKER_IMAGE=true -e EXECUTION_MEMORY_MAX=10000 -e EXECUTION_MEMORY_MIN=0 -e EXECUTION_TIME_MAX=15 -e EXECUTION_TIME_MIN=0 -e ENABLE_KAFKA_MODE=true -e KAFKA_INPUT_TOPIC=topic.input -e KAFKA_OUTPUT_TOPIC=topic.output -e KAFKA_CONSUMER_GROUP_ID=compilerId -e KAFKA_HOSTS=ip_broker1,ip_broker2,ip_broker3 -e API_KEY=YOUR_API_KEY -e API_SECRET=YOUR_API_SECRET -t compiler

To enable Rabbit MQ mode, pass the following env variables to the container:

- ENABLE_RABBITMQ_MODE : True or False

- RABBIT_QUEUE_INPUT : Input queue, json request

- RABBIT_QUEUE_OUTPUT : Output queue, json response

- RABBIT_USERNAME : Rabbit MQ username

- RABBIT_PASSWORD : Rabbit MQ password

- RABBIT_HOSTS : List of brokers

- RABBIT_THROTTLING_DURATION : Throttling duration, by default set to 10000ms (when the number of running docker containers reaches MAX_REQUESTS, the request is not lost; it is retried after this duration)

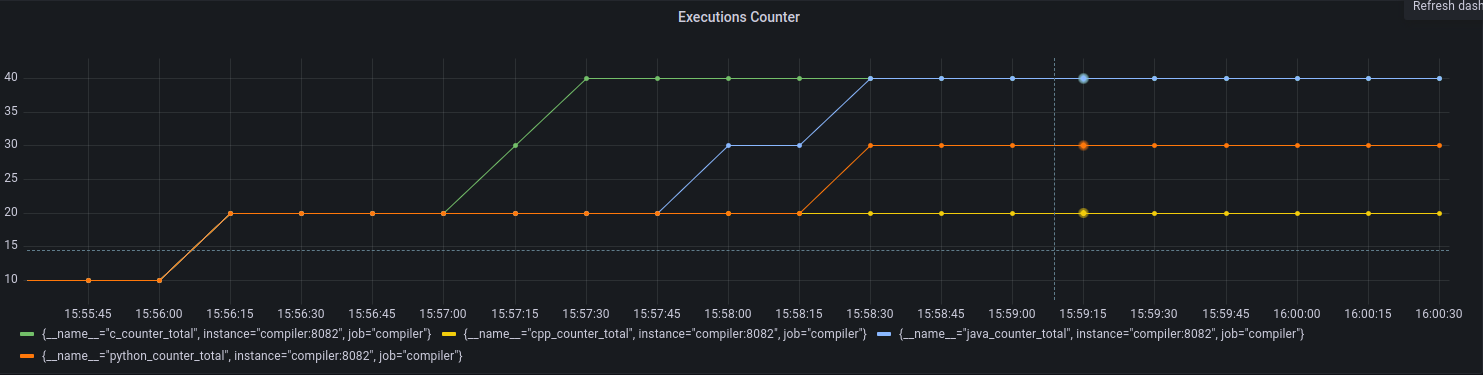

docker container run -p 8080:8082 -v /var/run/docker.sock:/var/run/docker.sock -e DELETE_DOCKER_IMAGE=true -e EXECUTION_MEMORY_MAX=10000 -e EXECUTION_MEMORY_MIN=0 -e EXECUTION_TIME_MAX=15 -e EXECUTION_TIME_MIN=0 -e ENABLE_RABBITMQ_MODE=true -e RABBIT_QUEUE_INPUT=queue.input -e RABBIT_QUEUE_OUTPUT=queue.output -e RABBIT_USERNAME=username -e RABBIT_PASSWORD=password -e RABBIT_HOSTS=ip_broker1,ip_broker2,ip_broker3 -t compiler

Check out the exposed Prometheus metrics at the following URL: http://IP:PORT/prometheus

Other metrics are available.
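If you want to read these metrics from a script, a minimal parser for the Prometheus text format could look like the sketch below. The metric names in the sample are made up for illustration and are not the names this service exposes; the parser also assumes that samples carry no trailing timestamp.

```python
def parse_metrics(text: str) -> dict:
    """Parse Prometheus text exposition lines into {name_with_labels: value}."""
    metrics = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and "# HELP" / "# TYPE" comment lines.
        if not line or line.startswith("#"):
            continue
        # Assumption: no trailing timestamp, so the value is the last token.
        name, _, value = line.rpartition(" ")
        metrics[name] = float(value)
    return metrics


if __name__ == "__main__":
    # Hypothetical sample; fetch the real text from http://IP:PORT/prometheus.
    sample = """\
# HELP example_requests_total Hypothetical counter
# TYPE example_requests_total counter
example_requests_total{verdict="Accepted"} 12.0
example_running_containers 3.0
"""
    for name, value in parse_metrics(sample).items():
        print(name, value)
```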

By default, only console logging is enabled.

You can store logs in a file and access it through the /logfile endpoint by setting the environment variable ROLLING_FILE_LOGGING to true. All logs will be kept for 7 days with a maximum size of 1 GB.

You can also send logs to a logstash pipeline by setting the environment variable LOGSTASH_LOGGING to true, and LOGSTASH_SERVER_HOST and LOGSTASH_SERVER_PORT to the logstash host and port respectively.

- Zakaria Maaraki - Initial work - zakariamaaraki