OCR-RCNN-v2 is designed for autonomous elevator manipulation. Its goal is to let a robot operate elevators it has never visited before. This repository contains the perception part of the project. We published the initial version in the paper "A Novel OCR-RCNN for Elevator Button Recognition". This version improves the accuracy by 20% and runs in real time at ~10 FPS on 640x480 images with a GTX 950 or better GPU. We have also tested it on a laptop with a GTX 950M (2 GB memory), where it runs at ~6 FPS. We are working on optimizing the TX2 version to make it faster. It will be released soon, together with the dataset and the post-processing code.
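It can help to read the quoted frame rates as per-frame time budgets. The sketch below only restates the relation latency = 1 / FPS. The helper names are illustrative and are not part of this repository.

```python
# Illustrative helpers (not part of this repository): convert between frame
# rate and per-frame latency. float() keeps the division correct on Python 2.7.

def fps_to_latency_ms(fps):
    """Return the time budget per frame, in milliseconds, for a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / float(fps)


def latency_to_fps(seconds_per_frame):
    """Return the frame rate achieved when each frame takes `seconds_per_frame`."""
    if seconds_per_frame <= 0:
        raise ValueError("seconds_per_frame must be positive")
    return 1.0 / float(seconds_per_frame)


if __name__ == "__main__":
    for label, fps in [("desktop GPU (>= GTX 950)", 10), ("laptop GTX 950M", 6)]:
        print("%s: ~%.0f ms per 640x480 frame" % (label, fps_to_latency_ms(fps)))
```

Read in reverse, the ~0.7 s per image quoted for the unoptimized TX2 script works out to `latency_to_fps(0.7)`, or roughly 1.4 FPS.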

- Ubuntu == 16.04

- TensorFlow == 1.12.0

- Python == 2.7

- TensorRT == 4.0 (optional)

- 2 GB of GPU (or shared) memory
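The pinned versions above can be checked before anything is run. The following is a hypothetical helper, not something shipped with the repository. It takes the installed versions as a dict, which you might fill from `platform.python_version()` and `tensorflow.__version__`, and it uses a simple prefix match.

```python
# Hypothetical requirement checker. Only the pinned version strings come from
# this README; collecting the installed versions is left to the caller.

REQUIRED = {
    "python": "2.7",
    "tensorflow": "1.12.0",
    "tensorrt": "4.0",  # optional
}
OPTIONAL = set(["tensorrt"])


def version_matches(installed, pinned):
    """True if `installed` equals `pinned` or extends it (e.g. 2.7.17 matches 2.7)."""
    return installed.split(".")[:len(pinned.split("."))] == pinned.split(".")


def check_requirements(installed):
    """Return a list of problems; an empty list means every pin is satisfied."""
    problems = []
    for name, pinned in sorted(REQUIRED.items()):
        if name not in installed:
            if name not in OPTIONAL:
                problems.append("%s is missing (need %s)" % (name, pinned))
            continue
        if not version_matches(installed[name], pinned):
            problems.append("%s %s found, %s expected" % (name, installed[name], pinned))
    return problems
```

Because the match is a prefix match, the 1.12.1 wheel built later for the TX2 fails the exact `1.12.0` pin. Pinning `"1.12"` instead would accept it.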

Before running the code, please download the models into the code folder. There are five frozen TensorFlow models:

- detection_graph.pb: a general detection model that handles panel images of arbitrary size.

- ocr_graph.pb: a character recognition model that handles 180x180 button images.

- detection_graph_640x480.pb: a detection model that takes a fixed-size 640x480 image as input.

- detection_graph_640x480_optimized.pb: an optimized version of the detection model.

- ocr_graph_optimized.pb: an optimized version of the recognition model.
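The fixed-size detection model needs frames of other sizes to be resized to 640x480 first. Its boxes then have to be scaled back to the original frame. This is a minimal sketch under stated assumptions: boxes are `(xmin, ymin, xmax, ymax)` in pixels of the resized image, and the resize simply stretches the frame without preserving its aspect ratio. Check the actual output format of the frozen graph before relying on it.

```python
# Sketch only: map boxes detected on a 640x480 resized frame back to the
# original frame. Assumes pixel boxes (xmin, ymin, xmax, ymax) and a plain
# stretch resize (no letterboxing), so x and y are scaled independently.

MODEL_W, MODEL_H = 640, 480


def scale_factors(orig_w, orig_h, model_w=MODEL_W, model_h=MODEL_H):
    """Factors that map resized-image pixels back to original-image pixels."""
    return float(orig_w) / model_w, float(orig_h) / model_h


def box_to_original(box, orig_w, orig_h):
    """Map one (xmin, ymin, xmax, ymax) box from 640x480 space to the original frame."""
    sx, sy = scale_factors(orig_w, orig_h)
    xmin, ymin, xmax, ymax = box
    return (xmin * sx, ymin * sy, xmax * sx, ymax * sy)
```

For a 1280x960 camera frame, both factors are 2.0. A box covering the lower-right quarter of the model input therefore maps to `(640.0, 480.0, 1280.0, 960.0)`.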

To run on laptops and desktops (x86_64), you may need to install some packages first. Then clone the repository, move the downloaded models into place, and run one of the scripts:

    sudo apt install libjpeg-dev libpng12-dev libfreetype6-dev libxml2-dev libxslt1-dev
    sudo apt install ttf-mscorefonts-installer
    pip install pillow matplotlib lxml imageio --user
    git clone https://github.com/zhudelong/ocr-rcnn-v2.git
    cd ocr-rcnn-v2
    mv frozen/ ocr-rcnn-v2/
    python inference.py            # slow version: the two models are loaded separately
    python inference_640x480.py    # fast version: the two models are merged
    python ocr-rcnn-v2-visual.py   # visualization
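Before launching the scripts, it can save time to confirm that all five frozen models listed earlier are actually in place. The sketch below assumes they live in a folder named `frozen`, based on the `mv frozen/ ...` step. That folder name is an assumption, so pass whatever folder you actually used.

```python
# Sketch: list which of the five frozen models are missing from a folder.
# The default folder name "frozen" is an assumption, not a documented path.
import os

EXPECTED_MODELS = [
    "detection_graph.pb",
    "ocr_graph.pb",
    "detection_graph_640x480.pb",
    "detection_graph_640x480_optimized.pb",
    "ocr_graph_optimized.pb",
]


def missing_models(folder="frozen"):
    """Return the expected .pb files that are not present in `folder`."""
    return [name for name in EXPECTED_MODELS
            if not os.path.isfile(os.path.join(folder, name))]


if __name__ == "__main__":
    missing = missing_models()
    if missing:
        print("Missing models: " + ", ".join(missing))
    else:
        print("All frozen models found.")
```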

For the NVIDIA Jetson TX2 platform:

- Flash your system with JetPack 4.2.

- TensorFlow 1.12.0 has to be installed by compiling it from source. If you want to try our wheel instead, skip the following procedure.

- Set the TX2 power mode to MAXN (maximum performance):

      sudo nvpmodel -m 0

- Install the dependencies:

      sudo apt-get install openjdk-8-jdk
      sudo apt-get install libhdf5-dev libblas-dev gfortran
      sudo apt-get install libfreetype6-dev libpng-dev pkg-config
      pip install six mock h5py enum34 scipy numpy --user
      pip install keras --user

- Download and build the Bazel build tool (version 0.15.2):

      cd ~/Downloads
      wget https://github.com/bazelbuild/bazel/releases/download/0.15.2/bazel-0.15.2-dist.zip
      mkdir -p ~/src
      cd ~/src
      unzip ~/Downloads/bazel-0.15.2-dist.zip -d bazel-0.15.2-dist
      cd bazel-0.15.2-dist
      ./compile.sh
      sudo cp output/bazel /usr/local/bin
      bazel help

- Download the TensorFlow source code and check out the r1.12 branch:

      cd ~/src
      git clone https://github.com/tensorflow/tensorflow.git
      cd tensorflow
      git checkout r1.12

- Before compiling, please apply this patch.

- Configure TensorFlow 1.12. Please refer to item-9 and the official documentation:

      cd ~/src/tensorflow
      ./configure

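- Compile TensorFlow 1.12. The `--local_resources 4096,2.0,1.0` option caps Bazel at 4096 MB of RAM, 2 CPU cores and 1.0 I/O capacity, which keeps the build within the TX2's memory:

      bazel build --config=opt --config=cuda --local_resources 4096,2.0,1.0 \
          //tensorflow/tools/pip_package:build_pip_package \
          --cxxopt="-D_GLIBCXX_USE_CXX11_ABI=0"

- Build the pip wheel file. It is written to the wheel/tensorflow_pkg folder:

      ./bazel-bin/tensorflow/tools/pip_package/build_pip_package wheel/tensorflow_pkg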

- Install TensorFlow with pip:

      cd wheel/tensorflow_pkg
      pip install tensorflow-1.12.1-cp27-cp27mu-linux_aarch64.whl --user

- Run the Python code on the TX2 platform:

      python inference_tx2.py   # ~0.7 s per image, without optimization

- The model can be converted to a TensorRT engine for faster inference. If you are interested in converting the model yourself, please check here.
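The wheel installed in the pip step above, `tensorflow-1.12.1-cp27-cp27mu-linux_aarch64.whl`, follows the PEP 427 naming scheme `name-version[-build]-python-abi-platform.whl`. That scheme explains why it only installs on CPython 2.7 on 64-bit ARM. The sketch below splits such a name into its tags. `wheel_fits_here` is a hypothetical, deliberately rough check: it ignores the ABI tag and the compatibility rules pip applies for the real decision.

```python
# Sketch: split a wheel filename into its PEP 427 tags and roughly compare the
# python/platform tags with the running interpreter. pip's real check is stricter.
import platform
import sys


def parse_wheel_filename(filename):
    """Return (name, version, python_tag, abi_tag, platform_tag) for a .whl name."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel: %s" % filename)
    parts = filename[:-len(".whl")].split("-")
    if len(parts) == 6:  # optional build tag present; drop it
        parts = parts[:2] + parts[3:]
    if len(parts) != 5:
        raise ValueError("unexpected wheel name: %s" % filename)
    return tuple(parts)


def wheel_fits_here(filename):
    """Rough Linux-only check: CPython version tag and machine architecture."""
    _, _, py_tag, _, plat_tag = parse_wheel_filename(filename)
    here_py = "cp%d%d" % sys.version_info[:2]
    here_plat = "linux_" + platform.machine()
    return py_tag == here_py and plat_tag == here_plat
```

On a TX2 running Python 2.7, `platform.machine()` reports `aarch64`, so the wheel above passes this check. On an x86_64 desktop it does not.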

If you find this work helpful for your project, please consider citing our paper:

    @inproceedings{zhu2018novel,
      title={A Novel OCR-RCNN for Elevator Button Recognition},
      author={Zhu, Delong and Li, Tingguang and Ho, Danny and Zhou, Tong and Meng, Max QH},
      booktitle={2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
      pages={3626--3631},
      year={2018},
      organization={IEEE}
    }

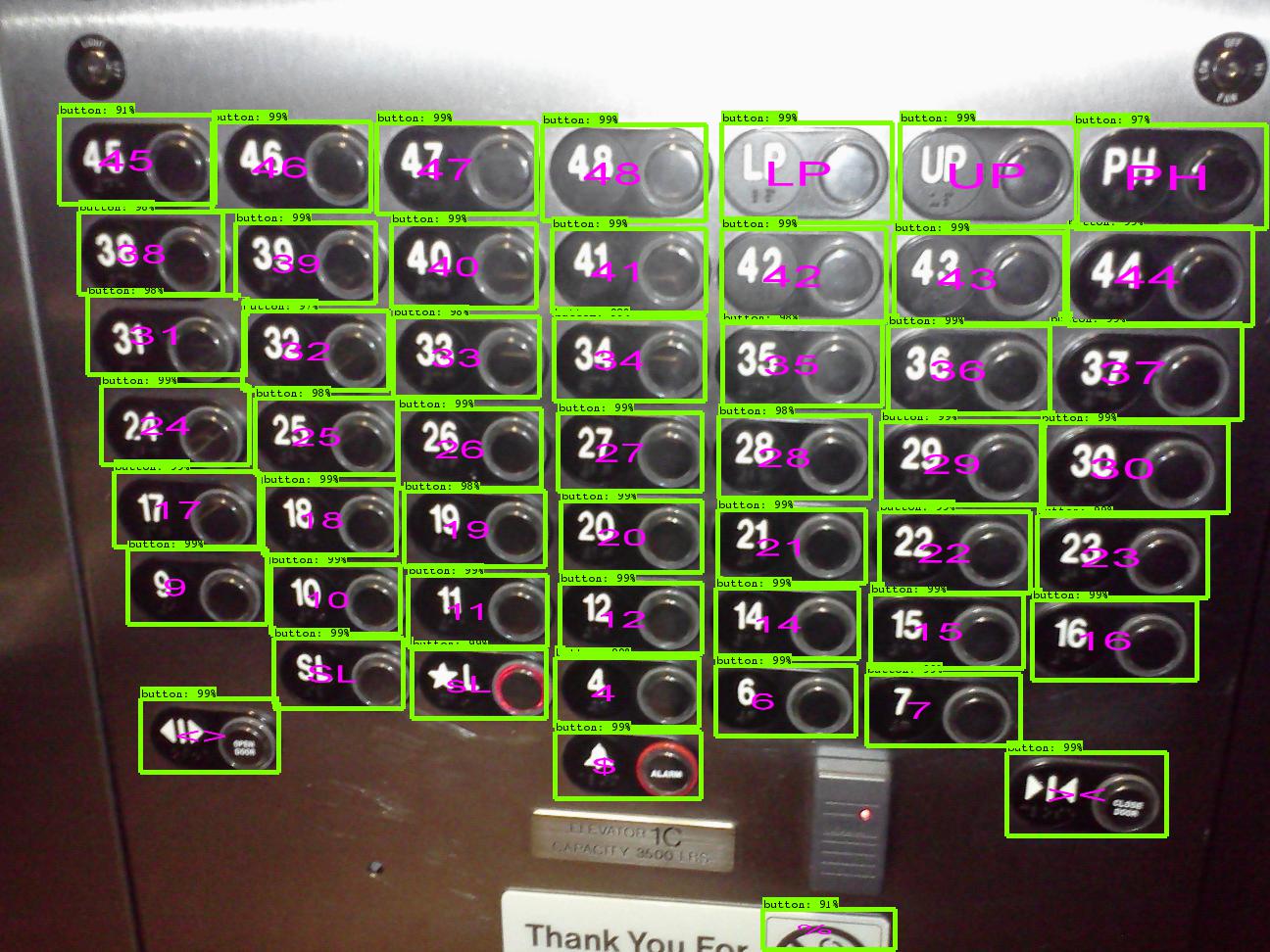

Two demo images are shown below. They are screenshots from two YouTube videos. The character recognition results are drawn at the center of each bounding box.

Image Source: [https://www.youtube.com/watch?v=bQpEYpg1kLg&t=8s]


Image Source: [https://www.youtube.com/watch?v=k1bTibYQjTo&t=9s]

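The demo images place each recognized label at the center of its bounding box. A minimal sketch of that placement rule follows. It is not the repository's visualization code. Boxes are assumed to be `(xmin, ymin, xmax, ymax)` in pixels, and the text size would come from your drawing library, for example `ImageDraw.Draw.textbbox` in recent Pillow releases or `textsize` in older ones.

```python
# Sketch of centred label placement (not the repository's visualization code).
# Assumes pixel boxes (xmin, ymin, xmax, ymax) and a measured text size.

def box_center(box):
    """Center point (x, y) of an (xmin, ymin, xmax, ymax) box."""
    xmin, ymin, xmax, ymax = box
    return ((xmin + xmax) / 2.0, (ymin + ymax) / 2.0)


def label_origin(box, text_w, text_h):
    """Top-left corner at which to draw a text_w x text_h label so it is centred."""
    cx, cy = box_center(box)
    return (cx - text_w / 2.0, cy - text_h / 2.0)
```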