Adapted to the latest ComfyUI (Python 3.11, torch 2.1.2+cu121).

- Added the AppInfo node, which turns a workflow into a web app with a simple configuration.
- Support for switching between multiple web apps.
- Workflows published as apps can be edited again from the right-click menu.
- Web apps can be assigned categories, and can be edited and updated from the ComfyUI right-click menu.

Example:

- workflow
  - text-to-image
- APP-JSON:
  - text-to-image
  - image-to-image
  - text-to-text

The following nodes are currently supported as input nodes in the app UI: Load Image, CLIPTextEncode, PromptSlide, TextInput_, Color, FloatSlider, IntNumber, CheckpointLoaderSimple, LoraLoader

Output nodes: PreviewImage, SaveImage, ShowTextForGPT, VHS_VideoCombine
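To see which parts of a workflow would become app inputs and outputs, the node types above can be matched against each node's `class_type` in ComfyUI's API-format workflow JSON. This is a sketch under assumptions: `LoadImage` is assumed to be the class name behind "Load Image", and `classify_nodes` is a hypothetical helper, not the AppInfo node's code.

```python
import json

# Node types from the lists above. "LoadImage" is ASSUMED to be the
# class_type behind the "Load Image" node; the rest are used verbatim.
INPUT_TYPES = {
    "LoadImage", "CLIPTextEncode", "PromptSlide", "TextInput_", "Color",
    "FloatSlider", "IntNumber", "CheckpointLoaderSimple", "LoraLoader",
}
OUTPUT_TYPES = {"PreviewImage", "SaveImage", "ShowTextForGPT", "VHS_VideoCombine"}


def classify_nodes(api_workflow: dict) -> tuple[list[str], list[str]]:
    """Hypothetical helper: split node ids into UI inputs and UI outputs."""
    inputs, outputs = [], []
    for node_id, node in api_workflow.items():
        kind = node.get("class_type")
        if kind in INPUT_TYPES:
            inputs.append(node_id)
        elif kind in OUTPUT_TYPES:
            outputs.append(node_id)
    return inputs, outputs


workflow = json.loads("""{
  "3": {"class_type": "KSampler", "inputs": {}},
  "6": {"class_type": "CLIPTextEncode", "inputs": {"text": "a cat"}},
  "9": {"class_type": "SaveImage", "inputs": {}}
}""")
print(classify_nodes(workflow))  # (['6'], ['9'])
```

Nodes that match neither set (such as the sampler here) simply stay hidden from the app UI in this sketch.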

ScreenShareNode & FloatingVideoNode: ComfyUI can now capture the screen pixel stream from any software, and the stream can be used together with LCM-LoRA. Let's get started with implementation and design! 💻🌐

newNode.mp4

ScreenShareNode & FloatingVideoNode

!! Please access ComfyUI through an HTTPS address (https://127.0.0.1).

Voice + Real-time Face Swap Workflow

Supports calling multiple GPT-style models: ChatGPT and ChatGLM3. Some of the code was provided by rui. Set the API base URL as follows:

- OpenAI's service: https://api.openai.com/v1
- A local LLM service: http://127.0.0.1:xxxx/v1
- Azure OpenAI: https://xxxx.openai.azure.com

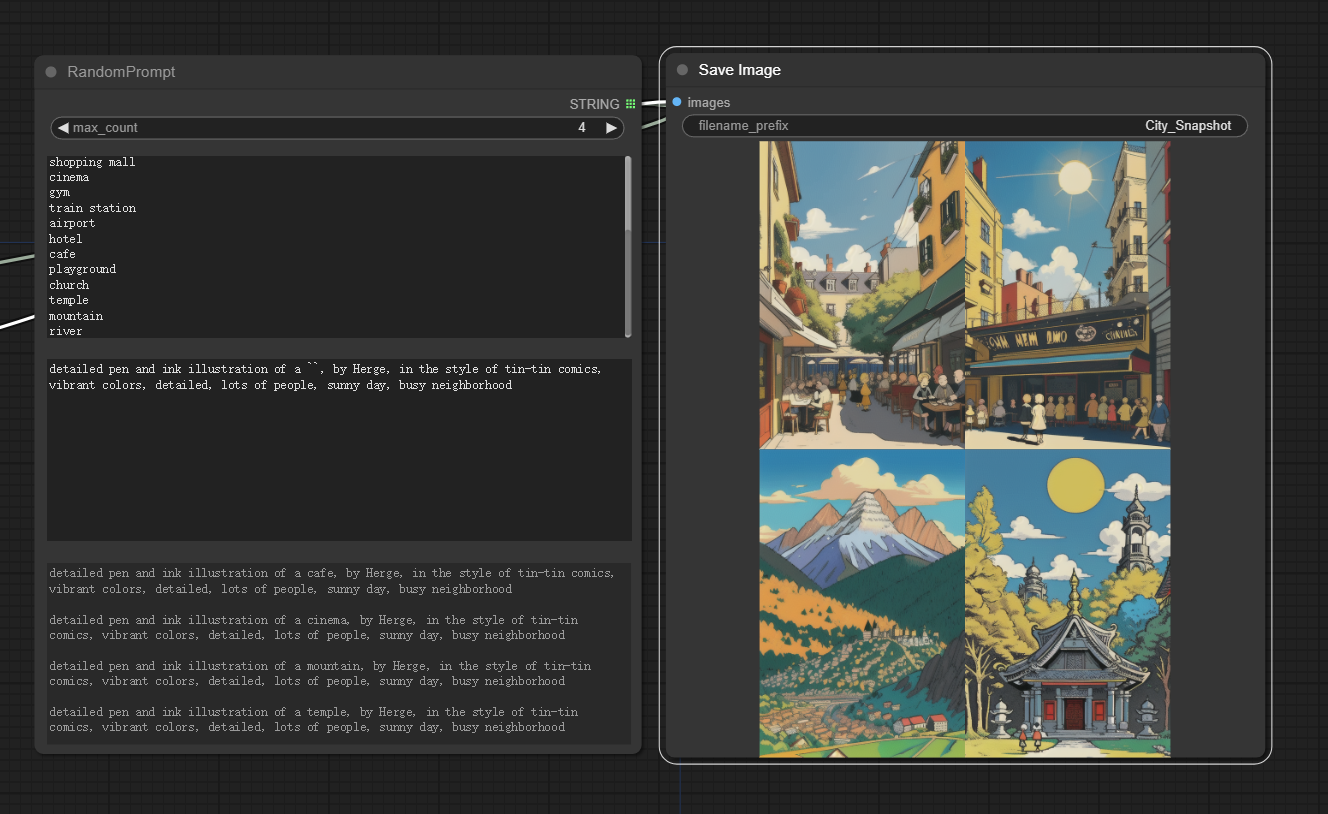

randomPrompt

A new layer node class has been added that separates an image into layers. After merging the layers, you can feed the result into ControlNet for further processing.
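Merging layers back into one image is typically done with alpha compositing. The sketch below applies the standard Porter-Duff "over" operator to straight (non-premultiplied) RGBA pixels in plain Python; it illustrates the idea and is not taken from the node's source.

```python
# Straight (non-premultiplied) RGBA pixel, each channel in [0, 1].
Pixel = tuple[float, float, float, float]


def over(top: Pixel, bottom: Pixel) -> Pixel:
    """Porter-Duff "over": composite `top` onto `bottom`."""
    tr, tg, tb, ta = top
    br, bg, bb, ba = bottom
    out_a = ta + ba * (1 - ta)
    if out_a == 0:
        return (0.0, 0.0, 0.0, 0.0)  # both pixels fully transparent

    def mix(tc: float, bc: float) -> float:
        return (tc * ta + bc * ba * (1 - ta)) / out_a

    return (mix(tr, br), mix(tg, bg), mix(tb, bb), out_a)


def merge_layers(layers: list[list[Pixel]]) -> list[Pixel]:
    """Flatten layers ordered bottom to top; each layer is a flat pixel list."""
    result = layers[0]
    for layer in layers[1:]:
        result = [over(t, b) for t, b in zip(layer, result)]
    return result


red = (1.0, 0.0, 0.0, 1.0)
half_blue = (0.0, 0.0, 1.0, 0.5)
print(merge_layers([[red], [half_blue]]))  # [(0.5, 0.0, 0.5, 1.0)]
```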

Monitors a local folder for image changes and triggers the workflow in real time. Common image formats are supported, PSD in particular, so it works well alongside Photoshop.

Conveniently loads images from a fixed address on the internet, so that the default images in a workflow are always available when it runs.
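A minimal sketch of fetching an image from a fixed URL with the standard library. The on-disk cache (keyed by a SHA-256 of the URL) is an assumption added so repeated runs don't refetch; it is not necessarily what the node does, and `fetch_cached` is a hypothetical name.

```python
import hashlib
import urllib.request
from pathlib import Path


def fetch_cached(url: str, cache_dir: Path, timeout: float = 30.0) -> bytes:
    """Return the bytes at `url`, downloading only if not already cached."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    # One cache file per URL, named after a hash of the URL.
    cached = cache_dir / hashlib.sha256(url.encode("utf-8")).hexdigest()
    if cached.exists():
        return cached.read_bytes()
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        data = resp.read()
    cached.write_bytes(data)
    return data
```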

The Color node provides a color picker for easy color selection; the Font node offers built-in font selection for use with TextImage to generate text images; and the DynamicDelayByText node delays execution based on the length of the input text.
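How DynamicDelayByText maps text length to a delay isn't specified here. A minimal sketch, assuming a linear rule (a fixed number of seconds per character, clamped to a range) with hypothetical parameter names:

```python
import time


def delay_for_text(text: str, seconds_per_char: float = 0.05,
                   min_delay: float = 0.0, max_delay: float = 10.0) -> float:
    """ASSUMED rule: delay grows linearly with length, clamped to a range."""
    return max(min_delay, min(max_delay, len(text) * seconds_per_char))


def delayed_passthrough(text: str, **kwargs) -> str:
    """Sleep for the computed delay, then pass the text through unchanged."""
    time.sleep(delay_for_text(text, **kwargs))
    return text
```

Clamping keeps a very long input from stalling the queue indefinitely.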

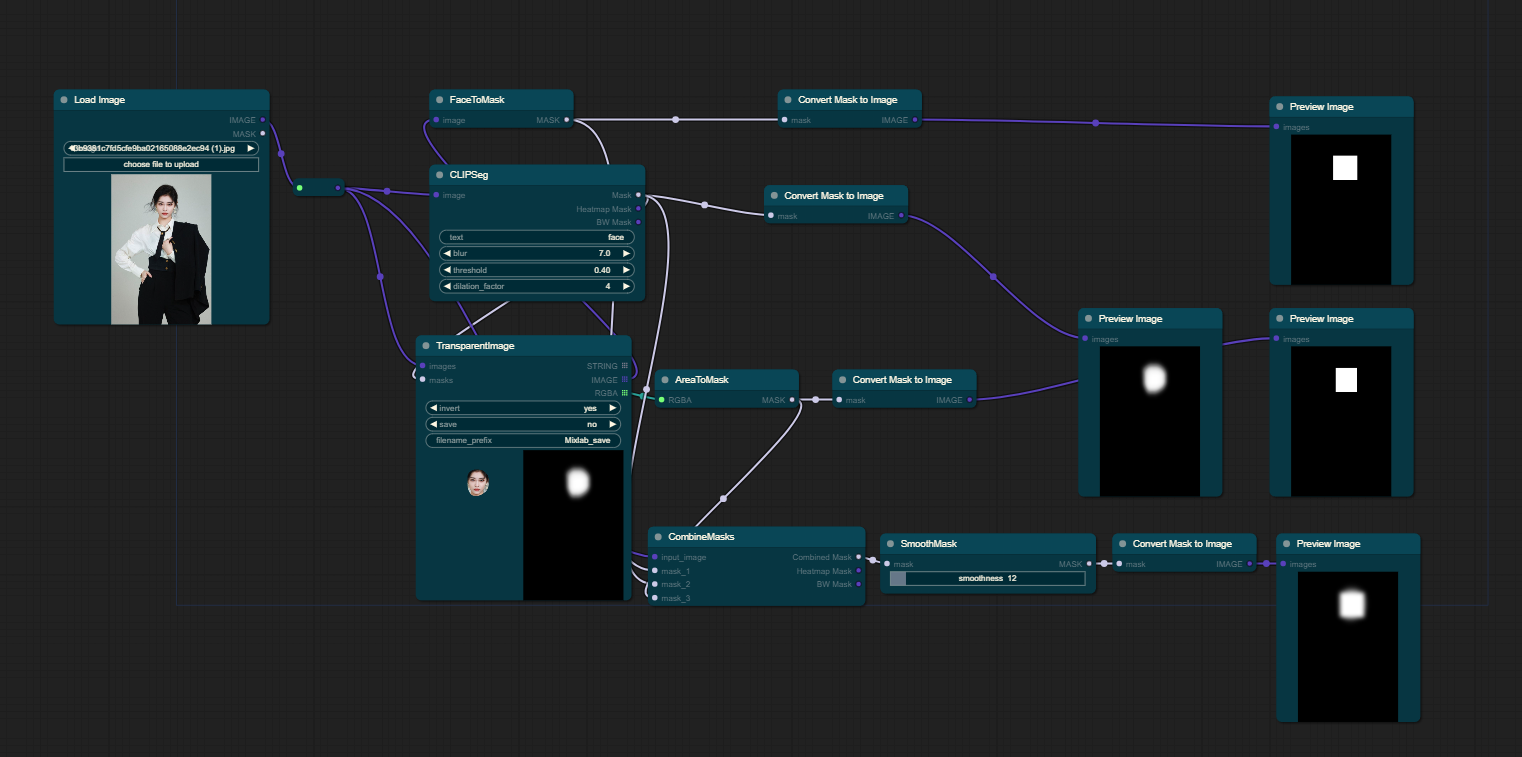

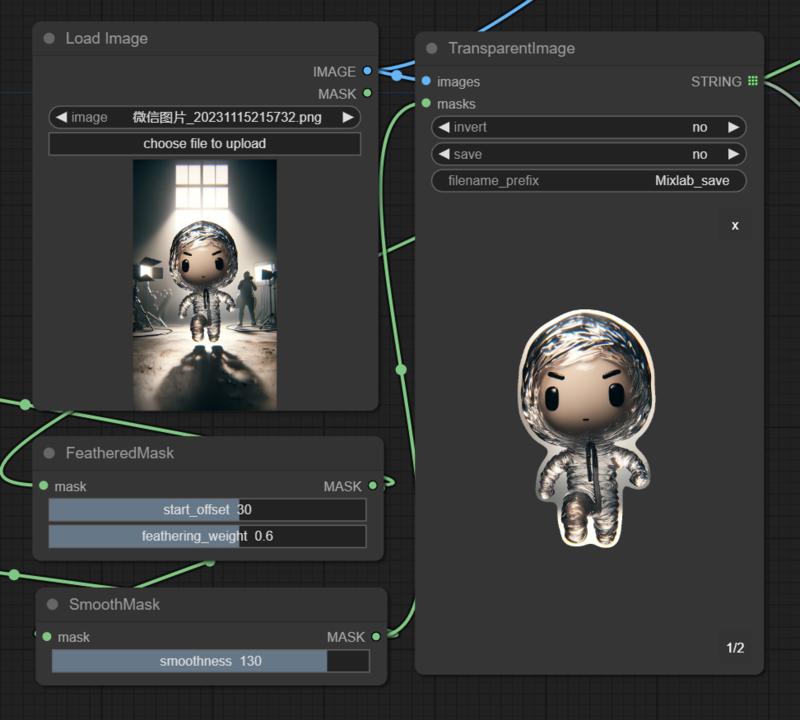

TransparentImage

Consistency Decoder

After downloading the OpenAI VAE model (decoder.pt), place it in the "models/vae" directory.

https://openaipublic.azureedge.net/diff-vae/c9cebd3132dd9c42936d803e33424145a748843c8f716c0814838bdc8a2fe7cb/decoder.pt


FeatheredMask, SmoothMask
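Feathering softens a mask's hard edge so it fades out gradually. A minimal sketch using a separable box blur on a 2D list of values in [0, 1]; the actual nodes may use a different kernel (a Gaussian blur is common), and `feather` is a hypothetical name.

```python
def box_blur_1d(row: list[float], radius: int) -> list[float]:
    """Average each value with its neighbors within `radius`.

    At the borders the window shrinks instead of padding with zeros.
    """
    n = len(row)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(row[lo:hi]) / (hi - lo))
    return out


def feather(mask: list[list[float]], radius: int) -> list[list[float]]:
    """Separable box blur: blur every row, then every column."""
    if radius <= 0:
        return [row[:] for row in mask]
    rows = [box_blur_1d(r, radius) for r in mask]
    cols = [box_blur_1d(list(c), radius) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


print(feather([[0.0, 0.0, 1.0, 1.0, 1.0]], radius=1))
```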

Add edges to an image.

LaMaInpainting

- Add "help" option to the context menu for each node.

- Add "Nodes Map" option to the global context menu.

When a loaded graph contains missing nodes, you can now jump directly to GitHub to search for them.
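One plausible way to build such a link, assuming GitHub's web search URL with `q` and `type=repositories` query parameters; the exact query the extension issues is not documented here, and `missing_node_links` is a hypothetical helper.

```python
from urllib.parse import urlencode


def github_search_url(node_type: str) -> str:
    """GitHub repository search URL for a node type name."""
    return "https://github.com/search?" + urlencode({"q": node_type, "type": "repositories"})


def missing_node_links(workflow_types: list[str], installed: set[str]) -> dict[str, str]:
    """Map each node type used in the graph but not installed to a search URL."""
    return {t: github_search_url(t) for t in sorted(set(workflow_types) - installed)}


print(missing_node_links(["KSampler", "LaMaInpainting"], {"KSampler"}))
```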

v0.8.0 🚀🚗🚚🏃 LaMaInpainting

- Added LaMaInpainting
- Optimized the output of the Color node
- Fixed inaccurate node positioning on high-resolution displays

Download CLIPSeg and move it to: models/clipseg

To install manually, clone the repo into the custom_nodes directory with these commands:

```
cd ComfyUI/custom_nodes
git clone https://github.com/shadowcz007/comfyui-mixlab-nodes.git
```

Install the requirements by running install.bat directly:

```
cd ComfyUI/custom_nodes/comfyui-mixlab-nodes
install.bat
```

or install the requirements using:

```
../../../python_embeded/python.exe -s -m pip install -r requirements.txt
```

If you are using a venv, make sure you have it activated before installation and use:

```
pip3 install -r requirements.txt
```

Visit www.mixcomfy.com for more beta features, and follow the WeChat official account: Mixlab无界社区

- Audio playback node: with visualization, multi-track support, and configurable per-track volume

- vector https://github.com/GeorgLegato/stable-diffusion-webui-vectorstudio
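Multi-track playback with per-track volume usually comes down to scaling each track's samples by its volume and summing them. A rough sketch on float samples in [-1, 1], with hard clipping as the assumed overflow policy; `mix_tracks` is a hypothetical name and this is not the audio node's actual implementation.

```python
def mix_tracks(tracks: list[list[float]], volumes: list[float]) -> list[float]:
    """Sum tracks sample by sample, each scaled by its volume, then clip."""
    if len(tracks) != len(volumes):
        raise ValueError("need exactly one volume per track")
    length = max((len(t) for t in tracks), default=0)
    mixed = []
    for i in range(length):
        # Shorter tracks simply stop contributing once they run out.
        s = sum(t[i] * v for t, v in zip(tracks, volumes) if i < len(t))
        mixed.append(max(-1.0, min(1.0, s)))  # hard-clip to the valid range
    return mixed
```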