A comprehensive codebase for training and finetuning Image <> Latent models.

- Trainer for VAE, VQ-VAE, or direct AE
  - Basic trainer
  - Decoder-only finetuning
  - PEFT
- Equivariance regularization (EQ-VAE)
  - Rotate
  - Scale down
  - Scale up + crop
  - Crop
  - Random affine
  - Blending
- Adversarial loss
  - Investigate a better discriminator setup
- Latent regularization
  - Discrete VAE
  - Kepler codebook regularization loss
- Models
  - MAE for latents
  - Windowed/NATTEN attention for commonly used VAE setups
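The equivariance transforms listed above have to act consistently on an image and on its latent. The following is a minimal NumPy sketch of three of them (rotation, downscaling, and a crop aligned to the latent grid). It assumes channel-first `(C, H, W)` arrays and a latent that is 8x smaller spatially than the image, as in the SD/SDXL VAE. The names `rotate`, `scale_down`, `paired_crop` and `DOWNSAMPLE` are hypothetical illustrations, not HakuLatent's API.

```python
import numpy as np

DOWNSAMPLE = 8  # assumed image-to-latent spatial factor (SD/SDXL VAE style)


def rotate(x: np.ndarray, k: int) -> np.ndarray:
    """Rotate a (C, H, W) array by k * 90 degrees in the spatial plane."""
    return np.rot90(x, k=k, axes=(1, 2)).copy()


def scale_down(x: np.ndarray, factor: int) -> np.ndarray:
    """Average-pool a (C, H, W) array by an integer factor."""
    c, h, w = x.shape
    assert h % factor == 0 and w % factor == 0, "size must be divisible by factor"
    return x.reshape(c, h // factor, factor, w // factor, factor).mean(axis=(2, 4))


def paired_crop(img: np.ndarray, lat: np.ndarray, top: int, left: int, size: int):
    """Crop image and latent to the same region.

    Offsets and size are given in image pixels and must be multiples of
    DOWNSAMPLE, so the latent crop covers exactly the same area.
    """
    assert top % DOWNSAMPLE == 0 and left % DOWNSAMPLE == 0 and size % DOWNSAMPLE == 0
    img_c = img[:, top:top + size, left:left + size]
    t, l, s = top // DOWNSAMPLE, left // DOWNSAMPLE, size // DOWNSAMPLE
    lat_c = lat[:, t:t + s, l:l + s]
    return img_c, lat_c


rng = np.random.default_rng(0)
img = rng.random((3, 64, 64))
lat = scale_down(img, DOWNSAMPLE)  # stand-in "latent" of shape (3, 8, 8)
img_c, lat_c = paired_crop(img, lat, top=16, left=8, size=32)
print(img_c.shape, lat_c.shape)
```

Aligning crops to the downsampling factor is one simple way to keep the image and latent crops in exact correspondence. Transforms like random affine or blending need resampling on both sides and are not covered by this sketch.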

EQ-SDXL-VAE on Hugging Face: KBlueLeaf/EQ-SDXL-VAE

Quick proof-of-concept run (significant quality degradation, but also a significantly smoother latent):

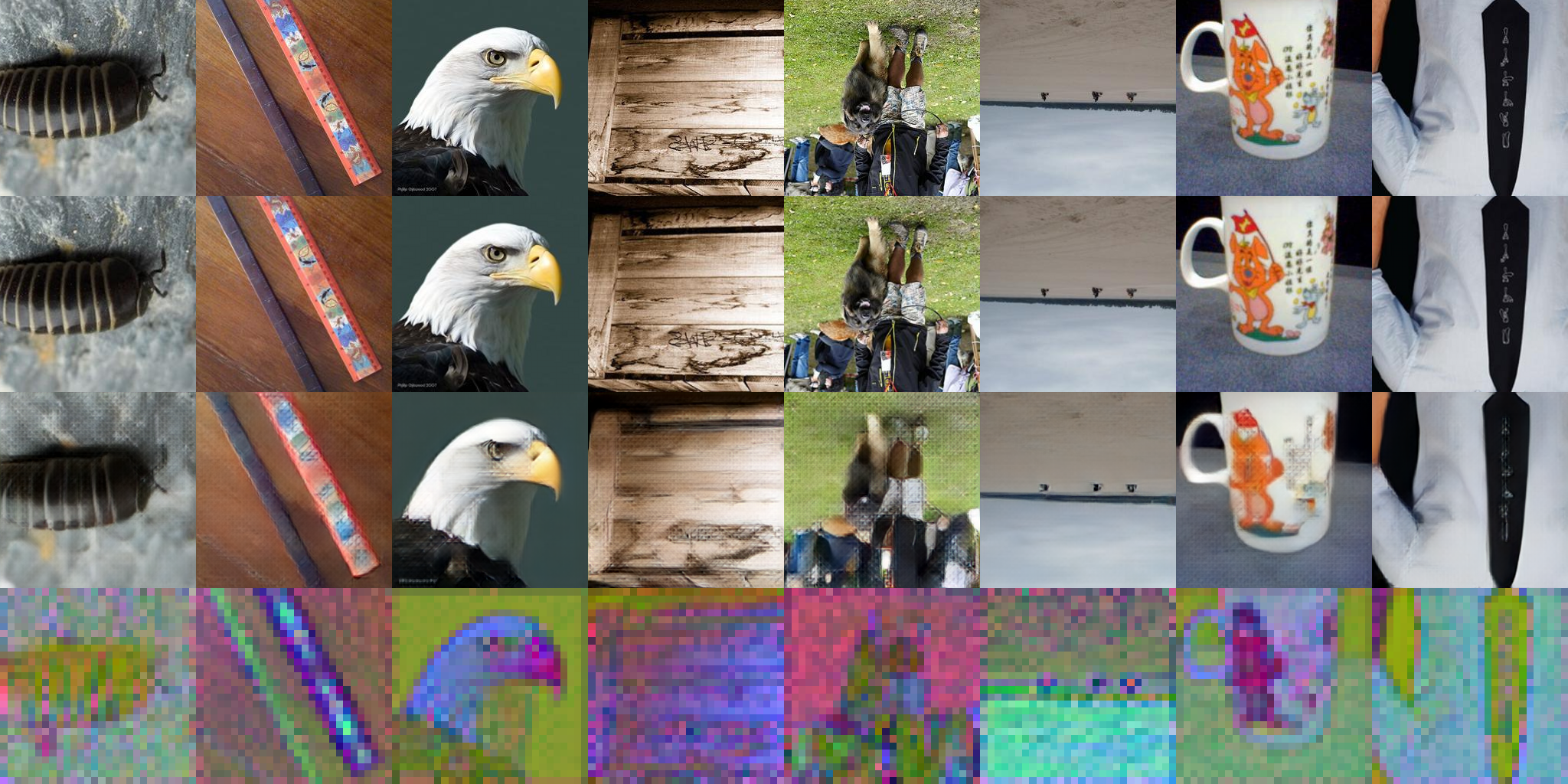

| Before EQ-VAE | After EQ-VAE |
|---|---|

Rows 1 to 4 show the original image, the transformed image, the image decoded from the transformed latent, and the transformed latent.
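The comparison above follows the EQ-VAE idea: apply a spatial transform to the latent, decode it, and compare the result with the same transform applied to the image. Below is a minimal sketch of that objective. It assumes a mean-squared-error penalty and 90-degree rotations as the transform. `encode` and `decode` are toy stand-ins (average pooling and nearest-neighbour upsampling), not a real VAE, and `eq_loss` is a hypothetical name, not HakuLatent's trainer code.

```python
import numpy as np

FACTOR = 8  # assumed image-to-latent downsampling factor


def encode(img: np.ndarray) -> np.ndarray:
    """Toy encoder: average-pool a (C, H, W) image by FACTOR."""
    c, h, w = img.shape
    return img.reshape(c, h // FACTOR, FACTOR, w // FACTOR, FACTOR).mean(axis=(2, 4))


def decode(lat: np.ndarray) -> np.ndarray:
    """Toy decoder: nearest-neighbour upsample a (C, h, w) latent by FACTOR."""
    return lat.repeat(FACTOR, axis=1).repeat(FACTOR, axis=2)


def rot90(x: np.ndarray, k: int) -> np.ndarray:
    """Spatial transform T: rotate a (C, H, W) array by k * 90 degrees."""
    return np.rot90(x, k=k, axes=(1, 2))


def eq_loss(img: np.ndarray, k: int) -> float:
    """MSE between D(T(E(x))) and T(x)."""
    target = rot90(img, k)                # T(x): transformed image
    pred = decode(rot90(encode(img), k))  # D(T(E(x))): decoded transformed latent
    return float(np.mean((pred - target) ** 2))


rng = np.random.default_rng(0)
# Piecewise-constant 32x32 image: the toy autoencoder reconstructs it (almost) exactly.
blocky = rng.random((3, 4, 4)).repeat(FACTOR, axis=1).repeat(FACTOR, axis=2)
noisy = rng.random((3, 32, 32))  # fine detail that pooling cannot preserve
print(eq_loss(blocky, 1), eq_loss(noisy, 1))
```

In a real trainer, a term like this would typically be combined with the usual reconstruction loss (and, for a VAE, the KL term). A low value means the decoder produces the transformed image directly from the transformed latent.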

@misc{kohakublueleaf_hakulatent,
  author = {Shih-Ying Yeh (KohakuBlueLeaf)},
  title = {HakuLatent: A comprehensive codebase for training and finetuning Image <> Latent models},
  year = {2024},
  publisher = {GitHub},
  journal = {GitHub repository},
  url = {https://github.com/KohakuBlueleaf/HakuLatent},
  note = {Python library for training and finetuning Variational Autoencoders and related latent models, implementing EQ-VAE and other techniques.}
}