This repo guides you through building analyics for myBI Market with Modern Data Stack:

- myBI Connect (Extract - Load tool)

- Clickhouse (Database)

- dbt (Transformations) + mybi-dbt-core module

- Metabase (Business Intelligence)

- Github Actions (Continuous Integration + Deployment)

- Configure environment

- Install and configure mybi_dbt_core package

- Configure data sources

- Build staging layer models

- Model Data Marts

- Set up metrics layer

- Visualize on a dashboard

- Publish dbt project docs

- Introduce Continuous Integration

-

Install prerequisites:

-

Fork & Clone this repository and open in IDE

-

Spin up Docker containers

All the services are configured via Docker containers.

- Clickhouse

- Metabase

- Cube

# launch containers: clickhouse, metabase, cube docker-compose build --no-cache docker-compose up -d -

Open dev container with dbt installed

devcontainer open . # test connections dbt --version dbt debug

Install and configure mybi_dbt_core package

-

Install module via packages.yml

dbt clean # clean temp files dbt deps # install dependencies (modules)

-

✅ Enable only relevant data models (and disable the rest)

We will use specific data sources:

- general – General

- direct – Yandex.Direct

- mytarget – myTarget

- amocrm – AmoCRM

- ga – Google Analytics

Confirm with command:

dbt ls --resource-type model -s tag:staging -

✅ Assign variables

Certain variable values have to be set:

- source database connection details

- database and schema name to find mybi source data

- specific

account_idvalues to be included

-

✅ Turn on custom schema management

I use generate_schema_name.sql macro to set target schema names:

Renders a schema name given a custom schema name. In production, this macro will render out the overriden schema name for a model. Otherwise, the default schema specified in the active target is used.

Take a look at custom_schema_management.sql macro to find out more.

-

Create Clickhouse database of PostgreSQL Database Engine with source data:

dbt run-operation init_source_data

Staging models are alredy configured for you in mybi_dbt_core package:

All you need to do is just build these models in one command:

dbt build -s tag:stagingWith staging models in place we now can proceed to data modeling.

-

Intermediate models include:

- Wide tables for Google Analytics goals and sessions

- Aggregated table for myTarget campaigns facts

- Aggregated tables for Yandex.Direct context and search facts

- Comprehensive testing

dbt build -s tag:intermediate

-

Data Marts include:

- Costs (uniting Yandex.Direct + myTarget)

- Google Analytics events (uniting sessions and goal completions)

- Tracker which combines costs and events in a single table

dbt build --full-refresh -s tag:marts

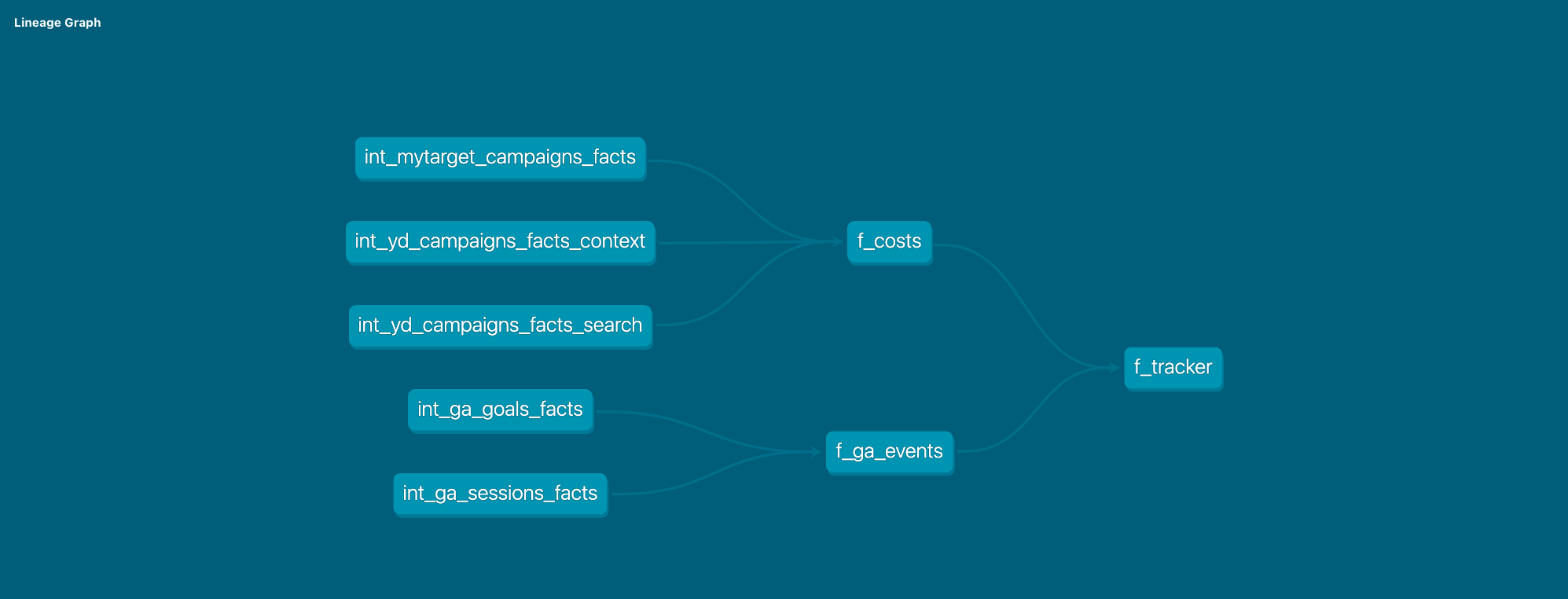

Take a look at the project graph (DAG):

- Define dbt Metrics in metrics.yml

- Access dbt Metrics via materalizing in f_metrics.sql

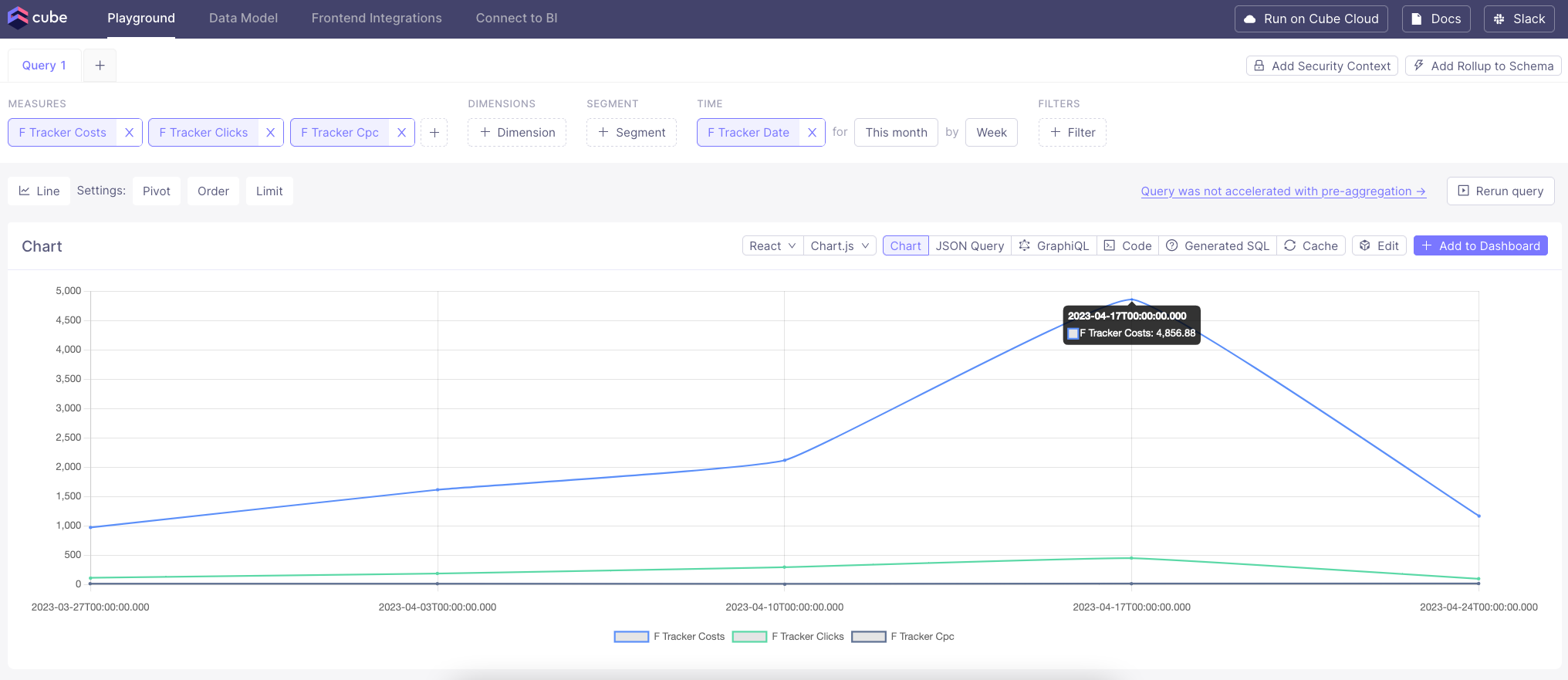

- Define dbt Metrics in f_tracker.yml

- Access dbt Metrics through Cube playground or Metabase

Now we are ready to visualize key metrics on a dashboard.

I have configured Clickhouse connection and prepared Metabase dashboard which you can access at http://localhost:3000/dashboard/1-mybi-tutorial:

- Email address:

[email protected] - Password:

tutorial101

You may explore data from Metabase yourself or even build your own dashboard.

dbt Docs can be easily served locally on http://localhost:8080:

dbt docs generate

dbt docs serveOr you may access pre-build version from Github Pages:

Let's say you want to introduce some code changes. How do you ensure data quality?

You protect your main branch and require Pull Requests to have:

- ✅ Continuous Integration checks successfully pass

- ✅ Code review from team member of Code Owner

This way you make sure to deploy high quality and functional code.

If you have any questions or comments please create an issue.