🙋 Please let us know if you find a mistake or have any suggestions!

🌟 If you find this resource helpful, please consider starring this repository and citing our research:

    @article{kowsher2024propulsion,
      title={Propulsion: Steering LLM with Tiny Fine-Tuning},
      author={Kowsher, Md and Prottasha, Nusrat Jahan and Bhat, Prakash},
      journal={arXiv preprint arXiv:2409.10927},
      year={2024}
    }

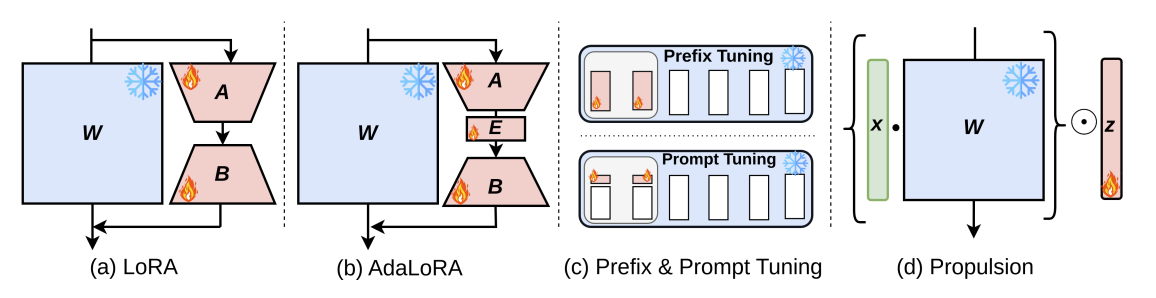

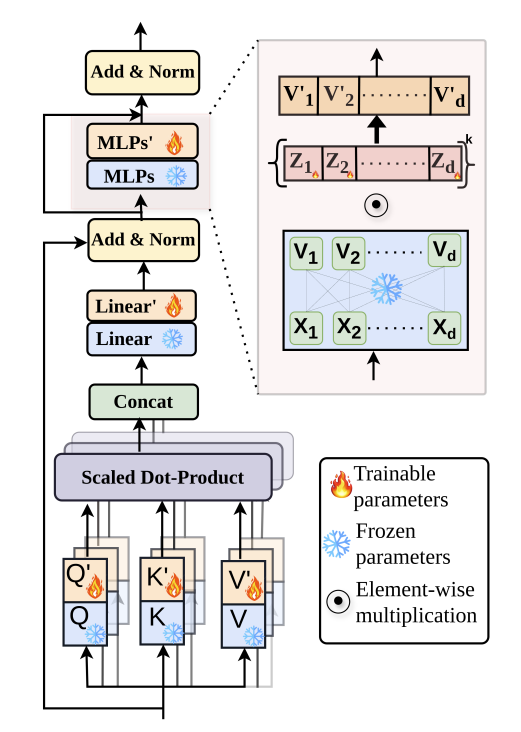

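Propulsion is a parameter-efficient fine-tuning (PEFT) method designed to optimize task-specific performance while drastically reducing computational overhead. Rather than updating the pre-trained weights, it trains a small set of lightweight parameters that selectively re-scale specific dimensions of the pre-trained model's outputs.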
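The core idea of training a few scaling parameters instead of a full weight matrix can be illustrated with a minimal pure-Python sketch. It assumes, as a simplification and not as the repository's actual implementation, that the trainable parameters are one scaling factor per output dimension of a frozen linear layer. The class `PropulsionLinearSketch`, the helper `trainable_params`, and the attribute `z` are hypothetical names chosen for illustration.

```python
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


class PropulsionLinearSketch:
    """Hypothetical illustration: a frozen linear layer whose output is
    re-scaled element-wise by a small trainable vector `z`."""

    def __init__(self, weight: Matrix) -> None:
        # Frozen pre-trained weights with shape (d_out, d_in); never updated here.
        self.weight = weight
        # Trainable scaling vector, one entry per output dimension.
        # Initialized to ones so the layer starts out identical to the pre-trained one.
        self.z = [1.0] * len(weight)

    def base_output(self, x: Vector) -> Vector:
        # Plain matrix-vector product W @ x using the frozen weights.
        return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in self.weight]

    def forward(self, x: Vector) -> Vector:
        # Element-wise re-scaling of each output dimension: y_i = z_i * (W x)_i.
        return [z_i * h_i for z_i, h_i in zip(self.z, self.base_output(x))]


def trainable_params(d_in: int, d_out: int) -> Dict[str, int]:
    # Full fine-tuning updates every weight; this sketch updates only z.
    return {"full_fine_tuning": d_in * d_out, "rescaling_vector": d_out}


layer = PropulsionLinearSketch([[1.0, 2.0], [3.0, 4.0]])
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0], unchanged while z is all ones
layer.z = [2.0, 0.5]              # the values fine-tuning would adjust
print(layer.forward([1.0, 1.0]))  # [6.0, 3.5]
print(trainable_params(1024, 1024))
```

Under this assumption, a 1024 x 1024 layer needs 1,024 trainable values instead of 1,048,576, which is where the parameter savings come from.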

Use Python 3.11 from Miniconda, with the following package versions:

- torch==2.3.0

- accelerate==0.33.0

- einops==0.7.0

- matplotlib==3.7.0

- numpy==1.23.5

- pandas==1.5.3

- scikit_learn==1.2.2

- scipy==1.12.0

- tqdm==4.65.0

- peft==0.12.0

- transformers==4.44.0

- deepspeed==0.15.1

- sentencepiece==0.2.0

To install all dependencies:

    pip install -r requirements.txt
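After installing, you can confirm that your environment matches the pinned versions with a small script built on the standard library's `importlib.metadata`. This is a minimal sketch: the `PINNED` dictionary simply mirrors the dependency list above, and `find_mismatches` is a hypothetical helper name.

```python
import sys
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Optional

# Mirrors the pinned versions listed above (distribution name -> version).
PINNED: Dict[str, str] = {
    "torch": "2.3.0",
    "accelerate": "0.33.0",
    "einops": "0.7.0",
    "matplotlib": "3.7.0",
    "numpy": "1.23.5",
    "pandas": "1.5.3",
    "scikit-learn": "1.2.2",
    "scipy": "1.12.0",
    "tqdm": "4.65.0",
    "peft": "0.12.0",
    "transformers": "4.44.0",
    "deepspeed": "0.15.1",
    "sentencepiece": "0.2.0",
}


def find_mismatches(pinned: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Return {package: installed_version} for every package whose installed
    version differs from its pin; None means the package is not installed."""
    mismatches: Dict[str, Optional[str]] = {}
    for name, expected in pinned.items():
        try:
            installed: Optional[str] = version(name)
        except PackageNotFoundError:
            installed = None
        # Ignore local build labels such as "+cu121" when comparing.
        base = installed.split("+", 1)[0] if installed else None
        if base != expected:
            mismatches[name] = installed
    return mismatches


if __name__ == "__main__":
    if sys.version_info[:2] != (3, 11):
        print(f"Expected Python 3.11, found {sys.version_info.major}.{sys.version_info.minor}")
    for name, installed in find_mismatches(PINNED).items():
        print(f"{name}: expected {PINNED[name]}, found {installed or 'not installed'}")
```

An empty report means every pinned package is installed at the expected version.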

You can access the datasets from Hugging Face.

To get started with Propulsion, follow these steps:

- Import the necessary modules:

      import propulsion
      from transformers import RobertaForSequenceClassification

- Load a pre-trained model and apply PEFT:

      # Replace 'model_name' with a RoBERTa checkpoint, e.g. 'roberta-base'
      model = RobertaForSequenceClassification.from_pretrained('model_name')
      # Apply Propulsion's parameter-efficient fine-tuning to the model
      propulsion.PEFT(model)

- Now you're ready to fine-tune your model using Propulsion.
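Before training, it can help to check how much of the model will actually be updated. The helper below is a minimal sketch that relies only on standard PyTorch parameter behaviour (`model.parameters()`, each parameter's `requires_grad` flag, and `numel()`). It assumes, as the quick-start snippet suggests, that `propulsion.PEFT(model)` modifies `model` in place and leaves only its own parameters trainable. The name `count_parameters` is hypothetical.

```python
from typing import Iterable, Protocol, Tuple


class _Param(Protocol):
    # Structural type matching the torch.nn.Parameter attributes used here.
    requires_grad: bool

    def numel(self) -> int: ...


def count_parameters(params: Iterable[_Param]) -> Tuple[int, int, float]:
    """Return (trainable, total, trainable percentage) for an iterable of
    parameters such as model.parameters()."""
    trainable = total = 0
    for p in params:
        n = p.numel()  # number of scalar elements in this parameter tensor
        total += n
        if p.requires_grad:
            trainable += n
    percent = 100.0 * trainable / total if total else 0.0
    return trainable, total, percent
```

Typical usage after applying PEFT: `trainable, total, pct = count_parameters(model.parameters())`, then print the three values. A trainable share close to the full model size would suggest the base weights were not frozen.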