Jiawei Liu, Wei Zhai, Cao Yang, Yujun Shen, Zheng-Jun Zha ✉️

- Clone the repository:

git clone https://github.com/LuFan31/CompreCap.git
cd CompreCap

- Build environment:

conda create -n CompreCap python=3.9
conda activate CompreCap
pip install -r requirements.txt
pip install spacy
python -m spacy download en_core_web_lg

- Download the weights of the parser and the LLM evaluator:

Download the weights of Sentence-Transformers and Llama3 from Hugging Face.

Our CompreCap benchmark is available at 🤗CompreCap. The benchmark is organized as follows:

├── CompreCap_dataset
│   ├── images
│   │   ├── 000000000802.jpg
│   │   └── ...
│   ├── QA_json
│   │   ├── finegri_desc_qa.jsonl
│   │   └── finegri_visible_qa_hulla.jsonl
│   └── anno.json
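Before running any evaluation, it can help to confirm that the downloaded benchmark matches the layout above. The following is a minimal sketch, not part of the repository: the helper name `missing_entries` and the constant `EXPECTED_PATHS` are hypothetical, and the paths are copied from the directory listing.

```python
from pathlib import Path

# Relative paths copied from the directory listing above.
EXPECTED_PATHS = (
    "images",
    "QA_json/finegri_desc_qa.jsonl",
    "QA_json/finegri_visible_qa_hulla.jsonl",
    "anno.json",
)


def missing_entries(data_root):
    """Return the expected relative paths that do not exist under data_root."""
    root = Path(data_root)
    return [rel for rel in EXPECTED_PATHS if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_entries("CompreCap_dataset")
    if missing:
        print("Missing from CompreCap_dataset:", ", ".join(missing))
    else:
        print("CompreCap_dataset layout looks complete.")
```

An empty list means every file and folder named in the listing was found; it does not check the contents of those files.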

We use 10 popular LVLMs to generate captions for the images in the images folder.

You can run the following command to assess the detailed captions, using Llama3 as the evaluator. The results are saved to the path given by --output-dir.

python evaluate.py \
    --data_root CompreCap_dataset/ \
    --anno CompreCap_dataset/anno.json \
    --soft_coverage \
    --llm PATH/to/Meta-Llama-3-8B-Instruct \
    --bert PATH/to/sentence-transformers--all-MiniLM-L6-v2 \
    --longcap_file longcap_from_MLLM/LLava-Next-34B.json \
    --output-dir output/LLava-Next-34B_result.json
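To evaluate captions from several models, the command above can be generated once per caption file. The sketch below only builds and prints the commands; it uses the flags shown above and nothing else. The helper name `build_eval_command` is hypothetical, and it assumes that `longcap_from_MLLM` holds one JSON file per model and that results follow the `<model>_result.json` naming used in the example.

```python
import shlex
from pathlib import Path


def build_eval_command(longcap_file, llm_path, bert_path,
                       data_root="CompreCap_dataset/", output_root="output"):
    """Build the evaluate.py argument list for one caption file."""
    longcap_file = Path(longcap_file)
    # Assumed naming scheme: output/<caption file stem>_result.json
    output_file = Path(output_root) / f"{longcap_file.stem}_result.json"
    return [
        "python", "evaluate.py",
        "--data_root", data_root,
        "--anno", (Path(data_root) / "anno.json").as_posix(),
        "--soft_coverage",
        "--llm", llm_path,
        "--bert", bert_path,
        "--longcap_file", longcap_file.as_posix(),
        "--output-dir", output_file.as_posix(),
    ]


if __name__ == "__main__":
    # Print one command per caption file; nothing is executed here.
    for caption_file in sorted(Path("longcap_from_MLLM").glob("*.json")):
        cmd = build_eval_command(
            caption_file,
            llm_path="PATH/to/Meta-Llama-3-8B-Instruct",
            bert_path="PATH/to/sentence-transformers--all-MiniLM-L6-v2",
        )
        print(shlex.join(cmd))
```

Printing the commands first makes it easy to inspect them before passing each list to `subprocess.run`.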

The captions to be evaluated are read from the file given by --longcap_file, which stores them in the following format:

{
    "image_name1": "caption1",
    "image_name2": "caption2",
    ...
}
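A caption file in this format can be produced with the standard `json` module. This is a minimal sketch: the helper name `save_captions`, the output filename, and the caption text are hypothetical, and whether the keys should include the `.jpg` extension is not stated here, so the key used below is an assumption.

```python
import json


def save_captions(captions, path):
    """Write {image_name: caption} pairs as a single JSON object."""
    for name, caption in captions.items():
        if not isinstance(name, str) or not isinstance(caption, str):
            raise TypeError("image names and captions must both be strings")
    with open(path, "w", encoding="utf-8") as f:
        json.dump(captions, f, ensure_ascii=False, indent=2)


if __name__ == "__main__":
    # Hypothetical key and caption, for illustration only.
    save_captions(
        {"000000000802.jpg": "A detailed caption generated by your model."},
        "my_model_captions.json",
    )
```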

The fine-grained object VQA consists of CompreQA-P and CompreQA-Cap. The question-answer pairs are stored in .jsonl files under CompreCap_dataset/QA_json. You can evaluate LVLMs' performance on fine-grained object VQA with the following code example:

import json
import os
import re

from PIL import Image

# `multimodal_model`, `tokenizer`, and `process_images` are placeholders for the
# LVLM under evaluation and its image preprocessing; load them before running.
image_dir = 'CompreCap_dataset/images'
# Matches a response that is exactly one option letter. 'D' is included because
# the code below treats it as the "not visible" option.
option_letter_regex = re.compile(r"^(A|B|C|D)$", re.IGNORECASE)

with open('result.jsonl', 'w') as outfile:
    # For CompreQA-P, point this at the visible-QA file in QA_json instead.
    with open('CompreCap_dataset/QA_json/finegri_desc_qa.jsonl', 'r') as infile:
        correct, num_total = 0, 0
        for line in infile:
            qa_line = json.loads(line)
            image_name = qa_line['image_name']
            object_category = qa_line['category']
            question = qa_line['question']
            answer = qa_line['answer']
            # Keep the original question intact so option texts can be recovered later.
            prompt = question + " Just answer with the option's letter"

            image_path = os.path.join(image_dir, image_name)
            image = Image.open(image_path).convert('RGB')
            image_tensor = process_images(image).cuda()
            response = multimodal_model.generate(tokenizer, image_tensor, prompt).strip()

            # Normalize the response to a single upper-case option letter.
            if option_letter_regex.match(response):
                response = response.upper()
            else:
                # Handle answers such as "A. a red car" by keeping the part before the first period.
                response = response.split('.')[0].strip()
                if option_letter_regex.match(response):
                    response = response.upper()
                else:
                    print('Could not determine A, B, C, or D.')
                    print(response)

            num_total += 1
            if response == answer:
                correct += 1
                correct_flag = 'Yes!'
            else:
                correct_flag = 'No!'

            # Recover the text of the chosen option from the question.
            if response != 'D':
                prediction = question.split(f'{response}. ')[-1].split('.')[0]
            else:
                prediction = f"The '{object_category}' is not visible!"
            answer_desc = question.split(f'{answer}. ')[-1].split('.')[0]

            qa_line = {'image_name': image_name, 'category': object_category, 'prediction': prediction, 'answer': answer_desc, 'correct': correct_flag}
            outfile.write(json.dumps(qa_line) + '\n')
            outfile.flush()

if num_total:
    print(f'correct rate: {correct / num_total}')
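The loop above reports a single overall accuracy, but each line written to `result.jsonl` also records the object category and a `'Yes!'`/`'No!'` flag. The following sketch, which is not part of the repository, groups those records by category; the function name `accuracy_by_category` is hypothetical, and it relies only on the `category` and `correct` fields written by the loop.

```python
import json
from collections import defaultdict


def accuracy_by_category(result_path):
    """Return {category: (num_correct, num_total)} from a result.jsonl file."""
    counts = defaultdict(lambda: [0, 0])  # category -> [num_correct, num_total]
    with open(result_path, "r", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line:
                continue  # skip blank lines
            record = json.loads(line)
            counts[record["category"]][1] += 1
            if record["correct"] == "Yes!":
                counts[record["category"]][0] += 1
    return {cat: (c, n) for cat, (c, n) in counts.items()}


def print_report(result_path):
    """Print one accuracy line per category, sorted by category name."""
    for cat, (c, n) in sorted(accuracy_by_category(result_path).items()):
        print(f"{cat}: {c}/{n} = {c / n:.3f}")
```

Calling `print_report('result.jsonl')` after the evaluation finishes shows which object categories the model handles worst.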

Don't forget to cite this source if it proves useful in your research!

@article{CompreCap,

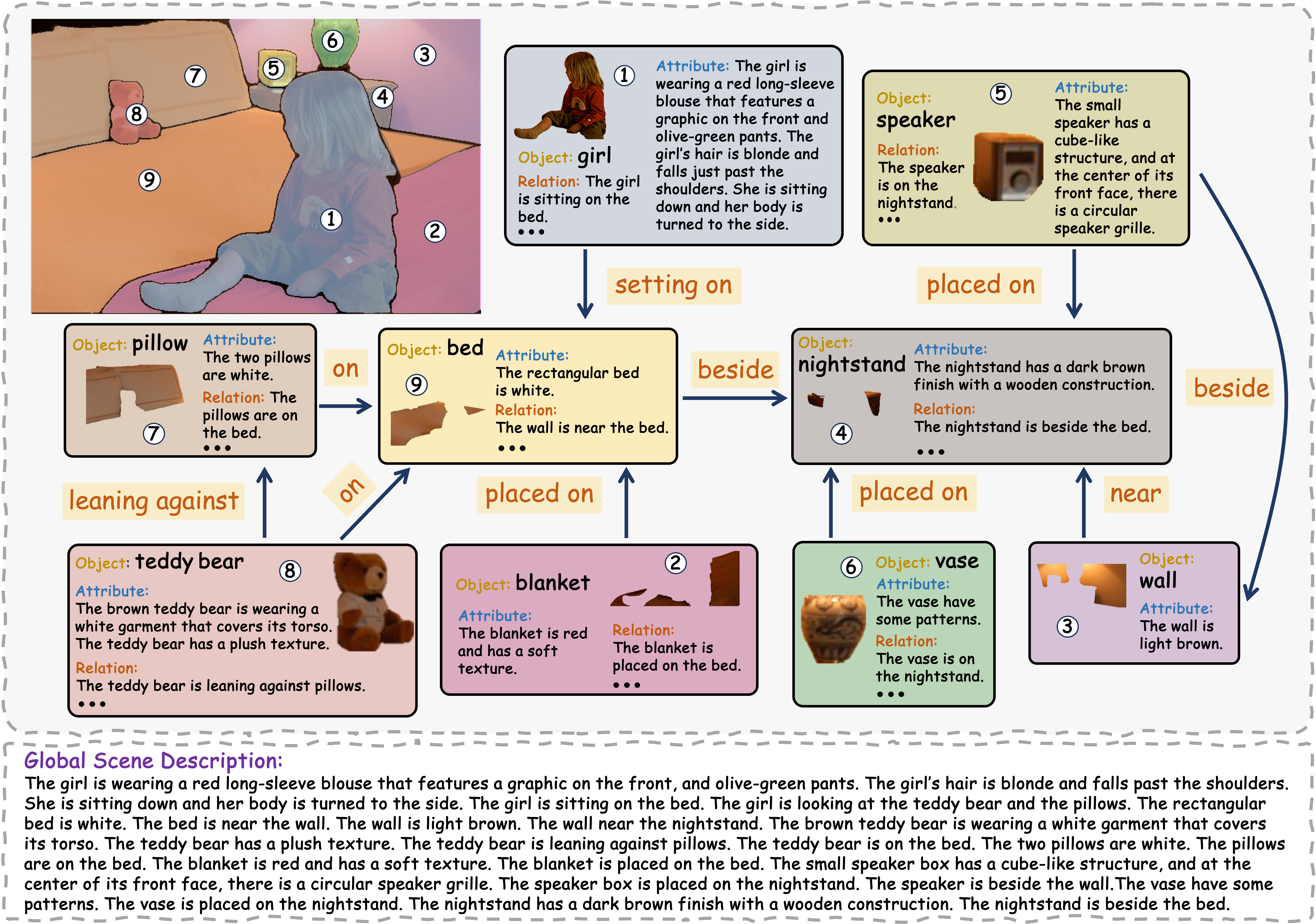

title={Benchmarking Large Vision-Language Models via Directed Scene Graph for Comprehensive Image Captioning},

author={Fan Lu, Wei Wu, Kecheng Zheng, Shuailei Ma, Biao Gong, Jiawei Liu, Wei Zhai, Yang Cao, Yujun Shen, Zheng-Jun Zha},

booktitle={arXiv},

year={2024}

}The data source of our CompreCap is based on the panoptic segmentation dataset in MSCOCO. Thanks for its remarkable contribution!