Video future frame prediction based on Transformers. Accepted at ICPR 2022: http://arxiv.org/abs/2203.15836

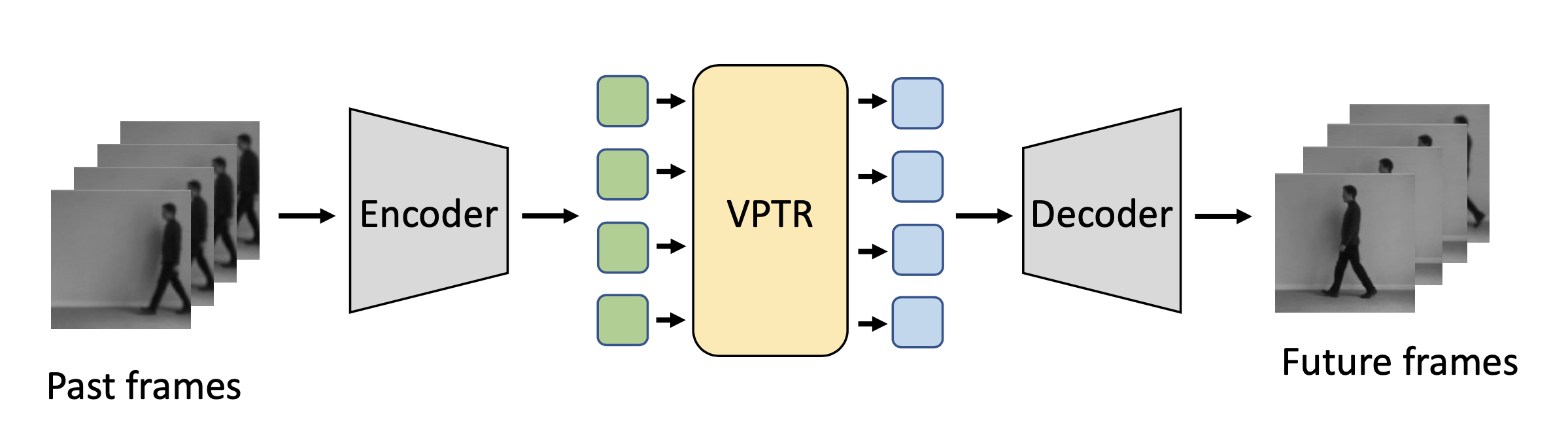

The overall framework for video prediction.

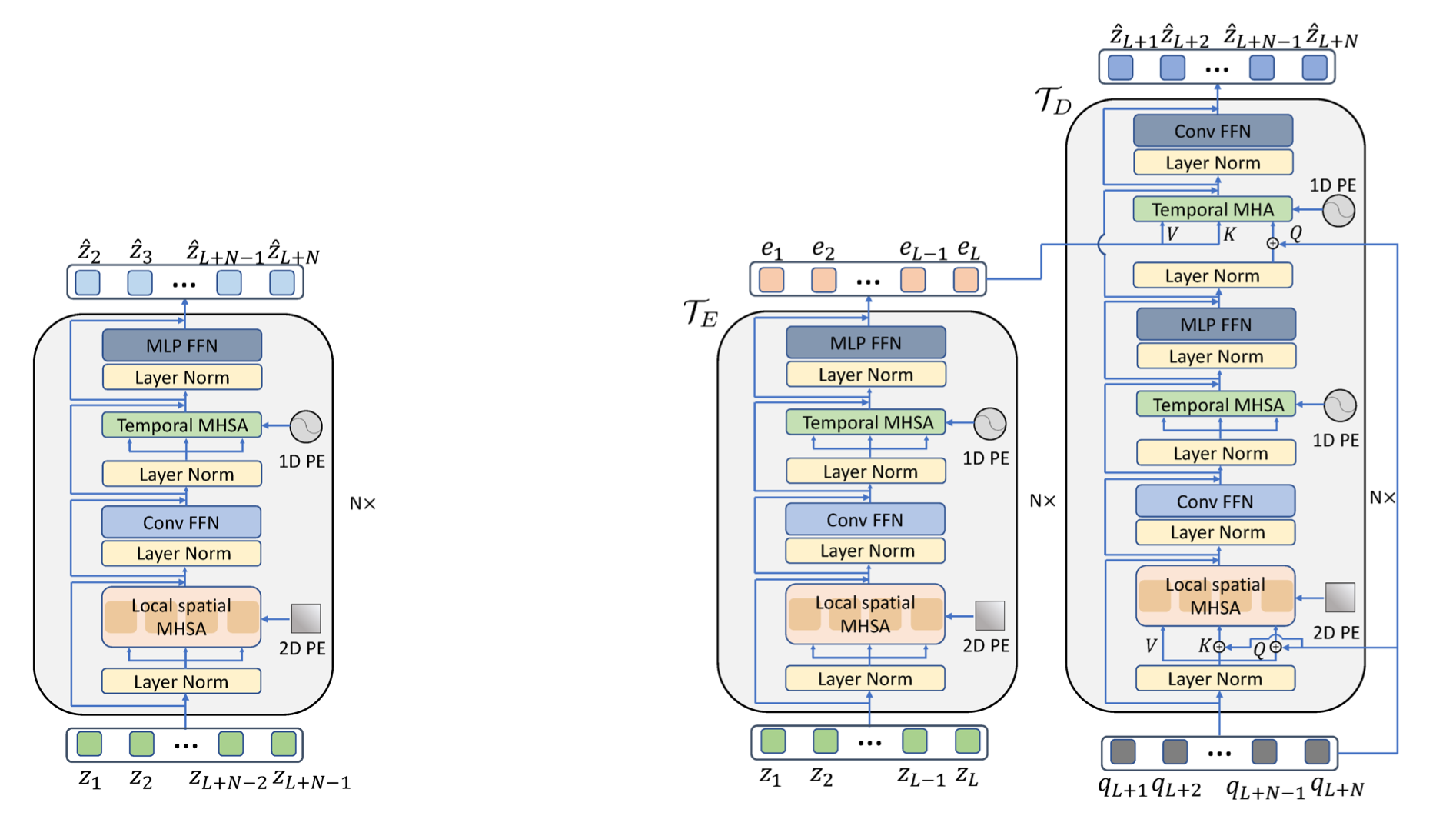

Fully autoregressive (left) and non-autoregressive VPTR (right).

Download the checkpoints from here: https://polymtlca0-my.sharepoint.com/:f:/g/personal/xi_ye_polymtl_ca/EuxjSddJ7wNIsiSTOfB-u7AB7qQhP5H0iX2a5mbaowiSZw?e=IEj1bd

See Test_AutoEncoder.ipynb and Test_VPTR.ipynb for the detailed test functions.

Train the autoencoder first and save its checkpoint, then load that checkpoint for stage 2.

- train_FAR.py: fully autoregressive model
- train_FAR_mp.py: multi-GPU training of the fully autoregressive model (single machine)
- train_NAR.py: non-autoregressive model
- train_NAR_mp.py: multi-GPU training of the non-autoregressive model (single machine)
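The two model variants differ in how future frames are produced. The sketch below is a framework-free illustration of that general distinction, not the repository's code: `rollout_autoregressive`, `rollout_non_autoregressive`, and the toy predictors are hypothetical names, and a frame is stood in for by a single float.

```python
from typing import Callable, List, Sequence

Frame = float  # stand-in for a frame; real frames would be image tensors


def rollout_autoregressive(
    past: Sequence[Frame],
    step: Callable[[Sequence[Frame]], Frame],
    n_future: int,
) -> List[Frame]:
    """Predict one frame at a time, feeding each prediction back as input."""
    history = list(past)
    for _ in range(n_future):
        history.append(step(history))
    return history[len(past):]


def rollout_non_autoregressive(
    past: Sequence[Frame],
    predict_all: Callable[[Sequence[Frame], int], List[Frame]],
    n_future: int,
) -> List[Frame]:
    """Predict all future frames in one call, with no feedback of predictions."""
    return predict_all(past, n_future)


# Hypothetical toy predictors: both extrapolate the last observed change.
def toy_step(history: Sequence[Frame]) -> Frame:
    return 2 * history[-1] - history[-2]


def toy_predict_all(past: Sequence[Frame], n: int) -> List[Frame]:
    delta = past[-1] - past[-2]
    return [past[-1] + delta * (k + 1) for k in range(n)]
```

In the autoregressive loop the predictor is called `n_future` times and each call sees earlier predictions, while the non-autoregressive variant calls the predictor once for the whole horizon.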

Dataset folder structure:

- /MovingMNIST
  - moving-mnist-train.npz
  - moving-mnist-test.npz
  - moving-mnist-val.npz
- /KTH
  - boxing/
    - person01_boxing_d1/
      - image_0001.png
      - image_0002.png
      - ...
    - person01_boxing_d2/
      - image_0001.png
      - image_0002.png
      - ...
  - handclapping/
    - ...
  - handwaving/
    - ...
  - jogging_no_empty/
    - ...
  - running_no_empty/
    - ...
  - walking_no_empty/
    - ...
- /BAIR
  - test/
    - example_0/
      - 0000.png
      - 0001.png
      - ...
    - example_1/
      - 0000.png
      - 0001.png
      - ...
    - example_...
  - train/
    - example_0/
      - 0000.png
      - 0001.png
      - ...
    - example_...
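For KTH and BAIR, each clip is a folder of zero-padded PNG frames, so a layout can be checked by enumerating clip folders and sorting frames by file name. The following is a minimal sketch of such a check, not the repository's data loader; `list_clips` is a hypothetical helper, and the dataset root paths in the usage note are assumptions.

```python
from pathlib import Path
from typing import Dict, List


def list_clips(split_dir: Path, pattern: str = "*.png") -> Dict[str, List[Path]]:
    """Map each clip folder directly under split_dir to its sorted frame paths."""
    clips: Dict[str, List[Path]] = {}
    for clip_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
        # Zero-padded names (0000.png, image_0001.png) sort in temporal order.
        frames = sorted(clip_dir.glob(pattern))
        if frames:  # skip folders that contain no frames
            clips[clip_dir.name] = frames
    return clips
```

For example, `list_clips(Path("KTH/boxing"))` would return one entry per `person..._boxing_d...` folder, and `list_clips(Path("BAIR/train"))` one entry per `example_...` folder, assuming the datasets sit in the current working directory.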

Please cite the paper if you find our work helpful.

    @article{ye_2022,
      title = {VPTR: Efficient Transformers for Video Prediction},
      author = {Xi Ye and Guillaume-Alexandre Bilodeau},
      journal = {arXiv preprint arXiv:2203.15836},
      year = {2022}
    }

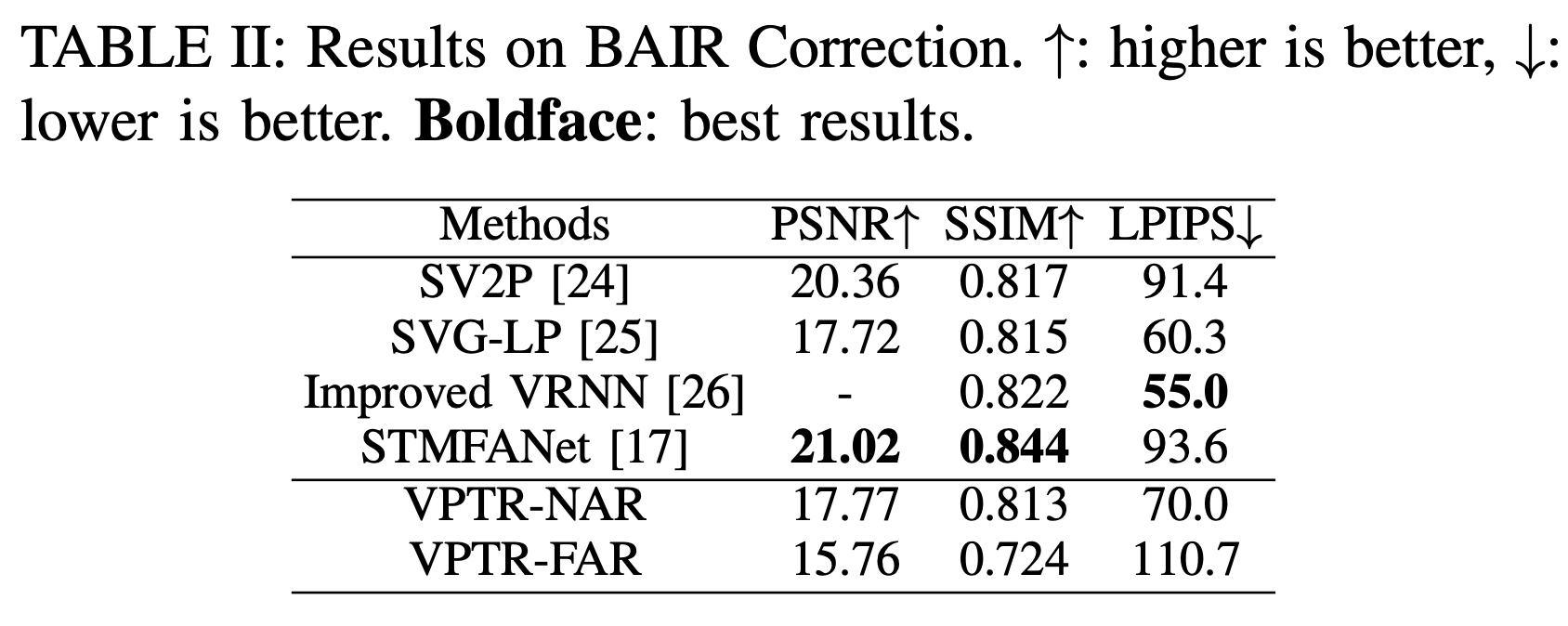

Recently, we found a mistake in our ICPR paper. For the BAIR experiments, previous papers predict 28 future frames, not 10. The results in "TABLE II: Results on BAIR" of our paper are for 10 predicted frames instead of 28. The results for 28 predicted frames are updated here; see the corrected table.

We apologize for the mistake; the correction does not affect our conclusions.