3D Interacting Hand Pose Estimation by Hand De-occlusion and Removal

Hao Meng1,3*, Sheng Jin2,3*, Wentao Liu3,4, Chen Qian3, Mengxiang Lin1, Wanli Ouyang4,5, Ping Luo2

ECCV 2022

This repo contains the code and the AIH (Amodal Interacting Hand) dataset for our HDR work.

We are currently cleaning the code, so you may encounter runtime errors when running this repo. The author is busy with other projects and may not release the full code soon. In the meantime, the demo and the dataset show how the pipeline works.

`demo.py` has been tested on the following platform:

Python 3.7, PyTorch 1.8.0 with CUDA 11.6 and cuDNN 8.4.0, mmcv-full 1.3.16, mmsegmentation 0.18.0, Windows 10 Pro
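To compare your own environment against the tested versions listed above, a small sketch like the following may help. It is not part of the HDR repository: the `TESTED` table is copied from this README, the helper name `installed_versions` is hypothetical, and it assumes the usual import names `torch`, `mmcv` (installed by `mmcv-full`) and `mmseg` (installed by `mmsegmentation`).

```python
"""Print installed versions next to the versions demo.py was tested with.

Sketch only: TESTED is copied from this README; nothing here is part of
the HDR codebase.
"""
import importlib
import platform

# Versions listed in this README as the tested platform.
TESTED = {
    "python": "3.7",
    "torch": "1.8.0",
    "mmcv": "1.3.16",   # provided by the mmcv-full package
    "mmseg": "0.18.0",  # provided by the mmsegmentation package
}


def installed_versions():
    """Return {name: version string or None} for Python and each module in TESTED."""
    found = {"python": platform.python_version()}
    for module_name in ("torch", "mmcv", "mmseg"):
        try:
            module = importlib.import_module(module_name)
            found[module_name] = getattr(module, "__version__", "unknown")
        except ImportError:
            found[module_name] = None  # not installed in this environment
    return found


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:8s} installed={version!s:12s} tested={TESTED[name]}")
```

A mismatch is not necessarily an error, but matching these versions is the closest you can get to the configuration the authors report.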

We recommend managing the dependencies with conda. First install CUDA and make sure NVCC works. Then clone this repository, which provides the `environment.yml` file, and create a conda environment from it:

```bash
git clone https://github.com/MengHao666/HDR.git
cd HDR
conda env create -n hdr -f environment.yml
conda activate hdr
```
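One way to confirm the NVCC check mentioned above is sketched below. This is an illustration only, assuming `nvcc` is reachable through your `PATH`; the function name `nvcc_version_text` is hypothetical and not part of HDR.

```python
"""Check that nvcc is on PATH and report its version text.

Minimal sketch: it only shows what `nvcc --version` prints.
"""
import shutil
import subprocess


def nvcc_version_text():
    """Return the output of `nvcc --version`, or None if nvcc is not on PATH."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run(
        [nvcc, "--version"],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        universal_newlines=True,  # text mode; also works on Python 3.7
        check=False,
    )
    return result.stdout


if __name__ == "__main__":
    text = nvcc_version_text()
    if text is None:
        print("nvcc not found on PATH: install CUDA and add its bin directory to PATH")
    else:
        print(text.strip())
```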

Download the AIH dataset from OneDrive, then extract all files.

Now your AIH_dataset folder structure should look like this:

```
AIH_dataset/
├── AIH_render/
│   ├── human_annot/
│   │   └── train/
│   └── machine_annot/
│       ├── train/
│       └── val/
└── AIH_syn/
    ├── filtered_list/
    ├── human_annot/
    │   ├── train/
    │   └── test/
    ├── machine_annot/
    │   ├── train/
    │   ├── val/
    │   └── test/
    └── syn_cfgs/
```
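After extracting the dataset, a quick structural check can catch an incomplete download. The sketch below compares a local folder with the layout listed above; the directory list is transcribed from that listing (reading `filtered_list/` and `syn_cfgs/` as children of `AIH_syn/`), and `missing_aih_dirs` is a hypothetical helper, not part of the HDR code.

```python
"""Compare a local AIH_dataset folder with the layout listed in this README.

Sketch only: EXPECTED_DIRS is transcribed from the README listing and the
helper name is hypothetical.
"""
from pathlib import Path

# Sub-directories expected under AIH_dataset/, as relative paths.
EXPECTED_DIRS = [
    "AIH_render/human_annot/train",
    "AIH_render/machine_annot/train",
    "AIH_render/machine_annot/val",
    "AIH_syn/filtered_list",
    "AIH_syn/human_annot/train",
    "AIH_syn/human_annot/test",
    "AIH_syn/machine_annot/train",
    "AIH_syn/machine_annot/val",
    "AIH_syn/machine_annot/test",
    "AIH_syn/syn_cfgs",
]


def missing_aih_dirs(aih_root):
    """Return the expected sub-directories that do not exist under aih_root."""
    root = Path(aih_root)
    return [rel for rel in EXPECTED_DIRS if not (root / rel).is_dir()]


if __name__ == "__main__":
    import sys

    root = sys.argv[1] if len(sys.argv) > 1 else "AIH_dataset"
    missing = missing_aih_dirs(root)
    print("layout OK" if not missing else "missing:\n  " + "\n  ".join(missing))
```

Run it with the dataset root as its only argument; an empty result means every listed folder is present.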

You can run `python explore_AIH.py` to explore our AIH dataset. Please first modify `AIH_root` in the script to point to your local dataset folder.

Download our pretrained models from Google Drive or Baidu Drive (extraction code: jrvm) into the HDR directory, then extract all files there.

Now your demo_work_dirs folder structure should look like this:

```
HDR/
├── ...
└── demo_work_dirs/
    ├── All_train_SingleRightHand/
    │   └── checkpoints/
    │       └── ckpt_iter_261000.pth.tar
    ├── Interhand_seg/
    │   └── iter_237500.pth
    └── TDR_fintune/
        └── checkpoints/
            ├── ckpt_iter_138000.pth.tar
            └── D_iter_138000.pth.tar
```
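Before running the demo, you may want to confirm that every pretrained checkpoint landed where the listing above expects it. The sketch below is illustrative only: the paths are transcribed from that listing and `missing_checkpoints` is a hypothetical helper, not part of the HDR code.

```python
"""Check that the pretrained checkpoints listed in this README are in place.

Sketch only: paths are transcribed from the README; the helper name is
hypothetical.
"""
from pathlib import Path

# Checkpoint files expected under HDR/demo_work_dirs/.
EXPECTED_CKPTS = [
    "All_train_SingleRightHand/checkpoints/ckpt_iter_261000.pth.tar",
    "Interhand_seg/iter_237500.pth",
    "TDR_fintune/checkpoints/ckpt_iter_138000.pth.tar",
    "TDR_fintune/checkpoints/D_iter_138000.pth.tar",
]


def missing_checkpoints(hdr_root):
    """Return expected checkpoint paths that are not regular files under hdr_root."""
    work_dirs = Path(hdr_root) / "demo_work_dirs"
    return [rel for rel in EXPECTED_CKPTS if not (work_dirs / rel).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints(".")  # assumes it is run from the HDR directory
    print("all checkpoints found" if not missing else "missing: " + ", ".join(missing))
```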

You can run `python demo/demo.py` to see how our pipeline works. Note that you may need to change the full path of HDR on line 5 of `demo/demo.py`, since we tested it on Windows 10 Pro.
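Rather than hard-coding the absolute HDR path, a script can derive it from its own location. The sketch below assumes that line 5 of `demo/demo.py` sets this path (for example by extending `sys.path`) and that the script lives in `HDR/demo/`; both helper names are hypothetical and this is not the repository's code.

```python
"""Derive the HDR root from a script's own location instead of a hard-coded path.

Assumption: line 5 of demo/demo.py hard-codes the HDR directory; this is a
hypothetical alternative, not the repository's code.
"""
import sys
from pathlib import Path


def hdr_root_from(script_path):
    """Return the HDR root for a script stored in HDR/demo/."""
    return Path(script_path).resolve().parent.parent


def add_hdr_root_to_path(script_path):
    """Prepend the HDR root to sys.path once so repo modules import on any OS."""
    root = str(hdr_root_from(script_path))
    if root not in sys.path:
        sys.path.insert(0, root)
    return root


# Inside demo/demo.py this would be called as: add_hdr_root_to_path(__file__)
```

Resolving from `__file__` keeps the path valid on both Windows and Linux and independent of the current working directory.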

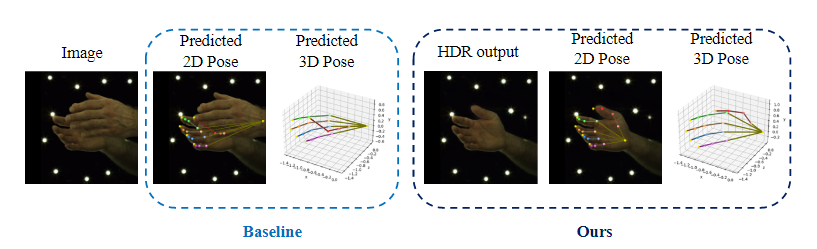

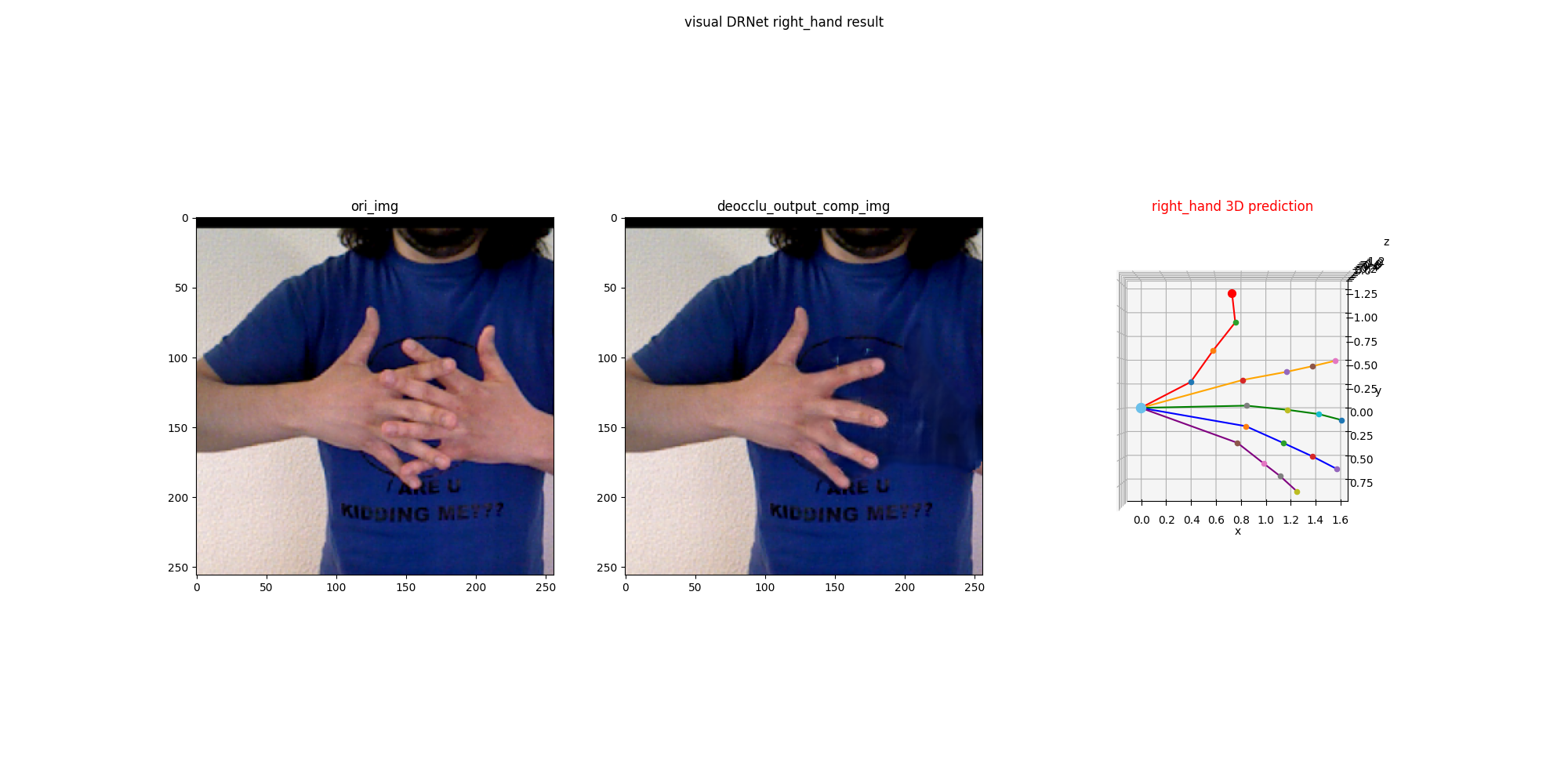

The results of HDR+SHPE look like the following:

If you find our work useful, please cite:

```bibtex
@inproceedings{meng2022hdr,
  title={3D Interacting Hand Pose Estimation by Hand De-occlusion and Removal},
  author={Hao Meng and Sheng Jin and Wentao Liu and Chen Qian and Mengxiang Lin and Wanli Ouyang and Ping Luo},
  booktitle={European Conference on Computer Vision (ECCV)},
  year={2022},
  month={October},
}
```

The code is built upon the following works:

HDR (including the AIH dataset) is licensed under CC BY-NC 4.0, as found in the LICENSE file.