An unofficial CodeBLEU implementation that supports Linux and macOS, available on PyPI and the Hugging Face Hub.

Based on the original CodeXGLUE/CodeBLEU code: refactored, built for macOS, tested, and with multiple hacks fixed to make it more usable.

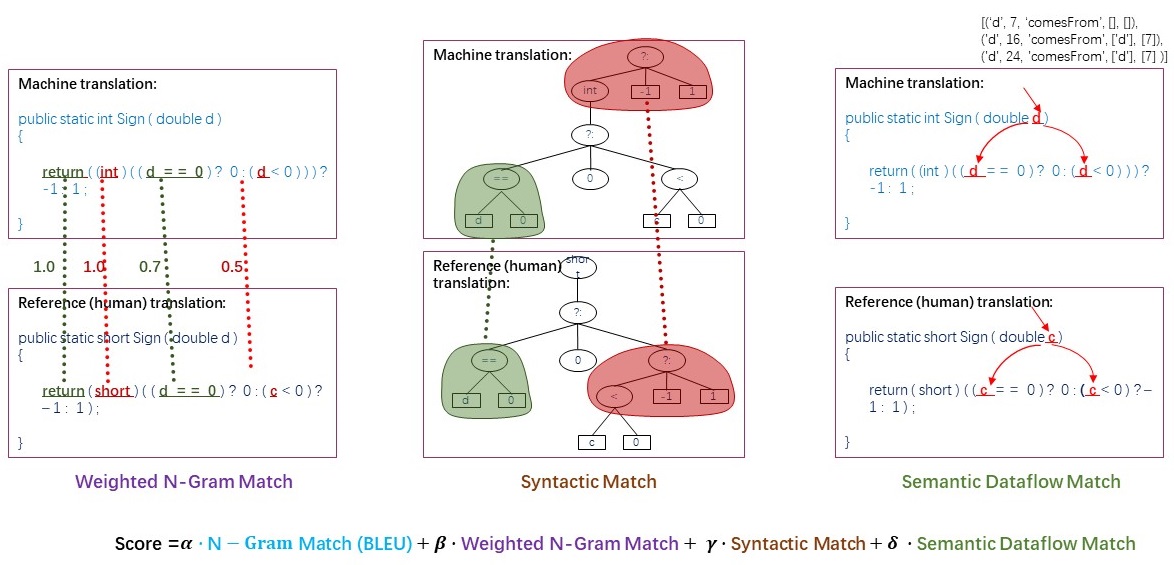

An ideal evaluation metric should consider the grammatical correctness and the logic correctness. We propose weighted n-gram match and syntactic AST match to measure grammatical correctness, and introduce semantic data-flow match to calculate logic correctness.

(from CodeXGLUE repo)

In a nutshell, CodeBLEU is a weighted combination of n-gram match (BLEU), weighted n-gram match (BLEU-weighted), AST match and data-flow match scores.
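As a minimal sketch of that weighted combination, the snippet below recombines the four component scores with equal weights. The helper `combine_codebleu` is hypothetical and not part of the package; the component values are the rounded ones printed in this README's usage example, so the result only approximates the reported `codebleu` value.

```python
def combine_codebleu(scores, weights=(0.25, 0.25, 0.25, 0.25)):
    """Weighted sum of the four component scores.

    Hypothetical helper for illustration only; it is not part of the
    codebleu package.
    """
    components = (
        scores["ngram_match_score"],
        scores["weighted_ngram_match_score"],
        scores["syntax_match_score"],
        scores["dataflow_match_score"],
    )
    return sum(w * s for w, s in zip(weights, components))


# Rounded component scores copied from the usage example in this README.
example_scores = {
    "ngram_match_score": 0.1041,
    "weighted_ngram_match_score": 0.1109,
    "syntax_match_score": 1.0,
    "dataflow_match_score": 1.0,
}

combined = combine_codebleu(example_scores)
print(combined)  # roughly 0.55375, in line with the reported 'codebleu' of 0.5537
```

Changing the `weights` tuple shifts the emphasis between surface overlap (the two n-gram scores) and structural agreement (AST and data-flow match).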

The metric has shown higher correlation with human evaluation than BLEU and accuracy metrics.

    from codebleu import calc_codebleu

    prediction = "def add ( a , b ) :\n return a + b"
    reference = "def sum ( first , second ) :\n return second + first"

    result = calc_codebleu([reference], [prediction], lang="python", weights=(0.25, 0.25, 0.25, 0.25), tokenizer=None)
    print(result)
    # {
    #   'codebleu': 0.5537,
    #   'ngram_match_score': 0.1041,
    #   'weighted_ngram_match_score': 0.1109,
    #   'syntax_match_score': 1.0,
    #   'dataflow_match_score': 1.0
    # }

where `calc_codebleu` takes the following arguments:

- `references` (`list[str]` or `list[list[str]]`): reference code
- `predictions` (`list[str]`): predicted code
- `lang` (`str`): code language; see `codebleu.AVAILABLE_LANGS` for available languages (python, c_sharp, java at the moment)
- `weights` (`tuple[float, float, float, float]`): weights of `ngram_match`, `weighted_ngram_match`, `syntax_match`, and `dataflow_match`, respectively; defaults to `(0.25, 0.25, 0.25, 0.25)`
- `tokenizer` (`callable`): splits a code string into tokens; defaults to `s.split()`

and outputs a `dict[str, float]` with the following fields:

- `codebleu`: the final `CodeBLEU` score
- `ngram_match_score`: `ngram_match` score (BLEU)
- `weighted_ngram_match_score`: `weighted_ngram_match` score (BLEU-weighted)
- `syntax_match_score`: `syntax_match` score (AST match)
- `dataflow_match_score`: `dataflow_match` score
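The `tokenizer` and nested `references` arguments can be sketched as follows. The `regex_tokenizer` helper and the second reference string are illustrative assumptions, not part of the package, and the reading that each inner list holds alternative references for one prediction is inferred from the `list[list[str]]` type. The metric call is guarded so the snippet still runs when `codebleu` is not installed.

```python
import re
from typing import List


def regex_tokenizer(code: str) -> List[str]:
    """Split code into identifiers, integer literals, and single punctuation marks.

    Hypothetical tokenizer for illustration; the package default is s.split().
    """
    return re.findall(r"[A-Za-z_]\w*|\d+|[^\w\s]", code)


prediction = "def add(a, b):\n    return a + b"

# One inner list per prediction; here it holds two alternative references
# (assumed interpretation of the list[list[str]] form).
references = [[
    "def sum(first, second):\n    return second + first",
    "def total(x, y):\n    return x + y",
]]

print(regex_tokenizer(prediction))

try:
    from codebleu import calc_codebleu

    result = calc_codebleu(
        references,
        [prediction],
        lang="python",
        weights=(0.25, 0.25, 0.25, 0.25),
        tokenizer=regex_tokenizer,
    )
    print(result)
except ImportError:
    print("codebleu is not installed; skipping the metric call")
```

With the default `s.split()` tokenizer, the input has to be pre-tokenized with spaces, as in the example above; a custom tokenizer lets you pass ordinary source text instead.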

Alternatively, you can use k4black/codebleu from HuggingFace Spaces:

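    import evaluate

    metric = evaluate.load("k4black/codebleu")

    # `reference` and `prediction` are the strings defined in the example above;
    # `compute` expects keyword arguments
    result = metric.compute(references=[reference], predictions=[prediction], lang="python", weights=(0.25, 0.25, 0.25, 0.25))

Feel free to check the HF Space for an online example: k4black/codebleu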

Requires Python 3.8+

The metric can be installed with pip and used as shown above:

    pip install codebleu

Alternatively, the metric is available as k4black/codebleu in `evaluate` (library installation required):

    import evaluate

    metric = evaluate.load("k4black/codebleu")

The official CodeBLEU paper can be cited as follows:

    @misc{ren2020codebleu,
      title={CodeBLEU: a Method for Automatic Evaluation of Code Synthesis},
      author={Shuo Ren and Daya Guo and Shuai Lu and Long Zhou and Shujie Liu and Duyu Tang and Neel Sundaresan and Ming Zhou and Ambrosio Blanco and Shuai Ma},
      year={2020},
      eprint={2009.10297},
      archivePrefix={arXiv},
      primaryClass={cs.SE}
    }