[TOC]

Advanced analytics is transforming every industry, and it is inherently data hungry. In health care, data privacy rules hinder the data sharing that collaboration requires. Synthetic data that retains the characteristics of the original data and remains compatible with downstream models can make data sharing easier and enable analytics on health care data.

Statistical methods have conventionally been used, but with limited success. Current de-identification techniques are not sufficient to mitigate re-identification risks. Emerging deep learning technologies such as GANs are a promising way to solve this problem.

How can you certify that the generated data is as similar and as useful as original data for the intended uses?

The solution proposed by the team is to generate synthetic data using Generative Adversarial Networks (GANs), with the help of publicly available implementations such as TGAN and CTGAN.

The team also wants to build modules that test the generated synthetic data against the original datasets in the following three areas:

- Statistical Similarity: Create standardized modules to check if the generated datasets are similar to the original dataset

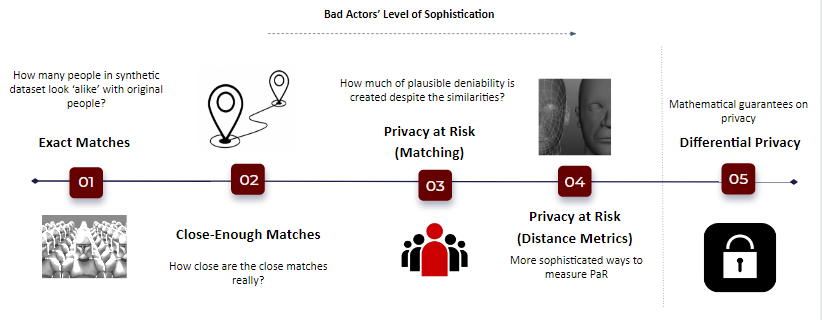

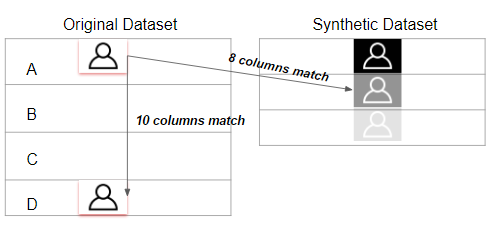

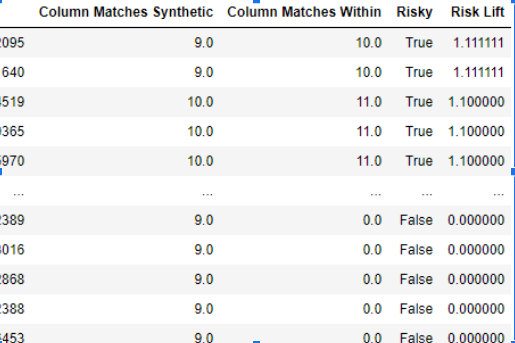

- Privacy Risk: Create standardized metrics to check if generated synthetic data protects privacy of all data points in the original dataset.

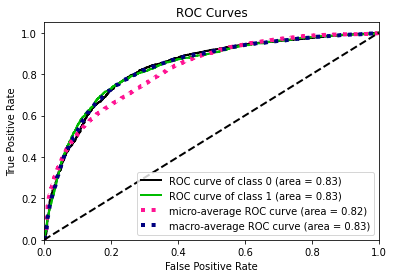

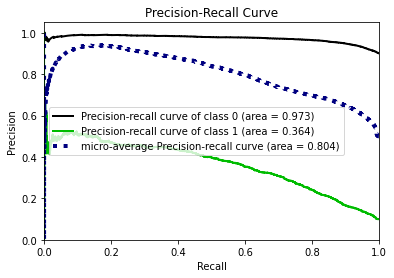

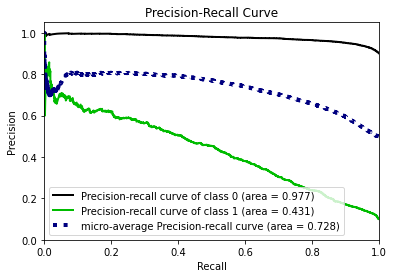

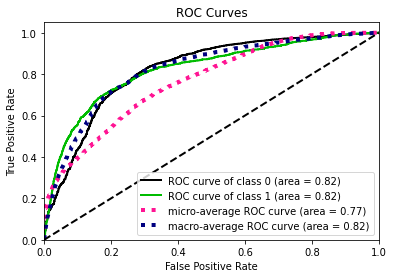

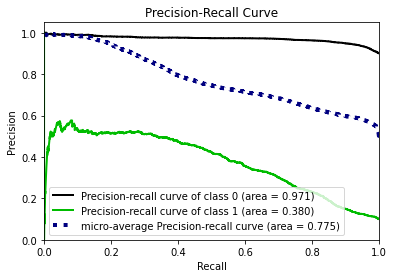

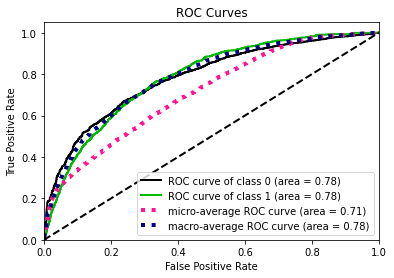

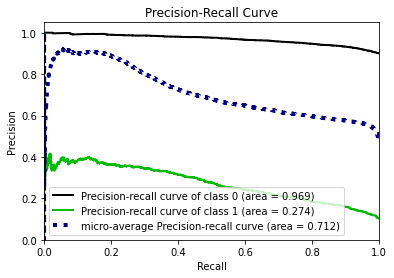

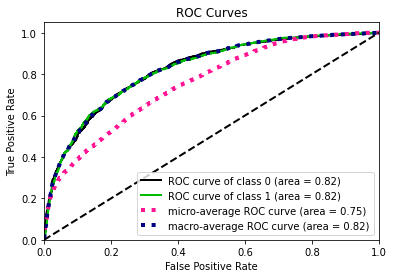

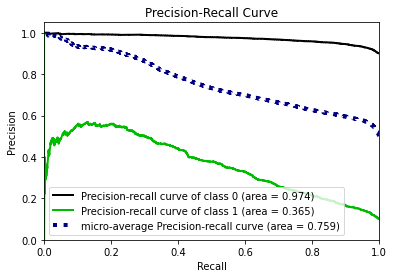

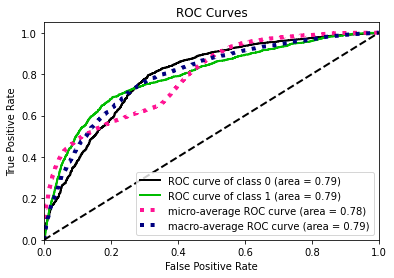

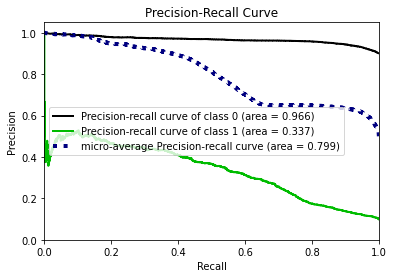

- Model Compatibility: Compare performance of Machine Learning techniques on original and synthetic datasets
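As a rough illustration of the model compatibility idea, the sketch below trains the same simple model once on real data and once on synthetic data, then scores both on held-out real data. The function names and the single-feature least-squares model are assumptions made for this example only; they are not the team's module.

```python
import statistics


def fit_line(xs, ys):
    """Ordinary least squares for one feature; returns (slope, intercept)."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def rmse(model, xs, ys):
    """Root mean squared error of a (slope, intercept) model."""
    slope, intercept = model
    errors = [(slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)]
    return (sum(errors) / len(errors)) ** 0.5


def compatibility_report(real_train, synthetic_train, real_test):
    """Each argument is an (xs, ys) pair. Both models are scored on real test data."""
    return {
        "trained_on_real": rmse(fit_line(*real_train), *real_test),
        "trained_on_synthetic": rmse(fit_line(*synthetic_train), *real_test),
    }


# Toy data: both training sets follow y = 2x + 1, so both errors are zero.
report = compatibility_report(
    real_train=([0, 1, 2, 3], [1, 3, 5, 7]),
    synthetic_train=([0, 2, 4], [1, 5, 9]),
    real_test=([4, 5], [9, 11]),
)
```

A small gap between the two scores suggests that the synthetic data can stand in for the original data for that model.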

- Statistical Similarity: The generated datasets were similar to each other when compared using PCA and Auto-Encoders.

- Privacy module: The Privacy at Risk (PaR) metric and modules help identify columns that risk exposing the privacy of data points from the original data. The datasets generated using TGAN and CTGAN had sufficiently high privacy scores and protected the privacy of the original data points.

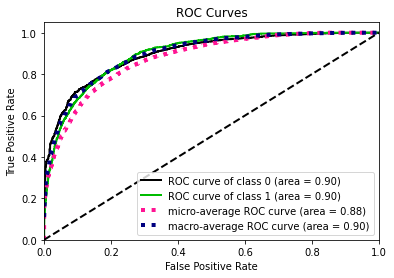

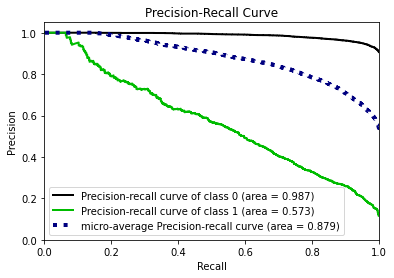

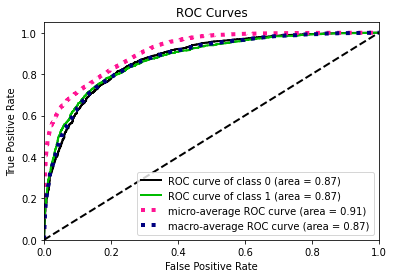

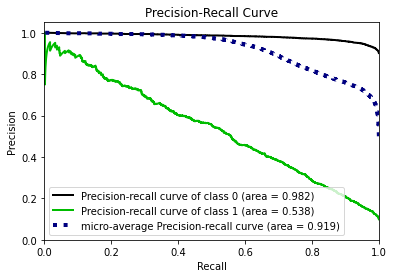

- Model Compatibility: The synthetic data has comparable model performance for both classification and regression problems.

Optum deals with sensitive healthcare data that contains Personally Identifiable Information (PII) of 100M+ people, and it is expanding every day. The healthcare industry is particularly sensitive because patient-identifiable information is strictly regulated by the Health Insurance Portability and Accountability Act (HIPAA) of 1996. Healthcare firms need to keep customer data secure while leveraging it to innovate in research and drive growth. However, current data sharing practices (to ensure de-identification) have resulted in wait times for data access as long as 3 months. This has proved to be a hindrance to fast innovation at Optum. The need of the hour is to reduce the time for data access and enable innovation while protecting patient information. The key question to answer here is:

"How can we safely and efficiently share healthcare data that is useful?"

This question involves an inherent trade-off between safety and efficiency. With the advent of big data, efficiency in the data sharing process is of paramount importance. Availability and accessibility of data enable rapid prototyping and pave the way for quick innovation in the healthcare industry. Efficient data sharing also unlocks the full potential of analytics and data science through use cases such as cancer diagnosis, predicting response to drug therapy, vaccine development, and drug discovery through bioinformatics. Apart from medical innovation, efficient data sharing helps to address shortcomings in the healthcare system by improving salesforce effectiveness, supply chain management, and patient engagement.

While efficient data sharing is crucial, the safety of patients' data cannot be ignored. Existing regulations like HIPAA and recent privacy laws like the California Consumer Privacy Act focus on maintaining the privacy of sensitive information. Hackers and criminals are organizing increasingly advanced attacks aimed at accessing personal information. According to IBM's report on the cost of data breaches, the cost per record is ~$150, but the goodwill and trust that companies lose cannot be quantified. The balance between data sharing and privacy is therefore tricky.

Existing de-identification approaches fall into two main groups: 1) anonymization techniques and 2) differential privacy. Almost every firm relies on these techniques to deal with sensitive information in PII data. They have proven successful in the past and are therefore low-hanging fruit for any organization.

- Anonymization techniques: These techniques try to remove the columns that contain sensitive information. Methods include deleting columns, masking elements, quasi-identifiers, k-anonymity, l-diversity, and t-closeness.

- Differential privacy: This is a perturbation technique that adds noise to columns, which introduces randomness into the data and thus maintains privacy. It is a mechanism that helps maximize the aggregate utility of databases while ensuring high levels of privacy for the participants, by striking a balance between utility and privacy.
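To make the "adds noise" idea concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. The function names and the `epsilon` parameter are assumptions for illustration; a production system would rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponential draws is Laplace-distributed
    # with the given scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Noisy count of the values that satisfy predicate."""
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    # A counting query has sensitivity 1, so the Laplace scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


ages = [34, 71, 68, 45, 80]
noisy = private_count(ages, lambda age: age >= 65, epsilon=1.0, rng=random.Random(0))
```

A smaller `epsilon` means more noise and stronger privacy, at the cost of a less accurate answer.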

However, these techniques are not cutting edge when it comes to maintaining privacy while sharing data. Rocher et al. have shown that 99.98 percent of Americans (in a sample the size of the population of Massachusetts) would be correctly re-identified in any dataset using as few as 15 demographic attributes. They conclude that "even heavily sampled anonymized datasets are unlikely to satisfy the modern standards for anonymization set forth by GDPR and seriously challenge the technical and legal adequacy of the de-identification release-and-forget model."

Proposition

Deep learning is currently the field of AI receiving the most attention. It addresses two critical aspects of data science through the universality theorem (identifying the functional form) and representation learning (finding the correct features). Of late, generative modeling has seen a rise in popularity. In particular, a relatively recent model called the Generative Adversarial Network (GAN), introduced by Ian Goodfellow et al., shows promise in producing realistic samples. While GANs are state-of-the-art deep learning models for generating new synthetic data, there are a few challenges that we need to overcome.

| Salient Features | Challenges |
|---|---|
| Neural networks are cutting-edge algorithms in industry | Trained to solve one specific task; can they fit all use cases? |
| Generate images using a CNN architecture | Can we generate tables from relational databases? |
| Generate fake images of human faces that look realistic | Would it balance the trade-off between maintaining utility and privacy of data? |
| Requires high computational infrastructure like GPUs | How to implement GANs for big data? |

A generative adversarial network (GAN) is a class of machine learning systems introduced by Ian Goodfellow and colleagues in 2014. A GAN pits two neural networks against each other (thus the “adversarial”) in order to generate new, synthetic instances of data that can pass for real data.

The two neural networks contest with each other in a game. Given a training set, this technique learns to generate new data with the same statistics as the training set. The two networks are named the Generator and the Discriminator.

Generator: The generator is a neural network that models a transform function. It takes a simple random variable as input and, once trained, must return a random variable that follows the targeted distribution. The generator starts by producing random noise and changes its outputs according to the discriminator's feedback: if the discriminator successfully identifies a generated input as fake, the generator's weights are adjusted to reduce the error. During training, the discriminator is fed a random mix of real and generated samples.

Discriminator: The discriminator's job is to determine whether the data it is fed is real or fake. The discriminator is first trained on real data so that it can identify it with acceptable accuracy. If the discriminator is not trained properly, it will not be able to identify fake samples accurately, and it will in turn train the generator poorly.

This continues for multiple iterations until the discriminator can tell real samples from fake ones no better than chance.

Algorithm: Now let's see how the GAN algorithm works internally.

- The discriminator is fed real data randomly mixed with generated fake data.

- In the first few iterations, the generator produces random noise, which the discriminator easily detects as fake.

- Each time the discriminator catches a generated image as fake, the generator readjusts its weights to improve itself, using gradient descent.

- Over time, after multiple iterations, the generator becomes very good at producing images that fool the discriminator and pass as real ones.

- Now it is the discriminator's turn to improve its detection by adjusting its network weights.

- This game continues until the discriminator is unable to distinguish a real image from a fake one and can only guess by chance.
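The alternating updates described above can be sketched with a deliberately tiny, standard-library-only GAN on one-dimensional data. Everything here is an assumption for illustration: the generator is the linear map `a*z + b`, the discriminator is a logistic unit `sigmoid(w*x + c)`, and the gradients are written out by hand. A model this small is not expected to converge to the real distribution; it only shows the order of the two update steps.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=200, batch=64, lr=0.1, real_mean=4.0, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator G(z) = a*z + b
    w, c = 0.0, 0.0  # discriminator D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: minimise -[log D(real) + log(1 - D(fake))].
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w += -(1.0 - d) * x
            grad_c += -(1.0 - d)
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w += d * x
            grad_c += d
        w -= lr * grad_w / batch
        c -= lr * grad_c / batch

        # Generator step: minimise -log D(G(z)), the non-saturating loss.
        grad_a = grad_b = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            dx = -(1.0 - d) * w  # gradient of the loss w.r.t. the fake sample
            grad_a += dx * z
            grad_b += dx
        a -= lr * grad_a / batch
        b -= lr * grad_b / batch
    return a, b, w, c


a, b, w, c = train_toy_gan(steps=1)
```

After a single round the discriminator has learned that larger values look more real (`w` is positive), and the generator has responded by shifting its output upward (`b` is positive).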

This methodology has been created from the work provided in this paper:

Synthesizing Tabular Data using Generative Adversarial Networks

And also the following python package:

https://pypi.org/project/tgan/

Generative adversarial networks (GANs) implicitly learn the probability distribution of a dataset and can draw samples from that distribution. Tabular GAN (TGAN) is a generative adversarial network that generates tabular data by learning the distribution of the existing training dataset and then drawing new samples from it, using the power of deep neural networks.

TGAN focuses on generating tabular data with mixed variable types (multinomial/discrete and continuous). To achieve this, it uses an LSTM with attention to generate data column by column. To assess the approach, the authors first statistically evaluate the synthetic data generated by TGAN.

The paper also evaluates Machine learning models performance against traditional methods like modelling a multivariate probability or randomization based models.

The discrete and continuous random variables in a table follow some joint probability distribution. Each row in the table is a sample from this distribution, sampled independently, and the algorithm learns a generative model such that samples generated from it satisfy two conditions:

- A Machine Learning model using the Synthetic table achieves similar accuracy on the test table

- Mutual information between an arbitrary pair of variables is similar
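The second condition can be checked directly for discrete columns. The sketch below computes the mutual information (in nats) between two columns from their empirical counts; the function name is an assumption for this example, and continuous columns would need to be binned first.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Empirical mutual information, in nats, between two discrete columns."""
    n = len(xs)
    count_x, count_y = Counter(xs), Counter(ys)
    count_xy = Counter(zip(xs, ys))
    mi = 0.0
    for (x, y), c in count_xy.items():
        # p(x, y) / (p(x) * p(y)) simplifies to c * n / (count_x * count_y).
        mi += (c / n) * math.log(c * n / (count_x[x] * count_y[y]))
    return mi


# Independent columns share no information; identical columns share log(2) nats.
mi_independent = mutual_information([0, 0, 1, 1], [0, 1, 0, 1])
mi_identical = mutual_information([0, 0, 1, 1], [0, 0, 1, 1])
```

Computing this value for the same pair of columns in the real and the synthetic table, and comparing the two numbers, gives one pairwise similarity check.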

Numerical Variables

For the model to learn the data effectively, a reversible transformation is applied. Each numerical variable is converted into a scalar in the range (−1, 1) and a multinomial distribution, and each discrete variable is converted into a multinomial distribution.

Numerical variables in tabular datasets often follow a multimodal distribution. Gaussian kernel density estimation is used to estimate the number of modes in a continuous variable. To sample values from these modes, a Gaussian mixture model is used.
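The reversible transformation can be sketched as follows, assuming the Gaussian mixture has already been fitted. The `modes` list of `(weight, mean, std)` tuples is hypothetical, and the scaling by `2 * std` with clipping to ±0.99 follows my reading of the TGAN paper, so treat it as an assumption rather than the package's exact code.

```python
import math


def normal_pdf(x, mean, std):
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def encode_numeric(value, modes):
    """Return (scalar in (-1, 1), per-mode probabilities) for one value.

    Assumes the value is close enough to some mode that the densities
    do not all underflow to zero.
    """
    densities = [w * normal_pdf(value, m, s) for w, m, s in modes]
    total = sum(densities)
    probs = [d / total for d in densities]
    k = max(range(len(modes)), key=probs.__getitem__)  # most likely mode
    _, mean, std = modes[k]
    scalar = max(-0.99, min(0.99, (value - mean) / (2 * std)))
    return scalar, probs


def decode_numeric(scalar, probs, modes):
    """Invert encode_numeric using the most likely mode."""
    k = max(range(len(modes)), key=probs.__getitem__)
    _, mean, std = modes[k]
    return scalar * 2 * std + mean


# Hypothetical two-mode mixture for a length-of-stay-like column.
modes = [(0.5, 0.0, 1.0), (0.5, 10.0, 2.0)]
scalar, probs = encode_numeric(11.0, modes)
restored = decode_numeric(scalar, probs, modes)
```

The scalar says where the value sits within its mode, and the probability vector says which mode it belongs to; together they are enough to recover the original value (up to clipping).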

Categorical Variables - Improvement needed

Categorical variables are converted directly to a one-hot-encoded representation, and noise is added to the binary variables.

In TGAN, the discriminator D tries to distinguish whether the data is from the real distribution, while the generator G generates synthetic data and tries to fool the discriminator. The algorithm uses a Long Short-Term Memory (LSTM) network as the generator and a Multi-Layer Perceptron (MLP) as the discriminator.

```python
import warnings
warnings.filterwarnings('ignore')

import pandas as pd
import tensorflow as tf
from tgan.model import TGANModel
from tgan.data import load_demo_data


def tgan_run(data, cont_columns):
    # Fit a TGAN model; cont_columns lists the continuous columns
    tgan = TGANModel(cont_columns)
    tgan.fit(data)
    return tgan


def tgan_samples(model, num_samples):
    # Draw num_samples synthetic rows from a fitted model
    return model.sample(num_samples)
```

CTGAN is a GAN-based method to model a tabular data distribution and sample rows from that distribution. CTGAN implements mode-specific normalization to overcome non-Gaussian and multimodal distributions (Section 4.2 of the CTGAN paper). It uses a conditional generator and training-by-sampling to deal with imbalanced discrete columns (Section 4.3), and fully connected networks and several recent techniques to train a high-quality model.

Several unique properties of tabular data challenge the design of a GAN model.

- Mixed data types: Real-world tabular data consists of mixed types. To simultaneously generate a mix of discrete and continuous columns, GANs must apply both softmax and tanh on the output.

- Non-Gaussian distributions: In images, pixels’ values follow a Gaussian-like distribution, which can be normalized to [−1, 1] using a min-max transformation. A tanh function is usually employed in the last layer of a network to output a value in this range. Continuous values in tabular data are usually non-Gaussian, and a min-max transformation will then lead to the vanishing gradient problem.

- Multimodal distributions: The CTGAN authors use kernel density estimation to estimate the number of modes in a column. They observe that 57/123 continuous columns in their 8 real-world datasets have multiple modes. Srivastava et al. [21] showed that a vanilla GAN couldn’t model all modes on a simple 2D dataset; thus it would also struggle to model the multimodal distribution of continuous columns.

- Learning from sparse one-hot-encoded vectors: When generating synthetic samples, a generative model is trained to generate a probability distribution over all categories using softmax, while the real data is represented as a one-hot vector. This is problematic because a trivial discriminator can distinguish real and fake data simply by checking the distribution’s sparseness instead of considering the overall realness of a row.

- Highly imbalanced categorical columns: In the authors' datasets, 636/1048 of the categorical columns are highly imbalanced, with the major category appearing in more than 90% of the rows. This creates severe mode collapse. Missing a minor category causes only tiny changes to the data distribution, which are hard for the discriminator to detect. Imbalanced data also leads to insufficient training opportunities for minor classes.

When feeding data to the GAN algorithm, CTGAN samples so that all categories are properly represented. Specifically, the goal is to resample efficiently in a way that all the categories from discrete attributes are sampled evenly (but not necessarily uniformly) during the training process, and to recover the (not-resampled) real data distribution during testing.

These three things need to be incorporated:

- Modify the input for conditional vector creation

- The generated rows should preserve the condition

- The conditional generator should learn the real data conditional distribution
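A minimal sketch of training-by-sampling, under stated assumptions: rows are plain dictionaries, a discrete column is picked uniformly, a category is picked with weight proportional to the logarithm of its frequency, and a real row matching that condition is returned together with a one-hot conditional vector. The helper names are hypothetical, and the `+ 1` inside the logarithm is my own adjustment so that categories seen only once keep a positive weight.

```python
import math
import random
from collections import Counter


def sample_condition(rows, discrete_columns, rng):
    """Pick (column, category, matching real row) for one training sample."""
    column = rng.choice(discrete_columns)
    counts = Counter(row[column] for row in rows)
    categories = sorted(counts)
    # Log-frequency weights flatten the imbalance between major and minor
    # categories without making the sampling fully uniform.
    weights = [math.log(counts[cat] + 1) for cat in categories]
    category = rng.choices(categories, weights=weights, k=1)[0]
    matching = [row for row in rows if row[column] == category]
    return column, category, rng.choice(matching)


def condition_vector(rows, discrete_columns, column, category):
    """One-hot vector over every category of every discrete column."""
    vector = []
    for col in discrete_columns:
        for cat in sorted({row[col] for row in rows}):
            vector.append(1 if (col == column and cat == category) else 0)
    return vector


rows = [
    {"sex": "F", "income": "low"},
    {"sex": "M", "income": "low"},
    {"sex": "M", "income": "high"},
]
column, category, real_row = sample_condition(rows, ["sex", "income"], random.Random(0))
cond = condition_vector(rows, ["sex", "income"], column, category)
```

The conditional vector is what the generator receives as extra input, and the matching real row is what the discriminator sees for the same condition.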

```python
import pandas as pd
from ctgan import load_demo
from ctgan import CTGANSynthesizer

# Load the demo dataset shipped with the package
data = load_demo()

# Columns that CTGAN should treat as discrete
discrete_columns = ['workclass', 'education', 'marital-status', 'occupation',
                    'relationship', 'race', 'sex', 'native-country', 'income']

ctgan = CTGANSynthesizer()
ctgan.fit(data, discrete_columns)
```

Source: https://arxiv.org/pdf/1802.06739.pdf

One common issue with the GAN methodologies proposed above is that the density of the learned generative distribution can concentrate on the training data points, meaning that the model can easily memorize training samples due to the high model complexity of deep networks. This becomes a major concern when GANs are applied to private or sensitive data such as patient medical records, where the concentration of the distribution may divulge critical patient information. Differentially Private GANs address this by adding carefully designed noise to gradients during the learning procedure.

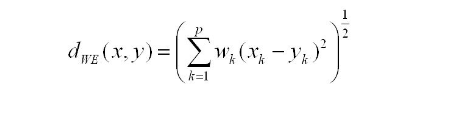

DPGAN focuses on preserving the privacy during the training procedure instead of adding noise on the final parameters directly, which usually suffers from low utility. Noise is added to the gradient of the Wasserstein distance with respect to the training data.

Note: Wasserstein distance is a distance function defined between probability distributions on a given metric space

The algorithm guarantees that the parameters of the discriminator and generator are differentially private with respect to the training samples. The privacy is enabled by a noise parameter e; however, one needs to perform a grid search over a large range of values of e to get the best results.
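The general idea of adding noise to gradients can be sketched with the generic clip-and-noise recipe used in differentially private SGD. This is a swapped-in simplification, not DPGAN's exact procedure: the function name, `clip_norm`, and `noise_multiplier` are assumptions for illustration, and gradients are plain Python lists.

```python
import math
import random


def sanitize_gradients(per_example_grads, clip_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        norm = math.sqrt(sum(g * g for g in grad))
        # Scale down any gradient whose L2 norm exceeds clip_norm.
        factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for i, g in enumerate(grad):
            total[i] += g * factor
    sigma = noise_multiplier * clip_norm
    n = len(per_example_grads)
    return [(t + rng.gauss(0.0, sigma)) / n for t in total]


grads = [[3.0, 4.0], [0.3, 0.4]]
noiseless = sanitize_gradients(grads, clip_norm=1.0, noise_multiplier=0.0, rng=random.Random(0))
noisy = sanitize_gradients(grads, clip_norm=1.0, noise_multiplier=1.0, rng=random.Random(0))
```

Clipping bounds how much any single record can influence the update, and the noise hides what remains of that influence; a larger noise multiplier gives stronger privacy and lower utility.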

Source: https://arxiv.org/pdf/1906.09338.pdf

Generative Adversarial Networks (GANs) provide a powerful method for using real data to generate synthetic data, but they do not provide any rigorous privacy guarantees. PATE-GAN modifies the existing GAN algorithm in a way that does guarantee privacy.

PATE-GAN adds teacher and student blocks on top of the existing generator block. With traditional privacy techniques, it is possible for the generator to reconstruct the original data even after noise is added. PATE-GAN prevents this by splitting discrimination into stages. Several teacher discriminators are each trained on a disjoint part of the real data. An ensemble block then takes a noisy majority vote over the teachers' outputs to label generated samples. Finally, a student block is trained only on those noisily labeled samples and provides the training signal for the generator.

The synthetic data is (differentially) private with respect to the original data. In DP-GAN, the key idea is that noise is added to the gradient of the discriminator during training to create differential privacy guarantees. PATE-GAN is similar in spirit: differentially private training data is used during training of the discriminator, which results in noisy gradients, but it relies on the PATE mechanism to do so. A noticeable difference is that the adversarial training is no longer symmetrical: the teachers are trained to improve their loss with respect to G, but G is trained to improve its loss with respect to the student S, which in turn is trained to improve its loss with respect to the teachers.
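The ensemble step can be illustrated with a noisy majority vote: each teacher votes real (1) or fake (0) for a generated sample, Laplace noise is added to each vote count, and the label with the larger noisy count is passed to the student. The function name and the `scale` parameter are assumptions for this sketch.

```python
import random
from collections import Counter


def noisy_majority_vote(teacher_votes, scale, rng=None, labels=(0, 1)):
    """Return the label with the highest Laplace-perturbed vote count."""
    rng = rng or random.Random()
    counts = Counter(teacher_votes)
    noisy_counts = {}
    for label in labels:
        noise = 0.0
        if scale > 0:
            # Difference of two exponential draws is Laplace with this scale.
            noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
        noisy_counts[label] = counts.get(label, 0) + noise
    return max(labels, key=noisy_counts.__getitem__)


votes = [1] * 9 + [0]  # nine of ten teachers say "real"
label_without_noise = noisy_majority_vote(votes, scale=0.0)
label_with_noise = noisy_majority_vote(votes, scale=1.0, rng=random.Random(0))
```

When the teachers agree strongly, the noise rarely changes the outcome; when they are split, the noise makes the label uncertain, which is what limits how much any single training record can reveal.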

Theoretically, the generator in GAN has the potential of generating an universal distribution, which is a superset of the real distribution, so it is not necessary for the student discriminator to be trained on real records. However, such a theoretical bound is loose. In practice, if a generator does generate enough samples from the universal distribution, there would be a convergence issue. On the other hand, when the generator does converge, it no longer covers the universal distribution, so the student generator may fail to learn the real distribution without seeing real records.

It is not necessary to ensure differential privacy for the discriminator in order to train a differentially private generator. As long as differential privacy is ensured on the information flow from the discriminator to the generator, that is sufficient to guarantee the privacy property for the generator. Therefore, instead of ensuring differential privacy for the whole GAN framework, G-PATE is designed to guarantee that all information flowing from the discriminator to the generator satisfies differential privacy.

Compared to PATE-GAN, G-PATE has two advantages. First, it improves the use of the privacy budget by applying it only to the part of the model that actually needs to be released for data generation. Second, its discriminator can be trained on real data because the discriminator itself does not need to satisfy differential privacy. The teacher discriminators do not need to be published, so they can be trained with non-private algorithms.

In addition, G-PATE uses a gradient aggregator to collect information from the teacher discriminators and combine it in a differentially private fashion. Unlike PATE-GAN, G-PATE does not require any student discriminator. The teacher discriminators are directly connected to the student generator. The gradient aggregator sanitizes the information flow from the teacher discriminators to the student generator, so the privacy property is achieved by sanitizing all information propagated from the discriminators to the generator.

In order to validate the efficacy of GANs to serve our purpose, we propose a methodology for thorough evaluation of synthetic data generated by GANs.

MIMIC-III stands for Medical Information Mart for Intensive Care. It is an open source dataset developed by the MIT Lab, comprising de-identified health data; it is a comprehensive clinical dataset of 40,000+ patients admitted to the ICU. The database contains multiple tables that can be combined to prepare various use cases. The data can be accessed at the link provided below. Access requires a few certifications, which can be obtained by completing training on the website.

Source: https://mimic.physionet.org/gettingstarted/overview/

Overview of MIMIC-III data:

- 26 tables: Comprehensive clinical dataset with different tables like Admissions, Patients, CPT events, Chart events, Diagnosis etc.

- 45,000+ patients: Data associated with multiple admissions of patients and include details like Gender, Ethnicity, Marital Status

- 65,000+ ICU admissions: Admitted in ICUs at Beth Israel Deaconess Medical Centre, Boston, from 2001 to 2012

- PII data: Includes patient sensitive details like demographics, diagnosis, laboratory tests, medications etc.

- Use cases: Can be used for multiple analysis like clinical data (prescriptions), claims data (payer), members data (patient)

This project focuses on specific tables from this database rather than all 26 tables. By combining multiple tables from the database, 2 different use cases were created, which are described in the section below. These use cases are used in the statistical similarity, model compatibility, and privacy risk modules, so an initial understanding of them is needed to follow the variable names used in the rest of this documentation.

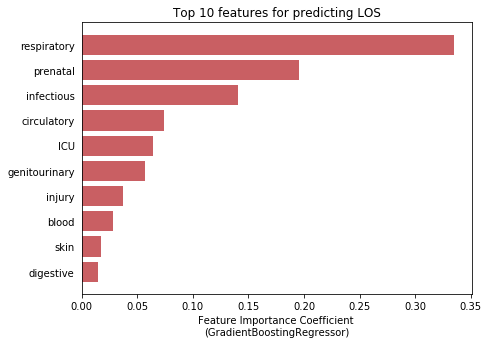

Predicting the length of stay in ICU using 4 out of 26 tables

Use case: Out of the variety of possible use cases from the MIMIC-III dataset, we focus on a single use case, predicting the number of days a patient stays in the ICU, and generate synthetic data for it. This use case seemed important to us because it is always beneficial for a payer to predict the likely length of stay of a patient. It helps in adjusting the premium charged by the payer based on a comparison of the predictions with a baseline (the defined number of days covered by the patient's plan). For this use case, the model uses the total number of diagnoses that occurred in each disease category for each patient.

To build this use case we focus on primarily 4 tables.

- Patients: Every unique patient in the database (defines SUBJECT_ID) Columns like: SUBJECT_ID, GENDER (count of rows: 46520 count of columns: 7)

- Admissions: Every unique hospitalization for each patient in the database (defines HADM_ID) Columns like: SUBJECT_ID, HADM_ID, HOSPITAL_EXPIRE_FLAG, MARITAL_STATUS, ETHNICITY, ADMISSION_TYPE (count of rows: 58976 count of columns: 18 )

- ICUSTAYS: Every unique ICU stay in the database (defines ICUSTAY_ID) Columns like: SUBJECT_ID, HADM_ID, LOS (count of rows: 61532 count of columns: 11 )

- Diagnosis_ICD: Hospital assigned diagnoses, coded using the International Statistical Classification of Diseases and Related Health Problems (ICD) system Columns like: SUBJECT_ID, HADM_ID, NUMDIAGNOSIS (count of rows: 651047 count of columns: 5)

- Load csv files: Read the comma separated files downloaded from link (https://mimic.physionet.org/gettingstarted/overview/)

- Merge tables: Use 'SUBJECT_ID' to merge the ADMISSIONS, PATIENTS and ICU_STAYS tables, and finally concatenate 'SUBJECT_ID' and 'HADM_ID' to form 'final_id' as a composite key.

- Prepare diagnosis dataset: The DIAGNOSIS_ICD table is used to map each diagnosis to a disease category using the first three digits of the ICD-9 code. The mapping converts the 6984 unique ICD-9 codes into 18 different disease area categories. Finally, 'SUBJECT_ID' and 'HADM_ID' are concatenated to form 'final_id' as a composite key.

- Pivot up diagnosis dataset: After mapping the disease categories using ICD-9 codes, the dataset is pivoted up to the level of the 18 disease categories, and the total count of diagnoses is populated for each 'final_id'.

- Merge pivoted diagnosis dataset to the main dataset: Finally, the dataset generated above is merged to the main dataset using 'final_id' as the key.
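The merge, map, pivot, and merge-back steps above can be sketched on toy data with pandas. The tiny tables, the three-category range map, and the `_` separator in `final_id` are assumptions for illustration; the real mapping covers all ICD-9 ranges, including codes that start with V or E, which this sketch does not handle.

```python
import pandas as pd

admissions = pd.DataFrame({
    "SUBJECT_ID": [1, 1, 2],
    "HADM_ID": [10, 11, 20],
    "ADMISSION_TYPE": ["EMERGENCY", "ELECTIVE", "URGENT"],
})
diagnoses = pd.DataFrame({
    "SUBJECT_ID": [1, 1, 1, 2],
    "HADM_ID": [10, 10, 11, 20],
    "ICD9_CODE": ["4280", "25000", "4019", "486"],
})

# Hypothetical subset of the ICD-9 chapter ranges (first three digits).
CATEGORY_RANGES = [
    (240, 279, "endocrine"),
    (390, 459, "circulatory"),
    (460, 519, "respiratory"),
]


def icd9_category(code):
    """Map a numeric ICD-9 code to a disease category via its first three digits."""
    prefix = int(code[:3])
    for low, high, name in CATEGORY_RANGES:
        if prefix in range(low, high + 1):
            return name
    return "other"


def add_final_id(frame):
    """Add the composite key built from SUBJECT_ID and HADM_ID."""
    out = frame.copy()
    out["final_id"] = out["SUBJECT_ID"].astype(str) + "_" + out["HADM_ID"].astype(str)
    return out


admissions = add_final_id(admissions)
diagnoses = add_final_id(diagnoses)
diagnoses["category"] = diagnoses["ICD9_CODE"].map(icd9_category)

# Pivot: one row per final_id, one column per category, values are diagnosis counts.
diag_counts = pd.crosstab(diagnoses["final_id"], diagnoses["category"]).reset_index()

# Merge the pivoted counts back onto the main dataset.
final = admissions.merge(diag_counts, on="final_id", how="left")
```

Each row of `final` is one admission, with one count column per disease category.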

Note:

- 6984 ICD-9 codes: The diagnosis dataset contains 6984 unique International Classification of Diseases (ICD-9) codes.

- 18 primary categories: We consider 18 primary categories of conditions for the predictive modeling.

Finally, only the relevant columns required for the analysis are selected, and the resulting dataset is used for synthetic data generation. The final data has 116354 rows and 27 columns.

- Level of data: Each instance in the final data set is unique admission for each patient and is defined by concatenation of 'SUBJECT_ID' and 'HADM_ID' to form 'final_id'

- Target Variables: 'LOS' (length of stay) is used as target variable

- Predictor variables: 18 columns of different diagnosis category are used as predictor variables.

These 18 categories are:

"certain conditions originating in the perinatal period",

"complications of pregnancy, childbirth, and the puerperium",

"congenital anomalies",

"diseases of the blood and blood-forming organs",

"diseases of the circulatory system",

"diseases of the digestive system",

"diseases of the genitourinary system",

"diseases of the musculoskeletal system and connective tissue",

"diseases of the nervous system",

"diseases of the respiratory system",

"diseases of the sense organs",

"diseases of the skin and subcutaneous tissue",

"endocrine, nutritional and metabolic diseases, and immunity disorders",

"external causes of injury and supplemental classification",

"infectious and parasitic diseases",

"injury and poisoning",

"mental disorders",

"neoplasms" and

"symptoms, signs, and ill-defined conditions".

- Other descriptive variables: "ADMISSION_TYPE", "MARITAL_STATUS", "INSURANCE", "ETHNICITY", "HOSPITAL_EXPIRE_FLAG", "GENDER" and "EXPIRE_FLAG"

Code (data wrangling performed in R)

- Import required libraries and read csv files

- Function for data preparation

1. Import required libraries and read csv files

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.model_selection import train_test_split
from sklearn.metrics import r2_score, mean_squared_error
from sklearn.preprocessing import MinMaxScaler
from sklearn.neighbors import KNeighborsRegressor
from sklearn.linear_model import LinearRegression
from sklearn.svm import SVR
from sklearn.ensemble import RandomForestRegressor
from sklearn.tree import DecisionTreeRegressor
from scipy.stats import pearsonr
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.linear_model import SGDRegressor
import statsmodels.api as sm
from sklearn.model_selection import GridSearchCV

# Primary admissions information
df = pd.read_csv(r"ADMISSIONS.csv")

# Patient-specific info such as gender
df_pat = pd.read_csv(r"PATIENTS.csv")

# Diagnoses for each admission to hospital
df_diagcode = pd.read_csv(r"DIAGNOSES_ICD.csv")

# Intensive Care Unit (ICU) stays for each admission to hospital
df_icu = pd.read_csv(r"ICUSTAYS.csv")

print('Dataset has {} number of unique admission events.'.format(df['HADM_ID'].nunique()))
print('Dataset has {} number of unique patients.'.format(df['SUBJECT_ID'].nunique()))
```

```
Dataset has 58976 number of unique admission events.
Dataset has 46520 number of unique patients.
```

# Convert admission and discharge times to datatime type

df['ADMITTIME'] = pd.to_datetime(df['ADMITTIME'])

df['DISCHTIME'] = pd.to_datetime(df['DISCHTIME'])

# Calculating the Length of Stay variable using the difference between Discharge time and Admit time

# Convert timedelta type into float 'days'. Note: There are 86400 seconds in a day

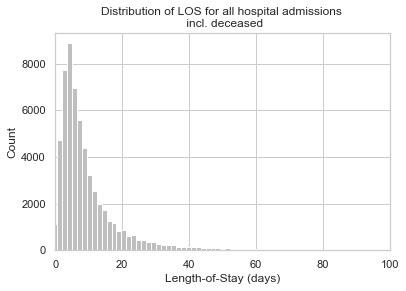

df['LOS'] = (df['DISCHTIME'] - df['ADMITTIME']).dt.total_seconds()/86400df['LOS'].describe()count 58976.000000

mean 10.133916

std 12.456682

min -0.945139

25% 3.743750

50% 6.467014

75% 11.795139

max 294.660417

Name: LOS, dtype: float64

# Drop rows with negative LOS. The negative LOS means that the patient was brought dead to the ICU.

df['LOS'][df['LOS'] > 0].describe()count 58878.000000

mean 10.151266

std 12.459774

min 0.001389

25% 3.755556

50% 6.489583

75% 11.805556

max 294.660417

Name: LOS, dtype: float64

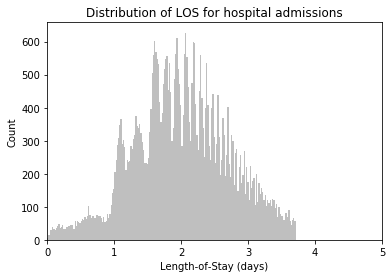

```python
# Plot LOS distribution
plt.hist(df['LOS'], bins=200, color='0.75')
plt.xlim(0, 100)
plt.title('Distribution of LOS for all hospital admissions \n incl. deceased')
plt.ylabel('Count')
plt.xlabel('Length-of-Stay (days)')
plt.tick_params(top=False, right=False)
plt.show()

# Drop rows with non-positive LOS
df = df[df['LOS'] > 0]

# Drop the columns that are not needed
df.drop(columns=['DISCHTIME', 'ROW_ID',
                 'EDREGTIME', 'EDOUTTIME', 'HOSPITAL_EXPIRE_FLAG',
                 'HAS_CHARTEVENTS_DATA'], inplace=True)

# Flag patients who died in hospital as 1 and survivors as 0
df['DECEASED'] = df['DEATHTIME'].notnull().map({True: 1, False: 0})

print("{} of {} patients died in the hospital".format(df['DECEASED'].sum(),
                                                     df['SUBJECT_ID'].nunique()))
```

```
5774 of 46445 patients died in the hospital
```

```python
# Descriptive analysis of LOS for patients who survived the hospital stay
df['LOS'].loc[df['DECEASED'] == 0].describe()
```

```
count    53104.000000
mean        10.138174
std         12.284461
min          0.014583
25%          3.866667
50%          6.565972
75%         11.711632
max        294.660417
Name: LOS, dtype: float64
```

```python
# Descriptive analysis of the LOS metric (target variable)
mean_los = df['LOS'].mean()
median_los = df['LOS'].median()
min_los = df['LOS'].min()
max_los = df['LOS'].max()

print("Mean LOS: ", mean_los)
print("Median LOS: ", median_los)
print("Min LOS: ", min_los)
print("Max LOS: ", max_los)
```

```
Mean LOS:  10.151266378028323
Median LOS:  6.489583333333333
Min LOS:  0.0013888888888888892
Max LOS:  294.66041666666666
```

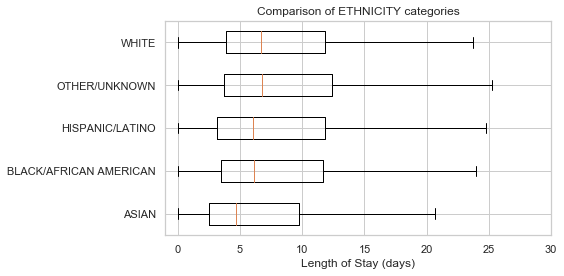

```python
# Club the Ethnicity categories into 5 broad categories
df['ETHNICITY'] = df['ETHNICITY'].replace(regex=r'^ASIAN\D*', value='ASIAN')
df['ETHNICITY'] = df['ETHNICITY'].replace(regex=r'^WHITE\D*', value='WHITE')
df['ETHNICITY'] = df['ETHNICITY'].replace(regex=r'^HISPANIC\D*', value='HISPANIC/LATINO')
df['ETHNICITY'] = df['ETHNICITY'].replace(regex=r'^BLACK\D*', value='BLACK/AFRICAN AMERICAN')
df['ETHNICITY'] = df['ETHNICITY'].replace(['UNABLE TO OBTAIN', 'OTHER', 'PATIENT DECLINED TO ANSWER',
                                           'UNKNOWN/NOT SPECIFIED'], value='OTHER/UNKNOWN')

# Anything outside the five largest groups also becomes OTHER/UNKNOWN
top5 = df['ETHNICITY'].value_counts().nlargest(5).index.tolist()
df.loc[~df['ETHNICITY'].isin(top5), 'ETHNICITY'] = 'OTHER/UNKNOWN'
df['ETHNICITY'].value_counts()
```

```
WHITE                     41268
OTHER/UNKNOWN              7700
BLACK/AFRICAN AMERICAN     5779
HISPANIC/LATINO            2125
ASIAN                      2006
Name: ETHNICITY, dtype: int64
```

# Bar plot function

def plot_los_groupby(variable, size=(7,4)):

    '''

    Plot Median LOS by df categorical series name

    '''

    results = df[[variable, 'LOS']].groupby(variable).median().reset_index()

    values = list(results['LOS'].values)

    labels = list(results[variable].values)

    fig, ax = plt.subplots(figsize=size)

    ind = range(len(results))

    ax.barh(ind, values, align='center', height=0.6, color = '#55a868', alpha=0.8)

    ax.set_yticks(ind)

    ax.set_yticklabels(labels)

    ax.set_xlabel('Median Length of Stay (days)')

    ax.tick_params(left=False, top=False, right=False)

    ax.set_title('Comparison of {} labels'.format(variable))

    plt.tight_layout()

    plt.show();

# Boxplot function

def boxplot_los_groupby(variable, los_range=(-1, 30), size=(8,4)):

    '''

    Boxplot of LOS by df categorical series name

    '''

    results = df[[variable, 'LOS']].groupby(variable).median().reset_index()

    categories = results[variable].values.tolist()

    hist_data = []

    for cat in categories:

        hist_data.append(df['LOS'].loc[df[variable]==cat].values)

    fig, ax = plt.subplots(figsize=size)

    ax.boxplot(hist_data, 0, '', vert=False)

    ax.set_xlim(los_range)

    ax.set_yticklabels(categories)

    ax.set_xlabel('Length of Stay (days)')

    ax.tick_params(left=False, right=False)

    ax.set_title('Comparison of {} categories'.format(variable))

    plt.tight_layout()

    plt.show();

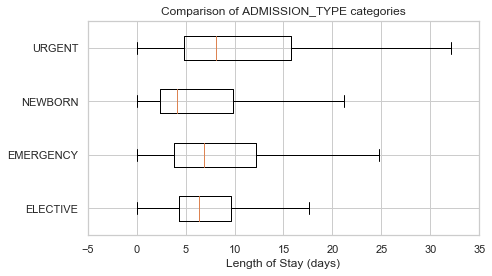

boxplot_los_groupby('ETHNICITY', los_range=(-1, 30))

df['ADMISSION_TYPE'].value_counts()

EMERGENCY 41989

NEWBORN 7854

ELECTIVE 7702

URGENT 1333

Name: ADMISSION_TYPE, dtype: int64

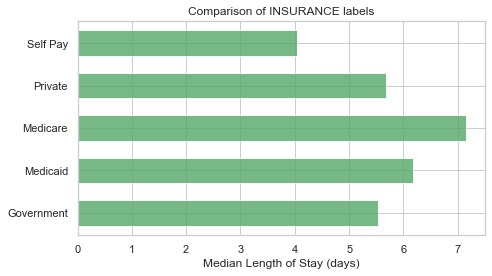

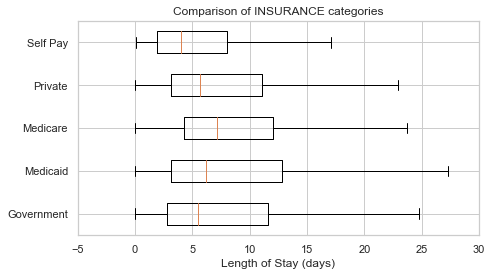

plot_los_groupby('ADMISSION_TYPE')

boxplot_los_groupby('ADMISSION_TYPE', los_range=(-5, 35), size=(7, 4))

df['INSURANCE'].value_counts()

Medicare 28174

Private 22542

Medicaid 5778

Government 1781

Self Pay 603

Name: INSURANCE, dtype: int64

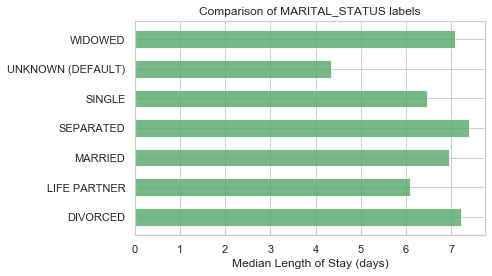

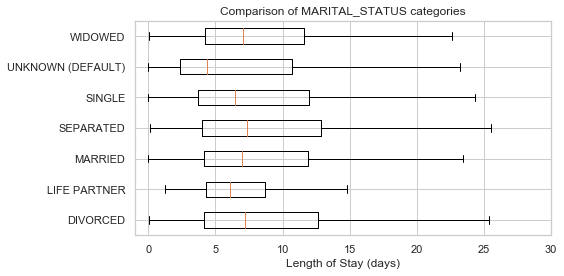

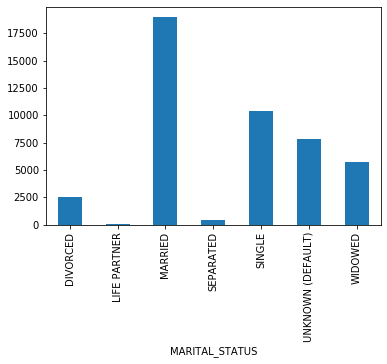

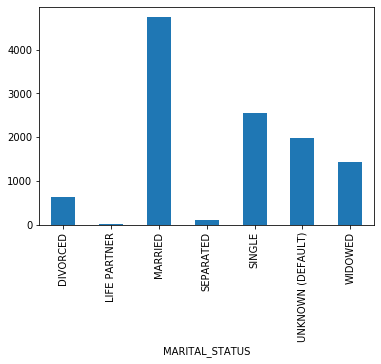

plot_los_groupby('INSURANCE')

boxplot_los_groupby('INSURANCE', los_range=(-5, 30), size=(7, 4))

df['MARITAL_STATUS'].value_counts(dropna=False)

MARRIED 24199

SINGLE 13238

NaN 10097

WIDOWED 7204

DIVORCED 3211

SEPARATED 571

UNKNOWN (DEFAULT) 343

LIFE PARTNER 15

Name: MARITAL_STATUS, dtype: int64

# Replace missing values with 'UNKNOWN (DEFAULT)'

df['MARITAL_STATUS'] = df['MARITAL_STATUS'].fillna('UNKNOWN (DEFAULT)')

df['MARITAL_STATUS'].value_counts(dropna=False)

MARRIED 24199

SINGLE 13238

UNKNOWN (DEFAULT) 10440

WIDOWED 7204

DIVORCED 3211

SEPARATED 571

LIFE PARTNER 15

Name: MARITAL_STATUS, dtype: int64

plot_los_groupby('MARITAL_STATUS')

boxplot_los_groupby('MARITAL_STATUS')

Because it is not feasible to use 6985 unique diagnosis codes as features for predicting LOS, the diagnoses were reduced to more general categories. The ICD-9 coding methodology arranges the diagnosis codes into the following super categories:

- 001–139: infectious and parasitic diseases

- 140–239: neoplasms

- 240–279: endocrine, nutritional and metabolic diseases, and immunity disorders

- 280–289: diseases of the blood and blood-forming organs

- 290–319: mental disorders

- 320–389: diseases of the nervous system and sense organs

- 390–459: diseases of the circulatory system

- 460–519: diseases of the respiratory system

- 520–579: diseases of the digestive system

- 580–629: diseases of the genitourinary system

- 630–679: complications of pregnancy, childbirth, and the puerperium

- 680–709: diseases of the skin and subcutaneous tissue

- 710–739: diseases of the musculoskeletal system and connective tissue

- 740–759: congenital anomalies

- 760–779: certain conditions originating in the perinatal period

- 780–799: symptoms, signs, and ill-defined conditions

- 800–999: injury and poisoning

- E and V codes: external causes of injury and supplemental classification, using 999 as placeholder even though it overlaps with complications of medical care

Source of these categories: https://en.wikipedia.org/wiki/List_of_ICD-9_codes
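As an illustration of the ranges listed above, the following minimal sketch maps a raw ICD-9 code string to a super category using a sorted lookup. The helper name `icd9_category` and the constant names are hypothetical, the short labels follow the `diag_dict` naming used in the notebook code, and labelling E and V codes as 'misc' is an assumption of this sketch (the notebook code uses 999 as a placeholder instead).

```python
from bisect import bisect_right

# Lower bounds of the ICD-9 super categories listed above, with short labels.
ICD9_STARTS = [1, 140, 240, 280, 290, 320, 390, 460, 520, 580,
               630, 680, 710, 740, 760, 780, 800]
ICD9_LABELS = ['infectious', 'neoplasms', 'endocrine', 'blood', 'mental',
               'nervous', 'circulatory', 'respiratory', 'digestive',
               'genitourinary', 'pregnancy', 'skin', 'muscular', 'congenital',
               'prenatal', 'misc', 'injury']


def icd9_category(code):
    """Map a raw ICD-9 code string to its super category (hypothetical helper)."""
    code = str(code).strip()
    if not code or code[0].upper() in ('E', 'V'):
        return 'misc'              # assumption: E and V codes grouped as 'misc'
    prefix = int(code[:3])         # the first three digits select the category
    idx = bisect_right(ICD9_STARTS, prefix) - 1
    return ICD9_LABELS[idx] if idx >= 0 else 'misc'


for raw in ['0389', '140', '4019', 'V3000']:
    print(raw, '->', icd9_category(raw))
```

Using lower bounds with `bisect_right` keeps each boundary code (for example 140) in the category that starts at that code.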

# Filter out E and V codes since processing will be done on the numeric first 3 values

df_diagcode['recode'] = df_diagcode['ICD9_CODE']

df_diagcode['recode'] = df_diagcode['recode'][~df_diagcode['recode'].str.contains("[a-zA-Z]").fillna(False)]

df_diagcode['recode'].fillna(value='999', inplace=True)

df_diagcode['recode'] = df_diagcode['recode'].str.slice(start=0, stop=3, step=1)

df_diagcode['recode'] = df_diagcode['recode'].astype(int)

# ICD-9 Main Category ranges

icd9_ranges = [(1, 140), (140, 240), (240, 280), (280, 290), (290, 320), (320, 390),

(390, 460), (460, 520), (520, 580), (580, 630), (630, 680), (680, 710),

(710, 740), (740, 760), (760, 780), (780, 800), (800, 1000), (1000, 2000)]

# Associated category names

diag_dict = {0: 'infectious', 1: 'neoplasms', 2: 'endocrine', 3: 'blood',

4: 'mental', 5: 'nervous', 6: 'circulatory', 7: 'respiratory',

8: 'digestive', 9: 'genitourinary', 10: 'pregnancy', 11: 'skin',

12: 'muscular', 13: 'congenital', 14: 'prenatal', 15: 'misc',

16: 'injury', 17: 'misc'}

# Re-code in terms of integer

for num, cat_range in enumerate(icd9_ranges):

    # between() is inclusive on both ends, so subtract 1 from the upper bound to keep

    # boundary codes such as 140 out of the preceding category

    df_diagcode['recode'] = np.where(df_diagcode['recode'].between(cat_range[0], cat_range[1] - 1),

                                     num, df_diagcode['recode'])

# Convert integer to category name using diag_dict

df_diagcode['cat'] = df_diagcode['recode'].replace(diag_dict)

# Create list of diagnoses for each admission

hadm_list = df_diagcode.groupby('HADM_ID')['cat'].apply(list).reset_index()

# Convert diagnoses list into hospital admission-item matrix

# (groupby(level=0).sum() replaces sum(level=0), which was removed in pandas 2.0)

hadm_item = pd.get_dummies(hadm_list['cat'].apply(pd.Series).stack()).groupby(level=0).sum()

# Join the above created dataset using HADM_ID to the hadm_list

hadm_item = hadm_item.join(hadm_list['HADM_ID'], how="outer")

# Finally merging with main admissions df

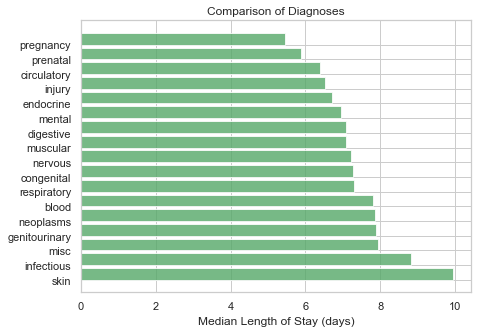

df = df.merge(hadm_item, how='inner', on='HADM_ID')

# Explore median LOS by diagnosis category as defined above

diag_cat_list = ['skin', 'infectious', 'misc', 'genitourinary', 'neoplasms', 'blood', 'respiratory',

'congenital','nervous', 'muscular', 'digestive', 'mental', 'endocrine', 'injury',

'circulatory', 'prenatal', 'pregnancy']

results = []

for variable in diag_cat_list:

    results.append(df[[variable, 'LOS']].groupby(variable).median().reset_index().values[1][1])

sns.set(style="whitegrid")

fig, ax = plt.subplots(figsize=(7,5))

ind = range(len(results))

ax.barh(ind, results, align='edge', color = '#55a868', alpha=0.8)

ax.set_yticks(ind)

ax.set_yticklabels(diag_cat_list)

ax.set_xlabel('Median Length of Stay (days)')

ax.tick_params(left=False, right=False, top=False)

ax.set_title('Comparison of Diagnoses')

plt.show();

df_icu['HADM_ID'].nunique()

57786

# Converting different categories of first care unit into ICU and NICU groups

df_icu['FIRST_CAREUNIT'].replace({'CCU': 'ICU', 'CSRU': 'ICU', 'MICU': 'ICU',

'SICU': 'ICU', 'TSICU': 'ICU'}, inplace=True)

df_icu['cat'] = df_icu['FIRST_CAREUNIT']

icu_list = df_icu.groupby('HADM_ID')['cat'].apply(list).reset_index()

df_icu['FIRST_CAREUNIT'].value_counts()

ICU 53432

NICU 8100

Name: FIRST_CAREUNIT, dtype: int64

# Create admission-ICU matrix

# (groupby(level=0).sum() replaces sum(level=0), which was removed in pandas 2.0)

icu_item = pd.get_dummies(icu_list['cat'].apply(pd.Series).stack()).groupby(level=0).sum()

icu_item[icu_item >= 1] = 1

icu_item = icu_item.join(icu_list['HADM_ID'], how="outer")

# Merge ICU data with main dataFrame

df = df.merge(icu_item, how='outer', on='HADM_ID')

# Replace NA with 0

df['ICU'].fillna(value=0, inplace=True)

df['NICU'].fillna(value=0, inplace=True)

# Drop unnecessary columns

df.drop(columns=['ADMISSION_LOCATION', 'SUBJECT_ID', 'HADM_ID', 'ADMITTIME',

'DISCHARGE_LOCATION', 'LANGUAGE',

'DIAGNOSIS', 'DECEASED', 'DEATHTIME'], inplace=True)

df.drop(columns=['DOB','DOD','DOD_HOSP','DOD_SSN','ROW_ID','RELIGION'], inplace=True)

# Keep only admissions with LOS less than 40 days to reduce the skewness of the data.

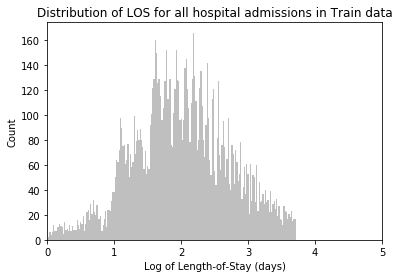

df = df[df['LOS'] < 40]

# Randomly split the data into train (80%) and test (20%) sets. The train set is used to generate

# synthetic data and the test set is kept separate for evaluation.

los_use, los_predict = train_test_split(df, test_size=0.2, random_state=25)

# Write the train and test sets to CSV for further use

# Note: The train dataset will be used for generating synthetic data using TGAN and CTGAN.

# The test dataset will be used for evaluation of models.

los_use.to_csv("los_usecase.csv", index=False)

los_predict.to_csv("los_predict.csv", index=False)

This use case will be used as the regression problem in the model compatibility module, and in the statistical similarity and privacy risk modules.

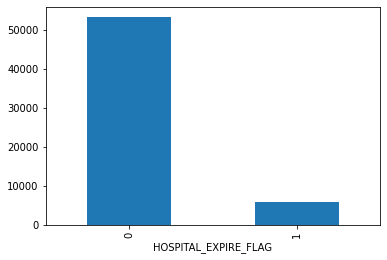

Another use case using the MIMIC-III dataset is mortality prediction. It is inspired by a Kaggle kernel (reference below) that predicts mortality using only the number of interactions between patient and hospital as predictors: counts of lab tests, prescriptions, and procedures. It can be used to evaluate privacy risk with the help of PII columns such as GENDER, ETHNICITY, and MARITAL_STATUS, and it also serves as a classification problem: predicting whether a patient will die during a single hospital admission.

Reference: https://www.kaggle.com/drscarlat/predict-hospital-mortality-mimic3

Tables used in this use case:

- Patients - Every unique patient in the database (defines SUBJECT_ID). Columns like: SUBJECT_ID, GENDER

- Admissions - Every unique hospitalization for each patient in the database (defines HADM_ID). Columns Like: SUBJECT_ID, HADM_ID, HOSPITAL_EXPIRE_FLAG, MARITAL_STATUS, ETHNICITY, ADMISSION_TYPE

- CallOut - Information regarding when a patient was cleared for ICU discharge and when the patient was actually discharged. Columns Like: SUBJECT_ID, HADM_ID, NUMCALLOUT (count of rows)

- CPTEvents - Procedures recorded as Current Procedural Terminology (CPT) codes. Columns Like: SUBJECT_ID, HADM_ID, NUMCPTEVENTS (count of rows)

- Diagnosis_ICD - Hospital assigned diagnoses, coded using the International Statistical Classification of Diseases and Related Health Problems (ICD) system. Columns Like: SUBJECT_ID, HADM_ID, NUMDIAGNOSIS (count of rows)

- Inputevents_CV - Intake for patients monitored using the Philips CareVue system while in the ICU. Columns Like: SUBJECT_ID, HADM_ID, NUMINPUTEVENTS (count of rows)

- Labevents - Laboratory measurements for patients both within the hospital and in out patient clinics. Columns Like: SUBJECT_ID, HADM_ID, NUMLABEVENTS (count of rows)

- Noteevents - Deidentified notes, including nursing and physician notes, ECG reports, imaging reports, and discharge summaries. Columns Like: SUBJECT_ID, HADM_ID, NUMNOTEVENTS (count of rows)

- Outputevents - Output information for patients while in the ICU. Columns Like: SUBJECT_ID, HADM_ID, NUMOUTEVENTS (count of rows)

- Prescriptions - Medications ordered, and not necessarily administered, for a given patient. Columns Like: SUBJECT_ID, HADM_ID, NUMRX (count of rows)

- Procedureevents_mv - Patient procedures for the subset of patients who were monitored in the ICU using the iMDSoft MetaVision system. Columns Like: SUBJECT_ID, HADM_ID, NUMPROCEVENTS (count of rows)

- MICROBIOLOGYEVENTS - Microbiology measurements and sensitivities from the hospital database. Columns Like: SUBJECT_ID, HADM_ID, NUMMICROLABEVENTS (count of rows)

- Procedures_icd - Patient procedures, coded using the International Statistical Classification of Diseases and Related Health Problems (ICD) system. Columns Like: SUBJECT_ID, HADM_ID, NUMPROC (count of rows)

- Transfers - Patient movement from bed to bed within the hospital, including ICU admission and discharge. Columns Like: SUBJECT_ID, HADM_ID, NUMTRANSFERS (count of rows)

Steps to create analytical data set

- Load csv files: Read the comma separated files downloaded from link (https://mimic.physionet.org/gettingstarted/overview/)

- Roll up tables: We need count of various events or interactions between patients and hospital. In order to do this, group by or aggregate the tables at 'SUBJECT_ID' and 'HADM_ID' level and take count of number of rows for each. This will give total count of events related to single hospital admission.

- Merge tables: Use 'SUBJECT_ID' and 'HADM_ID' as composite key to merge all tables together and create final analytical data set.
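The roll-up and merge steps above can be sketched on toy tables as follows. The tiny data frames and their values are made up for illustration, the helper name `rollup` is hypothetical, and counting rows with `size()` is an assumption of this sketch (the notebook code counts the `ROW_ID` column instead).

```python
import pandas as pd


def rollup(events, count_col):
    """Count rows per (SUBJECT_ID, HADM_ID) and name the count column."""
    return (events.groupby(['SUBJECT_ID', 'HADM_ID'])
                  .size()
                  .reset_index(name=count_col))


# Toy tables standing in for the MIMIC-III CSV files (values are made up).
admissions = pd.DataFrame({'SUBJECT_ID': [1, 1, 2],
                           'HADM_ID': [10, 11, 20],
                           'HOSPITAL_EXPIRE_FLAG': [0, 0, 1]})
labevents = pd.DataFrame({'SUBJECT_ID': [1, 1, 1, 2],
                          'HADM_ID': [10, 10, 11, 20]})
prescriptions = pd.DataFrame({'SUBJECT_ID': [1, 2, 2],
                              'HADM_ID': [10, 20, 20]})

# Left-join each rolled-up table on the composite key, then treat a missing
# count as zero events for that admission.
keys = ['SUBJECT_ID', 'HADM_ID']
analytical = (admissions
              .merge(rollup(labevents, 'NUMLABEVENTS'), how='left', on=keys)
              .merge(rollup(prescriptions, 'NUMRX'), how='left', on=keys)
              .fillna(0))
print(analytical)
```

A left join keeps every admission even when a table has no events for it, which is why the missing counts are filled with 0 afterwards.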

Code

- Import required libraries and read csv files

- Function to roll up tables

- Merge all tables

- Exploratory Analysis

Import required libraries and read csv files

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

import warnings

warnings.filterwarnings('ignore')

patients = pd.read_csv("data/patients.csv")

admissions = pd.read_csv("data/admissions.csv")

callout = pd.read_csv("data/callout.csv")

cptevents = pd.read_csv("data/cptevents.csv")

diagnosis = pd.read_csv("data/diagnoses_icd.csv")

outputevents = pd.read_csv("data/outputevents.csv")

rx = pd.read_csv("data/prescriptions.csv")

procevents = pd.read_csv("data/procedureevents_mv.csv")

microlabevents = pd.read_csv("data/microbiologyevents.csv")

proc = pd.read_csv("data/procedures_icd.csv")

transfers = pd.read_csv("data/transfers.csv")

inputevents = pd.read_csv("data/inputevents_cv.csv")

labevents = pd.read_csv("data/labevents.csv")

noteevents = pd.read_csv("data/noteevents.csv")

Function to roll up tables

def rollup_sub_adm(df,col):

    df=df.groupby(['SUBJECT_ID','HADM_ID']).agg({'ROW_ID':'count'})

    df.reset_index(inplace=True)

    df.columns=['SUBJECT_ID','HADM_ID',col]

    print(col,":",df.shape)

    return df

callout=rollup_sub_adm(callout,'NUMCALLOUT')

cptevents=rollup_sub_adm(cptevents,'NUMCPTEVENTS')

diagnosis=rollup_sub_adm(diagnosis,'NUMDIAGNOSIS')

outputevents=rollup_sub_adm(outputevents,'NUMOUTEVENTS')

rx=rollup_sub_adm(rx,'NUMRX')

procevents=rollup_sub_adm(procevents,'NUMPROCEVENTS')

microlabevents=rollup_sub_adm(microlabevents,'NUMMICROLABEVENTS')

proc=rollup_sub_adm(proc,'NUMPROC')

transfers=rollup_sub_adm(transfers,'NUMTRANSFERS')

inputevents=rollup_sub_adm(inputevents,'NUMINPUTEVENTS')

labevents=rollup_sub_adm(labevents,'NUMLABEVENTS')

noteevents=rollup_sub_adm(noteevents,'NUMNOTEVENTS')

NUMCALLOUT : (28732, 3)

NUMCPTEVENTS : (44148, 3)

NUMDIAGNOSIS : (58976, 3)

NUMOUTEVENTS : (52008, 3)

NUMRX : (50216, 3)

NUMPROCEVENTS : (21894, 3)

NUMMICROLABEVENTS : (48740, 3)

NUMPROC : (52243, 3)

NUMTRANSFERS : (58976, 3)

NUMINPUTEVENTS : (31970, 3)

NUMLABEVENTS : (58151, 3)

NUMNOTEVENTS : (58361, 3)

Merge all tables

# .copy() avoids pandas' SettingWithCopyWarning when MARITAL_STATUS is filled in below

mortality=admissions[['SUBJECT_ID','HADM_ID','ADMISSION_TYPE','MARITAL_STATUS','ETHNICITY','HOSPITAL_EXPIRE_FLAG']].copy()

mortality.loc[pd.isnull(mortality['MARITAL_STATUS']),'MARITAL_STATUS'] ='UNKNOWN (DEFAULT)'

mortality = mortality.merge(patients[['SUBJECT_ID','GENDER']],how='left',on='SUBJECT_ID')

mortality = mortality.merge(callout,how='left',on=['SUBJECT_ID','HADM_ID'])

mortality = mortality.merge(cptevents,how='left',on=['SUBJECT_ID','HADM_ID'])

mortality = mortality.merge(diagnosis,how='left',on=['SUBJECT_ID','HADM_ID'])

mortality = mortality.merge(outputevents,how='left',on=['SUBJECT_ID','HADM_ID'])

mortality = mortality.merge(rx,how='left',on=['SUBJECT_ID','HADM_ID'])

mortality = mortality.merge(procevents,how='left',on=['SUBJECT_ID','HADM_ID'])

mortality = mortality.merge(microlabevents,how='left',on=['SUBJECT_ID','HADM_ID'])

mortality = mortality.merge(proc,how='left',on=['SUBJECT_ID','HADM_ID'])

mortality = mortality.merge(transfers,how='left',on=['SUBJECT_ID','HADM_ID'])

mortality = mortality.merge(inputevents,how='left',on=['SUBJECT_ID','HADM_ID'])

mortality = mortality.merge(labevents,how='left',on=['SUBJECT_ID','HADM_ID'])

mortality = mortality.merge(noteevents,how='left',on=['SUBJECT_ID','HADM_ID'])

mortality = mortality.fillna(0)

# Exporting data

mortality.to_csv('mortality_full_data.csv',index=False)

Exploratory Analysis

mortality.shape

(58976, 19)

mortality.columns

Index(['SUBJECT_ID', 'HADM_ID', 'ADMISSION_TYPE', 'MARITAL_STATUS',

'ETHNICITY', 'HOSPITAL_EXPIRE_FLAG', 'GENDER', 'NUMCALLOUT',

'NUMCPTEVENTS', 'NUMDIAGNOSIS', 'NUMOUTEVENTS', 'NUMRX',

'NUMPROCEVENTS', 'NUMMICROLABEVENTS', 'NUMPROC', 'NUMTRANSFERS',

'NUMINPUTEVENTS', 'NUMLABEVENTS', 'NUMNOTEVENTS'],

dtype='object')

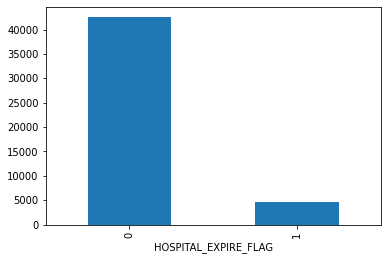

mortality.groupby('HOSPITAL_EXPIRE_FLAG').size().plot.bar()

plt.show()

mortality.groupby('GENDER').size().plot.bar()

plt.show()

mortality.groupby('MARITAL_STATUS').size().plot.bar()

plt.show()

mortality.groupby('ADMISSION_TYPE').size().plot.bar()

plt.show()

mortality.groupby('NUMLABEVENTS').size().plot.hist(bins=50)

plt.show()

mortality.dtypes

SUBJECT_ID int64

HADM_ID int64

ADMISSION_TYPE object

MARITAL_STATUS object

ETHNICITY object

HOSPITAL_EXPIRE_FLAG int64

GENDER object

NUMCALLOUT float64

NUMCPTEVENTS float64

NUMDIAGNOSIS int64

NUMOUTEVENTS float64

NUMRX float64

NUMPROCEVENTS float64

NUMMICROLABEVENTS float64

NUMPROC float64

NUMTRANSFERS int64

NUMINPUTEVENTS float64

NUMLABEVENTS float64

NUMNOTEVENTS float64

dtype: object

This use case will be used as the classification problem in the model compatibility module, and in the statistical similarity and privacy risk modules.

Synthetic data generation is the state of the art in reducing re-identification risk. As observed earlier, data anonymization is effective but reduces utility, and differential privacy adds only a small amount of noise but has very poor model compatibility. Synthetic data, however, can be tuned to add privacy without losing utility or exposing individual data points. Because the data does not represent any real entity, disclosure of sensitive private data is eliminated. If information in the released synthetic data matches a real entity that participated in the original data, it is purely a coincidence, which gives individuals plausible deniability.

A synthetic dataset is a repository of data that is generated programmatically.

- It can be numerical, binary, or categorical (ordinal or non-ordinal),

- The number of features and length of the dataset should be arbitrary

- It should preferably be random, and the user should be able to choose from a wide variety of statistical distributions on which to base the data, i.e. the underlying random process can be precisely controlled and tuned,

- If it is used for classification algorithms, then the degree of class separation should be controllable to make the learning problem easy or hard

- Random noise can be interjected in a controllable manner

- For a regression problem, a complex, non-linear generative process can be used for sourcing the data

To take advantage of a GPU for faster training of neural networks, the system must be equipped with a CUDA-enabled GPU card with CUDA compute capability 3.5 or higher. See the list of CUDA-enabled GPU cards.

The TensorFlow GPU installation guide covers the same setup: https://www.tensorflow.org/install/gpu

To install the latest version of TensorFlow:

pip install tensorflow

For releases 1.15 and older, CPU and GPU packages are separate:

pip install tensorflow==1.15 # CPU

pip install tensorflow-gpu==1.15 # GPU

The following NVIDIA® software must be installed on your system:

- NVIDIA® GPU drivers —CUDA 10.1 requires 418.x or higher.

- CUDA® Toolkit —TensorFlow supports CUDA 10.1 (TensorFlow >= 2.1.0)

- CUPTI ships with the CUDA Toolkit.

- cuDNN SDK (>= 7.6)

- (Optional) TensorRT 6.0 to improve latency and throughput for inference on some models.

Finally, add the CUDA, CUPTI, and cuDNN installation directories to the %PATH% environment variable. For example, if the CUDA Toolkit is installed to C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.1 and cuDNN to C:\tools\cuda, update your %PATH% to match:

SET PATH=C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.1\bin;%PATH%

SET PATH=C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.1\extras\CUPTI\libx64;%PATH%

SET PATH=C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.1\include;%PATH%

SET PATH=C:\tools\cuda\bin;%PATH%

To check whether TensorFlow is properly configured with the GPU, run the following code in the Python console:

from tensorflow.python.client import device_lib

print(device_lib.list_local_devices())

If properly configured, the command lists all the devices available for TensorFlow to use.

Sample Output [name: "/cpu:0" device_type: "CPU" memory_limit: 268435456 locality { } incarnation: 4402277519343584096, name: "/gpu:0" device_type: "GPU" memory_limit: 6772842168 locality { bus_id: 1 } incarnation: 7471795903849088328 physical_device_desc: "device: 0, name: GeForce GTX 1070, pci bus id: 0000:05:00.0"]

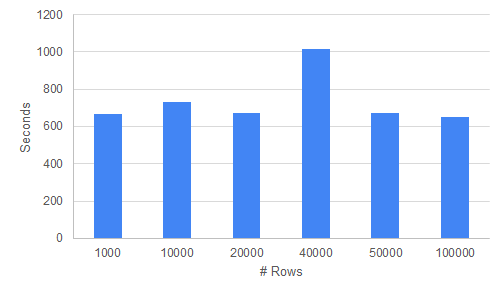

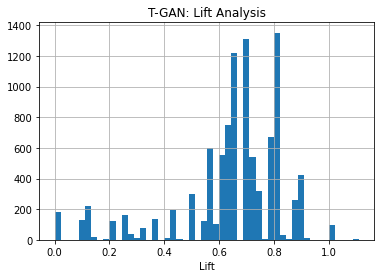

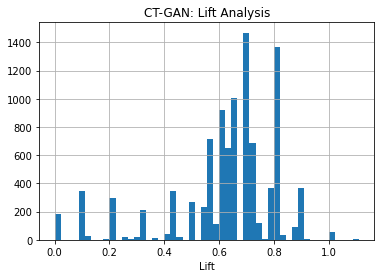

In this section we check how the execution time of the two algorithms, TGAN and CTGAN, grows as the data size increases.

When testing both modules, the most time-consuming part of the process is training the TGAN and CTGAN models. This is understandable, as a GAN is two neural networks competing to outdo each other. This back and forth, together with the backpropagation that adjusts the weights, requires a lot of time and resources, and we wanted to measure the time taken in case the algorithms need to be scaled for future applications.

We approach testing these algorithms in the following way. We work with two data types, continuous and categorical, and want to record execution times for both types of columns. We also want to observe how the training time varies with an increasing number of rows and columns.

We take two datasets, each with 100,000 rows and 25 columns. One dataset has only categorical columns and the other has only numeric columns. We vary the number of rows and columns and time both algorithms.
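A timing experiment of this kind can be sketched with a small harness. This is only an illustration: `time_training`, `make_rows`, and `dummy_fit` are hypothetical names, and `dummy_fit` is a stand-in for the real TGAN or CTGAN training call, which is not shown here.

```python
import time


def time_training(fit, make_data, sizes):
    """Return {size: seconds} for fit(make_data(size)) at each size."""
    timings = {}
    for size in sizes:
        data = make_data(size)            # data creation is not timed
        start = time.perf_counter()
        fit(data)
        timings[size] = time.perf_counter() - start
    return timings


# Stand-ins: replace with the real data slicing and the real training call.
def make_rows(n_rows, n_cols=25):
    return [[float(i * j % 7) for j in range(n_cols)] for i in range(n_rows)]


def dummy_fit(rows):
    return sum(sum(row) for row in rows)


row_timings = time_training(dummy_fit, make_rows, sizes=[1_000, 10_000])
for n_rows, seconds in row_timings.items():
    print(f"{n_rows} rows: {seconds:.4f} s")
```

The same harness can vary the number of columns by passing a `make_data` function that fixes the rows and changes the column count.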

[Figures: training time against increasing number of rows and increasing number of columns]

- For the CTGAN algorithm, the training time is affected by both the number of rows and the number of columns in the data. Categorical data takes much less time to train than continuous data.

- For the TGAN algorithm, the training time is mainly affected by the number of columns in the dataset. Increasing the number of rows from 1k to 100k does not lead to increased training time. Categorical data takes much less time to train than continuous data.

- The CTGAN algorithm takes much less time to train than the TGAN algorithm.

The training times are shown below.

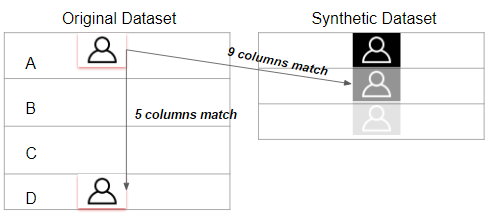

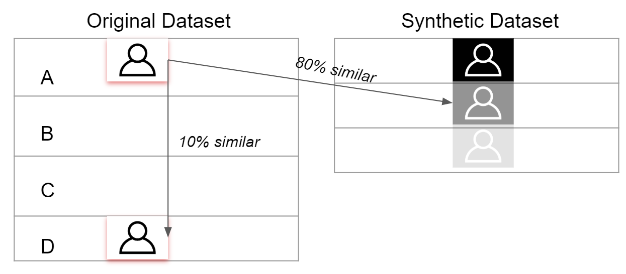

To calculate the similarity between two tables, our methodology recasts the problem as measuring how different the synthetic data generated by the GAN algorithm is from the original data. The smaller the difference between the two data sets, the more similar they are.

In this methodology, the similarity between the synthetic data and the original data is evaluated from two perspectives:

- corresponding pairs of columns (column-wise)

- the relationships across multiple columns in the table (table-wise)

The diagram of the metric methodology is shown below.

Description and Rationale for KL-divergence

Kullback-Leibler divergence (KL-divergence) can be used to measure the distribution similarity between corresponding pairs of columns in the original and synthetic tables. It quantifies how much information is lost when the synthetic column distribution is used to approximate the original one. More specifically, KL-divergence is a slight modification of the formula for entropy: in our case, it is the expectation, under the original column distribution (p), of the log difference between the probability of the data under p and under the synthetic column distribution (q). The formulas are below.

For the probability distribution of a continuous variable:

$$D_{KL}(p \,\|\, q) = \int p(x) \log \frac{p(x)}{q(x)} \, dx$$

(A continuous random variable is defined as one which takes an infinite number of possible values.)

For the probability distribution of a discrete variable:

$$D_{KL}(p \,\|\, q) = \sum_{i} p(x_i) \log \frac{p(x_i)}{q(x_i)}$$

(A discrete random variable is defined as one which may take on only a countable number of distinct values and thus can be quantified.)

KL divergence can be used to measure continuous or discrete probability distributions, but in the former case the integral over the events is calculated instead of the sum over the probabilities of the discrete events. The input must sum to 1; otherwise it is not a proper probability distribution. A transformation step is therefore required before calculating the KL divergence, converting the original column-wise data to an array of probability values for the different events.

Why different techniques required for discrete and continuous data:

Unlike discrete data, it is impossible to assign a specific and accurate probability value to each data point in a sample of continuous data. In our use case, 'Length of Stay' is the only continuous variable, because it accounts for both the day and the time of day. We round the length-of-stay values to integers to make the similarity of the probability distributions easier to calculate. The probability values are ordered by length of stay, and any length of stay that does not appear in the rounded data is assigned a probability of 0. For example, if no patient stays in the ICU for about 6 days, the value "6" is assigned a probability of 0.

Limitation of KL-divergence:

Even though KL divergence is a good measure for common cases, it can only be applied to two probability distributions of the same length. When the generated discrete column has fewer events than the original one, all events must still be included in the probability distribution of the synthetic column, with 0 as the probability of each omitted event. However, according to the KL-divergence formula, the synthetic distribution (q) must not contain zero for any event that has non-zero probability in the original distribution (p); otherwise the result is infinite.
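The transformation step and the zero-probability limitation can be illustrated with a minimal sketch. The function names are hypothetical, the rounded length-of-stay values are made up, and the natural logarithm is an assumption of this sketch.

```python
import numpy as np


def column_probabilities(original, synthetic):
    """Frequency probabilities of both columns over the union of their values."""
    events = sorted(set(original) | set(synthetic))

    def probs(column):
        column = list(column)
        counts = np.array([column.count(e) for e in events], dtype=float)
        return counts / len(column)     # omitted events get probability 0

    return probs(original), probs(synthetic)


def kl_divergence(p, q):
    """Discrete KL(p || q) in nats; infinite if q is 0 where p is positive."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    support = p > 0                     # terms with p = 0 contribute nothing
    if np.any(q[support] == 0):
        return float('inf')
    return float(np.sum(p[support] * np.log(p[support] / q[support])))


# Rounded length-of-stay values (made-up example data).
original = [1, 2, 2, 3, 3, 3, 6]
synthetic = [1, 2, 2, 3, 3, 3, 3]       # no stay of about 6 days

p, q = column_probabilities(original, synthetic)
print(kl_divergence(p, q))              # infinite: q is 0 for the event 6
print(kl_divergence(q, p))              # finite in the other direction
```

The example also shows that KL divergence is not symmetric, so the order of the original and synthetic distributions matters.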

Description and Rationale for Cosine Similarity

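Cosine similarity can solve the issue mentioned above, because it remains finite when one of the probabilities is zero. Cosine similarity is a measure of similarity between two non-zero vectors of an inner product space that measures the cosine of the angle between them. The formula, referenced from the Wikipedia page, is shown below, where A and B in our case are the arrays of frequency probabilities of each unique value in the synthetic column and the original column respectively:

$$\cos(\theta) = \frac{A \cdot B}{\|A\| \, \|B\|} = \frac{\sum_{i=1}^{n} A_i B_i}{\sqrt{\sum_{i=1}^{n} A_i^2} \, \sqrt{\sum_{i=1}^{n} B_i^2}}$$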

Limitations:

For some numerical data such as decimal data that ranges only from 0 to 2, it is hard to be rounded up or divided by bins, which will create biases in statistical similarity measurement. In this case, we propose to randomly select the same number of observations as the original data contains for calculating the cosine similarity.
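As a small illustration of column-wise cosine similarity, the sketch below compares two arrays of frequency probabilities, one of which contains a zero. The probability values are made up and the function name is hypothetical.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Frequency probabilities over the same ordered events (made-up values).
p_original = [1 / 7, 2 / 7, 3 / 7, 1 / 7]
p_synthetic = [1 / 7, 2 / 7, 4 / 7, 0.0]   # one event never generated

score = cosine_similarity(p_original, p_synthetic)
print(round(score, 4))
```

Unlike KL divergence, the score stays finite even though the synthetic column assigns zero probability to one event.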

Dimensionality reduction techniques are proposed to compress high-dimensional table in a way that generates a lower dimensional representation of original table, which further enables similarity score calculation and visualization of the relationships among columns in a table.

2.1.1 Autoencoder

Description and Rationale for Autoencoder

An autoencoder is a data compression algorithm and has long been considered a potential avenue for solving the problem of unsupervised learning, i.e. the learning of useful representations without the need for labels. According to the Keras team, autoencoders are not a true unsupervised learning technique (which would imply a different learning process altogether); they are a self-supervised technique, a specific instance of supervised learning where the targets are exactly the input data or are generated from the input data.

In our case, the aim of an autoencoder is to learn a representation (embedding) of the relationships among multiple features in our table by setting the input and target data to be the same. This works because neural networks can gradually approximate any function that maps inputs to outputs through an iterative optimization process called training. The embedding space has fewer dimensions (columns) than the feature data, in a way that captures some latent structure of the feature data set.

To get a representation(embedding) of a table from an Autoencoder:

Step1: Train an Autoencoder

Autoencoder can be broken in to 2 parts:

- Encoder: this part of the network compresses the input into an assigned number of vectors, which in our case for tabular data is the number of columns. The space represented by these fewer number of vectors is often called the latent-space or bottleneck. These compressed data that represent the original input are together called an “embedding” of the input.

- Decoder: this part of the network tries to reconstruct the input using only the embedding of the input. When the decoder is able to reconstruct the input exactly as it was fed to the encoder, you can say that the encoder is able to produce the best embeddings for the input with which the decoder is able to reconstruct well!

Note: In order to make sure both synthetic table and original table are transformed exactly in the same process, we will train the auto-encoder using the original dataset and then predict on the synthetic dataset using the model built.

Step2: Extracting Embeddings from Autoencoder Network

After training the Autoencoder, extract the embedding for an example from the network. Extract the embedding by using the feature data as input, and read the outputs of the encoder layer. The embedding should contain information about reproducing the original input data, but stored in a compact way.

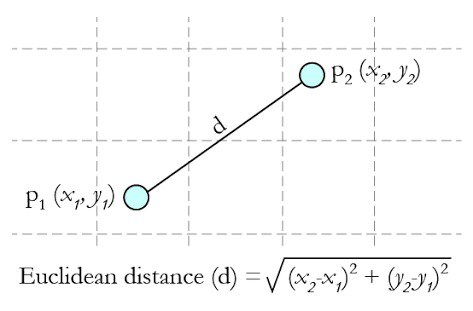
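The two steps can be sketched with a very small autoencoder written in plain NumPy. This is an assumption-heavy illustration rather than the network used in this project: the single tanh hidden layer, the learning rate, the function names, and the randomly generated "original" and "synthetic" tables are all hypothetical choices made to keep the example self-contained.

```python
import numpy as np


def train_autoencoder(X, n_hidden=2, epochs=500, lr=0.05, seed=0):
    """Fit a one-hidden-layer autoencoder by gradient descent on squared error."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W_enc = rng.normal(scale=0.1, size=(d, n_hidden))
    b_enc = np.zeros(n_hidden)
    W_dec = rng.normal(scale=0.1, size=(n_hidden, d))
    b_dec = np.zeros(d)
    losses = []
    for _ in range(epochs):
        Z = np.tanh(X @ W_enc + b_enc)            # encoder output (embedding)
        X_hat = Z @ W_dec + b_dec                 # decoder reconstruction
        err = X_hat - X
        losses.append(0.5 * np.mean(np.sum(err ** 2, axis=1)))
        d_out = err / n                           # gradient w.r.t. X_hat
        d_hid = (d_out @ W_dec.T) * (1 - Z ** 2)  # backpropagate through tanh
        W_dec -= lr * (Z.T @ d_out)
        b_dec -= lr * d_out.sum(axis=0)
        W_enc -= lr * (X.T @ d_hid)
        b_enc -= lr * d_hid.sum(axis=0)
    return (W_enc, b_enc), losses


def encode(X, encoder):
    """Step 2: read the output of the encoder layer as the embedding."""
    W_enc, b_enc = encoder
    return np.tanh(X @ W_enc + b_enc)


# Made-up tables: five correlated numeric columns and a noisy copy.
rng = np.random.default_rng(1)
original = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 5))
synthetic = original + rng.normal(scale=0.1, size=original.shape)

# Step 1: train on the original table only, then reuse the same scaling and
# encoder for the synthetic table so both go through the same transformation.
mean, std = original.mean(axis=0), original.std(axis=0)
encoder, losses = train_autoencoder((original - mean) / std)
emb_original = encode((original - mean) / std, encoder)
emb_synthetic = encode((synthetic - mean) / std, encoder)
print(emb_original.shape, emb_synthetic.shape)
```

Setting `n_hidden=1` gives the one-dimensional embedding described for the similarity score, while a larger value suits the visualization route.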

For the purpose of generating a similarity score, we would assign the dimension of embedding to be 1 so that we can use Cosine similarity or Euclidean distance to calculate the similarity. However, for visualization, we can choose either using autoencoder to compress both tables separately to a lower dimensional (but more than 2) embedding and then use PCA to further compress the data to 2 dimension or using autoencoder to compress both tables directly to 2 dimensional embeddings. In this document, we will demonstrate later with the former method for the purpose of metric diversity.

How to quantify the similarity between two embeddings?

We now have embeddings for the pair of tables. A similarity measure takes these embeddings and returns a number measuring their similarity. Remember that embeddings are simply vectors of numbers. To find the similarity between two vectors A=[a1,a2,...,an] and B=[b1,b2,...,bn], we can use the cosine similarity mentioned before. It is a better measure than Euclidean distance because it compares the orientation of the vectors in a high-dimensional space rather than their magnitude. In general the score ranges from -1 to 1; for vectors with non-negative components it ranges from 0 to 1, where 0 means that the two vectors are oriented completely differently and 1 means that they are oriented identically. This makes it easier to compare the performance of different GAN algorithms.

Limitations:

- The challenge in applying an autoencoder to tabular data is that each column has its own type of distribution. In other words, data types such as categories, ID numbers, ranks, and binary values are all combined in one sample table.

- An autoencoder assumes that all features (columns) in the table determine the similarity to the same degree, so it is not the optimal choice when certain features could be more important than others in determining similarity.

- Different bin settings for calculating the frequency probabilities result in different cosine similarity scores, so the metric is not robust enough to give an accurate and stable result.

2.1.2 PCA and t-SNE

For visualization, PCA and t-SNE are both commonly used dimensionality reduction techniques. In our case they can generate lower-dimensional data so that the structures of different tables can be visualized and compared.

Description and Rationale for PCA

Principal Component Analysis (PCA) is a linear feature extraction technique. It performs a linear mapping of the data to a lower-dimensional space in such a way that the variance of the data in the low-dimensional representation is maximized. It does so by calculating the eigenvectors from the covariance matrix. The eigenvectors that correspond to the largest eigenvalues (the principal components) are used to reconstruct a significant fraction of the variance of the original data.
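The eigenvector computation described above can be sketched with NumPy's singular value decomposition, which yields the same principal axes as the covariance matrix. The function names and the random example tables are hypothetical, and fitting on the original table and reusing the projection for the synthetic table is an assumption carried over from the autoencoder note.

```python
import numpy as np


def fit_pca(X, n_components=2):
    """Return column means, top principal axes, and their explained variance ratio."""
    mean = X.mean(axis=0)
    # Rows of Vt are eigenvectors of the covariance matrix, ordered by
    # decreasing singular value and therefore by decreasing variance.
    _, singular_values, Vt = np.linalg.svd(X - mean, full_matrices=False)
    explained = singular_values ** 2 / np.sum(singular_values ** 2)
    return mean, Vt[:n_components], explained[:n_components]


def project(X, mean, components):
    """Linear mapping of X onto the principal axes."""
    return (X - mean) @ components.T


# Made-up tables: an "original" table and a noisy "synthetic" copy.
rng = np.random.default_rng(0)
original = rng.normal(size=(100, 5)) @ rng.normal(size=(5, 5))
synthetic = original + rng.normal(scale=0.2, size=original.shape)

mean, components, explained = fit_pca(original)
original_2d = project(original, mean, components)
synthetic_2d = project(synthetic, mean, components)
print(explained)
```

Projecting both tables with the same axes places them in one coordinate system, so the two scatter plots can be compared directly.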

Description and Rationale for t-SNE

t-Distributed Stochastic Neighbor Embedding (t-SNE) is a tool to visualize high-dimensional data. It converts similarities between data points to joint probabilities and tries to minimize the Kullback-Leibler divergence between the joint probabilities of the low-dimensional embedding and the high-dimensional data.
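For reference, the objective described above is usually written as follows. The notation is an assumption introduced here: $p_{ij}$ is the joint probability for points $i$ and $j$ in the high-dimensional space, $q_{ij}$ is the corresponding probability in the low-dimensional embedding, and $y_i$ is the embedded position of point $i$.

$$
C = \mathrm{KL}(P \,\|\, Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}},
\qquad
q_{ij} = \frac{\left(1 + \lVert y_i - y_j \rVert^2\right)^{-1}}{\sum_{k \neq l} \left(1 + \lVert y_k - y_l \rVert^2\right)^{-1}}
$$

The heavy-tailed Student t kernel in $q_{ij}$ is what gives the method the "t" in its name.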

Why PCA over t-SNE?

- According to a blog post that tried both PCA and t-SNE, t-SNE is computationally heavy because of its probabilistic approach. Since t-SNE scales quadratically in the number of objects N, its applicability is limited to data sets with only a few thousand input objects; beyond that, learning becomes too slow to be practical (and the memory requirements become too large).

- According to the scikit-learn documentation, the t-SNE cost function is not convex, so different initializations can lead to different results. This makes two visualizations of two tables not comparable, even when they are generated by the same transformation function. Below is an example of t-SNE plots using the same transformation on two different datasets; they are too different to compare because of the different initializations.

Therefore, in this document we use PCA as the technique for visual comparison. t-SNE is still a great technique for visualizing high-dimensional data because it is a probabilistic, non-linear technique, whereas PCA is a purely linear one. Linear dimensionality reduction algorithms such as PCA concentrate on placing dissimilar data points far apart in the lower-dimensional representation. But to represent high-dimensional data on a low-dimensional, non-linear manifold, similar data points must be represented close together, which is something t-SNE does and PCA does not.

Why Autoencoder + PCA over PCA?

If a dataset contains a lot of noise, the noise can create enough variation to distort the choice of the best principal components. Applying a data compression step first helps capture the major structure of the data and avoids this issue.

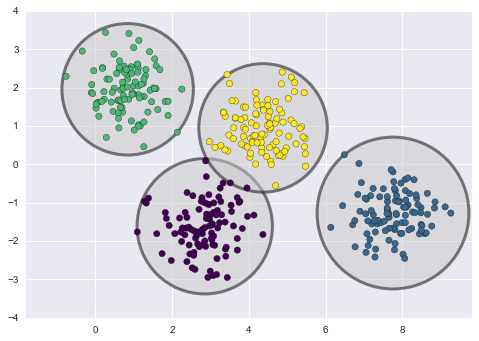

The combination of an Autoencoder and a dimensionality reduction technique such as PCA is one way to measure the statistical similarity between two tables. The Autoencoder is trained in a self-supervised manner: the same data serves as both input and output of a special neural network, so that the network learns a representation of the input data with the desired number of dimensions. But how does the synthetic data perform in an unsupervised algorithm compared to the original data? Here we use clustering, a classical unsupervised technique that is widely used in real business settings, to evaluate statistical similarity from another perspective.

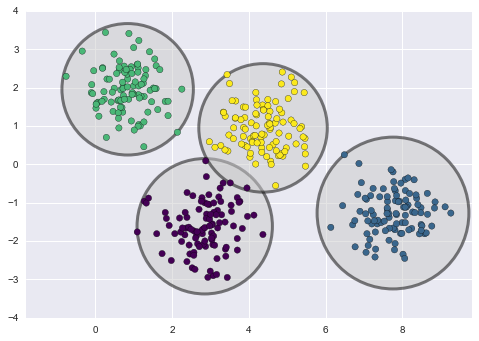

Description and Rationale for Clustering

The basic idea of clustering is to organize data objects (in our case, patients) into homogeneous groups called clusters. The desired properties of a clustering result are high intra-cluster similarity (among data objects within each cluster) and low inter-cluster similarity (between different clusters).

We use the most common approach, the k-means algorithm, for the clustering evaluation. The idea of k-means is as follows:

- Choose k data points (objects) at random (they represent the initial cluster centers, namely the means)

- Assign the remaining points to the cluster to which they are most similar (based on the distance from the cluster mean)

- Update the means based on the newly formed clusters

- Repeat the assignment and update steps until the means converge.
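The steps above can be sketched with NumPy as follows. This is a simplified illustration, not the implementation used for the results in this document: the function `kmeans`, its parameters, and the two-blob toy data are assumptions, and every point (including the initial centers) is reassigned on each pass.

```python
import numpy as np

def kmeans(X, k, n_iter=100, seed=0):
    """Basic k-means with Euclidean distance."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    # Choose k data points at random as the initial means
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign each point to the nearest mean (squared Euclidean distance)
        dists = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Update the means; an empty cluster keeps its previous center
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        # Stop once the means no longer move
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return centers, labels

# Hypothetical data: two well-separated groups of 50 points each
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0, 1, size=(50, 2)),
               rng.normal(10, 1, size=(50, 2))])
centers, labels = kmeans(X, k=2)
```

Running the same function on the original and the synthetic table (with the same `k`) yields two sets of centers that can then be compared.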

Step 1: Data Transformation

We use Euclidean distance, the most popular and widely used distance metric for numeric data, to calculate the distance between data objects. Therefore, we need to convert string values into numerical values using one-hot encoding. One-hot encoding makes the cluster results harder to interpret because it enlarges the feature space, but that is acceptable here because we do not need to interpret the clusters.
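A minimal sketch of this transformation, assuming a single hypothetical categorical column (the values below are made up for illustration), shows how one-hot encoding turns categories into vectors on which Euclidean distance is defined:

```python
import numpy as np

def one_hot(values):
    """One-hot encode a 1-D array of category labels."""
    values = np.asarray(values)
    categories = np.unique(values)  # sorted unique labels
    encoded = (values[:, None] == categories[None, :]).astype(float)
    return encoded, categories

# Hypothetical categorical column, for illustration only
insurance = np.array(["Medicare", "Private", "Medicare", "Medicaid"])
encoded, categories = one_hot(insurance)

d_same = np.linalg.norm(encoded[0] - encoded[2])  # same category
d_diff = np.linalg.norm(encoded[0] - encoded[1])  # different categories
```

Two rows with the same category are at distance 0, and any two rows with different categories are at the same distance, so no artificial ordering is imposed on the categories.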

Step 2: Define the number of Clusters (Elbow Curve) and clustering modeling

The k-means clustering algorithm requires the number of clusters (k) to be assigned manually. The elbow method is the most common method to validate the number of clusters. The idea is to run k-means clustering on the dataset for a range of values of k and, for each value of k, calculate the sum of squared errors (SSE). We then plot a line chart of the SSE against k. If the line chart looks like an arm, the "elbow" on the arm marks the best value of k. We want a small SSE, but the SSE tends to decrease toward 0 as we increase k. So our goal is to choose a small value of k that still has a low SSE, and the elbow usually marks the point where increasing k starts to give diminishing returns.
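The elbow computation can be sketched as follows. The helper `kmeans_sse`, the single random initialization per k, and the three-group toy data are assumptions made for illustration; a production run would typically use several restarts per k.

```python
import numpy as np

def kmeans_sse(X, k, n_iter=100, seed=0):
    """Run a basic k-means and return the sum of squared errors (SSE)."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        dists = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    # SSE: squared distance from every point to its nearest center
    dists = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return float(dists.min(axis=1).sum())

# Hypothetical data: three well-separated groups of 60 points each
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(loc, 1.0, size=(60, 2))
               for loc in ([0, 0], [10, 0], [5, 9])])

# SSE for k = 1..6; plotting these values against k gives the elbow curve
sse = {k: kmeans_sse(X, k) for k in range(1, 7)}
```

Computing `sse` separately for the original and the synthetic table and plotting both curves on the same axes gives the comparison described in Step 3.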

Step 3: Evaluating Clustering result

We will evaluate the similarity of the table by comparing the visualization of the elbow curve and centers of clusters. By comparing the number of clusters indicated by elbow curves and how different centers of different clusters are between the original table and synthetic table, we can get a sense of how similar the features are between data in the original table and the synthetic one.

For discrete columns:

Step 1: Convert original data to probability values

Step 2: Calculate the Cosine Similarity and KL divergence (if applicable)

For continuous columns:

Step 1: Transform numerical data into values of bins

Step 2: Convert original data to probability values

Step 3: Calculate the Cosine Similarity

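```python
import numpy as np
from scipy.spatial import distance

# p and q must be probability distributions: each must sum to 1,
# and both must list the same categories (or bins) in the same order.
def kl_divergence(p, q):
    # Terms with p == 0 contribute 0; the result is infinite if q == 0 where p > 0
    return np.sum(np.where(p != 0, p * np.log(p / q), 0))

def cos_similarity(p, q):
    # scipy returns the cosine distance, so subtract it from 1
    return 1 - distance.cosine(p, q)
```

For discrete data: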

```python
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns

def discret_probs(column, categories):
    # Relative frequency of each category, in the order given by `categories`,
    # so that the real and synthetic vectors line up position by position
    counts = column.value_counts()
    return np.array([counts.get(c, 0) / len(column) for c in categories])

def cat_plot(colname, realdata, syndata):
    # Use the union of categories so both vectors have the same length and order
    categories = pd.concat([realdata[colname], syndata[colname]]).dropna().unique()
    real_p = discret_probs(realdata[colname], categories)
    syn_p = discret_probs(syndata[colname], categories)
    real_plt = pd.DataFrame({colname: realdata[colname], 'table': 'real'})
    # Fixed: the synthetic rows must come from syndata, not realdata
    syn_plt = pd.DataFrame({colname: syndata[colname], 'table': 'synthetic'})
    df_plt = pd.concat([real_plt, syn_plt], axis=0, sort=False)
    kl = kl_divergence(real_p, syn_p)
    cos_sim = cos_similarity(real_p, syn_p)
    plt.figure(figsize=[16, 6])
    plt.title('KL-divergence = %1.3f , Cosine Similarity = %1.3f' % (kl, cos_sim),
              fontsize=16)
    sns.countplot(x=colname, hue="table", data=df_plt)
    plt.suptitle('Frequency Distribution Comparison (Column: {})'.format(colname),
                 fontsize=20)
    plt.xlabel('Categories of Column: {}'.format(colname), fontsize=14)
    plt.ylabel("Frequency", fontsize=14)

cat_plot('insurance', real_data, syn_data)
```

For continuous data:

```python
# Identify the bin range
max_numlen = max(max(real_data['NUMLABEVENTS']), max(syn_data['NUMLABEVENTS']))
min_numlen = min(min(real_data['NUMLABEVENTS']), min(syn_data['NUMLABEVENTS']))
print('max: ', max_numlen)
print('min: ', min_numlen)
```

Decide the bins yourself:

```python
from scipy.spatial import distance

# The upper bound should extend two steps beyond the maximum value across both vectors
bins = np.arange(-50, 13800, 10)
real_inds = pd.DataFrame(np.digitize(real_data['NUMLABEVENTS'], bins), columns=['inds'])
syn_inds = pd.DataFrame(np.digitize(syn_data['NUMLABEVENTS'], bins), columns=['inds'])

def identify_probs(table, column):
    # Count how many values fall into each bin index (uses the global `bins`)
    counts = table[column].value_counts()
    freqs = {counts.index[i]: counts.values[i] for i in range(len(counts.index))}
    # Bins with no observations get frequency 0 so both tables share the same support
    for i in range(1, len(bins) + 1):
        if i not in freqs:
            freqs[i] = 0
    sorted_freqs = {k: freqs[k] for k in sorted(freqs)}
    probs = [v / len(table[column]) for v in sorted_freqs.values()]
    return sorted_freqs, np.array(probs)

real_p = identify_probs(real_inds, 'inds')[1]
syn_p = identify_probs(syn_inds, 'inds')[1]

def cos_similarity(p, q):
    return 1 - distance.cosine(p, q)

cos_sim = cos_similarity(real_p, syn_p)

def num_plot(colname, realdata, syndata):
    # Relies on the global `cos_sim` and `bins` computed above
    plt.figure(figsize=[16, 6])
    plt.suptitle('Frequency Distribution Comparison (Cosine Similarity: %1.3f )' % cos_sim,
                 fontsize=18)
    plt.subplot(121)
    plt.title('Synthetic Data', fontsize=16)
    # histplot replaces distplot, which is deprecated since seaborn 0.11
    sns.histplot(syndata[colname], color='b', kde=False)
    plt.xlabel('Column: {}'.format(colname), fontsize=14)
    plt.ylabel("Frequency", fontsize=14)
    plt.xlim(min(bins), max(bins))
    plt.subplot(122)
    plt.title('Original Data', fontsize=16)
    sns.histplot(realdata[colname], color='r', kde=False)
    plt.xlabel('Column: {}'.format(colname), fontsize=14)
    plt.ylabel("Frequency", fontsize=14)
    plt.xlim(min(bins), max(bins))
    plt.show()

num_plot('NUMLABEVENTS', real_data, syn_data)
```

Result demo:

- Discrete column demo

KL divergence and frequency distribution of pair-columns on Use case 1 dataset generated by tGAN:

KL divergence and frequency distribution of pair-columns on Use case 1 dataset generated by ctGAN:

- Continuous column demo

KL divergence and frequency distribution of pair-columns on Use case 1 dataset generated by tGAN:

(Note: Some missing values are treated as 0 )

KL divergence and frequency distribution of pair-columns on Use case 1 dataset generated by ctGAN:

KL divergence and frequency distribution of "insurance" pair-columns on Use case 2 dataset generated by tGAN:

KL divergence and frequency distribution of "insurance" pair-columns on Use case 2 dataset generated by ctGAN:

Step 1: Train an Autoencoder

Step 2: Extracting Embeddings from Autoencoder Network

```python
import numpy as np

x_train = np.array(x_train)
x_test = np.array(x_test)

# Flatten the data into vectors
x_train = x_train.reshape((len(x_train), np.prod(x_train.shape[1:])))
x_test = x_test.reshape((len(x_test), np.prod(x_test.shape[1:])))
print(x_train.shape)
print(x_test.shape)
```

```python
from keras.layers import Input, Dense
from keras.models import Model

def modeling_autoencoder(latent_dim, x_train):
    original_dim = x_train.shape[1]

    # this is our input placeholder
    input_data = Input(shape=(original_dim,))
    # "encoded" is the encoded representation of the input
    encoded = Dense(latent_dim, activation='relu')(input_data)
    # "decoded" is the lossy reconstruction of the input
    decoded = Dense(original_dim, activation='sigmoid')(encoded)

    # this model maps an input to its reconstruction
    # (defines a model that turns input_data into the decoded output)
    autoencoder = Model(input_data, decoded)

    #### Create a separate encoder model ####
    # this model maps an input to its encoded representation
    encoder = Model(input_data, encoded)

    #### as well as the decoder model ####
    # create a placeholder for an encoded (assigned # of dimensions) input
    encoded_input = Input(shape=(latent_dim,))
    # retrieve the last layer of the autoencoder model
    decoder_layer = autoencoder.layers[-1]
    # create the decoder model
    decoder = Model(encoded_input, decoder_layer(encoded_input))

    #### Autoencoder model training ####
    # Note: a sigmoid output with binary cross-entropy assumes inputs scaled to [0, 1]
    autoencoder.compile(optimizer='adadelta', loss='binary_crossentropy')
    autoencoder.fit(x_train, x_train,
                    epochs=50,
                    batch_size=256,
                    shuffle=True,
                    validation_split=0.2)

    return encoder, decoder
```

Step 1: Extract 1-dimensional embedding from the model trained for the datasets respectively

Step 2: Transform embedding data to probability values (distribution vector)

Step 3: Calculate the Cosine Similarity between two distribution vectors

trained_encoder = modeling_autoencoder(1, x_train)[0]

encoded_testdata = trained_encoder.predict(x_test)

encoded_traindata = trained_encoder.predict(x_train) ### Decide the bins by yourself:

# The upper bound should be 2 more steps more than the maximum value of both vectors

bins = np.arange(0,2100,20)

real_inds = pd.DataFrame(np.digitize(encoded_traindata, bins), columns = ['inds'])

syn_inds = pd.DataFrame(np.digitize(encoded_testdata, bins), columns = ['inds'])

def identify_probs(table,column):

counts = table[column].value_counts()

freqs = {counts.index[i]: counts.values[i] for i in range(len(counts.index))}

for i in range(1, len(bins)+1):

if i not in freqs.keys():

freqs[i] = 0

sorted_freqs = {}

for k in sorted(freqs.keys()):

sorted_freqs[k] = freqs[k]

probs = []

for k,v in sorted_freqs.items():

probs.append(v/len(table[column]))

return sorted_freqs, np.array(probs)

from scipy.spatial import distance

real_p = identify_probs(real_inds,'inds')[1]

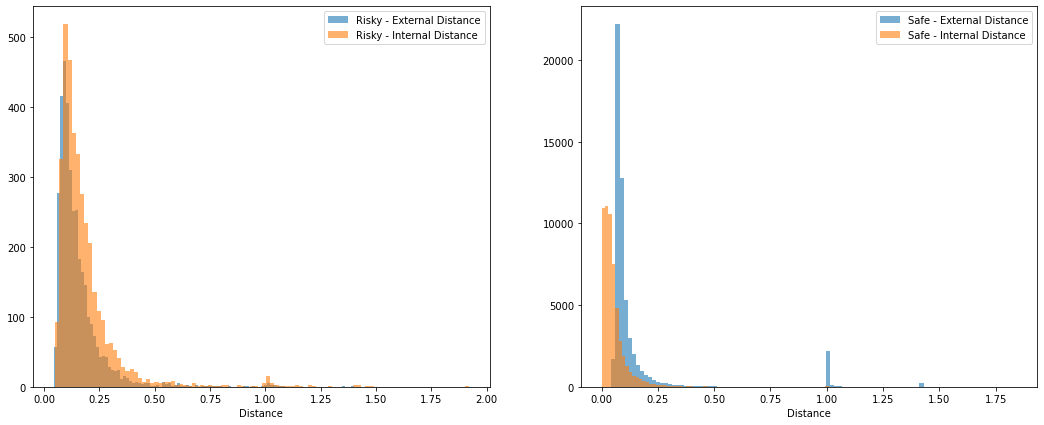

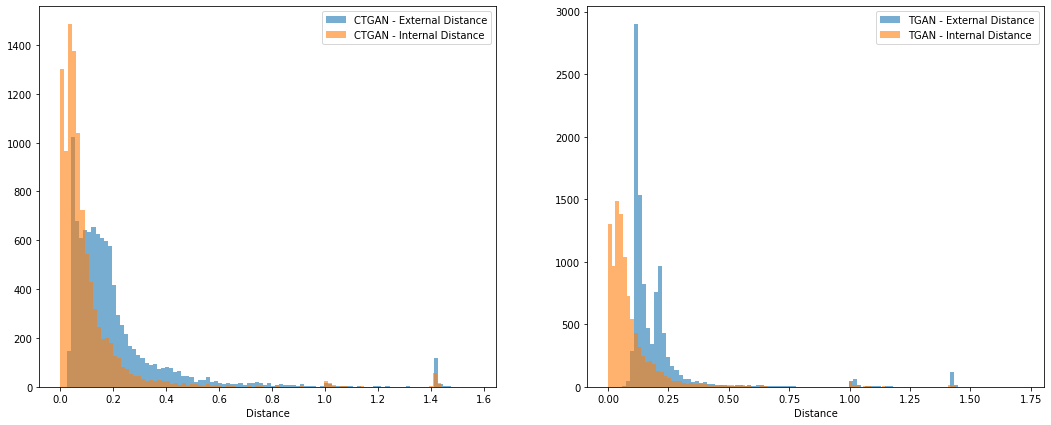

syn_p = identify_probs(syn_inds,'inds')[1]