ExplainX is a model explainability/interpretability framework for data scientists and business users.

Use explainX to understand overall model behavior, explain the "why" behind model predictions, remove biases and create convincing explanations for your business stakeholders.

Essential for:

- Explaining model predictions

- Debugging models

- Detecting biases in data

- Gaining trust of business users

- Successfully deploying AI solutions

explainX helps you answer questions such as:

- Why did my model make a mistake?

- Is my model biased? If yes, where?

- How can I understand and trust the model's decisions?

- Does my model satisfy legal & regulatory requirements?

Dashboard Demo: http://3.128.188.55:8080/

Python 3.5+ | Linux, Mac, Windows

```bash
pip install explainx
```

To install on Windows, first install Microsoft C++ Build Tools and then install the explainX package via pip.
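
To confirm that the installation worked before moving on, a quick check along these lines may help. This is only a sketch: `is_installed` is a hypothetical helper (not part of explainX) that uses the standard library to look up the `explainx` import name used in this README.

```python
import importlib.util


def is_installed(package_name: str) -> bool:
    """Return True if `package_name` can be found on the current Python path."""
    return importlib.util.find_spec(package_name) is not None


if __name__ == "__main__":
    # "explainx" is the import name used throughout this README.
    print("explainx installed:", is_installed("explainx"))
```

If this prints `False`, the package was most likely installed into a different Python environment than the one running the check.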

If you are using a notebook instance in the cloud (AWS SageMaker, Colab, Azure), please follow our step-by-step Cloud Installation Instructions to install and run explainX.

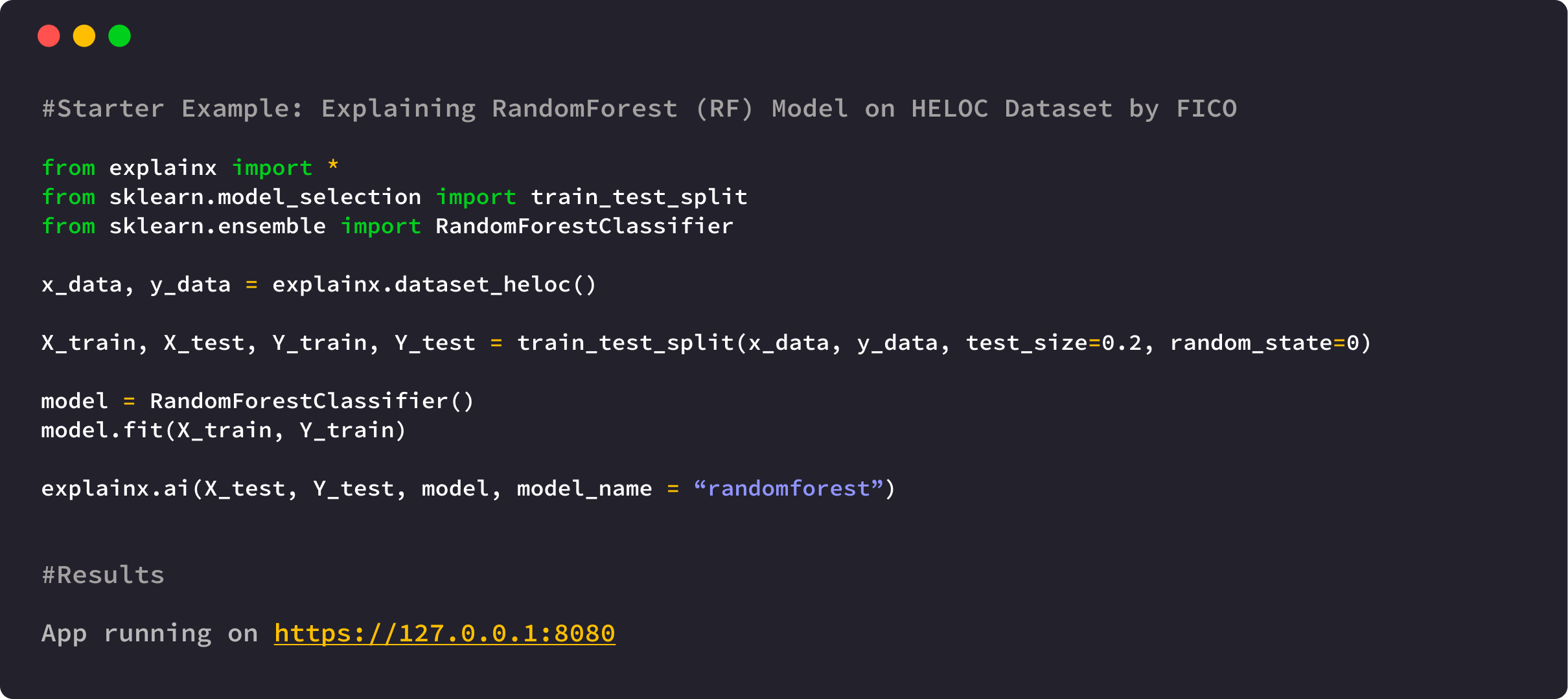

After successfully installing explainX, open your Python IDE or Jupyter Notebook and follow the code below to use it:

- Import required module.

```python
from explainx import *
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
```

- Load and split your dataset into X_data and Y_data.

```python
# Load dataset: X_data, Y_data
# X_data = pandas DataFrame
# Y_data = NumPy array or list
X_data, Y_data = explainx.dataset_heloc()
```

- Split dataset into training & testing.

```python
X_train, X_test, Y_train, Y_test = train_test_split(X_data, Y_data, test_size=0.3, random_state=0)
```

- Train your model.

```python
# Train a RandomForest model
model = RandomForestClassifier()
model.fit(X_train, Y_train)
```

After you're done training the model, you can either access the complete explainability dashboard or access individual techniques.
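
If hold-out splits are new to you, the sketch below shows conceptually what a 70/30 split with a fixed seed does. `holdout_split` is a hypothetical, standard-library-only helper written for illustration. It does not reproduce scikit-learn's shuffling, so the rows it selects will differ from those chosen by `train_test_split`.

```python
import math
import random


def holdout_split(rows, labels, test_size=0.3, seed=0):
    """Shuffle indices with a fixed seed, then split them into train and test parts."""
    if len(rows) != len(labels):
        raise ValueError("rows and labels must have the same length")
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)        # fixed seed -> reproducible split
    n_test = math.ceil(test_size * len(rows))   # number of held-out rows
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    def pick(data, idx):
        return [data[i] for i in idx]

    return (pick(rows, train_idx), pick(rows, test_idx),
            pick(labels, train_idx), pick(labels, test_idx))


rows = [[i, i * 2] for i in range(10)]
labels = [i % 2 for i in range(10)]
X_tr, X_te, y_tr, y_te = holdout_split(rows, labels)
print(len(X_tr), len(X_te))
```

The held-out rows are the ones passed to the dashboard later, so the model is explained on data it did not see during training.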

To access the entire dashboard with all the explainability techniques under one roof, use the code below. It is great for sharing your work with your peers and managers in an interactive and easy-to-understand way.

5.1. Pass your model and dataset into the explainX function:

```python
explainx.ai(X_test, Y_test, model, model_name="randomforest")
```

5.2. Click on the dashboard link to start exploring model behavior:

```
App running on https://0.0.0.0:8080
```

In this latest release, we have also added the option to use explainability techniques individually. This allows you to choose the technique that fits your AI use case.

6.1. Pass your model, X_Data and Y_Data into the explainx_modules function.

```python
explainx_modules.ai(model, X_test, Y_test)
```

As an upgrade, we have eliminated the need to pass in the model name, as explainX is smart enough to identify the model type and problem type (i.e., classification or regression) by itself.
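
To give a feel for how a problem type can be inferred from a model object, here is a small heuristic sketch. It is not explainX's actual detection logic, which this README does not describe. `guess_problem_type` and the two toy classes are hypothetical and rely only on the observation that fitted scikit-learn classifiers typically expose `classes_` or `predict_proba`.

```python
def guess_problem_type(model) -> str:
    """Guess 'classification' or 'regression' from the attributes a model exposes."""
    # Assumption: classifiers carry `classes_` and/or `predict_proba`; regressors do not.
    if hasattr(model, "classes_") or hasattr(model, "predict_proba"):
        return "classification"
    return "regression"


class ToyClassifier:
    """Stand-in for a fitted classifier (illustration only)."""
    classes_ = [0, 1]

    def predict(self, rows):
        return [0 for _ in rows]


class ToyRegressor:
    """Stand-in for a fitted regressor (illustration only)."""

    def predict(self, rows):
        return [0.0 for _ in rows]


print(guess_problem_type(ToyClassifier()))
print(guess_problem_type(ToyRegressor()))
```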

You can access multiple modules:

Module 1: Dataframe with Predictions

```python
explainx_modules.dataframe_graphing()
```

Module 2: Model Metrics

```python
explainx_modules.metrics()
```

Module 3: Global Level SHAP Values

```python
explainx_modules.shap_df()
```

Module 4: What-If Scenario Analysis (Local Level Explanations)

```python
explainx_modules.what_if_analysis()
```

Module 5: Partial Dependence Plot & Summary Plot

```python
explainx_modules.feature_interactions()
```

Module 6: Model Performance Comparison (Cohort Analysis)

```python
explainx_modules.cohort_analysis()
```

To access the modules within your Jupyter notebook as IFrames, just pass the mode='inline' argument.
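
To make the idea behind the partial dependence module more concrete, here is a minimal, dependency-free sketch of what a partial dependence curve measures: the average prediction when one feature is forced to a given value for every row. `toy_model` and `partial_dependence` are hypothetical names used for illustration and are not part of the explainX API.

```python
from statistics import mean


def toy_model(row):
    """Stand-in scoring function; any callable mapping a row to a number works."""
    return 2.0 * row["income"] + (5.0 if row["age"] >= 40 else 0.0)


def partial_dependence(predict, rows, feature, grid):
    """Average prediction over `rows` with `feature` forced to each value in `grid`."""
    curve = []
    for value in grid:
        # Copy every row and overwrite only the feature of interest.
        forced = [{**row, feature: value} for row in rows]
        curve.append((value, mean(predict(r) for r in forced)))
    return curve


rows = [
    {"income": 1.0, "age": 25},
    {"income": 3.0, "age": 45},
]
for value, avg in partial_dependence(toy_model, rows, "income", [0.0, 1.0, 2.0]):
    print(value, avg)
```

Applying the same overwrite to a single row instead of the whole dataset gives the basic idea of a what-if analysis: change one input and compare the prediction before and after.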

For a detailed description of each module, check out our documentation at https://www.docs.explainx.ai

If you are running explainX in the cloud (e.g., AWS SageMaker), https://0.0.0.0:8080 will not work. Please follow the Cloud Installation Instructions in our documentation.

After installation is complete, just open your terminal and run the following command.

```bash
lt -h "https://serverless.social" -p [port number]
```

For example, with port 8080:

```bash
lt -h "https://serverless.social" -p 8080
```
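
Before starting the tunnel, it can help to confirm that the dashboard is actually listening on the port you plan to expose. The sketch below assumes the dashboard runs on the same machine on port 8080, as shown above. `port_is_open` is a hypothetical helper built on the standard `socket` module.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # 8080 is the dashboard port shown in this README.
    print("dashboard reachable:", port_is_open("127.0.0.1", 8080))
```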

Please click on the image below to load the tutorial:

(Note: Please manually set it to 720p or greater to have the text appear clearly)

| Interpretability Technique | Status |
|---|---|
| SHAP Kernel Explainer | Live |
| SHAP Tree Explainer | Live |
| What-if Analysis | Live |
| Model Performance Comparison | Live |
| Partial Dependence Plot | Live |
| Surrogate Decision Tree | Coming Soon |
| Anchors | Coming Soon |
| Integrated Gradients (IG) | Coming Soon |
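
The SHAP explainers listed above estimate Shapley values, which attribute a prediction to individual features. As a rough illustration of the underlying idea only (not of how the Kernel or Tree explainers compute them), the sketch below calculates exact Shapley values for a tiny toy model by averaging each feature's marginal contribution over all feature orderings. `exact_shapley`, `toy_model`, and the all-zero baseline are assumptions made for this example.

```python
from itertools import permutations


def exact_shapley(predict, instance, baseline):
    """Exact Shapley values: average marginal contribution over all feature orderings."""
    features = list(instance)
    totals = {name: 0.0 for name in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)             # start from the baseline row
        previous = predict(current)
        for name in order:
            current[name] = instance[name]   # switch one feature to its real value
            value = predict(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


def toy_model(row):
    """Toy model with one additive term and one interaction term."""
    return 3.0 * row["a"] + row["b"] * row["c"]


instance = {"a": 1.0, "b": 2.0, "c": 4.0}
baseline = {"a": 0.0, "b": 0.0, "c": 0.0}
phi = exact_shapley(toy_model, instance, baseline)
print(phi)
```

The attributions add up to the difference between the prediction for the instance and the prediction for the baseline. Enumerating every ordering is only feasible for a handful of features, which is why practical explainers rely on approximations or model-specific shortcuts.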

| No. | Model Name | Status |
|---|---|---|
| 1. | CatBoost | Live |
| 2. | XGBoost==1.0.2 | Live |
| 3. | Gradient Boosting Regressor | Live |
| 4. | RandomForest Model | Live |
| 5. | SVM | Live |
| 6. | KNeighborsClassifier | Live |
| 7. | Logistic Regression | Live |
| 8. | DecisionTreeClassifier | Live |
| 9. | All Scikit-learn Models | Live |
| 10. | Neural Networks | Live |
| 11. | H2O.ai AutoML | Next in Line |
| 12. | TensorFlow Models | Coming Soon |
| 13. | PyTorch Models | Coming Soon |

Pull requests are welcome. In order to make changes to explainx, the ideal approach is to fork the repository then clone the fork locally.

For major changes, please open an issue first to discuss what you would like to change. Please make sure to update tests as appropriate.

Please help us by reporting any issues you may have while using explainX.