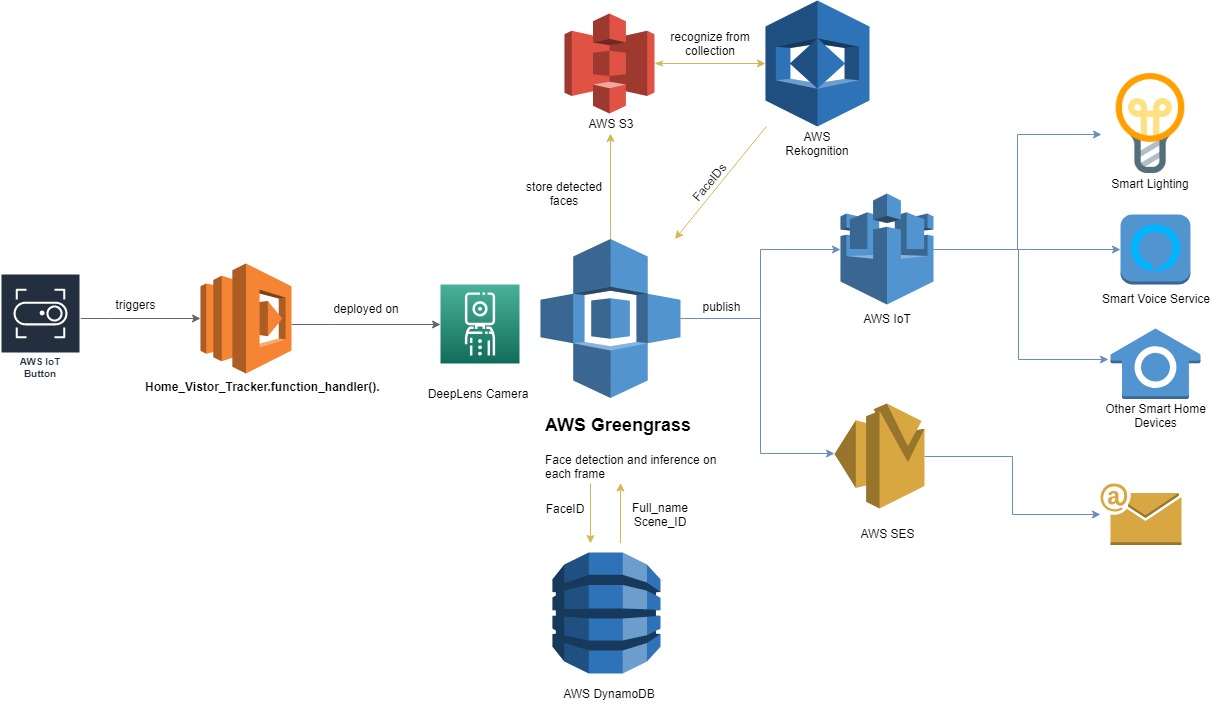

I built a home visitor management solution that lets homeowners design an IoT suite (a combination of lighting, photo gallery, and music) for specific visitors. The system is implemented with AWS services. The accompanying paper is included in the repo.

https://www.youtube.com/watch?v=IFgquaeec74

Only one Lambda function contains actual code, but several AWS services need to be set up, which I will walk you through below.

Heads-up:

- I used an AWS DeepLens as the Greengrass device, but a Raspberry Pi or Arduino should also work.

- Use us-east-1 as your region, because some of my code hardcodes "us-east-1" as the region.


Set up S3

- Create a bucket named "home-visitor-tracker" to match the name used in the code.


Set up Rekognition

- Download and install the AWS CLI, then run `aws configure` to configure it.

- Create a Rekognition collection by running `aws rekognition create-collection --collection-id "collectionname"`.

- Upload the images of the known faces you want to recognize to an S3 bucket.

- Index the faces by running the following command on each face image:

  `aws rekognition index-faces --image '{"S3Object":{"Bucket":"bucket-name","Name":"file-name"}}' --collection-id "collection-id" --max-faces 1 --quality-filter "AUTO" --detection-attributes "ALL" --external-image-id "example-image.jpg"`
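If you have many face images, the per-image `index-faces` call can be scripted. The sketch below only builds the keyword arguments that boto3's Rekognition `index_faces` call accepts, one dictionary per S3 key, mirroring the CLI flags above. The bucket, collection ID, and keys are placeholders, the helper name `build_index_faces_requests` is hypothetical, and using the file name (without any S3 folder prefix) as the external image ID is an assumption of this sketch.

```python
from typing import Dict, List


def build_index_faces_requests(bucket: str, collection_id: str,
                               image_keys: List[str]) -> List[Dict]:
    """Build one IndexFaces request per S3 image, mirroring the CLI flags above."""
    batch = []
    for key in image_keys:
        batch.append({
            "CollectionId": collection_id,                      # --collection-id
            "Image": {"S3Object": {"Bucket": bucket, "Name": key}},  # --image
            "MaxFaces": 1,                                      # --max-faces 1
            "QualityFilter": "AUTO",                            # --quality-filter "AUTO"
            "DetectionAttributes": ["ALL"],                     # --detection-attributes "ALL"
            # --external-image-id: assumption, use the bare file name (no "/" prefix)
            "ExternalImageId": key.rsplit("/", 1)[-1],
        })
    return batch


if __name__ == "__main__":
    for params in build_index_faces_requests("bucket-name", "collection-id",
                                             ["faces/alice.jpg", "bob.jpg"]):
        print(params["ExternalImageId"], params["Image"]["S3Object"]["Name"])
        # With boto3 installed and credentials configured, each request could be sent as:
        #   boto3.client("rekognition", region_name="us-east-1").index_faces(**params)
```

Keeping the request construction separate from the network call makes it easy to check the parameters before indexing anything.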


Set up your lighting scene

- I am using LIFX lights; different scenes can be created in the LIFX mobile app.

- Get your authentication token by setting up your LIFX account online.

- In a terminal, run `curl "https://api.lifx.com/v1/scenes" -H "Authorization: Bearer your_authentication_token"` to retrieve your scene IDs.
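The same API can be called from Python with only the standard library. This sketch builds (but does not send) two requests: the scene listing from the curl command above, and a scene activation. The activation endpoint `PUT /v1/scenes/scene_id:<uuid>/activate` is my reading of the LIFX HTTP API documentation, so double-check it there; the token and scene ID are placeholders and the function names are hypothetical.

```python
import urllib.request

LIFX_API = "https://api.lifx.com/v1"


def list_scenes_request(token: str) -> urllib.request.Request:
    """GET request that lists your scenes (same call as the curl command above)."""
    return urllib.request.Request(
        f"{LIFX_API}/scenes",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


def activate_scene_request(token: str, scene_id: str) -> urllib.request.Request:
    """PUT request that activates one scene, selected by its UUID in the URL."""
    return urllib.request.Request(
        f"{LIFX_API}/scenes/scene_id:{scene_id}/activate",
        headers={"Authorization": f"Bearer {token}"},
        data=b"",  # empty body, so Content-Length: 0 is sent
        method="PUT",
    )


if __name__ == "__main__":
    req = activate_scene_request("your_authentication_token", "your-scene-uuid")
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req, timeout=5)
```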


Set up DynamoDB

- Create a table with `faceID` as the primary key.

- Populate the table with each visitor's `faceID`, full name, and `sceneID`.
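A visitor row could look like the sketch below, written in DynamoDB's low-level attribute-value format (`{"S": ...}` for strings) that boto3's `put_item` and `get_item` use. Only `faceID` and `sceneID` come from the steps above; the attribute name `fullName` and the helper names are assumptions.

```python
from typing import Dict

Item = Dict[str, Dict[str, str]]


def visitor_item(face_id: str, full_name: str, scene_id: str) -> Item:
    """One visitor row in DynamoDB's low-level attribute-value format."""
    return {
        "faceID": {"S": face_id},      # primary key, as created above
        "fullName": {"S": full_name},  # hypothetical attribute name for "full name"
        "sceneID": {"S": scene_id},    # LIFX scene to activate for this visitor
    }


def scene_for_face(item: Item) -> str:
    """Extract the LIFX scene ID from an item returned by get_item."""
    return item["sceneID"]["S"]


if __name__ == "__main__":
    row = visitor_item("example-face-id", "Jane Doe", "example-scene-id")
    print(scene_for_face(row))
    # With boto3: boto3.client("dynamodb").put_item(TableName="your-table", Item=row)
```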


Set up SES

- This one is easy: just verify the two email addresses you will use.
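While an SES account is in the sandbox, both the sender and the recipient must be verified, which is why two addresses are needed. The notification itself could be built as the keyword arguments for boto3's SES `send_email`, as in this sketch; the subject and body wording and the helper name `notification_email` are hypothetical.

```python
from typing import Dict


def notification_email(sender: str, recipient: str, visitor_name: str) -> Dict:
    """Keyword arguments for boto3's ses.send_email(**params)."""
    return {
        "Source": sender,  # must be an SES-verified address
        "Destination": {"ToAddresses": [recipient]},  # also verified while in the sandbox
        "Message": {
            "Subject": {"Data": f"{visitor_name} is at your door"},
            "Body": {"Text": {"Data": f"Your visitor {visitor_name} has arrived."}},
        },
    }


if __name__ == "__main__":
    params = notification_email("me@example.com", "you@example.com", "Jane Doe")
    print(params["Message"]["Subject"]["Data"])
    # With boto3: boto3.client("ses", region_name="us-east-1").send_email(**params)
```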


Set up Lambda

- Create the Lambda function with the name "gghelloworld".

- Set the Python runtime to 3.6 or 3.7.

- Set the handler name to `gghelloworld.function_handler`.

- Click the "Actions" tab at the top and choose "Export function" to download the function as a zip file.

- Extract the zip file to get the function's root folder.

- Replace the original `gghelloworld.py` with the file in the `CloudCode` folder.

- Change the email addresses to the ones you verified in SES.

- Change the authentication token to the one you got from LIFX.

- Change the sceneID to the visitor sceneID you set in the mobile app.

- Open a terminal in the function folder and set up a virtual environment.

- In the environment, run `mkdir package`, then from inside the `package` folder run `pip install face-recognition --target .` and wait for it to populate the folder with the required packages.

- Put the rest of the Greengrass package folders into the `package` folder.

- In the `package` folder, run `zip -r9 ../function.zip .`.

- Go back to the root folder and run `zip -g function.zip gghelloworld.py` to add the handler file.

- Upload the zip file to the Lambda function; if it is too large for a direct upload, upload it through S3.

- Set the timeout to 6 seconds.

- Set the environment variable `AWS_IOT_THING_NAME` to the IoT thing ID of your Greengrass device.

- For the trigger, choose AWS IoT; in the configuration section, select IoT Button and configure your IoT button to register it in the cloud.
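If `zip` is not available (for example on Windows), the two zip steps can be reproduced with Python's `zipfile` module. This sketch assumes the folder layout described above (dependencies in `package/`, handler `gghelloworld.py` in the root folder); the function name `build_function_zip` is hypothetical. Like `zip -r9 ../function.zip .`, it stores the dependencies at the archive root, then adds the handler file next to them.

```python
import os
import zipfile


def build_function_zip(root: str, package_dir: str = "package",
                       handler_file: str = "gghelloworld.py",
                       out_name: str = "function.zip") -> str:
    """Zip package_dir's contents at the archive root, then add the handler file."""
    out_path = os.path.join(root, out_name)
    pkg_path = os.path.join(root, package_dir)
    # compresslevel=9 matches zip's -9 flag (requires Python 3.7+)
    with zipfile.ZipFile(out_path, "w", compression=zipfile.ZIP_DEFLATED,
                         compresslevel=9) as zf:
        # Equivalent of running `zip -r9 ../function.zip .` inside package/
        for dirpath, _dirnames, filenames in os.walk(pkg_path):
            for name in filenames:
                full = os.path.join(dirpath, name)
                zf.write(full, arcname=os.path.relpath(full, pkg_path))
        # Equivalent of `zip -g function.zip gghelloworld.py` in the root folder
        zf.write(os.path.join(root, handler_file), arcname=handler_file)
    return out_path


if __name__ == "__main__":
    # Run from the function's root folder (assumed layout, see above).
    print(build_function_zip("."))
```

Placing the handler at the archive root is what lets Lambda resolve `gghelloworld.function_handler`.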


Set up Greengrass Core

- Create a Greengrass group.

- Add the Lambda function `gghelloworld` in the Lambda tab.

- Add a subscription from IoT Cloud to the Lambda function, using your IoT Button's topic.

- Deploy the Greengrass group to your device.
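The subscription added in the console corresponds to an entry in a Greengrass (V1) subscription definition. The sketch below builds that structure in the shape accepted by boto3's Greengrass `create_subscription_definition(InitialVersion=...)`. The topic and ARN values are placeholders: AWS IoT Buttons typically publish to `iotbutton/<serialNumber>`, and Greengrass expects a versioned or aliased Lambda ARN. The helper name and default subscription ID are hypothetical.

```python
from typing import Dict


def button_subscription(topic: str, lambda_arn: str,
                        sub_id: str = "iot-button-to-gghelloworld") -> Dict:
    """Subscription from IoT Cloud to the Lambda, in the Greengrass V1 API shape."""
    return {
        "Subscriptions": [
            {
                "Id": sub_id,          # any ID unique within the definition
                "Source": "cloud",     # messages arriving from AWS IoT Core
                "Subject": topic,      # your IoT Button's topic
                "Target": lambda_arn,  # versioned/aliased ARN of gghelloworld
            }
        ]
    }


if __name__ == "__main__":
    definition = button_subscription(
        "iotbutton/your-button-serial",
        "arn:aws:lambda:us-east-1:123456789012:function:gghelloworld:1",
    )
    print(definition["Subscriptions"][0]["Subject"])
```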


Now you should be able to press the IoT button to trigger your Lambda function deployed on the camera-equipped Greengrass core, see the lights switch to your desired scene, and receive a notification email.
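The end-to-end flow can be outlined as a handler skeleton. This is not the code in `CloudCode`; it only shows how the handler named `gghelloworld.function_handler` might read the `AWS_IOT_THING_NAME` variable and the IoT Button's `clickType` field, and pick a `faceID` from a Rekognition `SearchFacesByImage` response (a `FaceMatches` list whose entries carry `Similarity` and `Face.FaceId`). It assumes the DynamoDB `faceID` is Rekognition's `FaceId`; the 90% threshold and the helper `best_face_id` are hypothetical.

```python
import os
from typing import Optional


def best_face_id(search_response: dict, min_similarity: float = 90.0) -> Optional[str]:
    """Return the FaceId of the most similar match, or None if none clears the threshold."""
    matches = [m for m in search_response.get("FaceMatches", [])
               if m.get("Similarity", 0.0) >= min_similarity]
    if not matches:
        return None
    best = max(matches, key=lambda m: m["Similarity"])
    return best["Face"]["FaceId"]


def function_handler(event, context):
    """Skeleton entry point matching the handler setting gghelloworld.function_handler."""
    thing_name = os.environ.get("AWS_IOT_THING_NAME", "unknown-device")
    click = event.get("clickType", "SINGLE")  # field in the IoT Button payload
    # Remaining steps, not shown: capture a frame on the device, call Rekognition
    # search_faces_by_image, pass the response to best_face_id(), look up the
    # visitor's sceneID in DynamoDB, activate the LIFX scene, send the SES email.
    return {"thing": thing_name, "clickType": click}
```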