Important

RAI is currently in beta, so expect some friction. Early contributors are most welcome!

RAI is developing fast towards a glorious release in time for ROSCon 2024.

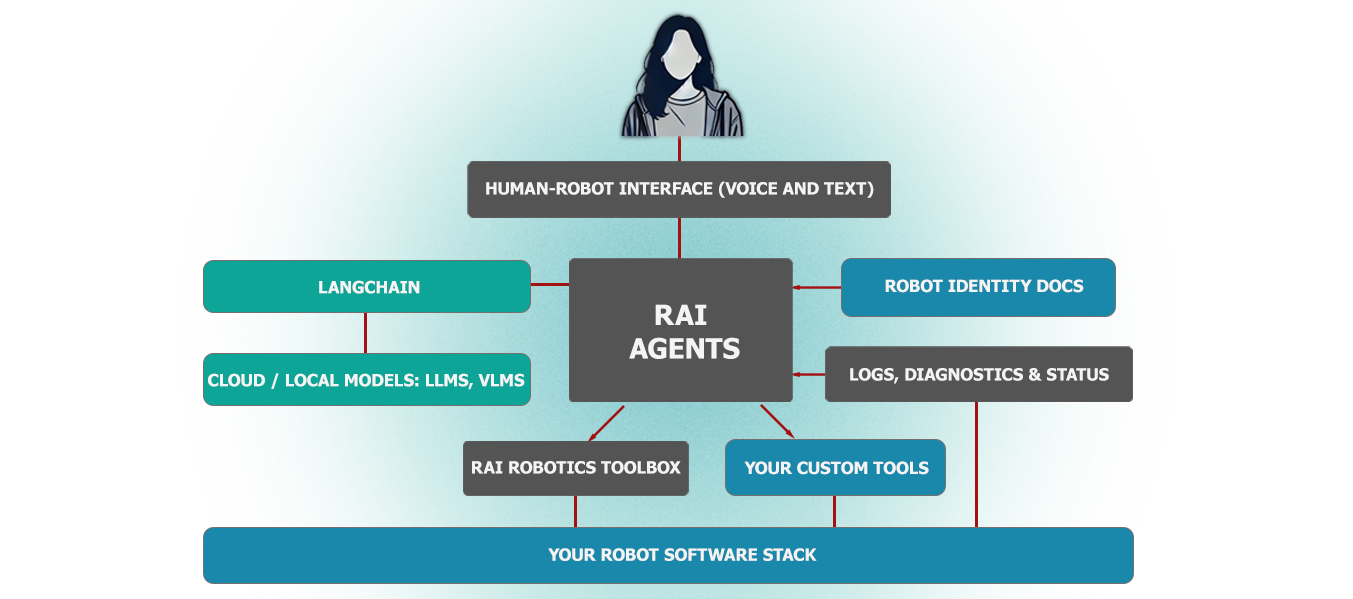

RAI is a flexible AI agent framework to develop and deploy Gen AI features for your robots.

The RAI framework aims to:

- Supply a general multi-agent system, bringing Gen AI features to your robots.

- Add human interactivity, flexibility in problem-solving, and out-of-box AI features to existing robot stacks.

- Provide first-class support for multi-modalities, enabling interaction with various data types.

- Incorporate an advanced database for persistent agent memory.

- Include ROS 2-oriented tooling for agents.

- Support a comprehensive task/mission orchestrator.

- Voice interaction (both ways).

- Customizable robot identity, including constitution (ethical code) and documentation (understanding own capabilities).

- Accessing the camera sensor ("What do you see?"), utilizing VLMs.

- Reasoning about its own state through ROS logs.

- ROS 2 action calling and other interfaces. The Agent can dynamically list interfaces, check their message type, and publish.

- Integration with LangChain to abstract vendors and access convenient AI tools.

- Tasks in natural language to nav2 goals.

- NoMaD integration.

- Grounded SAM 2 integration.

- Improved Human-Robot Interaction with voice and text.

- SDK for RAI developers.

- Support for at least 3 different AI vendors.

- Additional tooling such as GroundingDino.

- UI for configuration to select features and tools relevant for your deployment.

Install poetry (1.8+) with the following line:

curl -sSL https://install.python-poetry.org | python3 -

Alternatively, you can follow the official docs.

git clone https://github.com/RobotecAI/rai.git

cd rai

poetry install

rosdep install --from-paths src --ignore-src -r -y

colcon build --symlink-install

source ./setup_shell.sh

RAI aims to be vendor-agnostic. You can use the configuration in config.toml to set up your vendor of choice for most RAI modules.

Note

Some RAI modules are still hardcoded to OpenAI models. An effort is underway to make them configurable via the config.toml file.

If you do not have a vendor's key, follow the instructions below:

OpenAI: link. AWS Bedrock: link.
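Once you have a key, a quick way to confirm it is visible to your shell is to check the environment variables that the vendors' SDKs conventionally read. The sketch below is an assumption about a typical setup, not an RAI utility: `missing_credentials` is a hypothetical helper, and AWS credentials in particular can also come from profiles or instance roles, which this check does not cover.

```python
import os

# Environment variable names conventionally read by the vendors' SDKs.
# This mapping is illustrative and not taken from the RAI code base.
REQUIRED_ENV = {
    "openai": ["OPENAI_API_KEY"],
    "aws": ["AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY"],
}


def missing_credentials(vendor, env=None):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_ENV[vendor] if not env.get(name)]


if __name__ == "__main__":
    for vendor in REQUIRED_ENV:
        missing = missing_credentials(vendor)
        status = "ok" if not missing else "missing " + ", ".join(missing)
        print(f"{vendor}: {status}")
```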

Congratulations, your installation is now completed!

You can start by running the following examples:

- Hello RAI: Interact directly with your ROS 2 environment through an intuitive Streamlit chat interface.

- Explore the O3DE Husarion ROSbot XL demo and have your robot do tasks defined with natural language.

Chat seamlessly with your ROS 2 environment, retrieve images from cameras, adjust parameters, and get information about your ROS interfaces.

streamlit run src/rai_hmi/rai_hmi/text_hmi.py

Remember to run this command in a sourced shell.
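If you are unsure whether the current shell has been sourced, a small check like the one below can help. It relies on `ROS_DISTRO` and `AMENT_PREFIX_PATH`, which sourcing a ROS 2 setup file normally exports. The helper name `ros_environment_sourced` is hypothetical, and the check does not confirm that the RAI workspace itself is on the path.

```python
import os


def ros_environment_sourced(env=None) -> bool:
    """Return True when the usual ROS 2 environment variables are set."""
    env = os.environ if env is None else env
    # Both variables are exported when a ROS 2 setup file is sourced.
    return bool(env.get("ROS_DISTRO")) and bool(env.get("AMENT_PREFIX_PATH"))


if __name__ == "__main__":
    if ros_environment_sourced():
        print("ROS 2 environment detected.")
    else:
        print("ROS 2 environment not detected; source the setup script first.")
```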

This demo provides a practical way to interact with and control a virtual Husarion ROSbot XL within a simulated environment. Using natural language commands, you can assign tasks to the robot, allowing it to perform a variety of actions.

Given that this is a beta release, consider this demo as an opportunity to explore the framework's capabilities, provide feedback, and contribute. Try different commands, see how the robot responds, and use this experience to understand the potential and limitations of the system.

Follow this guide: husarion-rosbot-xl-demo

Once you know your way around RAI, try the following challenges, with the aid of the developer guide:

- Run RAI on your own robot and talk to it, asking questions about what is in its documentation (and others!).

- Implement additional tools and use them in your interaction.

- Try a complex, multi-step task for your robot, such as going to several points to perform observations!

Soon you will have an opportunity to work with new RAI demos across several domains.

| Application | Robot | Description | Link |
|---|---|---|---|
| Mission and obstacle reasoning in orchards | Autonomous tractor | In a beautiful scene of a virtual orchard, RAI goes beyond obstacle detection to analyze the best course of action for a given unexpected situation. | 🌾 demo |
| Manipulation tasks with natural language | Robot Arm (Franka Panda) | Complete flexible manipulation tasks thanks to RAI and Grounded SAM 2 | 🦾 demo |
| Quadruped inspection demo | A robot dog (ANYbotics ANYmal) | Perform inspection in a warehouse environment, find and report anomalies | link TBD |

Please take a look at the Q&A.

See our Developer Guide.

You are welcome to contribute to RAI! Please see our Contribution Guide.

RAI will be released on October 15th, right before ROSCon 2024. If you are going to the conference, come join us at the RAI talk on October 23rd.