Building large scalable iOS/macOS apps and frameworks with Domain-Driven Design

CFBundleVersion - 2.0.0

\newpage

"To my Mom and Dad, because they really tried."

&&

"To the community, rule no. 5 of my childhood hero Arnold Schwarzenegger says; Don't just take, give something back. This is me giving back."

&&

"Finally, to my current girlfriend ... whoever she might be at this very moment"

\newpage

Hi, I am Cyril Cermak, a software engineer at heart and the author of this book. I have spent most of my professional career building iOS apps and iOS frameworks. My career began with the Skoda Auto Connect App in Prague, continued at Freelancer Ltd in Sydney building its iOS platform, included numerous start-ups along the way, and currently has me working as an iOS Architect at Porsche AG in Stuttgart. In this book, I describe different approaches for building modular iOS architectures and provide some mechanisms and essential knowledge that should help you decide which approach fits a project best.

Greetings, I am Kristopher K. Kruger, the unintentional and forever grateful reviewer of this book in both its current and previous editions. My professional journey as an iOS Software Developer took an unexpected turn when I met the illustrious Cyril while working on the same groundbreaking project. Our paths first crossed amidst a whirlwind of iOS Swift code, Ruby code, and GitHub Actions during the development of the aforementioned project. In this book, I provided a meticulous, albeit whimsical, review of modular iOS architectures, contributing not just technical insights but also arcane wisdom gathered from my vast and varied experiences. My reviews are known for their unique blend of hard-hitting analysis and absurd humor, ensuring that even the driest of subjects can elicit a hearty chuckle.

Special thanks to David Ullmer, a dear colleague of mine, who wrote his bachelor's thesis on the modularisation of iOS applications under my guidance. David wrote the Benchmarking of Modular Architecture chapter of this book.

Feel free to contribute to this work by opening a PR.

\newpage \tableofcontents \newpage

In software engineering, people move from project to project, gaining different kinds of experience along the way. On iOS in particular, monolithic approaches are the most common. In some cases that makes total sense, so nothing against it. However, scaling up the team, or even better, a team of teams, on a monolithically built app is horrifying and nearly impossible without major build-time penalties on a daily basis. Numerous problems will arise that limit how iOS projects are built and managed at the organisational level.

Scaling up the monolithic approach to a team of e.g. 10+ developers will most likely result in hell. By hell, I mean resolving xcodeproj issues where, in the worst case, both parties renamed, edited, or deleted the same source code file or touched the same {storyboard|xib} file. That is, both worked on the same file, which results in classic merge conflicts. Somehow, we have all become accustomed to those issues and have learned that we simply need to live with them.

The deal-breaker comes when your PO/PM/CTO/CEO, or anybody higher than you on the company's food chain, comes to the team to announce that he or she is planning to release a new flavour of the app or to divide the current app into two separate parts. Then an engineering decision needs to be made: either continue with the monolithic approach or implement something different. Continuing with the monolithic approach would likely mean creating different targets, assigning files to the new flavour of the app and continuing to live in a multiplied hell, all the while hoping that no requirement, such as shipping core components of the app to a subsidiary or open-sourcing them as a framework, comes into play.

Not surprisingly, a better approach would be to start refactoring the app into a modular one, where each team can be responsible for particular frameworks (parts of the app) that are then linked into the final customer-facing apps. The transformation will certainly take time, as it is not easy, but the company's mobile engineering will become faster, more scalable and more maintainable, and even ready to distribute or open-source some of its SDKs to the outside world.

Another scenario could be that you are already working on an app that is set up in a modular way, but it takes around 20 minutes to compile because it is a huge legacy codebase that has been in development for the past ten or so years and has linked every possible 3rd party library along the way. The decision was made to modularise it with CocoaPods. As a result, you cannot easily link already pre-compiled libraries with Carthage, and every clean build means you can take a double shot of espresso. I have been there, trust me: it is another type of hell, definitely not a place where anyone would like to be. I described the whole migration process of such a project in an article on Medium in 2018. In this book, of course, you will read about it in more detail.

Nowadays, as an iOS System Architect, new teams and new colleagues often ask me the same questions about these topics. That is why I decided to sum it all up and cover the whole subject in this book. Its purpose is to help developers working on such architectures gain the background knowledge and experience to implement these ideas more quickly and correctly.

Hopefully, this introduction provided enough motivation that you will want to dive further into this book.

To follow along, you will need the latest version of Xcode for compiling the demo examples, Homebrew (brew) to install some mandatory dependencies, and Ruby with Bundler for running scripts and downloading some Ruby gems.

This book describes the essentials of building a modular architecture on iOS, which can further be extended to all Apple platforms. You will find examples of different approaches, framework types, their pros and cons, common problems and so on. By the end of this book, you should have a very good understanding of what benefits such an architecture will bring to your project, whether it is necessary at all, and which way of modularising the project would be best. This book focuses on high-level architecture, the modularisation of a project, and collaborating as efficiently as possible.

SwiftUI.

\newpage

Modular, adjective - employing or involving a module or modules as the basis of design or construction: "modular housing units"

In the introduction, I briefly touched on the motivation for building a project in a modular way. To summarise, a modular architecture gives us much more freedom when it comes to product decisions that influence the overall app engineering. These include building another app for the same company, open-sourcing some parts of the existing codebase, scaling the team of developers, and so on. With an existing mobile foundation in place, the whole development process will be much faster and cleaner.

To be fair, maintaining such a software foundation for a company might also be really difficult. By maintaining, I mean taking care of the CI/CD (Continuous Integration / Continuous Delivery), maintaining old projects developed on top of a foundation that was heavily refactored in the meantime, dealing with legacy code, keeping everything up to date with the latest development tools and so on. It goes without saying that on a very large project, this could be the work of a standalone team.

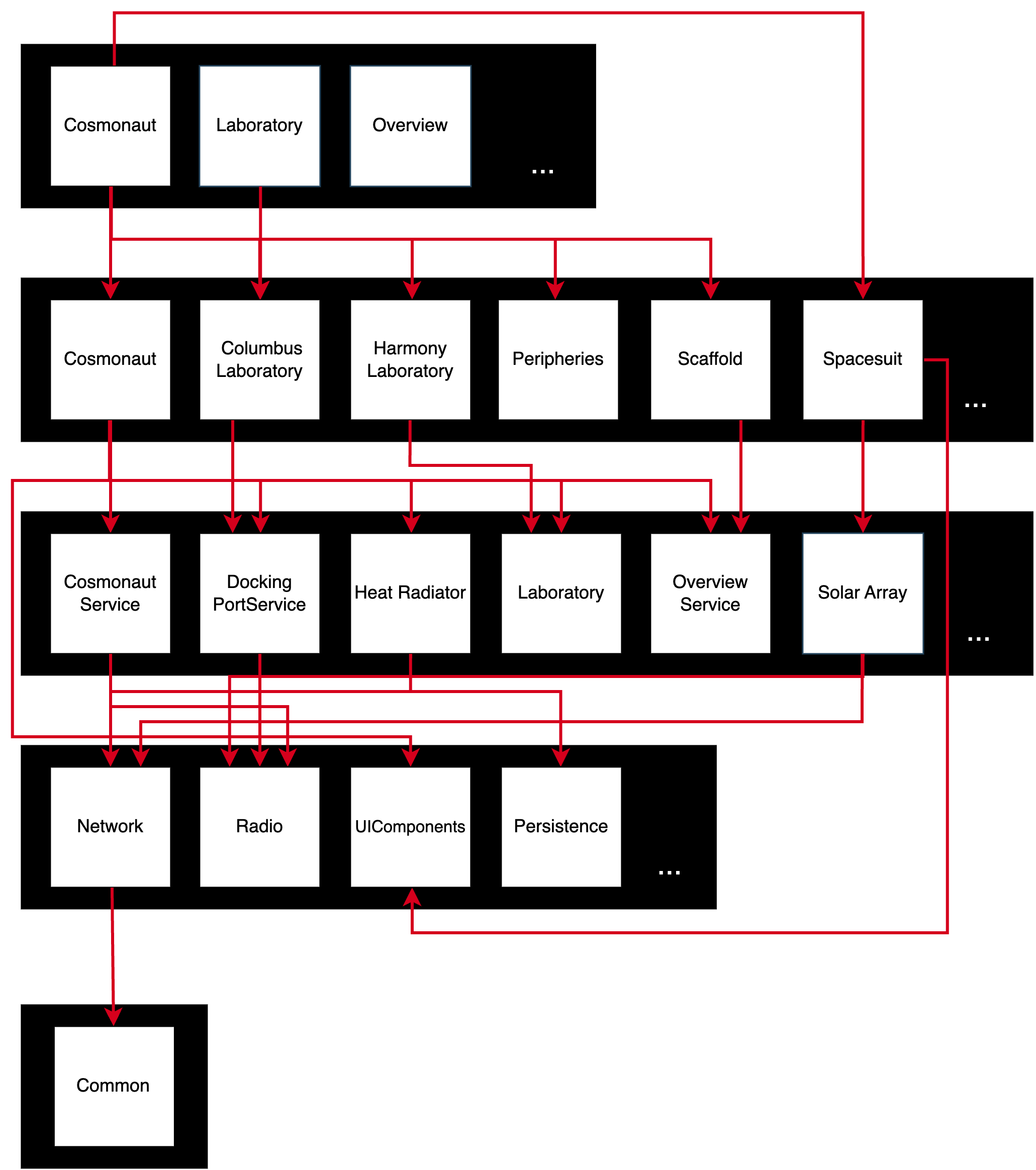

This book describes building such a large, scalable architecture with domain-driven design, and does so using an example: the software foundation for the International Space Station.

In the context of this book, a module is a standalone box in the diagram or, in practice, an Xcode project that encapsulates frameworks, test bundles, etc. Furthermore, to quote Apple: "A framework is a hierarchical directory that encapsulates shared resources, such as a dynamic shared library, nib files, image files, localized strings, header files, and reference documentation in a single package. Multiple applications can use all of these resources simultaneously. The system loads them into memory as needed and shares the one copy of the resource among all applications whenever possible."

In this book, I chose to use the architecture that I think is the most flexible. It is a five-layer architecture that consists of the following layers:

- Application

- Domain

- Service

- Core

- Shared

Each layer is explained in the following section.

Nevertheless, the same principles can be applied to other architectural structures as well. An example is a simplified feature-oriented architecture, where the layers could be defined as follows:

- Application

- Feature

- Core

Throughout the rest of the book, this whole setup of layers and the modules within them is referred to as the Application Framework.

Now to the specific layers.

Let us have a look now at each layer and its purpose. Further, we will look at the particular modules within layers and their internal structure.

The application layer consists of the final customer-facing products: applications. Applications assemble all the necessary parts of the Application Framework, linking domains, services, and so on. Furthermore, the app instantiates the UI stack, primarily the domain coordinators (if that pattern is used), and objects such as NetworkService, CashierService, etc. The app also has its own unique configuration, which hosts information such as the app flavour, app variant, enabled feature toggles, keychain configuration, etc. Patterns that help achieve such requirements will be described later.

Additionally, the app might contain necessary application-level implementations such as receiving push notifications, handling deep links, requesting permissions, and many more.

In the Application Framework, the App is just a container that stitches pieces together.

As an example, the apps of an e-commerce business could be The Shop for online customers and Cashier for the company's employees.
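To illustrate the app acting as a container, here is a minimal sketch of how the two e-commerce apps could each provide their own configuration while reusing the same modules. All names (AppFlavour, FeatureToggle, AppConfiguration, AppContainer, and the NetworkService/CashierService stand-ins) are hypothetical. In a real Application Framework, the services would live in their own modules rather than in one file.

```swift
// Hypothetical app flavours: each customer-facing app declares its own.
enum AppFlavour {
    case shop     // The Shop, for online customers
    case cashier  // Cashier, for the company's employees
}

// Hypothetical feature toggles enabled per app.
enum FeatureToggle: Hashable {
    case applePay
    case barcodeScanner
}

// App-level configuration hosting flavour, toggles and keychain setup.
struct AppConfiguration {
    let flavour: AppFlavour
    let enabledFeatures: Set<FeatureToggle>
    let keychainAccessGroup: String

    func isEnabled(_ feature: FeatureToggle) -> Bool {
        enabledFeatures.contains(feature)
    }
}

// Stand-ins for modules from lower layers (Core and Service).
final class NetworkService {
    let baseURL: String
    init(baseURL: String) { self.baseURL = baseURL }
}

final class CashierService {
    let network: NetworkService
    init(network: NetworkService) { self.network = network }
}

// The app only stitches the pieces together: it owns the configuration
// and instantiates the shared objects that domains will receive.
final class AppContainer {
    let configuration: AppConfiguration
    let network: NetworkService
    let cashierService: CashierService?

    init(configuration: AppConfiguration) {
        let network = NetworkService(baseURL: "https://api.example.com")
        self.configuration = configuration
        self.network = network
        // Decided at runtime only to keep this sketch in one file; in the real
        // setup the Shop app would simply not link the cashier module at all.
        self.cashierService = configuration.flavour == .cashier
            ? CashierService(network: network)
            : nil
    }
}

let cashierApp = AppContainer(configuration: AppConfiguration(
    flavour: .cashier,
    enabledFeatures: [.barcodeScanner],
    keychainAccessGroup: "com.example.cashier"
))
```

Each app target would contain little more than such a container and its configuration. Everything else comes from the linked modules.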

The domain layer links services and other modules from the layers below and uses them to implement the business domain needs of the company or project. A domain contains, for example, the user flow within its particular part of the app. Furthermore, the domain has the components that flow needs, such as coordinators, view controllers, views, models and view models. Obviously, which pattern is used for creating screens depends on the team's preferences and technical experience. Personally, reactive MVVM+C is my favourite, but more on that later.

Continuing with our e-commerce example, a domain could be Checkout or Store Items. A shared domain could be User, which would display the flow for either an employee or a customer based on a configuration.
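Here is a minimal sketch of the shared User domain from this example. A coordinator decides whether to start the employee flow or the customer flow, based on a configuration that the app passes in. To keep it runnable without UIKit, screens are modelled as plain values and navigation is abstracted behind a protocol. UserRole, UserScreen, Navigator and UserCoordinator are hypothetical names, and a real domain would push view controllers instead.

```swift
// Who is using the app; provided by the app when it creates the domain.
enum UserRole {
    case customer
    case employee
}

struct UserDomainConfiguration {
    let role: UserRole
}

// Screens of the User domain, modelled as values instead of view controllers.
enum UserScreen: Equatable {
    case customerProfile
    case loyaltyCard
    case employeeProfile
    case shiftOverview
}

// Navigation is abstracted so the sketch does not depend on UIKit.
protocol Navigator: AnyObject {
    func show(_ screen: UserScreen)
}

final class UserCoordinator {
    private let configuration: UserDomainConfiguration
    private let navigator: Navigator

    init(configuration: UserDomainConfiguration, navigator: Navigator) {
        self.configuration = configuration
        self.navigator = navigator
    }

    // Entry point of the domain: the flow depends only on the configuration.
    func start() {
        switch configuration.role {
        case .customer:
            navigator.show(.customerProfile)
        case .employee:
            navigator.show(.employeeProfile)
        }
    }

    func showSecondaryScreen() {
        navigator.show(configuration.role == .customer ? .loyaltyCard : .shiftOverview)
    }
}

// Records shown screens; in an app, a navigation controller wrapper would play this role.
final class RecordingNavigator: Navigator {
    private(set) var shown: [UserScreen] = []
    func show(_ screen: UserScreen) { shown.append(screen) }
}
```

Because the role is injected, the Shop and Cashier apps can link the same User domain and still get different flows.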

Services are modules supporting domains. Each domain can link several services to achieve desired outcomes. Such services will most likely talk to the backend, obtaining data from it, persisting the data in its storage, and exposing the data to domains.

A service in our theoretical e-commerce app could be a Checkout Service. This service would handle all of the necessary communication with the backend to process credit card payments, etc.
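Here is a sketch of how a service sits between a domain and the core layer. The hypothetical CheckoutService below depends only on a NetworkClient protocol, which a Core module such as Network could expose. The endpoint path, the payload fields and all type names are assumptions for illustration. The call is synchronous for brevity, whereas a real implementation would more likely use async/await.

```swift
import Foundation

// Abstraction a Core "Network" module could expose (hypothetical).
protocol NetworkClient {
    func post(path: String, body: Data) throws -> Data
}

// Models the service exchanges with the backend and exposes to domains.
struct PaymentRequest: Codable {
    let orderID: String
    let amountInCents: Int
    let currency: String
}

struct PaymentReceipt: Codable, Equatable {
    let orderID: String
    let status: String
}

enum CheckoutError: Error {
    case invalidAmount
}

// Service layer: talks to the backend through the injected client and
// hands plain model values back to the Checkout domain.
final class CheckoutService {
    private let client: NetworkClient
    private let encoder = JSONEncoder()
    private let decoder = JSONDecoder()

    init(client: NetworkClient) {
        self.client = client
    }

    func pay(_ request: PaymentRequest) throws -> PaymentReceipt {
        guard request.amountInCents > 0 else { throw CheckoutError.invalidAmount }
        let body = try encoder.encode(request)
        let response = try client.post(path: "/checkout/payments", body: body)
        return try decoder.decode(PaymentReceipt.self, from: response)
    }
}
```

Because the service depends only on the protocol, a stub client can replace the real network in unit tests of the service or the domain.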

The core layer is the enabler for the whole app. Services link the modules they need from it, e.g. for communicating with the backend or for a general abstraction over data persistence. Domains link, e.g., UI components for easier implementation of screens, and so on.

A core module in our e-commerce app could be Network or UIComponents.

The shared layer is a supporting layer for the whole framework. Not every project needs this layer, so it is not shown in all diagrams. A perfect example of a shared module is a logging mechanism. Even core layer modules may want to log some output, which could otherwise lead to duplicated logging code. This duplication can be solved by the shared layer or by following the principles of clean architecture. More on that topic later.

For example, a shared module in an e-commerce app could be Logging or AppAnalytics.
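This sketch shows how a shared layer avoids duplication. A single hypothetical Logger protocol lives in a Shared module such as Logging. Modules from the layers above, here a stand-in for the Core Persistence module, receive it instead of each shipping their own logging code. The names and the log format are assumptions.

```swift
// Shared layer: one logging abstraction used by every layer above it.
enum LogLevel: String {
    case debug, info, error
}

protocol Logger {
    func log(_ level: LogLevel, _ message: String, module: String)
}

// Default implementation that prints to the console.
struct ConsoleLogger: Logger {
    func log(_ level: LogLevel, _ message: String, module: String) {
        print("[\(level.rawValue.uppercased())] [\(module)] \(message)")
    }
}

// Core layer stand-in: a persistence module that logs through the shared
// abstraction instead of duplicating its own logging code.
final class Persistence {
    private let logger: Logger
    private var storage: [String: String] = [:]

    init(logger: Logger) {
        self.logger = logger
    }

    func save(_ value: String, forKey key: String) {
        storage[key] = value
        logger.log(.debug, "Saved value for key \(key)", module: "Persistence")
    }

    func value(forKey key: String) -> String? {
        let value = storage[key]
        if value == nil {
            logger.log(.info, "No value for key \(key)", module: "Persistence")
        }
        return value
    }
}
```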

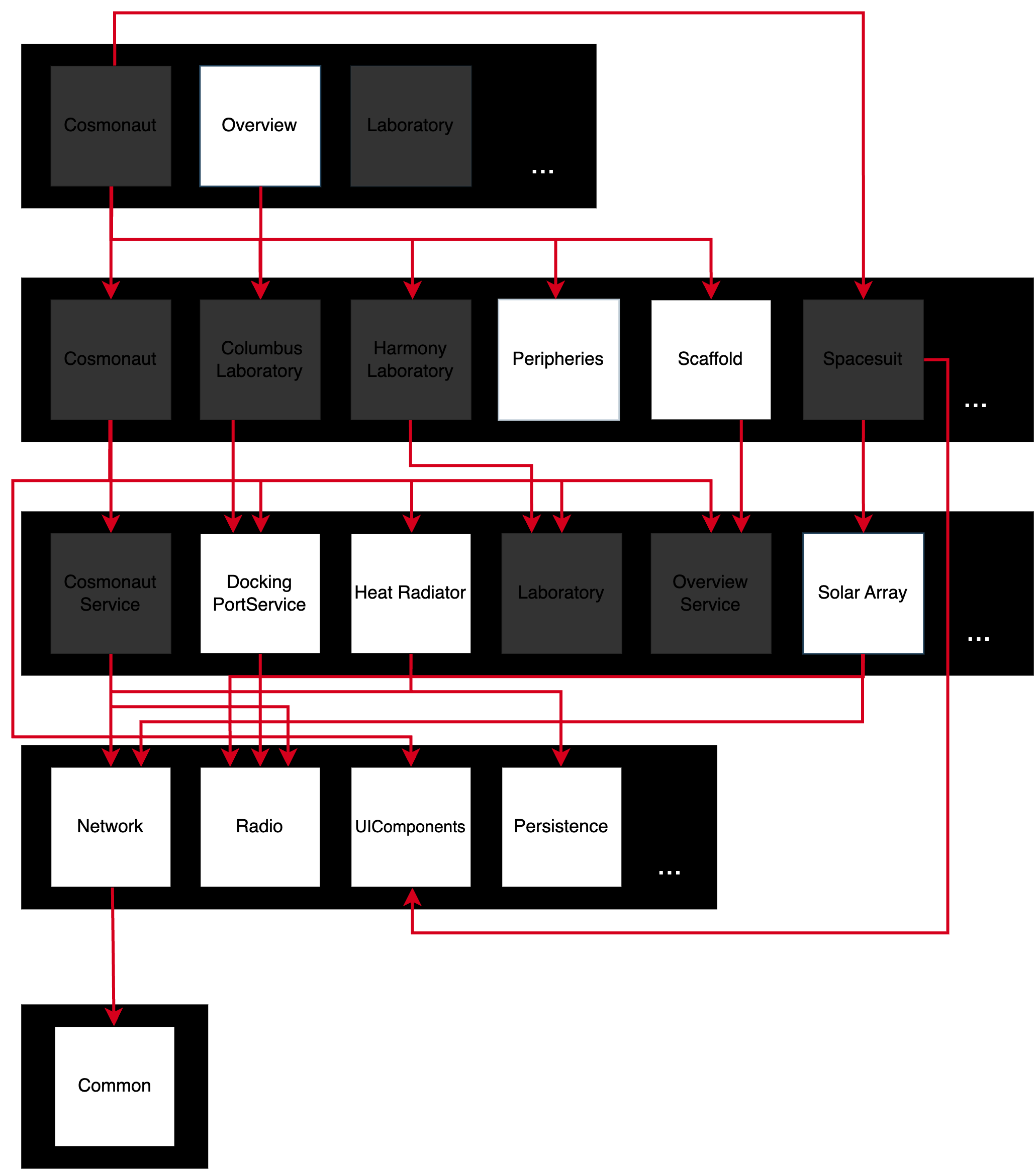

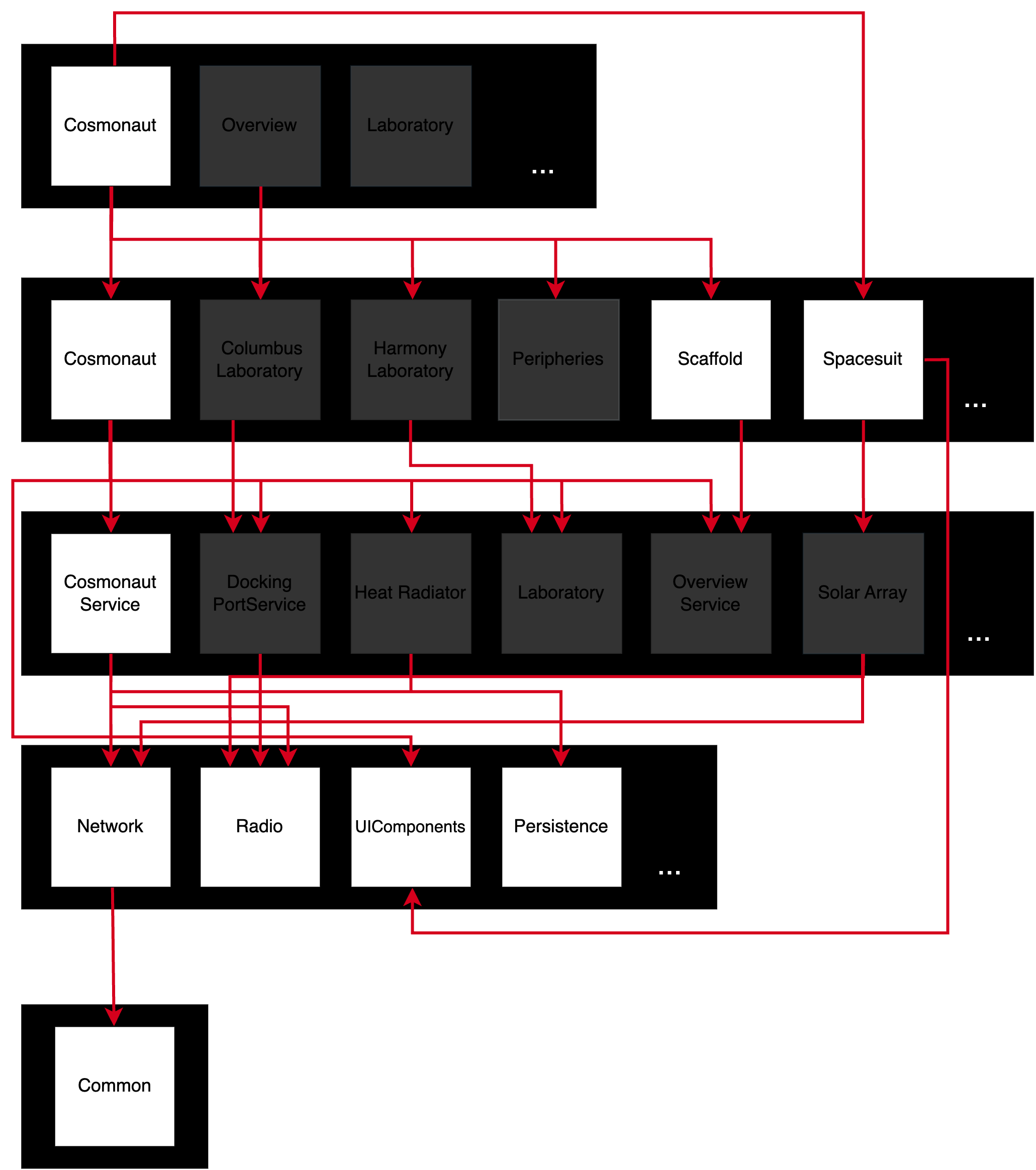

In this example, we will look at what such an architecture could look like for the International Space Station. The diagram below shows the five-layer architecture with its modules and links. This structure is referred to as the Application Framework throughout this work.

While this chapter is rather theoretical, in the following chapters everything will be explained and showcased in practice.

The example has three applications.

- Overview: an app that shows astronauts the overall status of the space station

- Cosmonaut: an app where a cosmonaut can control his spacesuit as well as his supplies and personal information

- Laboratory: an app from which the laboratories on the space station can be controlled

As described above, all apps link the Scaffold module which provides the bootstrapping for the app while the app itself behaves like a container.

The diagram above describes the concrete linking of modules for the app. Let us have a closer look at it.

The Overview app links the Peripheries domain, which implements the logic and screens for the station's peripheries.

The Peripheries domain links the Heat Radiator, Solar Array, and Docking Port services, from which data about those peripheries is gathered, as well as UIComponents for bootstrapping the development of the screens.

The linked services use the Network and Radio core modules. These provide the foundation for communicating with other systems via network protocols. Radio in this case could implement a communication channel via BLE (Bluetooth Low Energy) or another technology that connects to the solar array or heat radiator. Further, UIComponents is used to bootstrap the design and Persistence is used for database operations.

The Cosmonaut app links the Spacesuit and Cosmonaut domains. As with every other domain, each domain is responsible for the screens and the user's flow through its part of the app.

The Spacesuit and Cosmonaut domains link Spacesuit Service and Cosmonaut Service, the services that provide data for the domain-specific screens. UIComponents provides the UI parts.

Spacesuit Service uses Radio to communicate with the cosmonaut's spacesuit via BLE or another type of radio technology. Cosmonaut Service uses Network to update Houston about the current state of the cosmonaut and Persistence to store the cosmonaut's data for offline usage.

I will leave this one for the reader to figure out.
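One way to keep such a graph honest as it grows is to encode the allowed direction of links and check it, for example in a unit test or a CI script. The following is a minimal sketch. It assumes the rule that a module may only link modules from a strictly lower layer (Application, Domain, Service, Core, Shared, from top to bottom). It encodes the Overview and Cosmonaut links described above. The per-service core links of the peripheries services are simplified, because the text only says that those services use Network and Radio. Layer, Module and layeringViolations are hypothetical names, not part of any tool.

```swift
// Layers from top to bottom; a lower raw value means a higher layer.
enum Layer: Int, Comparable {
    case application = 0, domain, service, core, shared

    static func < (lhs: Layer, rhs: Layer) -> Bool { lhs.rawValue < rhs.rawValue }
}

struct Module: Hashable {
    let name: String
    let layer: Layer
}

// Modules from the ISS example (the app and the domain share the name "Cosmonaut").
let overview = Module(name: "Overview", layer: .application)
let cosmonautApp = Module(name: "Cosmonaut", layer: .application)
let peripheries = Module(name: "Peripheries", layer: .domain)
let spacesuitDomain = Module(name: "Spacesuit", layer: .domain)
let cosmonautDomain = Module(name: "Cosmonaut", layer: .domain)
let heatRadiator = Module(name: "Heat Radiator Service", layer: .service)
let solarArray = Module(name: "Solar Array Service", layer: .service)
let dockingPort = Module(name: "Docking Port Service", layer: .service)
let spacesuitService = Module(name: "Spacesuit Service", layer: .service)
let cosmonautService = Module(name: "Cosmonaut Service", layer: .service)
let network = Module(name: "Network", layer: .core)
let radio = Module(name: "Radio", layer: .core)
let persistence = Module(name: "Persistence", layer: .core)
let uiComponents = Module(name: "UIComponents", layer: .core)

// module -> modules it links
let links: [Module: [Module]] = [
    overview: [peripheries],
    peripheries: [heatRadiator, solarArray, dockingPort, uiComponents],
    heatRadiator: [network, radio],
    solarArray: [network, radio],
    dockingPort: [network, radio],
    cosmonautApp: [spacesuitDomain, cosmonautDomain],
    spacesuitDomain: [spacesuitService, uiComponents],
    cosmonautDomain: [cosmonautService, uiComponents],
    spacesuitService: [radio],
    cosmonautService: [network, persistence],
]

// Returns every link that does not point to a strictly lower layer.
func layeringViolations(in links: [Module: [Module]]) -> [(from: Module, to: Module)] {
    var violations: [(from: Module, to: Module)] = []
    for (module, dependencies) in links {
        for dependency in dependencies where dependency.layer <= module.layer {
            violations.append((from: module, to: dependency))
        }
    }
    return violations
}
```

Run against the links above, the check reports no violations. An upward link such as Network linking Cosmonaut Service would be reported.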

As you can probably imagine, scaling the architecture described above should not be a problem. For example, when the Overview app needs to be extended for another ISS periphery, a new domain module can easily be added along with some service modules.

When a requirement comes in to create a new app, e.g. for cosmonauts, the new app can link the battle-tested Cosmonaut domain module together with the other modules it requires. Development of the new app will thus become much easier.

Keeping the knowledge of the software in one repository that developers can access and learn from is also very beneficial.

There are, of course, some disadvantages as well. For example, onboarding new developers on such an architecture might take a while, especially when there is already a huge existing codebase. In such a case, pair programming comes into play. So do a proper project onboarding, a software architecture document and overall documentation of the modules, all of which help newcomers get on the right track.

\newpage

Before we dive deep into the development of the previously described architecture, there is some essential knowledge to cover. In particular, we need some background on the type of library that will be used for building such a project and how it behaves.

In Apple's ecosystem today, we have two main options when it comes to creating a library: it can be either statically or dynamically linked. The dynamically linked library, previously known as Cocoa Touch Framework, is nowadays referred to simply as Framework. The statically linked library is known as Static Library.

At this point, Swift Packages and the Swift Package Manager (SPM) also deserve a short note. SPM is part of Swift's ecosystem rather than Apple's. A Swift package describes how source code should be attached to a target, leaving it up to the package developer whether static or dynamic linking is used. By default (when a product does not specify its type), SPM uses static linking. Similarly to a Framework, a package can have additional resources attached to it. SPM is not designed to share compiled executables; it is designed to share source code files with ease. It has also become common practice to share an XCFramework via SPM, in which case SPM serves just as a wrapper around the attached compiled binary.
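To make the linking choice concrete, here is a sketch of a Package.swift manifest. The package, product and target names are hypothetical. They are prefixed with ISS to avoid clashing with Apple's own Network framework. The values that matter are these: type: .dynamic forces a dynamically linked product, and omitting type leaves the decision to the build system. .binaryTarget is how a pre-compiled XCFramework is typically vended, with SPM acting only as a wrapper around the binary. The binary path is an assumption.

```swift
// swift-tools-version:5.9
import PackageDescription

let package = Package(
    name: "ISSFoundation",
    platforms: [.iOS(.v15), .macOS(.v12)],
    products: [
        // Explicitly dynamic: built as a dynamically linked library.
        .library(name: "ISSNetwork", type: .dynamic, targets: ["ISSNetwork"]),
        // No type given: SPM/Xcode decide the linkage (in practice usually static).
        .library(name: "ISSLogging", targets: ["ISSLogging"]),
        // A pre-compiled XCFramework shipped through SPM.
        .library(name: "RadioSDK", targets: ["RadioSDK"])
    ],
    targets: [
        .target(name: "ISSLogging"),
        .target(name: "ISSNetwork", dependencies: ["ISSLogging"]),
        .binaryTarget(name: "RadioSDK", path: "Binaries/RadioSDK.xcframework")
    ]
)
```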

What is a library?

To quote Apple: "Libraries define symbols that are not built as part of your target."

What are symbols? Symbols refer to chunks of code or data within a binary.

\newpage

Types of libraries:

- Dynamically linked
  - Dylib: a library that has its own Mach-O (explained later) binary. (.dylib)
  - Framework: a bundle that contains the binary and other resources the binary might need during runtime. (.framework)
  - TBD: Text-Based Dynamic Library Stubs, a text stub of a library (symbols only) that stands in for a binary without including it, as the binary resides on the target system. Apple uses them to ship lightweight SDKs for development. (.tbd)
  - XCFramework: introduced in Xcode 11, it allows grouping a set of frameworks for different platforms, e.g. macOS, iOS, iOS simulator, watchOS, etc. (.xcframework)

- Statically linked
  - Archive: an archive of compiler-produced object files containing object code. (.a)
  - Framework: a framework that contains the static binary or static archive together with additional resources the library might need. (.framework)
  - XCFramework: as with dynamically linked libraries, an XCFramework can be used with statically linked ones too. (.xcframework)

We can look at a framework as a standalone bundle that has its own binary and can be attached to a project. Nevertheless, the binary cannot run by itself; it must be part of some runnable target. So what exactly is the difference?

The main difference between a static and a dynamic library lies in Inversion of Control (IoC) and in how they are linked to the main executable. When you use something from a static library, you are in control of it, as it becomes part of the main executable during the build process (linking). On the other hand, when you use something from a dynamic framework, you pass responsibility for it to the framework, as the framework is dynamically linked to the executable's process on app start. I'll delve more into IoC in the paragraph below. Static libraries, at least on iOS, cannot contain anything other than executable code unless they are wrapped into a static framework. A framework (dynamic or static) can contain everything you can think of, e.g. storyboards, XIBs, images and so on.

As mentioned above, dynamic framework code execution works slightly differently from a classic project or a static library. For instance, a function from a dynamic framework is called through the framework's interface. Let's say a class from a framework is instantiated in the project and a specific method is then called on it. When the call is made, you pass responsibility for it to the dynamic framework. The framework itself then makes sure that the specific action is executed and the results are passed back to the caller. This programming paradigm is known as Inversion of Control. Thanks to the umbrella file and module map, you know exactly what you can access and instantiate from the dynamic framework after it has been built.

A dynamic framework does not support a Bridging-Header file; instead, there is an umbrella header. The umbrella file should contain all the Objective-C imports you would normally have in the Bridging-Header file. It is one big interface for the dynamic framework and is usually named after the framework, e.g. MyFramework.h. If you do not want to add all the Objective-C headers manually, you can just mark the .h files as public, and Xcode copies them into the framework's headers when building. For Swift files, Xcode generates a MyFramework-Swift.h header (named after the module, not after a class) that is referenced from the framework's module map. It also exposes the publicly available Swift interfaces via the swiftmodule definition. You can inspect the final umbrella file, module map and swiftmodule of the compiled framework in the DerivedData folder.

On the other hand, a statically linked library is attached directly to the main executable during linking, as the library already contains a pre-compiled archive of the source files with their symbols. That being said, there is no need for an umbrella file, as there is with IoC in a dynamic framework.

It goes without saying that classes and other structures must be marked as public in order to be visible outside of a framework or a library. Not surprisingly, only objects that are needed for clients of a framework or a library should be exposed.
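Here is a short sketch of what exposing only what clients need looks like in Swift. Only the public declarations below would be visible to code that imports the framework, while internal members (the default) remain implementation details. SolarArrayStatus is a hypothetical type inspired by the ISS example. Everything sits in one file here, so the compiler does not enforce the module boundary in this snippet.

```swift
// Visible to clients of the framework.
public struct SolarArrayStatus {
    public let outputInWatts: Double
    public let isDeployed: Bool

    // A public struct's synthesized memberwise initializer is internal,
    // so clients outside the framework need an explicit public one.
    public init(outputInWatts: Double, isDeployed: Bool) {
        self.outputInWatts = outputInWatts
        self.isDeployed = isDeployed
    }

    // Public API built on top of an internal helper.
    public var summary: String {
        isDeployed ? "Deployed, \(formattedOutput)" : "Retracted"
    }

    // Internal by default: usable inside the framework only.
    var formattedOutput: String {
        "\(Int(outputInWatts.rounded())) W"
    }
}
```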

Now let's have a look at some pros & cons of both.

Dynamic:

- PROS
  - Can be opened on demand (dlopen), so it might never be opened at all if the user does not visit the specific part of the app.
  - Can be linked transitively to other dynamic libraries without any difficulty.
  - Can be exchanged without recompiling the main executable, just by replacing the framework with a new version.
  - Is mapped into memory separately from the main executable.
  - Can be shared between applications, which is especially useful for system libraries.
  - Can be loaded partially: only the needed symbols are loaded into memory (dlsym).
  - Can be loaded lazily: only objects that are referenced will be loaded.
  - Can be reused between targets, e.g. an iOS app and its app extensions, or a watch app and its extensions.
  - The library can perform cleanup tasks when it is closed (dlclose).
  - Potentially faster app start time if a library is linked lazily and opened at runtime.
  - Mergeable libraries, an Xcode 15 feature, can be used for production builds to combine the dynamic libraries into a single binary, leveraging the best of both worlds.
  - Hard separation of the codebase improves the compile time of the application.

- CONS
  - Slower app launch, as each dynamic library must be opened and loaded into memory separately. In the worst case, a library can run some computationally expensive algorithm in its initialiser when it is opened, which slows the start-up time even further.
  - The target must copy all dynamic libraries; otherwise, the app crashes on start or during runtime with dyld: Library not loaded.
  - The overall binary size is bigger than with a static library, as the linker can strip unused symbols from a static library at build time, while a dynamic library must keep at least its public symbols.
  - Replacing a dynamic library with a new version that has different interfaces can break the main executable.
  - Slower library API calls, as calls go through the library's interface instead of being direct calls within the main executable.
  - Launch time of the app might take longer if all dynamic libraries are opened during launch.

Static:

- PROS
  - Faster app start-up, as just one executable is loaded into memory.
  - Is part of the main executable, so the app cannot crash during launch or runtime because of a missing library.
  - Overall smaller size of the final executable, as unused symbols can be stripped.
  - In terms of call speed, there is no difference between the main executable and the library, as the library is part of the main executable.
  - The compiler can apply some extra optimisation while building the main executable.

- CONS
  - The library must NOT be linked transitively, as each link would add it again. The library must be present in memory only once, either in the main executable or in one of its dependencies. Otherwise, the app has to decide on startup which copy is going to be used.
  - The main executable must be recompiled when the library is updated, even if the library's interface remains the same.
  - Compile time is slower, as there are no hard interfaces and the build system must always figure out what to recompile.
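The dlopen, dlsym and dlclose calls mentioned in the pros of dynamic libraries can be tried out directly from Swift. The following minimal sketch opens the system C library on demand, resolves only the strlen symbol, and closes the handle again. The library paths are chosen merely so that the sketch runs on macOS and Linux; in an app you would typically open one of your own embedded frameworks instead. The helper name is hypothetical.

```swift
#if canImport(Darwin)
import Darwin
// libSystem is always available on Apple platforms.
let libraryPath = "/usr/lib/libSystem.B.dylib"
#else
import Glibc
// glibc's shared C library on Linux.
let libraryPath = "libc.so.6"
#endif

// C signature of strlen: size_t strlen(const char *).
typealias StrlenFunction = @convention(c) (UnsafePointer<CChar>) -> Int

// Opens the library on demand, looks up a single symbol and closes it again.
func lengthUsingDynamicallyLoadedStrlen(_ text: String) -> Int? {
    // dlopen: load (or reference an already loaded) library at runtime.
    guard let handle = dlopen(libraryPath, RTLD_LAZY) else { return nil }
    // dlclose: release our reference once we are done.
    defer { dlclose(handle) }
    // dlsym: resolve only the symbol we actually need.
    guard let symbol = dlsym(handle, "strlen") else { return nil }
    let strlenFunction = unsafeBitCast(symbol, to: StrlenFunction.self)
    return text.withCString { strlenFunction($0) }
}
```

The function type passed to unsafeBitCast must match the C signature exactly. The compiler cannot check it, and a mismatch is undefined behaviour. This is one reason why frameworks are usually linked rather than opened manually.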

When building any kind of modular architecture, it is crucial to keep in mind that a static library is attached to the executable, while a dynamic one is opened and linked at launch time. Therefore, if two frameworks link the same static library, the app will launch with warnings such as Class X is implemented in both A and B. One of the two will be used. Which one is undefined. That causes even slower app starts, as the app needs to decide which of those classes will be used. Furthermore, when two different versions of the same static library are used, the app will use them interchangeably, and debugging becomes a horror. That being said, it is very important to make sure that the linking was done right and that no such warnings appear.

All of this is why using dynamically linked frameworks for internal development is the way to go. However, working with static libraries is, unfortunately, inevitable, especially with 3rd party libraries. Big companies like Google, Microsoft or Amazon use static libraries to distribute their SDKs. As of now, for example, GoogleMaps, GooglePlaces, Firebase, MSAppCenter and all subsets of those SDKs are linked statically.

When a 3rd party dependency manager like CocoaPods is used to link one static library into more than one project (App or Framework), the installation fails with target has transitive dependencies that include static binaries. Therefore, it takes extra effort to link static binaries into multiple frameworks.

Let's have a look at how to link such a static library into a dynamically linked SDK.

As mentioned above, it takes extra effort to link a static library or static framework into a dynamically linked project correctly. The crucial part is to make sure that it is linked in only one place: either into one dynamic framework or into the app target. When it is linked into a dynamic framework, the static library can be exposed via the umbrella file and made available everywhere the framework is linked. When it is linked into the app target, the static library or framework cannot be exposed anywhere else directly. However, it can be passed to other frameworks at the code level through some level of abstraction. The same applies to the static framework.
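When the static library is linked only into the app target, other frameworks can still use it through an abstraction that they define and the app implements. In the minimal sketch below, MapRendering and StationTrackerViewModel would live in a dynamic framework, while GoogleMapsRenderer lives in the app target. In a real app, GoogleMapsRenderer is where the real GoogleMaps calls would go. Here it only records calls, so the example runs without the SDK. All names are hypothetical, and the coordinates are only approximate.

```swift
// Declared in a dynamic framework that must not link the static library itself.
public protocol MapRendering {
    func showMarker(latitude: Double, longitude: Double, title: String)
}

// Also in the framework: code that only knows the abstraction.
public final class StationTrackerViewModel {
    private let renderer: MapRendering

    public init(renderer: MapRendering) {
        self.renderer = renderer
    }

    public func showGroundStation() {
        renderer.showMarker(latitude: 29.55, longitude: -95.09, title: "Houston")
    }
}

// Implemented in the app target, the only place that links the static library.
// A real implementation would call the GoogleMaps SDK here; this one records calls.
final class GoogleMapsRenderer: MapRendering {
    private(set) var markers: [String] = []

    func showMarker(latitude: Double, longitude: Double, title: String) {
        markers.append("\(title) @ \(latitude), \(longitude)")
    }
}
```

The app creates GoogleMapsRenderer and injects it into the framework. In this way, the static library stays linked in exactly one place.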

An umbrella file exposing the GoogleMaps library linked into the framework could look like this:

```objc
// MyFramework.h - Umbrella file
#import <UIKit/UIKit.h>
#import <GoogleMaps/GoogleMaps.h>
```

Importing the GoogleMaps header file into the framework's umbrella file exposes all public headers of GoogleMaps, because GoogleMaps.h imports all of the GoogleMaps public headers.

```objc
// GoogleMaps.h
#import "GMSIndoorBuilding.h"
#import "GMSIndoorLevel.h"
#import "GMSAddress.h"
// ...
```

The library becomes available as soon as the MyFramework import precedes the GoogleMaps one.

```swift
// MyFileInApp.swift
import MyFramework
import GoogleMaps
// ...
```

In the case of the static GoogleMaps framework, it is necessary to copy its bundle into the app, because that is where the GoogleMaps binary looks for its resources (translations, images, and so on).
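Code compiled into a static library has no bundle of its own at runtime. Such code typically resolves its resources relative to the app's main bundle, which is why the .bundle has to be copied into the app. Here is a minimal sketch of such a lookup using Foundation's Bundle API. The resourceBundle(named:) helper is hypothetical, and it illustrates the mechanism only, not how GoogleMaps itself is implemented.

```swift
import Foundation

// Looks up "<name>.bundle" inside the host bundle (the app's main bundle by default)
// and returns it, or nil when the bundle was not copied into the app.
func resourceBundle(named name: String, in host: Bundle = .main) -> Bundle? {
    guard let url = host.url(forResource: name, withExtension: "bundle") else {
        return nil
    }
    return Bundle(url: url)
}

// Images or translations would then be loaded from the returned bundle.
if let bundle = resourceBundle(named: "GoogleMaps") {
    print("Found resources at \(bundle.bundlePath)")
} else {
    print("GoogleMaps.bundle is missing: copy it into the app target")
}
```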

Let us have a look at some commands that come in handy when solving compiler errors or when receiving a compiled closed-source dynamic framework or static library. To get a quick start, let's look at a binary we all know very well: UIKit. The path to UIKit.framework is:

/Applications/Xcode.app/Contents/Developer/Platforms/iPhoneOS.platform/Library/Developer/CoreSimulator/Profiles/Runtimes/iOS.simruntime/Contents/Resources/RuntimeRoot/System/Library/Frameworks/UIKit.framework

Apple ships various tools for exploring compiled libraries and frameworks. On the UIKit framework, I will demonstrate only the essential commands that I often find quite useful.

Before we start, it is crucial to know what we are going to explore. In the Apple ecosystem, the file format of binaries is called Mach-O (Mach object). Mach-O has a predefined structure: it starts with the Mach-O header, followed by the load commands and the segments with their sections, and so on.

Since you are surely a curious reader, by now you probably have many questions about where it all comes from. The answer is quite simple: it is all part of the system. You can open the header mach-o/loader.h in Xcode. It lives in the SDK under usr/include/mach-o/loader.h (xcrun --show-sdk-path prints the SDK root; older macOS versions also exposed it at the global path /usr/include/mach-o/loader.h). In the loader.h file, for example, the Mach-O header struct is defined:

```c
/*
 * The 64-bit mach header appears at the very beginning of object files for
 * 64-bit architectures.
 */
struct mach_header_64 {
    uint32_t      magic;      /* mach magic number identifier */
    cpu_type_t    cputype;    /* cpu specifier */
    cpu_subtype_t cpusubtype; /* machine specifier */
    uint32_t      filetype;   /* type of file */
    uint32_t      ncmds;      /* number of load commands */
    uint32_t      sizeofcmds; /* the size of all the load commands */
    uint32_t      flags;      /* flags */
    uint32_t      reserved;   /* reserved */
};
```

When the compiler produces the final executable, the Mach-O header is placed at a fixed byte position in it. Therefore, tools that work with executables know exactly where to look for the desired information. The same principle applies to all other parts of Mach-O as well.
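To see that fixed layout in action, here is a minimal Swift sketch that mirrors mach_header_64 and reads its eight 32-bit fields from raw bytes at their fixed offsets (0, 4, ..., 28). It assumes a thin, little-endian 64-bit Mach-O, as produced for x86_64 and arm64. It deliberately avoids the Darwin-only MachO module so it stays self-contained. The type and function names are hypothetical.

```swift
// Mirrors the fields of struct mach_header_64 from <mach-o/loader.h>.
struct MachHeader64 {
    let magic: UInt32
    let cputype: Int32
    let cpusubtype: Int32
    let filetype: UInt32
    let ncmds: UInt32
    let sizeofcmds: UInt32
    let flags: UInt32
    let reserved: UInt32
}

// MH_MAGIC_64; a little-endian 64-bit Mach-O file starts with the bytes CF FA ED FE.
let machMagic64: UInt32 = 0xfeedfacf
let machHeader64Size = 32  // 8 fields of 4 bytes each

enum MachOError: Error {
    case tooShort
    case notMachO64(magic: UInt32)
}

// Reads a little-endian UInt32 (the byte order of x86_64 and arm64 binaries).
func readUInt32LE(_ bytes: [UInt8], at offset: Int) -> UInt32 {
    var value: UInt32 = 0
    for index in 0..<4 {
        value |= UInt32(bytes[offset + index]) << (8 * index)
    }
    return value
}

func parseMachHeader64(_ bytes: [UInt8]) throws -> MachHeader64 {
    guard bytes.count >= machHeader64Size else { throw MachOError.tooShort }
    let magic = readUInt32LE(bytes, at: 0)
    guard magic == machMagic64 else { throw MachOError.notMachO64(magic: magic) }
    // Each field sits at a fixed offset, exactly as laid out in the C struct.
    return MachHeader64(
        magic: magic,
        cputype: Int32(bitPattern: readUInt32LE(bytes, at: 4)),
        cpusubtype: Int32(bitPattern: readUInt32LE(bytes, at: 8)),
        filetype: readUInt32LE(bytes, at: 12),
        ncmds: readUInt32LE(bytes, at: 16),
        sizeofcmds: readUInt32LE(bytes, at: 20),
        flags: readUInt32LE(bytes, at: 24),
        reserved: readUInt32LE(bytes, at: 28)
    )
}
```

To try it on a real thin binary, you could read the file with FileManager.default.contents(atPath:) and pass [UInt8](data). For a fat binary, such as the UIKit one below, you would first have to jump to the offset of one of its slices.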

For further exploration of Mach-O file, I would recommend reading the following article.

First, let's look at which architectures the binary contains (fat headers). For that, we are going to use otool, a utility that ships with Xcode and its Command Line Tools. To list the fat headers of a compiled binary, we use the -f flag, and to produce symbolic, human-readable output, I also added the -v flag.

```shell
otool -fv ./UIKit
```

Not surprisingly, the output lists two architectures. This UIKit binary belongs to the simulator runtime, so one slice is for the simulator on Intel Macs (x86_64) and the other is for the simulator on Apple silicon Macs such as the M1 (arm64).

```
Fat headers
fat_magic FAT_MAGIC
nfat_arch 2
architecture x86_64
    cputype CPU_TYPE_X86_64
    cpusubtype CPU_SUBTYPE_X86_64_ALL
    capabilities 0x0
    offset 4096
    size 26736
    align 2^12 (4096)
architecture arm64
    cputype CPU_TYPE_ARM64
    cpusubtype CPU_SUBTYPE_ARM64_ALL
    capabilities 0x0
    offset 32768
    size 51504
    align 2^14 (16384)
```
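The same information can also be read by hand. The fat header starts with FAT_MAGIC, followed by the number of architectures and one fat_arch entry per slice (cputype, cpusubtype, offset, size, align), all stored big-endian. Here is a minimal sketch under those assumptions. It ignores the 64-bit FAT_MAGIC_64 variant, and the names are hypothetical.

```swift
// FAT_MAGIC from <mach-o/fat.h>; the fat header is always stored big-endian.
let fatMagic: UInt32 = 0xcafebabe

// Mirrors struct fat_arch: five 32-bit big-endian fields per architecture.
struct FatArch {
    let cputype: Int32
    let cpusubtype: Int32
    let offset: UInt32
    let size: UInt32
    let align: UInt32  // power of two, e.g. 12 means 2^12 = 4096
}

func readUInt32BE(_ bytes: [UInt8], at offset: Int) -> UInt32 {
    var value: UInt32 = 0
    for index in 0..<4 {
        value = (value << 8) | UInt32(bytes[offset + index])
    }
    return value
}

// Returns the architecture slices, or nil for a thin binary without a fat header
// (the case in which otool -f prints nothing).
func parseFatArchs(_ bytes: [UInt8]) -> [FatArch]? {
    guard bytes.count >= 8, readUInt32BE(bytes, at: 0) == fatMagic else { return nil }
    let count = Int(readUInt32BE(bytes, at: 4))
    let archSize = 20
    guard bytes.count >= 8 + count * archSize else { return nil }
    return (0..<count).map { index -> FatArch in
        let base = 8 + index * archSize
        return FatArch(
            cputype: Int32(bitPattern: readUInt32BE(bytes, at: base)),
            cpusubtype: Int32(bitPattern: readUInt32BE(bytes, at: base + 4)),
            offset: readUInt32BE(bytes, at: base + 8),
            size: readUInt32BE(bytes, at: base + 12),
            align: readUInt32BE(bytes, at: base + 16)
        )
    }
}

// CPU_TYPE_X86_64 and CPU_TYPE_ARM64 are the base type OR-ed with CPU_ARCH_ABI64 (0x01000000).
func architectureName(_ cputype: Int32) -> String {
    switch cputype {
    case 0x0100_0007: return "x86_64"
    case 0x0100_000C: return "arm64"
    case 12: return "arm"
    default: return "unknown (\(cputype))"
    }
}
```

Fed with the bytes of the UIKit binary above (for example via FileManager.default.contents(atPath:)), this sketch should yield the same two slices, at offsets 4096 and 32768.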

When the command finishes successfully without printing any output, the binary simply does not contain a fat header. In that case, the library can run on only one architecture. To see which one, we have to print the Mach-O header of the executable.

```shell
otool -hv ./UIKit
```

From the output of the Mach-O header, we can see that the cputype is X86_64. We can also see some extra information, such as the header flags, the filetype, and so on.

```
Mach header
      magic cputype cpusubtype  caps    filetype ncmds sizeofcmds
MH_MAGIC_64  X86_64        ALL  0x00       DYLIB    21       1400
      flags
NOUNDEFS DYLDLINK TWOLEVEL APP_EXTENSION_SAFE
```

Second, let us determine what type of library we are dealing with. For that, we will again use otool. The Mach-O header specifies the filetype, so running otool again on UIKit.framework with the -hv flags produces the following output:

```
Mach header
      magic cputype cpusubtype  caps    filetype ncmds sizeofcmds
MH_MAGIC_64  X86_64        ALL  0x00       DYLIB    21       1400
      flags
NOUNDEFS DYLDLINK TWOLEVEL APP_EXTENSION_SAFE
```

From the filetype, we can see that it is a dynamically linked library. Together with its .framework extension, this tells us that it is a dynamic framework. As described earlier, a framework can be either dynamically or statically linked. A good example of a statically linked framework is GoogleMaps.framework. When we run the same command on the GoogleMaps binary, the output shows that the binary is NOT dynamically linked. Its filetype is OBJECT (an object file), which means that the library is static and is linked into the executable at build time.

```
Mach header
      magic cputype cpusubtype  caps    filetype ncmds sizeofcmds      flags
MH_MAGIC_64  X86_64        ALL  0x00      OBJECT     4       2688   SUBSECTIONS_VIA_SYMBOLS
```

The static library was wrapped into a framework because GoogleMaps.bundle has to ship with it. The bundle must be copied into the target for the library to work correctly with its resources.

Now, let's run the same command on a static library archive. As an example, we can again use one of Xcode's libraries, located at the /Applications/Xcode.app/Contents/Developer/Toolchains/XcodeDefault.xctoolchain/usr/lib/swift/iphoneos/libswiftCompatibility50.a path. From the .a extension, we can immediately tell that the library is static. Running otool -hv libswiftCompatibility50.a prints a Mach-O header for every object file in the archive and confirms that the filetype is OBJECT.

```
Archive : ./libswiftCompatibility50.a (architecture armv7)
Mach header
      magic cputype cpusubtype  caps    filetype ncmds sizeofcmds      flags
   MH_MAGIC     ARM         V7  0x00      OBJECT     4        588   SUBSECTIONS_VIA_SYMBOLS
Mach header
      magic cputype cpusubtype  caps    filetype ncmds sizeofcmds      flags
   MH_MAGIC     ARM         V7  0x00      OBJECT     5        736   SUBSECTIONS_VIA_SYMBOLS
...
```

A library archive ending in .a is clearly static, but a framework can contain either kind of binary. To be sure that a framework is dynamically linked, it is therefore necessary to check the filetype in its Mach-O header.
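The reasoning above can be condensed into a small decision helper. This is a sketch under simplifying assumptions. It only recognises the .a archive signature and thin 64-bit Mach-O binaries; a fat binary would first have to be split into its slices. The Linkage type and the linkage(of:) function are hypothetical names. The filetype values are MH_OBJECT (0x1) and MH_DYLIB (0x6) from mach-o/loader.h.

```swift
// Hypothetical decision helper mirroring the reasoning above.
enum Linkage: Equatable { case staticLibrary, dynamicLibrary, other }

func linkage(of bytes: [UInt8]) -> Linkage {
    // 1. A static archive (.a) starts with the "!<arch>\n" signature.
    if bytes.starts(with: Array("!<arch>\n".utf8)) { return .staticLibrary }
    // 2. Otherwise read the little-endian magic and filetype of a thin 64-bit Mach-O.
    func le32(_ i: Int) -> UInt32? {
        guard i >= 0, i + 4 <= bytes.count else { return nil }
        return bytes[i..<i + 4].reversed().reduce(UInt32(0)) { $0 << 8 | UInt32($1) }
    }
    guard le32(0) == 0xfeedfacf, let fileType = le32(12) else { return .other }
    switch fileType {
    case 0x1: return .staticLibrary    // MH_OBJECT, as reported for the GoogleMaps binary
    case 0x6: return .dynamicLibrary   // MH_DYLIB, as reported for UIKit
    default: return .other             // MH_EXECUTE, MH_BUNDLE, ...
    }
}
```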

Third, let's have a look at what the library links against. For that, otool provides the -L flag.

```bash
otool -L ./UIKit
```

The output lists all dependencies of the UIKit framework. For example, you can see here that UIKit links Foundation. This is also why a separate import Foundation is not needed in a source file that already imports UIKit: the UIKit module imports and re-exports Foundation.

```
./UIKit:
    /System/Library/Frameworks/UIKit.framework/UIKit ...
    /System/Library/Frameworks/FileProvider.framework/FileProvider ...
    /System/Library/Frameworks/Foundation.framework/Foundation ...
    /System/Library/PrivateFrameworks/DocumentManager.framework/DocumentManager ...
    /System/Library/PrivateFrameworks/UIKitCore.framework/UIKitCore ...
    /System/Library/PrivateFrameworks/ShareSheet.framework/ShareSheet ...
    /usr/lib/libobjc.A.dylib ...
    /usr/lib/libSystem.B.dylib ...
```
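Under the hood, otool -L walks the load commands that follow the Mach-O header and prints the name stored in every dylib-related command. These are LC_ID_DYLIB (the library's own install name, which is why UIKit itself is listed first), LC_LOAD_DYLIB, and its weak and re-export variants. Below is a simplified sketch of that walk for a thin, little-endian 64-bit binary. The function name is hypothetical; the command constants come from mach-o/loader.h.

```swift
// Sketch of what `otool -L` does: walk the load commands that follow the
// 32-byte mach_header_64 and collect the names of dylib commands.
// Assumes a thin, little-endian 64-bit Mach-O image.
let LC_LOAD_DYLIB: UInt32 = 0xc
let LC_ID_DYLIB: UInt32 = 0xd
let LC_LOAD_WEAK_DYLIB: UInt32 = 0x8000_0018
let LC_REEXPORT_DYLIB: UInt32 = 0x8000_001f

func linkedDylibs(_ bytes: [UInt8]) -> [String] {
    func le32(_ i: Int) -> UInt32? {
        guard i >= 0, i + 4 <= bytes.count else { return nil }
        return bytes[i..<i + 4].reversed().reduce(UInt32(0)) { $0 << 8 | UInt32($1) }
    }
    guard le32(0) == 0xfeedfacf, let ncmds = le32(16) else { return [] }
    let dylibCommands: Set<UInt32> = [LC_ID_DYLIB, LC_LOAD_DYLIB, LC_LOAD_WEAK_DYLIB, LC_REEXPORT_DYLIB]
    var names: [String] = []
    var offset = 32                                   // first load command
    for _ in 0..<ncmds {
        // Every load command starts with cmd and cmdsize.
        guard let cmd = le32(offset), let size = le32(offset + 4), size >= 8 else { break }
        if dylibCommands.contains(cmd), let nameOffset = le32(offset + 8) {
            // dylib.name is an offset from the start of this load command
            // to a NUL-terminated C string stored inside the command.
            let start = offset + Int(nameOffset)
            let end = min(offset + Int(size), bytes.count)
            if start < end {
                let raw = bytes[start..<end].prefix(while: { $0 != 0 })
                names.append(String(decoding: raw, as: UTF8.self))
            }
        }
        offset += Int(size)
    }
    return names
}
```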

Fourth, it is also useful to know which symbols are defined in the framework. For that, the nm utility is available. To print all symbols, including the debugging ones, I added the -a flag, as well as -C to print them demangled. Name mangling encodes extra information about a declaration into its symbol name at compile time. This includes its module, its enclosing type, its kind (class, struct, enum, getter, setter ...) and its signature. Mangling keeps symbols unique, for example for overloaded functions or for same-named types in different modules. It also lets tools such as debuggers and demanglers recover the original declaration from a symbol. The linker itself simply matches the mangled names.

```bash
nm -Ca ./UIKit
```

Unfortunately, the output here is very limited: the listed symbols are only the ones that define the dynamic framework itself. The limitation is there because Apple ships the binary obfuscated. When reverse-engineering it with, for example, the Radare2 disassembler, all we can see is a couple of add byte assembly instructions. Note also that much of UIKit's implementation lives in the private UIKitCore.framework, which shows up in the otool -L output above. It is still possible to dump the list of symbols, but for that we would have to either use lldb with the UIKit framework loaded into memory, or dump the framework's memory footprint and explore it decrypted. That, unfortunately, is not part of this book.

```
0000000000000ff0 s _UIKitVersionNumber
0000000000000fc0 s _UIKitVersionString
                 U dyld_stub_binder
```

Just to give an example of how the symbols might look, I printed out the symbols of the compiled Realm framework by running nm -Ca ./Realm.

```
...
2c4650 T realm::Table::do_move_row(unsigned long, unsigned long)
2cb1e8 T realm::Table::do_set_link(unsigned long, unsigned long, unsigned long)
4305e0 S realm::Table::max_integer
4305e8 S realm::Table::min_integer
2c44b4 T realm::Table::do_swap_rows(unsigned long, unsigned long)
2ce9bc T realm::Table::find_all_int(unsigned long, long long)
2cb3ac T realm::Table::get_linklist(unsigned long, unsigned long)
2c4d64 T realm::Table::set_subtable(unsigned long, unsigned long, realm::Table const*)
2bd9f8 T realm::Table::create_column(realm::ColumnType, unsigned long, bool, realm::Allocator&)
2bf3fc T realm::Table::discard_views()
...
```

As the symbols show, Realm's core is written in C++, and it can be clearly seen what kind of symbols are available within the binary. Let us look at one more example, this time for Swift, with Alamofire. Here, unfortunately, nm was not able to demangle the symbols.

```
...
34d00 T _$s9Alamofire7RequestC8delegateAA12TaskDelegateCvM
34dc0 T _$s9Alamofire7RequestC4taskSo16NSURLSessionTaskCSgvg
34e20 T _$s9Alamofire7RequestC7sessionSo12NSURLSessionCvg
34e50 T _$s9Alamofire7RequestC7request10Foundation10URLRequestVSgvg
350c0 T _$s9Alamofire7RequestC8responseSo17NSHTTPURLResponseCSgvg
351e0 T _$s9Alamofire7RequestC10retryCountSuvpfi
...
```
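Swift mangling spells out every identifier as its length followed by its characters. In these symbols, 9Alamofire is the module name Alamofire and 7Request is the type Request, while the letters in between encode things such as the kind of declaration (C for a class) and the involved types. The sketch below is deliberately naive and is not a demangler. It only collects those length-prefixed identifiers and skips everything else, so it will misread substitutions and other operators in more complex symbols. The function name is hypothetical; for real work, a proper demangler such as swift demangle is the right tool.

```swift
// Deliberately naive illustration, NOT a demangler: it only extracts the
// <length><characters> identifiers from a Swift 5 mangled symbol.
func lengthPrefixedIdentifiers(in symbol: String) -> [String] {
    var chars = Array(symbol)
    // Drop the "_$s" / "$s" prefix that marks a Swift 5 mangled symbol.
    if symbol.hasPrefix("_$s") {
        chars.removeFirst(3)
    } else if symbol.hasPrefix("$s") {
        chars.removeFirst(2)
    }
    var identifiers: [String] = []
    var i = 0
    while i < chars.count {
        // Skip everything that is not an ASCII digit (operators, type codes, ...).
        guard chars[i].isASCII, let first = chars[i].wholeNumberValue else {
            i += 1
            continue
        }
        // Read the whole decimal length, e.g. "16" in "16NSURLSessionTask".
        var length = first
        i += 1
        while i < chars.count, chars[i].isASCII, let digit = chars[i].wholeNumberValue {
            length = min(length * 10 + digit, chars.count + 1)   // clamp to avoid overflow
            i += 1
        }
        // Take the next `length` characters as the identifier, if they exist.
        if length > 0, i + length <= chars.count {
            identifiers.append(String(chars[i..<i + length]))
            i += length
        }
    }
    return identifiers
}
```

For example, _$s9Alamofire7RequestC10retryCountSuvpfi yields Alamofire, Request and retryCount. The demangled explanation in the output below adds what the skipped letters mean.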

To demangle Swift symbols manually, the following command can be used:

```bash
nm -a ./Alamofire | awk '{ print $3 }' | xargs swift demangle
```

It prints each mangled symbol name together with its demangled explanation.

```
...
_$s9Alamofire7RequestC4taskSo16NSURLSessionTaskCSgvg
---> Alamofire.Request.task.getter : __C.NSURLSessionTask?
_$s9Alamofire7RequestC4taskSo16NSURLSessionTaskCSgvgTq
---> method descriptor for Alamofire.Request.task.getter : __C.NSURLSessionTask?
_$s9Alamofire7RequestC10retryCountSuvpfi
---> variable initialization expression of Alamofire.Request.retryCount : Swift.UInt
...
```

Last but not least, it can also be helpful to list all strings that the binary contains. That could help catch developers' mistakes, such as non-obfuscated secrets and other strings that should not be part of the binary. To do that, we will use the strings utility on the Alamofire binary.

```bash
strings ./Alamofire
```

The output is a list of plain text strings found in the binary.

```
...
Could not fetch the file size from the provided URL:
The URL provided is a directory:
The system returned an error while checking the provided URL for reachability.
URL:
The URL provided is not reachable:
...
```
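The idea behind strings is simple enough to sketch in a few lines. The tool scans the bytes and reports every run of printable ASCII characters that is at least four characters long; four is the default minimum of strings. This hypothetical helper scans the whole buffer, whereas the real tool applies its own rules about which Mach-O sections it looks at, so the results can differ.

```swift
// Minimal sketch of the `strings` idea: report every run of at least
// `minLength` printable ASCII characters (plus tabs) in a byte buffer.
func printableStrings(in bytes: [UInt8], minLength: Int = 4) -> [String] {
    var results: [String] = []
    var current: [UInt8] = []
    for byte in bytes + [0] {                               // trailing 0 flushes the last run
        if (0x20...0x7e).contains(byte) || byte == 0x09 {   // printable ASCII or tab
            current.append(byte)
        } else {
            if current.count >= minLength {
                results.append(String(decoding: current, as: UTF8.self))
            }
            current.removeAll()
        }
    }
    return results
}
```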

The last missing piece of information is how it all gets glued together. As Apple developers, we use Xcode to develop apps for Apple products, which are then distributed via the App Store or other distribution channels. Under the hood, Xcode uses the Xcode Build System to produce the final executables that run on the x86 and ARM processor architectures.

The Xcode build system consists of multiple steps that depend on each other. It supports the C-based languages (C, C++, Objective-C, Objective-C++), compiled with clang, as well as Swift, compiled with swiftc.

Let's have a quick look at what Xcode does when the build is triggered.

- Preprocessing

Preprocessing resolves macros, removes comments, imports files and so on. In a nutshell, it prepares the code for the compiler. Strictly speaking, this is a step of the C-based languages, whose preprocessor is part of clang; Swift has no separate preprocessor. The build system decides which compiler handles which source file based on the file type. Not surprisingly, Swift source files are compiled by swiftc, and C-like files by clang.

- Compiler (swiftc, clang)

As mentioned above, the Xcode build system uses two compilers: clang and swiftc. A compiler consists of two parts, a front-end and a back-end. Both compilers have a language-specific front-end and share the same back-end, LLVM (originally short for Low Level Virtual Machine). The job of the compiler is to translate the preprocessed source files into assembly, a human-readable textual form of the instructions that a concrete type of CPU understands. In practice, clang and swiftc run the assembler step internally and emit object files directly.

- Assembler (as)

The assembler takes the output of the compiler (assembly) and produces relocatable machine code, stored in object files. Machine code is understood by a concrete type of processor (ARM, x86). The opposite of relocatable machine code is absolute machine code. Relocatable code can be placed at any position in memory by the loader, while absolute machine code has its position fixed in the binary.

- Linker (ld)

The final step of the build system is linking. The linker is a program that takes object files (the individually compiled files) and links (merges) them together. In doing so, it resolves the symbols those files use against each other, as well as against the static and dynamic libraries they need. To find those libraries, the linker needs to know the paths where to look for them. The linker produces a single final file: the Mach-O executable.

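- Loader (dyld)

After the executable has been built, the job of the loader is to bring it into memory and start the program's execution. On Apple platforms, the kernel first maps the Mach-O executable into the memory space of a new process. It then hands control to the dynamic loader, dyld, which loads the dynamic libraries the executable links against and binds their symbols before the program's own code starts running.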
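The linker's core job, matching every referenced symbol with exactly one definition, can be illustrated with a toy model. This is not how ld is implemented: real object files carry symbol tables, relocations and sections. The ObjectFile type and the resolveSymbols function are hypothetical. They only show the bookkeeping: collect definitions, reject duplicates, and report references that no object file or dynamic library provides.

```swift
// Toy model of symbol resolution, not how ld is implemented.
struct ObjectFile {
    let name: String
    let defined: Set<String>     // symbols this object file provides
    let undefined: Set<String>   // symbols it references but does not define
}

enum LinkError: Error, Equatable {
    case undefinedSymbols([String])
    case duplicateSymbol(String)
}

/// Returns a table mapping each defined symbol to the object file defining it.
func resolveSymbols(_ objects: [ObjectFile], dylibExports: Set<String> = []) throws -> [String: String] {
    var definitions: [String: String] = [:]
    for object in objects {
        for symbol in object.defined {
            if definitions[symbol] != nil { throw LinkError.duplicateSymbol(symbol) }
            definitions[symbol] = object.name
        }
    }
    // Symbols exported by dynamic libraries are only recorded at link time;
    // the loader binds them when the program launches.
    let missing = objects.flatMap { $0.undefined }
        .filter { definitions[$0] == nil && !dylibExports.contains($0) }
    if !missing.isEmpty { throw LinkError.undefinedSymbols(Array(Set(missing)).sorted()) }
    return definitions
}
```

An undefinedSymbols result here corresponds to ld's familiar "Undefined symbols" error at build time.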

Now you should have a high-level overview of what phases the Xcode build system goes through when the build is started.

I hope this chapter provided a clear understanding of the essential differences between static and dynamic libraries, as well as some clear examples showing how to examine them. It was quite a lot to grasp, so now it's time for a double shot of espresso or any other refreshment of your choice.

I highly recommend diving even deeper into this topic. Here are some resources I would recommend:

- Exploring iOS-es Mach-O Executable Structure
- Difference in between static and dynamic library from our beloved StackOverflow
- Dynamic Library Programming Topics
- Xcode Build System : Know it better
- Behind the Scenes of the Xcode Build Process

Used binaries:

\newpage

Since we touched on Xcode's build system in the previous chapter, it would be unfair to leave swiftc untouched. Knowing how a compiler works is not mandatory knowledge. It is really interesting, though, and it rounds off the whole process, from writing human-readable code to running it on bare metal. In this chapter, we are going to look at how a library can be built purely within Swift's ecosystem.

While other chapters are rather essential for having a good understanding of the development of modular architecture, this chapter is optional.

To fully understand Swift's compiler architecture and its process, let us have a look at the documentation provided by swift.org and do some practical examples based on it.

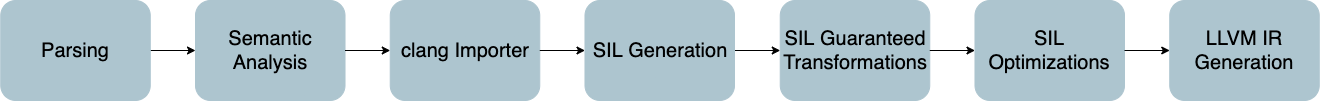

The image above describes the swiftc architecture. It consists of seven steps, which are explained in the following subchapters.

For demonstration purposes, I prepared two simple Swift source files: employee.swift and main.swift. The file employee.swift is standalone source code, while main.swift requires the Employee library to be linked to it. All compiler steps are explained on employee.swift. In the end, the employee source code will be built as a library that the main file consumes and uses.

employee.swift

```swift
import Foundation

public protocol Address {
    var houseNo: Int { get }
    var street: String { get }
    var city: String { get }
    var state: String { get }
}

public protocol Person {
    var firstName: String { get }
    var lastName: String { get }
    var address: Address { get }
}

public class Employee: Person {
    public let firstName: String
    public let lastName: String
    public let address: Address

    public init(firstName: String, lastName: String, address: Address) {
        self.firstName = firstName
        self.lastName = lastName
        self.address = address
    }

    public func printEmployeeInfo() {
        print("\(firstName) \(lastName)")
        print("\(address.houseNo). \(address.street), \(address.city), \(address.state)")
    }
}

public struct EmployeeAddress: Address {
    public let houseNo: Int
    public let street: String
    public let city: String
    public let state: String

    public init(houseNo: Int, street: String, city: String, state: String) {
        self.houseNo = houseNo
        self.street = street
        self.city = city
        self.state = state
    }
}
```

main.swift

```swift
import Foundation
import Employee

let employee = Employee(firstName: "Cyril",
                        lastName: "Cermak",
                        address: EmployeeAddress(houseNo: 1, street: "PorschePlatz", city: "Stuttgart", state: "Germany"))
employee.printEmployeeInfo()
```

\newpage

The parser is a simple, recursive-descent parser (implemented in lib/Parse) with an integrated, hand-coded lexer. The parser is responsible for generating an Abstract Syntax Tree (AST) without any semantic or type information, and emit warnings or errors for grammatical problems with the input source.

Source: swift.org

Parsing comes first in the compilation process. As the definition says, the parser is responsible for checking the syntax (grammar) of the source, without any type checking. The following command prints the parsed AST.

```bash
swiftc ./employee.swift -dump-parse
```

In the output, you can notice that the types are not resolved and that the dump ends with errors.

```
(source_file "./employee.swift"
// Importing Foundation
(import_decl range=[./employee.swift:1:1 - line:1:8] 'Foundation')
// Address protocol declaration
(protocol range=[./employee.swift:3:8 - line:8:1] "Address" <Self : Address> requirement signature=<null>
(pattern_binding_decl range=[./employee.swift:4:5 - line:4:28]
(pattern_typed
(pattern_named 'houseNo')
(type_ident
(component id='Int' bind=none))))
// houseNo variable declaration
(var_decl range=[./employee.swift:4:9 - line:4:9] "houseNo" type='<null type>' readImpl=getter immutable
(accessor_decl range=[./employee.swift:4:24 - line:4:24] 'anonname=0x7fc1e408db80' get_for=houseNo
// Not recognized Int type
(parameter "self"
./employee.swift:4:18: error: cannot find type 'Int' in scope
    var houseNo: Int { get }
                 ^~~
)
...
// Employee class declaration
(class_decl range=[./employee.swift:16:8 - line:31:1] "Employee" inherits: <null>
(pattern_binding_decl range=[./employee.swift:17:12 - line:17:27]
(pattern_typed
(pattern_named 'firstName')
(type_ident
(component id='String' bind=none))))
// Employee class vars declaration
(var_decl range=[./employee.swift:17:16 - line:17:16] "firstName" type='<null type>' let readImpl=stored immutable)
(pattern_binding_decl range=[./employee.swift:18:12 - line:18:26]
(pattern_typed
(pattern_named 'lastName')
(type_ident
(component id='String' bind=none))))
...
```

From the parsed AST, we can see that it is really descriptive: the source code of employee.swift has 47 lines of code, while its parsed AST without type checking has 270.

Out of curiosity, let us have a look at how the tree would look with a syntax error. To do so, I added the all-time winner of hide and seek, a semicolon (;), to the protocol declaration.

```swift
public protocol Address {
    var houseNo: Int; { get }
```

After running the same command, we can see a syntax error at the declaration of the houseNo variable. That is the error Xcode would show as soon as it parses the source file.

```
...
(var_decl range=[./employee.swift:4:9 - line:4:9] "houseNo" type='<null type>'
./employee.swift:4:9: error: property in protocol must have explicit { get } or { get set } specifier
    var houseNo: Int; { get }
...
```
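To get a feeling for what recursive descent means, here is a toy parser in Swift for a tiny expression language: numbers, names, + and * with parentheses. It is an illustration only and has nothing to do with Swift's actual grammar or lib/Parse. Each grammar rule becomes one function that calls the others recursively, the lexer is hand-coded, and the resulting tree carries no type information. Names stay unresolved, much like the bind=none components in the -dump-parse output above. All type and function names are hypothetical.

```swift
// Toy recursive-descent parser (not Swift's grammar): builds an untyped AST.
indirect enum Expr: Equatable {
    case number(Int)
    case name(String)                    // an unresolved identifier, no type attached
    case binary(Character, Expr, Expr)   // operator, left operand, right operand
}

struct ParseError: Error, Equatable {
    let message: String
}

struct Parser {
    private let chars: [Character]
    private var pos = 0

    init(_ source: String) {
        // The "lexer" is trivial: whitespace is dropped, and the parse
        // functions below read characters directly.
        chars = Array(source.filter { !$0.isWhitespace })
    }

    private var current: Character? { pos < chars.count ? chars[pos] : nil }

    private static func isDigit(_ c: Character) -> Bool { ("0"..."9").contains(c) }

    mutating func parse() throws -> Expr {
        let expr = try parseExpression()
        if let c = current { throw ParseError(message: "unexpected '\(c)'") }
        return expr
    }

    // expression := term ("+" term)*
    private mutating func parseExpression() throws -> Expr {
        var lhs = try parseTerm()
        while current == "+" {
            pos += 1
            let rhs = try parseTerm()
            lhs = .binary("+", lhs, rhs)
        }
        return lhs
    }

    // term := factor ("*" factor)*
    private mutating func parseTerm() throws -> Expr {
        var lhs = try parseFactor()
        while current == "*" {
            pos += 1
            let rhs = try parseFactor()
            lhs = .binary("*", lhs, rhs)
        }
        return lhs
    }

    // factor := number | identifier | "(" expression ")"
    private mutating func parseFactor() throws -> Expr {
        guard let c = current else { throw ParseError(message: "unexpected end of input") }
        if c == "(" {
            pos += 1
            let inner = try parseExpression()
            guard current == ")" else { throw ParseError(message: "expected ')'") }
            pos += 1
            return inner
        }
        if Parser.isDigit(c) {
            var digits = ""
            while let d = current, Parser.isDigit(d) { digits.append(d); pos += 1 }
            guard let value = Int(digits) else { throw ParseError(message: "number too large") }
            return .number(value)
        }
        if c.isLetter {
            var identifier = ""
            while let l = current, l.isLetter || Parser.isDigit(l) { identifier.append(l); pos += 1 }
            return .name(identifier)
        }
        throw ParseError(message: "unexpected '\(c)'")
    }
}
```

For example, parsing houseNo * (2 + 3) yields .binary("*", .name("houseNo"), .binary("+", .number(2), .number(3))). Parsing 1 + ; fails with unexpected ';', a purely syntactic error, just like the misplaced semicolon above.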

\newpage

Semantic analysis (implemented in lib/Sema) is responsible for taking the parsed AST and transforming it into a well-formed, fully-type-checked form of the AST, emitting warnings or errors for semantic problems in the source code. Semantic analysis includes type inference and, on success, indicates that it is safe to generate code from the resulting, type-checked AST.

Source: swift.org

Semantic analysis comes after parsing. By its definition, we should now see a fully type-checked AST. Executing the following command will give us the answer.

```bash
swiftc ./employee.swift -dump-ast
```

In the output, all types are resolved and recognised by the compiler, and the errors no longer appear.

```
// Address protocol with resolved types
(protocol range=[./employee.swift:3:8 - line:8:1] "Address" <Self : Address> interface type='Address.Protocol' access=public non-resilient requirement signature=<Self>
(pattern_binding_decl range=[./employee.swift:4:5 - line:4:18] trailing_semi
(pattern_typed type='Int'
(pattern_named type='Int' 'houseNo')
(type_ident
(component id='Int' bind=Swift.(file).Int))))
...
// EmployeeAddress conforming to the Address protocol
(struct_decl range=[./employee.swift:33:8 - line:45:1] "EmployeeAddress" interface type='EmployeeAddress.Type' access=public non-resilient inherits: Address
(pattern_binding_decl range=[./employee.swift:34:12 - line:34:25]
(pattern_typed type='Int'
(pattern_named type='Int' 'houseNo')
(type_ident
(component id='Int' bind=Swift.(file).Int))))
// Variable declaration on EmployeeAddress
(var_decl range=[./employee.swift:34:16 - line:34:16] "houseNo" type='Int' interface type='Int' access=public let readImpl=stored immutable
(accessor_decl implicit range=[./employee.swift:34:16 - line:34:16] 'anonname=0x7fd2821409e8' interface type='(EmployeeAddress) -> () -> Int' access=public get_for=houseNo
(parameter "self" type='EmployeeAddress' interface type='EmployeeAddress')
(parameter_list)
(brace_stmt implicit range=[./employee.swift:34:16 - line:34:16]
(return_stmt implicit
(member_ref_expr implicit type='Int' decl=employee.(file).EmployeeAddress.houseNo@./employee.swift:34:16 direct_to_storage
(declref_expr implicit type='EmployeeAddress' decl=employee.(file).EmployeeAddress.<anonymous>.self@./employee.swift:34:16 function_ref=unapplied))))))
```

Not surprisingly, when using an unknown type, the command results in an error.

```swift
public protocol Address {
    var houseNo: Foo { get }
```

```
./employee.swift:4:18: error: cannot find type 'Foo' in scope
    var houseNo: Foo { get }
                 ^~~
(source_file "./employee.swift"
(import_decl range=[./employee.swift:1:1 - line:1:8] 'Foundation')
(protocol range=[./employee.swift:3:8 - line:8:1] "Address" <Self : Address> interface type='Address.Protocol' access=public non-resilient requirement signature=<Self>
(pattern_binding_decl range=[./employee.swift:4:5 - line:4:28]
(pattern_typed type='<<error type>>'
```
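Semantic analysis can be pictured as a second pass over the untyped tree. The sketch below is a drastic simplification with hypothetical types. Each parsed property carries only the name of its type, and the checker resolves that name against a table of types in scope. The result is either a typed property or a diagnostic worded like the error above. The scope table in the usage is made up; the real type checker also performs type inference, overload resolution, conformance checking and much more.

```swift
// Toy "semantic analysis": resolve type names of parsed properties.
struct ParsedProperty {              // what the parser produced: a name and a type *name*
    let name: String
    let typeName: String
}

struct TypedProperty: Equatable {    // after semantic analysis: the type is resolved
    let name: String
    let type: String                 // fully qualified, e.g. "Swift.Int"
}

func typeCheck(_ properties: [ParsedProperty],
               scope: [String: String]) -> (typed: [TypedProperty], errors: [String]) {
    var typed: [TypedProperty] = []
    var errors: [String] = []
    for property in properties {
        if let resolved = scope[property.typeName] {
            typed.append(TypedProperty(name: property.name, type: resolved))
        } else {
            errors.append("cannot find type '\(property.typeName)' in scope")
        }
    }
    return (typed, errors)
}
```

Calling typeCheck with a houseNo property of type name Foo and a scope containing only Int and String produces the diagnostic cannot find type 'Foo' in scope.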

The Clang importer (implemented in lib/ClangImporter) imports Clang modules and maps the C or Objective-C APIs they export into their corresponding Swift APIs. The resulting imported ASTs can be referred to by semantic analysis.

Source: swift.org

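The third step in the compilation process is the Clang importer. This is the well-known bridging that makes C and Objective-C APIs available to Swift. The opposite direction, exposing Swift APIs to Objective-C, is not the importer's job; it is handled through the generated -Swift.h header.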
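The Clang importer is at work every time Swift calls into a C API. In the following small example, strlen and getpid are plain C functions declared in the C library headers. Swift calls them directly because the importer maps their C signatures to Swift ones; for instance, size_t is imported as Int and pid_t as Int32. The Darwin/Glibc switch is only there so that the snippet also builds on Linux.

```swift
// Plain C functions, imported into Swift by the Clang importer.
#if canImport(Darwin)
import Darwin
#else
import Glibc
#endif

let length = strlen("Cosmonaut")   // the Swift String is passed as a const char*
let pid = getpid()                 // pid_t is imported as Int32
print("strlen: \(length), pid: \(pid)")
```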

The Swift Intermediate Language (SIL) is a high-level, Swift-specific intermediate language suitable for further analysis and optimization of Swift code. The SIL generation phase (implemented in lib/SILGen) lowers the type-checked AST into so-called "raw" SIL. The design of SIL is described in docs/SIL.rst.

Source: swift.org

The fourth step in the compilation process is SIL generation. Are you curious about how SIL looks? To print it, we can use the following command.

```bash
swiftc ./employee.swift -emit-sil
```

In the output, we can see the witness tables and vtables alongside other intermediate declarations. Unfortunately, an explanation of these is out of the scope of this book. More about these topics can be found in the article about method dispatch.

```
...
// protocol witness for Address.state.getter in conformance EmployeeAddress
sil shared [transparent] [serialized] [thunk] @$s8employee15EmployeeAddressVAA0C0A2aDP5stateSSvgTW : $@convention(witness_method: Address) (@in_guaranteed EmployeeAddress) -> @owned String {
// %0 // user: %1
bb0(%0 : $*EmployeeAddress):
  %1 = load %0 : $*EmployeeAddress // user: %3
  // function_ref EmployeeAddress.state.getter
  %2 = function_ref @$s8employee15EmployeeAddressV5stateSSvg : $@convention(method) (@guaranteed EmployeeAddress) -> @owned String // user: %3
  %3 = apply %2(%1) : $@convention(method) (@guaranteed EmployeeAddress) -> @owned String // user: %4
  return %3 : $String // id: %4
} // end sil function '$s8employee15EmployeeAddressVAA0C0A2aDP5stateSSvgTW'

sil_vtable [serialized] Employee {
  #Employee.init!allocator: (Employee.Type) -> (String, String, Address) -> Employee : @$s8employee8EmployeeC9firstName04lastD07addressACSS_SSAA7Address_ptcfC // Employee.__allocating_init(firstName:lastName:address:)
  #Employee.printEmployeeInfo: (Employee) -> () -> () : @$s8employee8EmployeeC05printB4InfoyyF // Employee.printEmployeeInfo()
  #Employee.deinit!deallocator: @$s8employee8EmployeeCfD // Employee.__deallocating_deinit
}

sil_witness_table [serialized] Employee: Person module employee {
  method #Person.firstName!getter: <Self where Self : Person> (Self) -> () -> String : @$s8employee8EmployeeCAA6PersonA2aDP9firstNameSSvgTW // protocol witness for Person.firstName.getter in conformance Employee
  method #Person.lastName!getter: <Self where Self : Person> (Self) -> () -> String : @$s8employee8EmployeeCAA6PersonA2aDP8lastNameSSvgTW // protocol witness for Person.lastName.getter in conformance Employee
  method #Person.address!getter: <Self where Self : Person> (Self) -> () -> Address : @$s8employee8EmployeeCAA6PersonA2aDP7addressAA7Address_pvgTW // protocol witness for Person.address.getter in conformance Employee
}

sil_witness_table [serialized] EmployeeAddress: Address module employee {
  method #Address.houseNo!getter: <Self where Self : Address> (Self) -> () -> Int : @$s8employee15EmployeeAddressVAA0C0A2aDP7houseNoSivgTW // protocol witness for Address.houseNo.getter in conformance EmployeeAddress
  method #Address.street!getter: <Self where Self : Address> (Self) -> () -> String : @$s8employee15EmployeeAddressVAA0C0A2aDP6streetSSvgTW // protocol witness for Address.street.getter in conformance EmployeeAddress
  method #Address.city!getter: <Self where Self : Address> (Self) -> () -> String : @$s8employee15EmployeeAddressVAA0C0A2aDP4citySSvgTW // protocol witness for Address.city.getter in conformance EmployeeAddress
  method #Address.state!getter: <Self where Self : Address> (Self) -> () -> String : @$s8employee15EmployeeAddressVAA0C0A2aDP5stateSSvgTW // protocol witness for Address.state.getter in conformance EmployeeAddress
}
...
```
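To see both kinds of tables in a smaller program, consider the following sketch, in which all type names are made up. A call through an existential such as any Vehicle is dispatched via the protocol witness table. Calling a non-final class method goes through the class vtable, so the override in the subclass is picked at run time. Compiling the file with swiftc -emit-sil should show a sil_witness_table for Spaceship: Vehicle and sil_vtable entries for Capsule and Soyuz, similar to the Employee output above.

```swift
protocol Vehicle {
    func name() -> String
}

struct Spaceship: Vehicle {
    func name() -> String { "Spaceship" }
}

class Capsule {
    func name() -> String { "Capsule" }
}

final class Soyuz: Capsule {
    override func name() -> String { "Soyuz" }
}

// Called through an existential: dispatched via the protocol witness table.
func describeVehicle(_ vehicle: any Vehicle) -> String { vehicle.name() }

// Called on a non-final class: dispatched via the class vtable,
// so the subclass override is chosen at run time.
func describeCapsule(_ capsule: Capsule) -> String { capsule.name() }

print(describeVehicle(Spaceship()))   // Spaceship
print(describeCapsule(Soyuz()))       // Soyuz
```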

Furthermore, the SIL goes through two more phases: guaranteed transformations and optimisations.

SIL guaranteed transformations: The SIL guaranteed transformations (implemented in lib/SILOptimizer/Mandatory) perform additional dataflow diagnostics that affect the correctness of a program (such as a use of uninitialized variables). The end result of these transformations is "canonical" SIL.

Source: swift.org

SIL Optimizations: The SIL optimizations (implemented in lib/Analysis, lib/ARC, lib/LoopTransforms, and lib/Transforms) perform additional high-level, Swift-specific optimizations to the program, including (for example) Automatic Reference Counting optimizations, devirtualization, and generic specialization.

Source: swift.org

\newpage

IR generation (implemented in lib/IRGen) lowers SIL to LLVM IR, at which point LLVM can continue to optimize it and generate machine code.

Source: swift.org

The final step in the compilation process is the generation of LLVM IR (Intermediate Representation). To get the IR from swiftc, we can use the following command:

```bash
swiftc ./employee.swift -emit-ir | more
```

Here we can see a snippet of the familiar LLVM IR code. In the next step, LLVM would transform this code into machine code.

```
...
entry:
  %1 = getelementptr inbounds %__opaque_existential_type_1, %__opaque_existential_type_1* %0, i32 0, i32 1
  %2 = load %swift.type*, %swift.type** %1, align 8
  %3 = getelementptr inbounds %__opaque_existential_type_1, %__opaque_existential_type_1* %0, i32 0, i32 0
  %4 = bitcast %swift.type* %2 to i8***
  %5 = getelementptr inbounds i8**, i8*** %4, i64 -1
  %.valueWitnesses = load i8**, i8*** %5, align 8, !invariant.load !64, !dereferenceable !65
  %6 = bitcast i8** %.valueWitnesses to %swift.vwtable*
  %7 = getelementptr inbounds %swift.vwtable, %swift.vwtable* %6, i32 0, i32 10
  %flags = load i32, i32* %7, align 8, !invariant.load !64
  %8 = and i32 %flags, 131072
  %flags.isInline = icmp eq i32 %8, 0
  br i1 %flags.isInline, label %inline, label %outline
...
```

\newpage

Finally, we can explore how to manually create a library out of the source code and link it towards the executable.

The following command exports the employee.swift file as a libEmployee.dylib dynamic library, together with its module definition (-emit-module). To obtain a static library instead, we could pass -static together with -emit-library. Alternatively, -emit-object produces an object file that can then be archived into a static library.

```bash
swiftc ./employee.swift -emit-library -emit-module -parse-as-library -module-name Employee
```

After executing the command, the following files should be created.

```
380B Apr 2 13:36 Employee.swiftdoc
19K Apr 3 20:52 Employee.swiftmodule
2.9K Apr 3 20:52 Employee.swiftsourceinfo
1.1K Apr 3 20:52 employee.swift
57K Apr 3 20:52 libEmployee.dylib
```

Now we can import the Employee library into the main.swift file and proceed with the compilation. However, here we have to tell the compiler and the linker where to find the Employee library. In this example, I placed the library, together with its module files, into a directory named Frameworks, which resides on the same level as main.swift.

```bash
swiftc main.swift -emit-executable -lEmployee -I ./Frameworks -L ./Frameworks
```

To give a bit more explanation, swiftc -h describes those flags as follows:

```
...
-emit-executable   Emit a linked executable
-I <value>         Add directory to the import search path
-L <value>         Add directory to library link search path
-l<value>          Specifies a library which should be linked against
...
```

Hurray, the executable was created with the linked library! Unfortunately, it crashes right at launch with the following:

```
dyld: Library not loaded: libEmployee.dylib
  Referenced from: /Users/cyrilcermak/Programming/iOS/modular_architecture_on_ios/example/./main
  Reason: image not found
[1] 92481 abort ./main
```

Using the knowledge from the previous chapter, we can check where the binary expects the library to be with the command otool -l ./main.

```
Load command 15
          cmd LC_LOAD_DYLIB
      cmdsize 48
         name libEmployee.dylib (offset 24)
   time stamp 2 Thu Jan 1 01:00:02 1970
      current version 0.0.0
compatibility version 0.0.0
```

The load command records only the bare name libEmployee.dylib, without any directory, so dyld does not look for the library in ./Frameworks, where it actually resides. This can easily be fixed with one more tool, install_name_tool, which changes the path of a linked library recorded in the main executable. It can be used as follows:

```bash
install_name_tool -change libEmployee.dylib @executable_path/Frameworks/libEmployee.dylib main
```
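The fix relies on dyld expanding the @executable_path prefix to the directory that contains the main executable. Here is a simplified model of that substitution. The function name is hypothetical, and the real loader also handles @loader_path, @rpath and its search-path fallbacks, which are left out here.

```swift
// Simplified model of how dyld expands @executable_path in an install name.
func resolveInstallName(_ installName: String, executablePath: String) -> String {
    let token = "@executable_path"
    guard installName.hasPrefix(token) else { return installName }
    // Directory that contains the executable, e.g. "/Users/me/example".
    let directory: Substring
    if let slash = executablePath.lastIndex(of: "/") {
        directory = executablePath[..<slash]
    } else {
        directory = "."
    }
    return String(directory) + installName.dropFirst(token.count)
}
```

With the executable at .../example/main, the new install name resolves to .../example/Frameworks/libEmployee.dylib, which is exactly where the library was placed.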

Running it again prints the desired output:

```
Cyril Cermak
1. PorschePlatz, Stuttgart, Germany
```

\newpage

In this chapter, the basics of the Swift compiler architecture were explored. I hope this (optional) chapter gave a high-level overview and sparked more curiosity about how compilers work. For further study, I would refer to the following sources:

- Understanding Swift Performance
- Understanding method dispatch in Swift
- Getting Started with Swift Compiler Development
- executable path, load path and rpath

\newpage

The necessary theory about Apple's libraries and some essentials were explained. Finally, it is time to deep dive into the building phase.

First, let us do it manually and automate the process of creating libraries later on so that the newcomers do not have to copy-paste much of the boilerplate code when starting a new team or new part of the application framework.

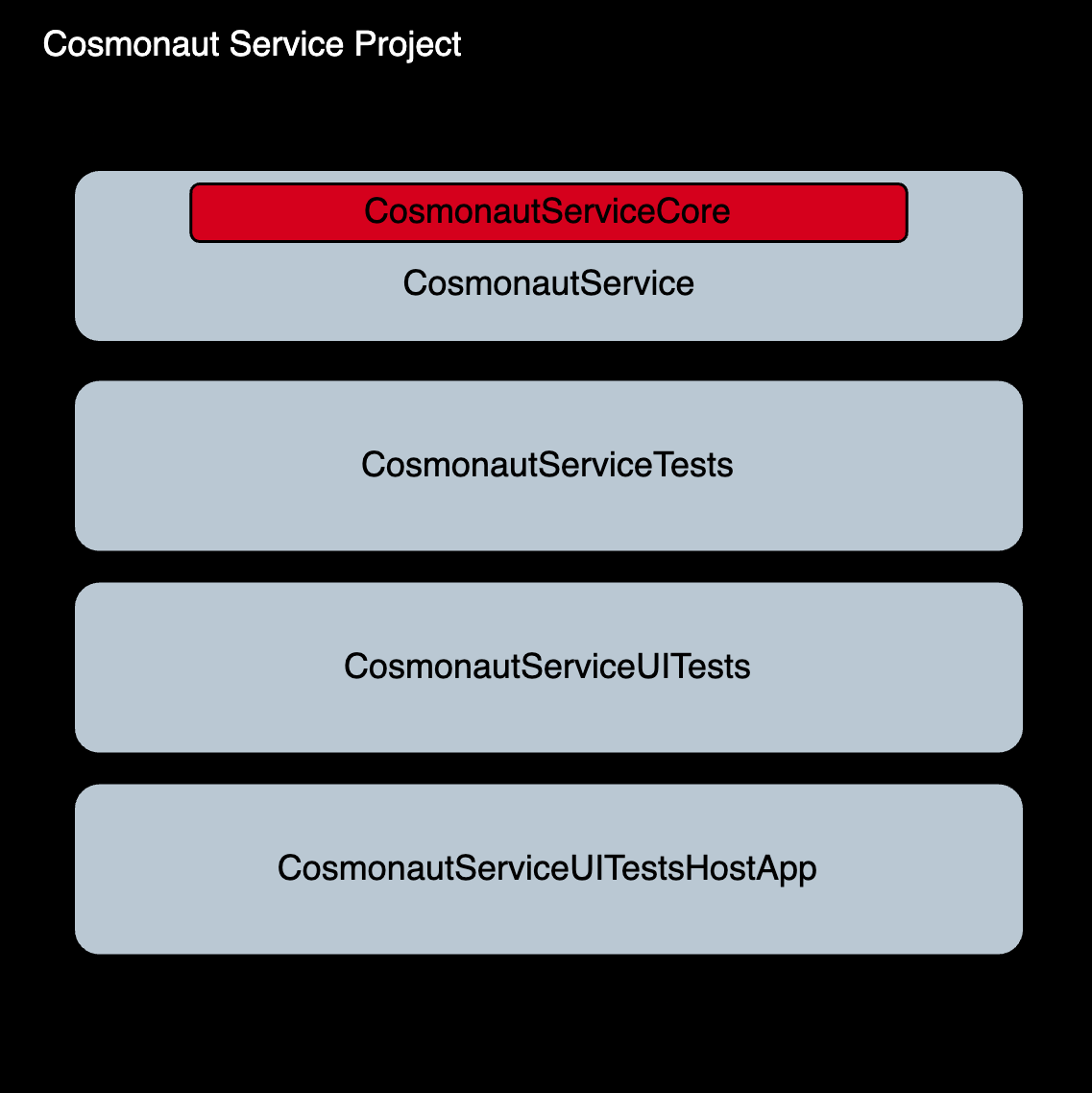

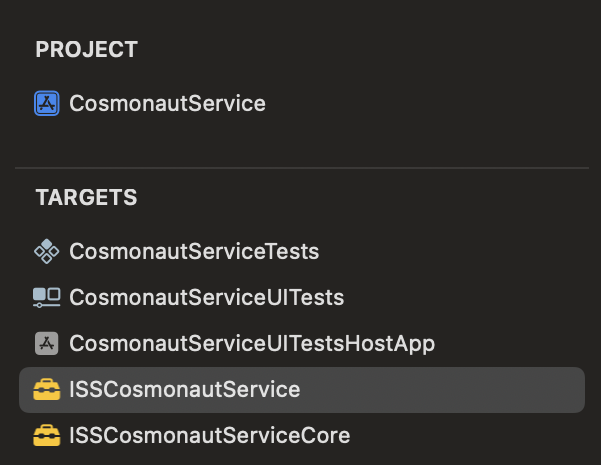

For demonstration purposes, I chose the Cosmonaut app with all its necessary dependencies. Nevertheless, the same principle applies to all other apps within our future iOS/macOS ISS foundational framework.

You can look at the iss_modular_architecture folder and fully focus on the step-by-step explanations in the book, or you can build it on your own up until a certain point.

As a reminder, the following schema showcases the Cosmonaut app with its dependencies.


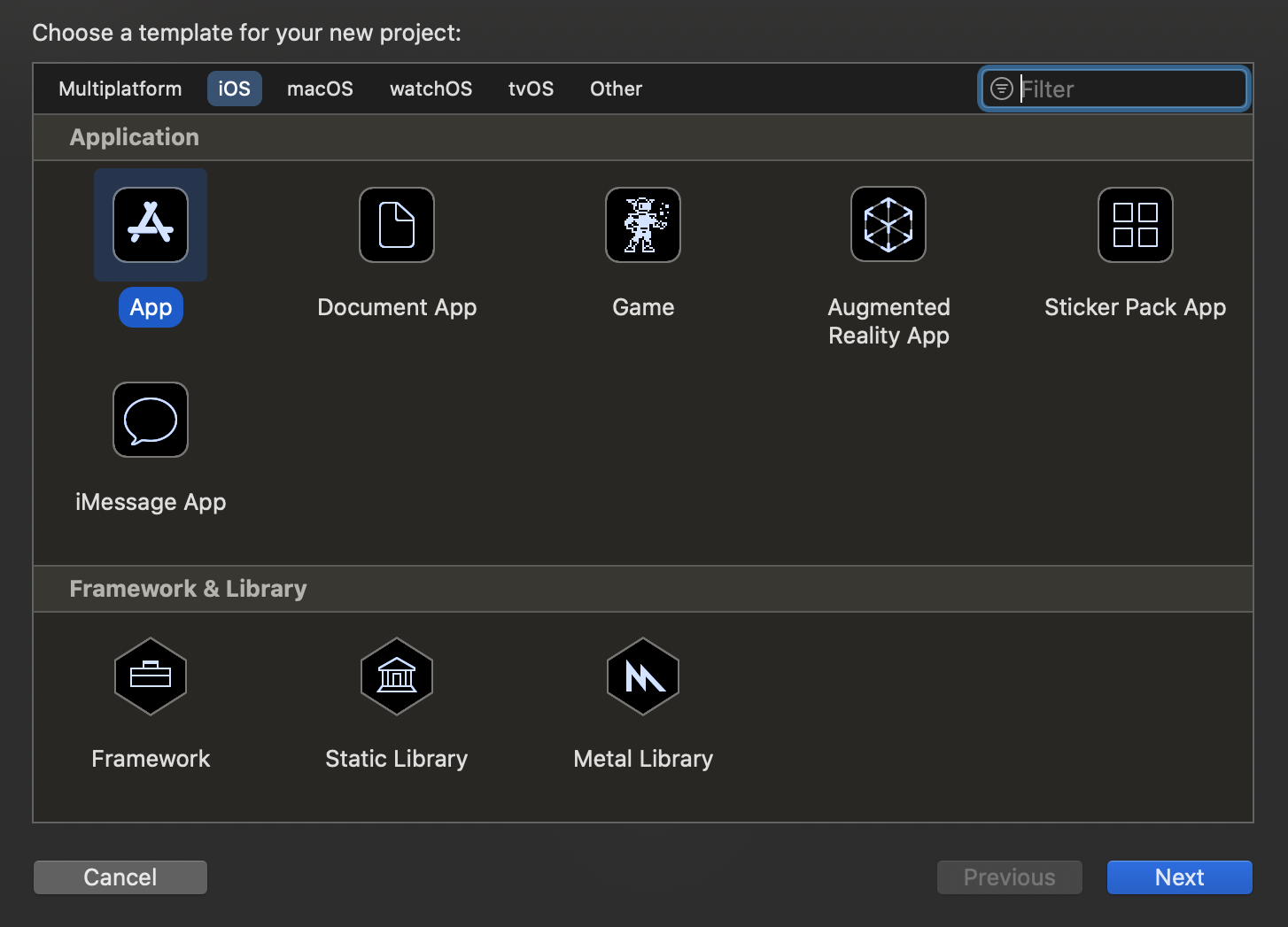

First, let us manually create the Cosmonaut app from Xcode under the iss_application_framework/app/ directory. To achieve that, simply create a new App from Xcode's menu and save it under the predefined folder path with the name Cosmonaut. An empty app project should be created, and you can run it if you want. Nevertheless, for our purposes, this project structure is not optimal, as we will be working in a workspace that contains multiple projects (apps and frameworks).


Since we do not have CocoaPods yet, which would convert the project into a workspace, we have to do it manually. In Xcode, under File, select the option Save As Workspace. Close the project and open the workspace that Xcode has just created. So far, the workspace contains only the app. Now it is time to create the necessary dependencies for the Cosmonaut app.

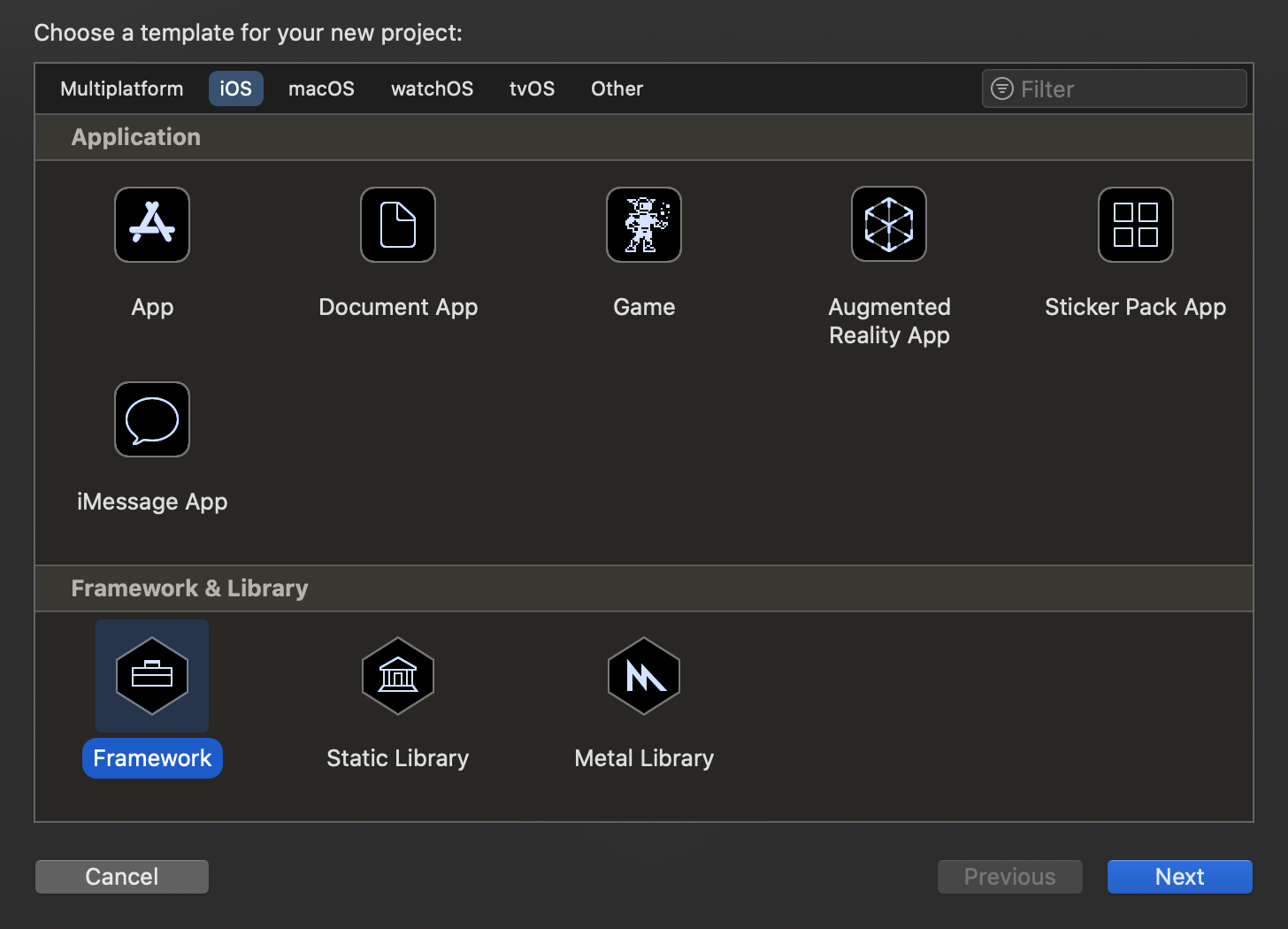

Going top-down through the diagram, the Domain layer comes first, where Spacesuit, Cosmonaut and Scaffold need to be created. For creating the Spacesuit, let us use Xcode one last time. In the new project dialog, select the framework icon, name it Spacesuit and save it under the iss_application_framework/domain/ directory.


While creating new frameworks and apps is not daily business, the process still needs to ensure that correct namespaces and conventions are used across the whole application framework. This usually leads to copy-pasting an existing framework or app to create a new one with the same patterns. Now is a good time to create the first script that will support the development of the application framework.

If you are building the application framework from scratch, please copy the {PROJECT_ROOT}/fastlane directory from the repository into your root directory.

The scripting around the framework with Fastlane is explained later on in the book. For now, all you need to know is that Fastlane contains the lane make_new_project, which takes three arguments: type {app|framework}, project_name and destination_path. The lane simply uses an instance of the ProjectFactory class located in the {PROJECT_ROOT}/fastlane/scripts/ProjectFactory/project_factory.rb file.

The ProjectFactory creates a new framework or app based on the type parameter that is passed to it from the command line. As an example, the following command creates the Spacesuit domain framework.

```bash
fastlane make_new_project type:framework project_name:Spacesuit destination_path:../domain/Spacesuit
```

If Fastlane is not installed on your Mac, you can install it via brew install fastlane, or later on via the Ruby gems defined in the Gemfile. For installation, please follow the official manual.

Furthermore, now that we have the script, all the remaining dependencies can be created with it.

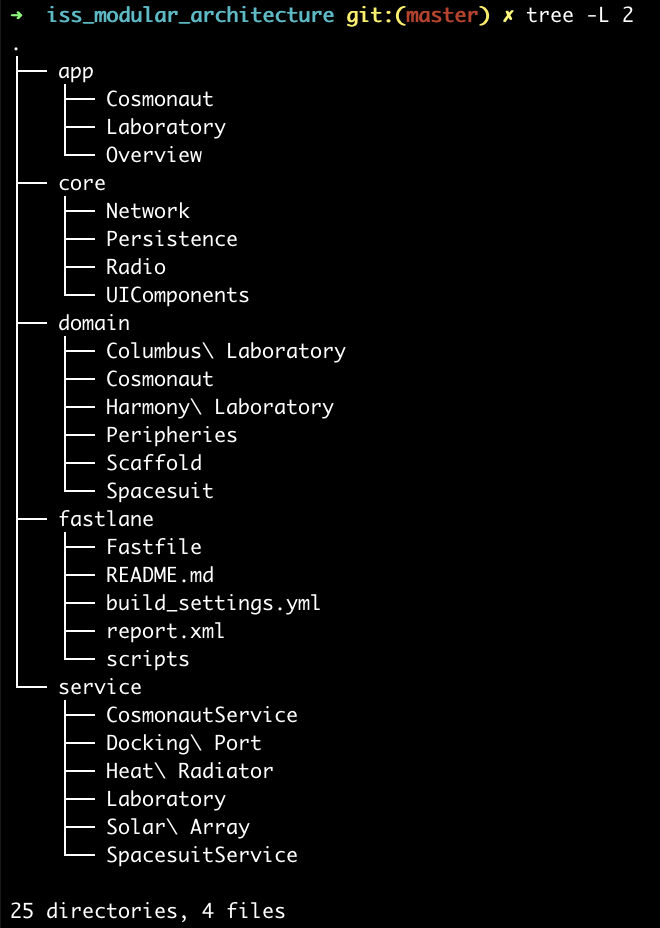

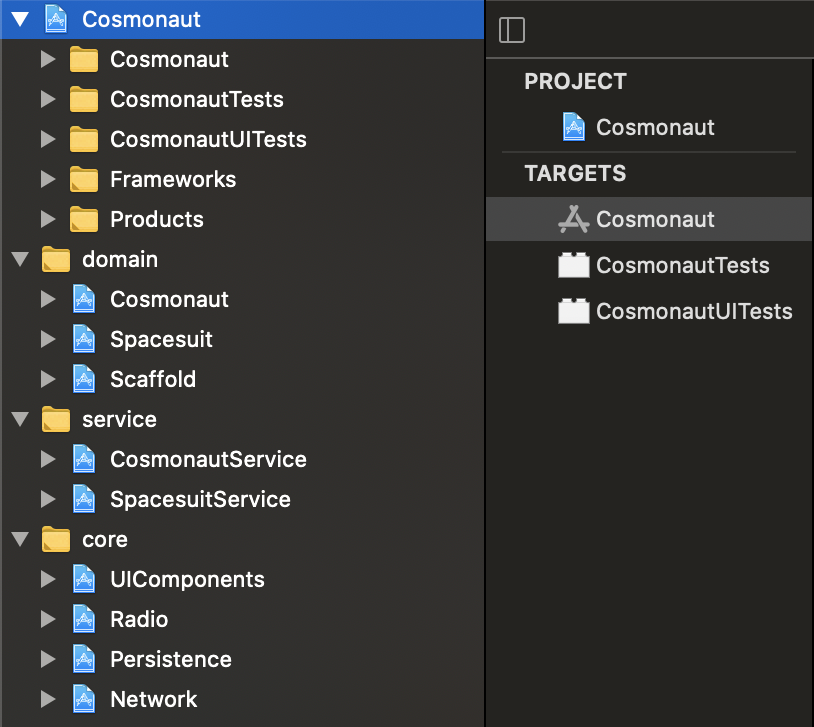

The overall ISS Application Framework should look as follows:

Each directory contains an Xcode project which is either a framework or an app created by the script. From now on, every onboarded team or developer should use the script when adding a framework or an app.

Last but not least, let us create the same directory structure in Xcode's workspace so that we can later link those frameworks together and to the app. The workspace Cosmonaut.xcworkspace resides in the Cosmonaut folder under the app folder. An xcworkspace is simply a directory that contains:

- xcshareddata: a directory that contains schemes, breakpoints and other shared information
- xcuserdata: a directory that contains information about the current user's interface state, the files the user opened or modified, and so on
- contents.xcworkspacedata: an XML file that describes which projects are linked to the workspace, so that Xcode can understand it
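Because contents.xcworkspacedata is plain XML, it can also be produced by a script instead of by hand. The following Ruby sketch writes the kind of XML Xcode uses for groups and project references. The `workspace_xml` helper and the core and domain project paths are illustrative assumptions, and Xcode itself may format the file slightly differently.

```ruby
# Escapes characters that may not appear verbatim inside XML attribute values.
def xml_escape(text)
  text.to_s.gsub("&", "&amp;").gsub("<", "&lt;").gsub(">", "&gt;").gsub('"', "&quot;")
end

# Builds the XML for contents.xcworkspacedata. `groups` maps a group name
# (e.g. "domain") to .xcodeproj paths relative to the workspace's folder.
def workspace_xml(groups)
  lines = ['<?xml version="1.0" encoding="UTF-8"?>', "<Workspace", '   version = "1.0">']
  groups.each do |group_name, project_paths|
    lines << "   <Group"
    lines << '      location = "container:"'
    lines << "      name = \"#{xml_escape(group_name)}\">"
    project_paths.each do |path|
      lines << "      <FileRef"
      lines << "         location = \"group:#{xml_escape(path)}\">"
      lines << "      </FileRef>"
    end
    lines << "   </Group>"
  end
  lines << "</Workspace>"
  lines.join("\n") + "\n"
end

# Hypothetical paths, relative to app/Cosmonaut where the workspace lives.
xml = workspace_xml({
  "app" => ["Cosmonaut.xcodeproj"],
  "domain" => ["../../domain/Spacesuit/Spacesuit.xcodeproj"],
  "core" => ["../../core/Network/Network.xcodeproj"]
})
puts xml
# File.write("Cosmonaut.xcworkspace/contents.xcworkspacedata", xml)
```

Generating the file from the list of projects keeps the workspace in sync with the directory structure created by the make_new_project lane, rather than relying on manual drag and drop.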

The workspace structure can be created either by dragging and dropping all the necessary framework projects for the Cosmonaut app into the workspace or by directly modifying the contents.xcworkspacedata XML file. No matter which way is chosen, the final xcworkspace should look as follows:

You might have noticed the project.yml file that was created with every framework or app. This file is used by XcodeGen (introduced in a moment) to generate the project based on the settings described in the YAML file. This avoids conflicts in Apple's infamous project.pbxproj files, which represent each project. In a modular architecture, this is particularly useful because we are working with many projects across the workspace.

Conflicts in project.pbxproj files are very common when more than one developer is working on the same codebase. Besides the build settings of the project, this file contains and tracks the files that are included in the compilation, as well as the targets to which these files belong. A typical conflict happens when one developer removes a file from Xcode's structure while another developer modifies it in a separate branch. This results in a merge conflict in the pbxproj file, which is very time-consuming to fix because the file is written in Apple's mystified format that no one can understand.

Since programmers are lazy creatures, it also happens very often that a file removed from an Xcode project remains in the repository, because only its reference was removed and the file itself was not moved to the trash. As a result, git keeps tracking a now unused and undesired file. Furthermore, the developer who was modifying the file could also re-add it to the project.
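Such leftovers can be spotted with a rough heuristic: list the Swift files on disk whose names never appear in project.pbxproj. The following Ruby sketch shows a hypothetical helper, `unreferenced_swift_files`, that is not part of any tool mentioned in this book. Treat its results as candidates to review rather than proof, because a name may also appear in the pbxproj for unrelated reasons.

```ruby
# Heuristic: lists .swift files under source_dir whose file name does not occur
# as a whole name anywhere in project.pbxproj. Review the results manually.
def unreferenced_swift_files(source_dir, pbxproj_path)
  pbxproj = File.read(pbxproj_path)
  Dir.glob(File.join(source_dir, "**", "*.swift")).sort.reject do |path|
    name = Regexp.escape(File.basename(path))
    # The lookarounds stop "B.swift" from matching inside "XB.swift".
    pbxproj.match?(/(?<!\w)#{name}(?!\w)/)
  end
end

# Hypothetical paths for the Cosmonaut app.
pbxproj_path = "Cosmonaut.xcodeproj/project.pbxproj"
puts unreferenced_swift_files("Cosmonaut", pbxproj_path) if File.exist?(pbxproj_path)
```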

Fortunately, in the Apple ecosystem, we can use xcodegen, a program that generates the pbxproj file for us based on a well-arranged YAML file. To use it, we first have to install it via brew install xcodegen or by one of the other methods described on its homepage.

As an example, let us have a look at the Cosmonaut app's project.yml.

app/Cosmonaut/project.yml

```yaml
# Import of the main build_settings file
include:
  - ../../fastlane/build_settings.yml

# Definition of the project
name: Cosmonaut
settings:
  groups:
    - BuildSettings

# Definition of the targets that exist within the project
targets:
  # The main application
  Cosmonaut:
    type: application
    platform: iOS
    sources: Cosmonaut
    dependencies:
      # Domains
      - framework: ISSCosmonaut.framework
        implicit: true
      - framework: ISSSpacesuit.framework
        implicit: true
      - framework: ISSScaffold.framework
        implicit: true
      # Services
      - framework: ISSSpacesuitService.framework
        implicit: true
      - framework: ISSCosmonautService.framework
        implicit: true
      # Core
      - framework: ISSNetwork.framework
        implicit: true
      - framework: ISSRadio.framework
        implicit: true
      - framework: ISSPersistence.framework
        implicit: true
      - framework: ISSUIComponents.framework
        implicit: true

  # Tests for the main application
  CosmonautTests:
    type: bundle.unit-test
    platform: iOS
    sources: CosmonautTests
    dependencies:
      - target: Cosmonaut
    settings:
      TEST_HOST: $(BUILT_PRODUCTS_DIR)/Cosmonaut.app/Cosmonaut

  # UITests for the main application
  CosmonautUITests:
    type: bundle.ui-testing
    platform: iOS
    sources: CosmonautUITests
    dependencies:
      - target: Cosmonaut
```

Even though the YAML file speaks for itself, let me explain some of it.

First of all, let us look at the include at the very beginning.

```yaml
# Import of the main build_settings file
include:
  - ../../fastlane/build_settings.yml
```

Before XcodeGen starts generating the pbxproj project, it processes and includes other YAML files if the include keyword is found. In the case of the application framework, this is extremely helpful because the build settings for every project can be described in just one YAML file.

Imagine a scenario where the iOS deployment target must be bumped up for the app. Since the app also links many frameworks that are compiled before it, their deployment target needs to be bumped up as well. Without XcodeGen, each project would have to be modified to get the new deployment target. The same applies when trying out build settings: instead of modifying every project, a single change in the one file that is included by all the others does the trick.
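To see why one shared file is enough, the following Ruby sketch models the two mechanisms involved in a simplified way. First, an included file is merged into the project spec. Second, the setting groups listed under settings.groups contribute their base build settings. The helpers `deep_merge` and `effective_base_settings` are hypothetical and only approximate XcodeGen, whose actual merge rules (for example for arrays or per-configuration settings) may differ.

```ruby
require "yaml"

# Simplified model of `include`: nested hashes are merged recursively and the
# including spec wins on conflicts (XcodeGen's exact rules may differ).
def deep_merge(base, override)
  base.merge(override) do |_key, base_value, override_value|
    if base_value.is_a?(Hash) && override_value.is_a?(Hash)
      deep_merge(base_value, override_value)
    else
      override_value
    end
  end
end

# Merges the shared spec into the project spec, then collects the `base`
# settings of every setting group listed under settings.groups.
def effective_base_settings(shared_yaml, project_yaml)
  spec = deep_merge(YAML.safe_load(shared_yaml), YAML.safe_load(project_yaml))
  group_names = spec.dig("settings", "groups") || []
  group_names.reduce({}) do |settings, name|
    settings.merge(spec.dig("settingGroups", name, "base") || {})
  end
end

# Shared file: the deployment target is defined exactly once.
shared = <<~YAML
  settingGroups:
    BuildSettings:
      base:
        IPHONEOS_DEPLOYMENT_TARGET: "13.0"
        TARGETED_DEVICE_FAMILY: 1
YAML

%w[Cosmonaut Spacesuit].each do |name|
  project = "name: #{name}\nsettings:\n  groups:\n    - BuildSettings\n"
  settings = effective_base_settings(shared, project)
  puts "#{name}: #{settings["IPHONEOS_DEPLOYMENT_TARGET"]}"
end
# prints "Cosmonaut: 13.0" and "Spacesuit: 13.0"
```

Changing the value once in the shared YAML changes it for every project that refers to the BuildSettings group.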

A simplified build settings YAML file could look like this:

fastlane/build_settings.yml

```yaml
options:
  bundleIdPrefix: com.iss
  developmentLanguage: en
settingGroups:
  BuildSettings:
    base:
      # Architectures
      SDKROOT: iphoneos
      # Build Options
      ALWAYS_EMBED_SWIFT_STANDARD_LIBRARIES: $(inherited)
      DEBUG_INFORMATION_FORMAT: dwarf-with-dsym
      ENABLE_BITCODE: false
      # Deployment
      IPHONEOS_DEPLOYMENT_TARGET: 13.0
      TARGETED_DEVICE_FAMILY: 1
      # ...
```

It is worth mentioning that the keys under BuildSettings match the names of the Xcode build settings, which can be seen in the inspector side panel. As you can see, the BuildSettings group is then referenced inside the project.yml file under settings, right after the project name.

```yaml
name: Cosmonaut
settings:
  groups:
    - BuildSettings
```

The next key is targets. In the case of the Cosmonaut application, we are defining three targets: one for the app itself, one for unit tests and one for UI tests. Each key sets the name of a target, which is then described by type, platform, dependencies and other parameters that XcodeGen supports.

Next, let us have a look at the dependencies:

```yaml
dependencies:
  # Domains
  - framework: ISSCosmonaut.framework
    implicit: true
  - framework: ISSSpacesuit.framework
    implicit: true
  - framework: ISSScaffold.framework
    implicit: true
  # ...
```

The dependencies section links the specified frameworks to the app. In the snippet above, you can see which dependencies the app uses. The implicit keyword means that the framework is not pre-compiled and has to be built before it can be found. Consequently, the framework needs to be part of the workspace for the build system to work. Another parameter that can be stated there is embed: {true|false}. This parameter sets whether the framework is embedded in the app, that is, copied into the target's product. By default, XcodeGen uses embed: true for applications, as they have to copy the compiled frameworks into the target in order for the app to launch successfully, and embed: false for frameworks. Since a framework is not a standalone executable and must be part of some application, the application is expected to copy it.
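The embedding default can be expressed as a small rule. The following Ruby sketch is a hypothetical helper, `embedded_frameworks`, that reads a simplified project.yml and lists the frameworks a target would embed. An explicit embed value wins; otherwise application targets embed and other targets do not, as described above.

```ruby
require "yaml"

# Returns the framework dependencies a target would embed: an explicit
# `embed` value wins, otherwise only application targets embed.
def embedded_frameworks(project_yaml, target_name)
  target = YAML.safe_load(project_yaml).fetch("targets").fetch(target_name)
  default_embed = target["type"] == "application"

  (target["dependencies"] || []).filter_map do |dependency|
    next unless dependency.key?("framework")

    embed = dependency.key?("embed") ? dependency["embed"] : default_embed
    dependency["framework"] if embed
  end
end

app_spec = <<~YAML
  targets:
    Cosmonaut:
      type: application
      dependencies:
        - framework: ISSCosmonaut.framework
          implicit: true
        - framework: ISSNetwork.framework
          implicit: true
YAML

framework_spec = <<~YAML
  targets:
    ISSSpacesuit:
      type: framework
      dependencies:
        - framework: ISSNetwork.framework
          implicit: true
YAML

p embedded_frameworks(app_spec, "Cosmonaut")          # => ["ISSCosmonaut.framework", "ISSNetwork.framework"]
p embedded_frameworks(framework_spec, "ISSSpacesuit") # => []
```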

Full documentation of XcodeGen can be found on its GitHub page:

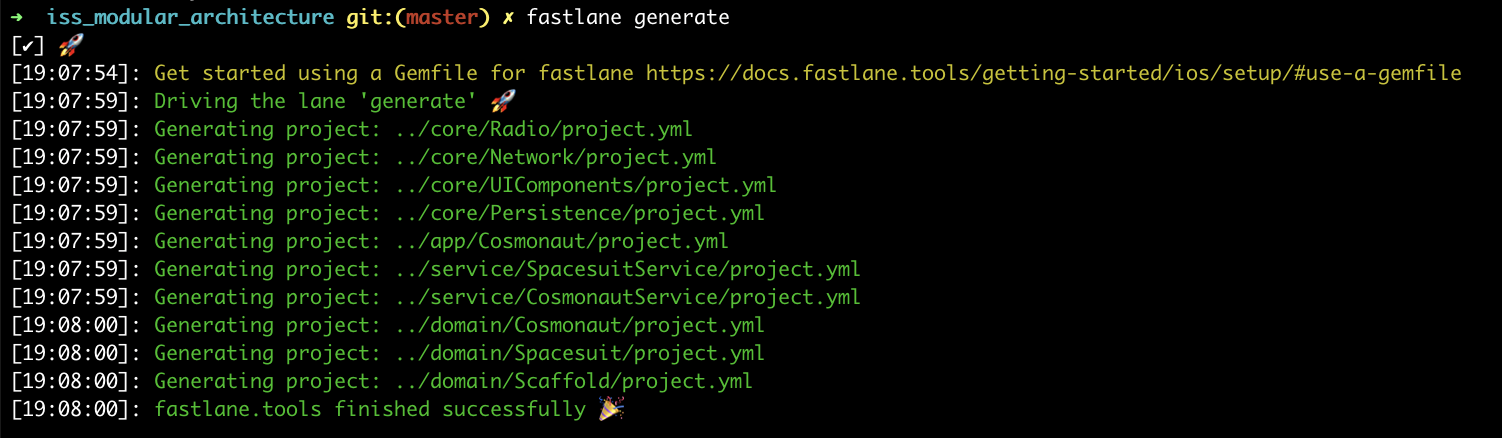

Finally, let's generate the projects and build the app with all its frameworks. For that, a simple lane in Fastlane was created.

```ruby
lane :generate do
  # Find all project specs (Fastfile code runs inside the fastlane directory)
  Dir["../**/project.yml"].each do |project_path|
    # Skip the template files stored in the fastlane directory
    next if project_path.include?("fastlane")

    UI.success("Generating project: #{project_path}")
    # Unlike backticks, fastlane's sh raises an error when xcodegen fails
    sh("xcodegen -s #{project_path}")
  end
end
```

Simply executing the fastlane generate command in the root directory of the application framework generates all projects, after which we can open the workspace and press run. The output of the command should look as follows:

Looking at the ISS architecture, two very important patterns are being followed.

First of all, no framework is allowed to link modules on the same layer. This rule prevents cross-linking cycles between frameworks. For example, if the Network module linked the Radio module and the Radio module linked the Network module, we would be in serious trouble. Surprisingly, Xcode does not fail to build every time in such a setup. However, it will have a really hard time compiling and linking, until one day it starts failing.

Second, each layer can link frameworks only from its sublayers, that is, the layers beneath it. This enforces the vertical linking pattern, so cross-linking dependencies cannot occur on the vertical level either.
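Both rules can be checked automatically, for example on CI. The following Ruby sketch assumes that every module is assigned to one of four layers (app, domain, service, core) and that a module may link modules from any lower layer, as the Cosmonaut app does with the core frameworks in its project.yml. The module-to-layer mapping and the `layer_violations` helper are hypothetical; in practice, the links could be read from the dependencies of each project.yml.

```ruby
# Hypothetical layer ranks: a module may only link modules of a lower rank.
LAYER_RANKS = { "app" => 3, "domain" => 2, "service" => 1, "core" => 0 }.freeze

# modules: module name => layer name; links: module name => modules it links.
# Returns a list of rule violations; an empty list means the graph is valid.
def layer_violations(modules, links)
  links.flat_map do |from, targets|
    targets.filter_map do |to|
      from_rank = LAYER_RANKS.fetch(modules.fetch(from))
      to_rank = LAYER_RANKS.fetch(modules.fetch(to))
      if from_rank == to_rank
        "#{from} -> #{to}: same layer (#{modules[from]})"
      elsif to_rank > from_rank
        "#{from} -> #{to}: links upwards (#{modules[from]} -> #{modules[to]})"
      end
    end
  end
end

modules = {
  "Cosmonaut" => "app",
  "ISSSpacesuit" => "domain",
  "ISSSpacesuitService" => "service",
  "ISSNetwork" => "core",
  "ISSRadio" => "core"
}
links = {
  "Cosmonaut" => ["ISSSpacesuit", "ISSNetwork"],
  "ISSSpacesuit" => ["ISSSpacesuitService"],
  "ISSSpacesuitService" => ["ISSNetwork"],
  "ISSRadio" => ["ISSNetwork"] # breaks the first rule
}

puts layer_violations(modules, links) # prints "ISSRadio -> ISSNetwork: same layer (core)"
```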

Let us have a look at an example of a cross-linking dependency. Say the build system starts compiling the Network module, which links the Radio module. When it reaches the point where the Radio module needs to be linked, it jumps to compiling the Radio module without finishing the compilation of the Network module. The Radio module now requires the Network module to continue compiling. However, the Network module has not finished compiling yet, so its swiftmodule and other files have not been created. The compiler continues until a file references some part (e.g. a class) of the other module while that module references the caller. That is where the compiler stops.
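A cycle like the one between Network and Radio can be detected before the compiler gets stuck. The following Ruby sketch runs a depth-first search over a hypothetical map of modules to the modules they link and returns the first cycle it finds, or nil when the graph is acyclic. The helper names are illustrative.

```ruby
# Returns the first dependency cycle found, e.g. ["ISSNetwork", "ISSRadio", "ISSNetwork"],
# or nil if the module graph is acyclic. links: module name => modules it links.
def find_cycle(links)
  state = Hash.new(:unvisited) # :unvisited, :visiting (on current path) or :done
  links.each_key do |node|
    next unless state[node] == :unvisited

    cycle = visit_for_cycle(node, links, state, [])
    return cycle if cycle
  end
  nil
end

# Depth-first search; reaching a module that is still :visiting closes a cycle.
def visit_for_cycle(node, links, state, path)
  state[node] = :visiting
  path.push(node)
  links.fetch(node, []).each do |dependency|
    return path[path.index(dependency)..] + [dependency] if state[dependency] == :visiting
    next unless state[dependency] == :unvisited

    cycle = visit_for_cycle(dependency, links, state, path)
    return cycle if cycle
  end
  path.pop
  state[node] = :done
  nil
end

p find_cycle({ "ISSNetwork" => ["ISSRadio"], "ISSRadio" => ["ISSNetwork"] })
# => ["ISSNetwork", "ISSRadio", "ISSNetwork"]
p find_cycle({ "Cosmonaut" => ["ISSSpacesuit", "ISSNetwork"], "ISSSpacesuit" => ["ISSNetwork"] })
# => nil
```

Modules marked :done are never revisited, so shared dependencies (such as ISSNetwork above) are not mistaken for cycles.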

Needless to say, each layer is defined to contain stand-alone modules that only need some sub-dependencies. In theory, this is all nice and makes sense. In practice, however, it can happen that one domain requires something from another domain (for example, the Cosmonaut domain requires something from the Spacesuit domain). It can be some domain-specific logic, views or even a whole flow of screens. In that case, there are several options for tackling the issue. One option is to create a new module on the service layer and move the source files shared across multiple domains there. Another option is to shift those files from the domain layer to the service layer. A third option is to use abstraction and achieve the same result at the code level rather than at the module level. The ideal solution depends entirely on the use case. Yet another possibility, introduced in the next section, is to move the interfaces into a separate framework.

A simple example could be that some flow is represented by a protocol

that has a start function on it. That could for example be a

coordinator pattern that would be defined for the whole framework and

all modules would be following it. That protocol must then be defined in

one of the lower layers frameworks in this case since it is related to a

flow of view controllers, the UIComponents could be a good place for it.

Due to that, in the framework, we can rely on all domains understanding

it. Thereafter, the Cosmonaut app could instantiate the coordinator from

the Spacesuit domain and pass it down or assign it as a child

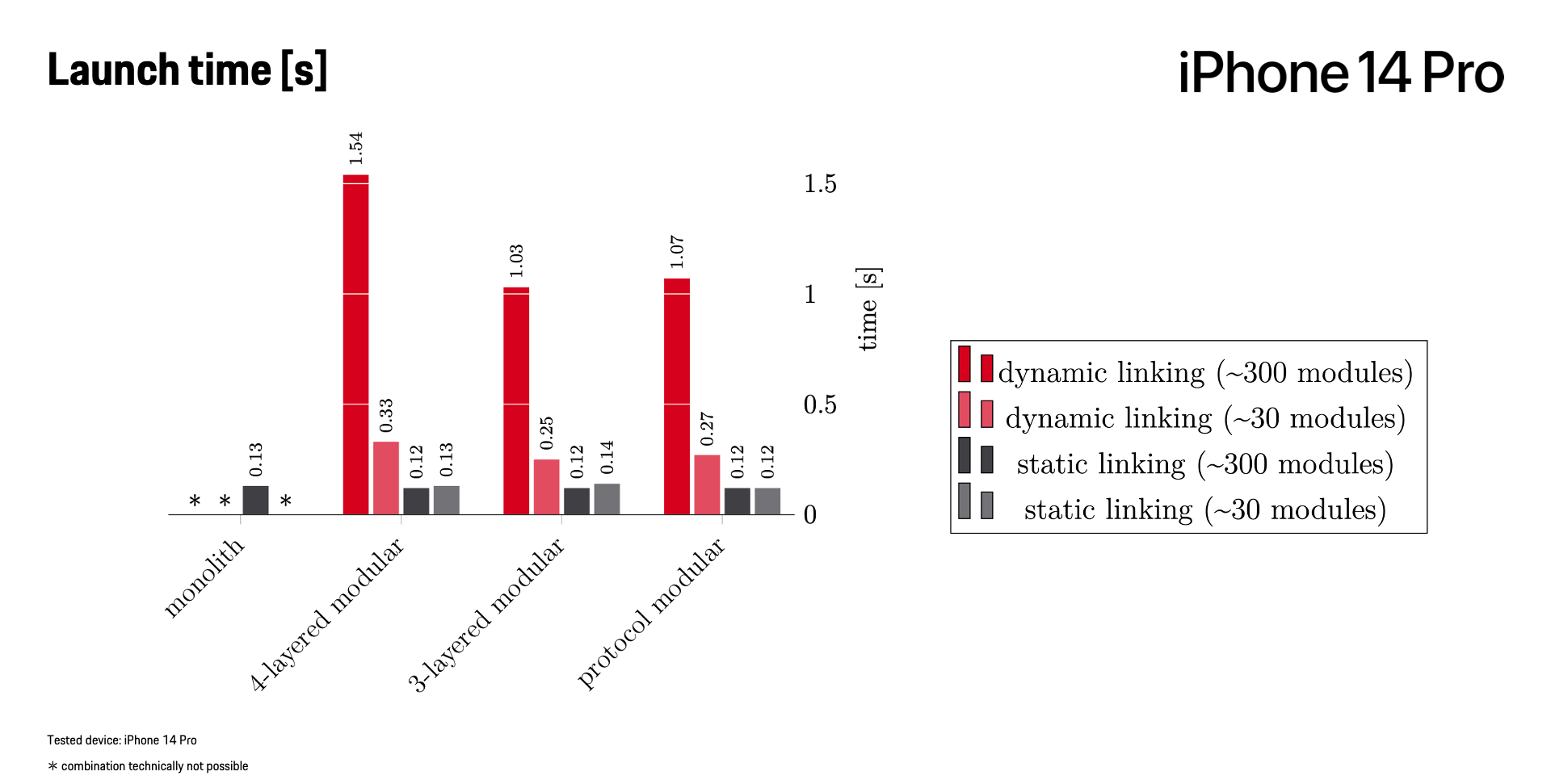

coordinator to the Cosmonaut domain.
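The wiring described above can also be sketched in a language-neutral way. To stay with the single language used for the scripts in this chapter, the sketch below is written in Ruby, with a mixin standing in for the Swift protocol that would live in UIComponents. The class and method names are hypothetical.

```ruby
# Contract that would live in a low-layer module such as UIComponents.
module Coordinator
  def start
    raise NotImplementedError, "#{self.class} must implement start"
  end

  def child_coordinators
    @child_coordinators ||= []
  end

  # Keeps a reference to the child flow and starts it.
  def add_child(coordinator)
    child_coordinators << coordinator
    coordinator.start
  end
end

# Spacesuit domain: depends only on the Coordinator contract.
class SpacesuitCoordinator
  include Coordinator

  def start
    puts "Showing spacesuit flow"
  end
end

# Cosmonaut domain: receives another domain's flow without linking that domain.
class CosmonautCoordinator
  include Coordinator

  def initialize(spacesuit_flow)
    @spacesuit_flow = spacesuit_flow
  end

  def start
    puts "Showing cosmonaut flow"
  end

  def show_spacesuit
    add_child(@spacesuit_flow)
  end
end

# The app is the only place that knows both domains and wires them together.
cosmonaut = CosmonautCoordinator.new(SpacesuitCoordinator.new)
cosmonaut.start          # prints "Showing cosmonaut flow"
cosmonaut.show_spacesuit # prints "Showing spacesuit flow"
```

Because the Cosmonaut domain only relies on the shared contract, the same-layer rule stays intact. Only the app, which sits above both domains, links them together.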