In ICLR 2020. Link: https://openreview.net/forum?id=rJx1Na4Fwr

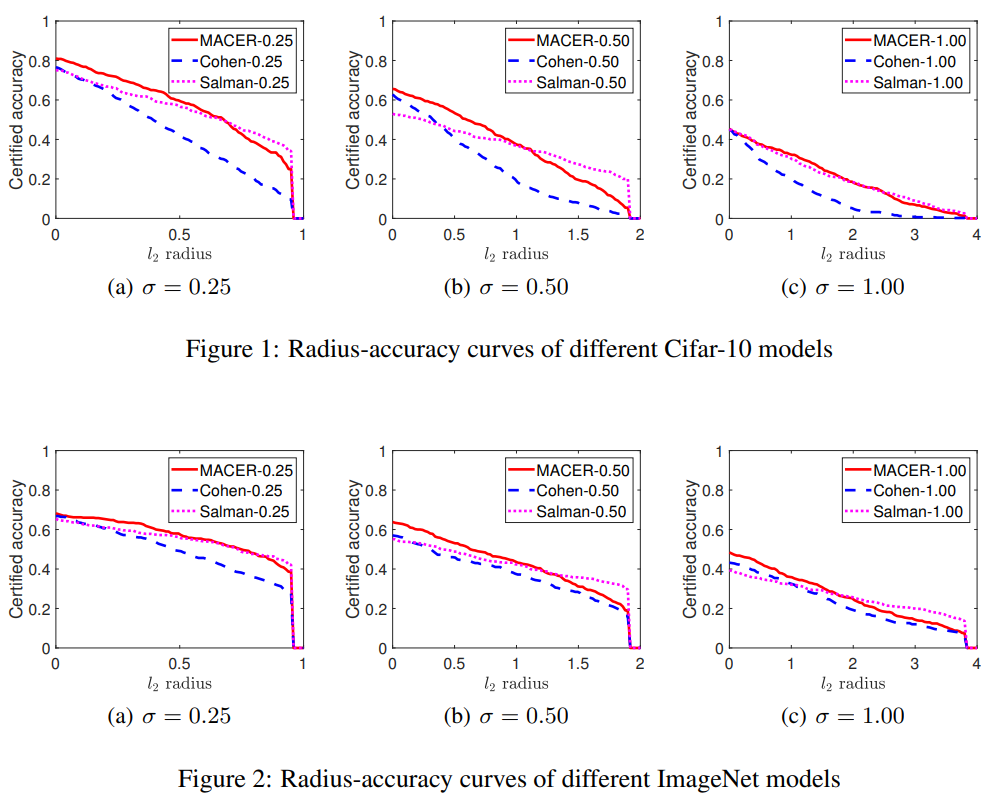

Our proposed algorithm MACER outperforms all existing provable l2-defenses in both average certified radius and training speed on Cifar-10, ImageNet, MNIST and SVHN. In addition, our method does not depend on any specific attack strategy, which makes it substantially different from adversarial training. It also scales to modern deep neural networks on a wide range of real-world datasets.

MACER is an attack-free and scalable robust training algorithm that trains provably robust models by MAximizing the CErtified Radius. The certified radius is provided by a robustness certification method. In this work, we obtain the certified radius using randomized smoothing.
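As a rough illustration of the quantity being maximized: under randomized smoothing with Gaussian noise of standard deviation σ, the l2 certified radius of a correctly classified point is commonly written as R = σ/2 · (Φ⁻¹(p_A) − Φ⁻¹(p_B)), where p_A and p_B are the two largest class probabilities of the smoothed classifier and Φ⁻¹ is the inverse standard normal CDF. The sketch below only evaluates that formula. The function name and the example probabilities are hypothetical and are not taken from macer.py.

```python
from statistics import NormalDist


def certified_radius(sigma: float, p_a: float, p_b: float) -> float:
    """Evaluate sigma / 2 * (Phi^-1(p_a) - Phi^-1(p_b)).

    Hypothetical helper for illustration only. p_a is the probability of
    the top class and p_b that of the runner-up under Gaussian noise.
    The result is 0.0 when the two probabilities are equal.
    """
    if not (0.0 < p_b <= p_a < 1.0):
        # The inverse normal CDF is only defined on the open interval (0, 1).
        raise ValueError("need 0 < p_b <= p_a < 1")
    phi_inv = NormalDist().inv_cdf
    return 0.5 * sigma * (phi_inv(p_a) - phi_inv(p_b))


# Made-up probabilities: top class 0.9, runner-up 0.1, noise level 0.25.
radius = certified_radius(0.25, 0.9, 0.1)
print(f"certified radius: {radius:.3f}")
```

A larger gap between p_A and p_B, or a larger σ, gives a larger radius, which is why the training objective can push the radius up by widening that gap.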

Because MACER is attack-free, it trains models that are provably robust, i.e. they are robust against any possible attack in the certified region. Additionally, by avoiding time-consuming attack iterations, MACER runs much faster than adversarial training. We conduct extensive experiments to demonstrate that MACER spends less training time than state-of-the-art adversarial training, and the learned models achieve larger average certified radius.

| File | Description |
|---|---|
| main.py | Main train and test file |
| macer.py | MACER algorithm |
| model.py | Network architectures |
| rs/*.py | Randomized smoothing |
| visualize/plotcurves.m | Result visualization |

- Clone this repository: `git clone https://github.com/MacerAuthors/macer.git`
- Make sure you meet the package requirements by running `pip install -r requirements.txt`
- Make sure you add the root folder to the PYTHONPATH (on Linux): `export PYTHONPATH=/path/to/macer`

Here we will show how to train a provably l2-robust Cifar-10 model. We will use σ=0.25 as an example.

    python main.py --task train --dataset cifar10 --root /root/to/cifar10 --ckptdir /folder/for/checkpoints --matdir /folder/for/matfiles --sigma 0.25

Options `sigma`, `gauss_num`, `lbd`, `gamma` and `beta` set the parameters σ, k, λ, γ and β respectively (see Algorithm 1 in our paper for the meaning of these parameters). Option `ckptdir` specifies a folder for saving checkpoints. Option `matdir` specifies a folder for saving matfiles. Matfiles are .mat files that record detailed certification results, which can be further processed with SciPy or MATLAB. The figures in our paper are all drawn with MATLAB using those matfiles.
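If you want to train at several noise levels, the command above can be generated per σ. The following is a minimal sketch that only uses the flags shown in the training command. The helper name and the per-σ subfolder layout are assumptions made for illustration, and since it is not stated here whether main.py creates missing folders, you may need to create them beforehand.

```python
import shlex


def train_command(sigma: float, root: str, ckptdir: str, matdir: str) -> str:
    """Build the training command line for one value of sigma.

    Hypothetical helper: it only assembles the flags shown in the
    training command of this README and does not run anything.
    """
    args = [
        "python", "main.py",
        "--task", "train",
        "--dataset", "cifar10",
        "--root", root,
        "--ckptdir", ckptdir,
        "--matdir", matdir,
        "--sigma", str(sigma),
    ]
    # shlex.join quotes arguments safely for a POSIX shell (Python 3.8+).
    return shlex.join(args)


# Assumed layout: one checkpoint folder and one matfile folder per sigma.
commands = [
    train_command(
        sigma,
        "/root/to/cifar10",
        f"/folder/for/checkpoints/sigma_{sigma}",
        f"/folder/for/matfiles/sigma_{sigma}",
    )
    for sigma in (0.25, 0.5, 1.0)
]

for command in commands:
    print(command)
```

Keeping separate folders per σ is only a convenience so that checkpoints and matfiles from different runs do not overwrite each other.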

Certification is automatically done during training, but you can still do it manually using the following command:

    python main.py --task test --dataset cifar10 --root /root/to/cifar10 --resume_ckpt /path/to/checkpoint.pth --matdir /folder/for/matfiles --sigma 0.25

You can use the --resume_ckpt /path/to/checkpoint.pth option in main.py to load a checkpoint file during training or certification.

After you get the matfiles, use the MATLAB file visualize/plotcurves.m to visualize the results.

Our checkpoints can be downloaded here. For training settings please refer to our paper. Because of randomness, you won't get exactly the same checkpoints if you train on your own, but the performance should be close if you follow our settings.

In addition to comparing the approximated certified test accuracy, we also compare the average certified radius (ACR). Performance on Cifar-10:

| σ | Model | 0.00 | 0.25 | 0.50 | 0.75 | 1.00 | 1.25 | 1.50 | 1.75 | 2.00 | 2.25 | ACR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.25 | Cohen-0.25 | 0.75 | 0.60 | 0.43 | 0.26 | 0 | 0 | 0 | 0 | 0 | 0 | 0.416 |
| 0.25 | Salman-0.25 | 0.74 | 0.67 | 0.57 | 0.47 | 0 | 0 | 0 | 0 | 0 | 0 | 0.538 |
| 0.25 | MACER-0.25 | 0.81 | 0.71 | 0.59 | 0.43 | 0 | 0 | 0 | 0 | 0 | 0 | 0.556 |
| 0.50 | Cohen-0.50 | 0.65 | 0.54 | 0.41 | 0.32 | 0.23 | 0.15 | 0.09 | 0.04 | 0 | 0 | 0.491 |
| 0.50 | Salman-0.50 | 0.50 | 0.46 | 0.44 | 0.40 | 0.38 | 0.33 | 0.29 | 0.23 | 0 | 0 | 0.709 |
| 0.50 | MACER-0.50 | 0.66 | 0.60 | 0.53 | 0.46 | 0.38 | 0.29 | 0.19 | 0.12 | 0 | 0 | 0.726 |
| 1.00 | Cohen-1.00 | 0.47 | 0.39 | 0.34 | 0.28 | 0.21 | 0.17 | 0.14 | 0.08 | 0.05 | 0.03 | 0.458 |
| 1.00 | Salman-1.00 | 0.45 | 0.41 | 0.38 | 0.35 | 0.32 | 0.28 | 0.25 | 0.22 | 0.19 | 0.17 | 0.787 |
| 1.00 | MACER-1.00 | 0.45 | 0.41 | 0.38 | 0.35 | 0.32 | 0.29 | 0.25 | 0.22 | 0.18 | 0.16 | 0.792 |
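To make the two metrics in the table concrete: the certified accuracy at radius r is the fraction of test points that are classified correctly with a certified radius of at least r, and the ACR is the mean certified radius over the test set, where misclassified points count as radius 0. The sketch below computes both from plain Python lists. The function names, the input layout and the toy numbers are assumptions for illustration and do not reflect the actual structure of the matfiles.

```python
from typing import Sequence


def average_certified_radius(radii: Sequence[float],
                             correct: Sequence[bool]) -> float:
    """Mean certified radius; misclassified points contribute 0."""
    return sum(r for r, ok in zip(radii, correct) if ok) / len(radii)


def certified_accuracy(radii: Sequence[float],
                       correct: Sequence[bool],
                       r: float) -> float:
    """Fraction of points that are correct with certified radius >= r."""
    hits = sum(1 for rad, ok in zip(radii, correct) if ok and rad >= r)
    return hits / len(radii)


# Toy data: four test points, the first one is misclassified,
# so its reported radius is ignored.
radii = [0.5, 0.25, 0.75, 1.0]
correct = [False, True, True, True]

print("ACR:", average_certified_radius(radii, correct))
for r in (0.0, 0.5, 1.0):
    print(f"certified accuracy at r={r}:", certified_accuracy(radii, correct, r))
```

Read this way, each numeric column in the table is one value of r, and the ACR column summarizes the whole curve in a single number.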

For detailed ImageNet results, check our paper.

For a fair comparison, we use the original code and checkpoints provided by the authors and run all algorithms on the same machine. For Cifar-10 we use 1 NVIDIA P100 GPU and for ImageNet we use 4 NVIDIA P100 GPUs. (Cohen's repo; Salman's repo)

MACER runs much faster than adversarial training. For example, on Cifar-10 (σ=0.25), MACER uses 61.60 hours to achieve ACR=0.556, while SmoothAdv reaches ACR=0.538 but uses 82.92 hours.

| Dataset | Model | sec/epoch | Epochs | Total hrs | ACR |
|---|---|---|---|---|---|
| Cifar-10 | Cohen-0.25 | 31.4 | 150 | 1.31 | 0.416 |
| Cifar-10 | Salman-0.25 | 1990.1 | 150 | 82.92 | 0.538 |
| Cifar-10 | MACER-0.25 (ours) | 504.0 | 440 | 61.60 | 0.556 |
| ImageNet | Cohen-0.25 | 2154.5 | 90 | 53.86 | 0.470 |
| ImageNet | Salman-0.25 | 7723.8 | 90 | 193.10 | 0.528 |
| ImageNet | MACER-0.25 (ours) | 3537.1 | 120 | 117.90 | 0.544 |
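The "Total hrs" column follows from the other two timing columns as sec/epoch × epochs / 3600. The short sketch below recomputes it from the numbers in the table. The helper name and the dictionary layout are made up for illustration.

```python
def total_hours(sec_per_epoch: float, epochs: int) -> float:
    """Total training time in hours from per-epoch seconds and epoch count."""
    return sec_per_epoch * epochs / 3600.0


# (sec/epoch, epochs) copied from the training-time table.
runs = {
    ("Cifar-10", "Cohen-0.25"): (31.4, 150),
    ("Cifar-10", "Salman-0.25"): (1990.1, 150),
    ("Cifar-10", "MACER-0.25"): (504.0, 440),
    ("ImageNet", "Cohen-0.25"): (2154.5, 90),
    ("ImageNet", "Salman-0.25"): (7723.8, 90),
    ("ImageNet", "MACER-0.25"): (3537.1, 120),
}

hours = {key: total_hours(sec, epochs) for key, (sec, epochs) in runs.items()}

for (dataset, model), value in hours.items():
    print(f"{dataset:9s} {model:12s} {value:7.2f} h")
```

Note that MACER runs more epochs than the baselines in this table, so its advantage over SmoothAdv in total time comes from the much lower cost per epoch.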

We carefully examine the effect of each hyperparameter. Ablation study results on Cifar-10:

Please check our paper for detailed discussions.

Please use the following BibTeX entry to cite our paper:

    @inproceedings{
    zhai2020macer,
    title={MACER: Attack-free and Scalable Robust Training via Maximizing Certified Radius},
    author={Runtian Zhai and Chen Dan and Di He and Huan Zhang and Boqing Gong and Pradeep Ravikumar and Cho-Jui Hsieh and Liwei Wang},
    booktitle={International Conference on Learning Representations},
    year={2020},
    url={https://openreview.net/forum?id=rJx1Na4Fwr}
    }