- ✨ Features

- 🚀 Quick Start

- 🔗 Integrations

- 🌐 Getting Started

- 🏠 Self Hosting

- 📐 Architecture

- 🤝 Contributing

- 🔒 Security

- ❓ FAQ

- 👥 Contributors

- 📜 License

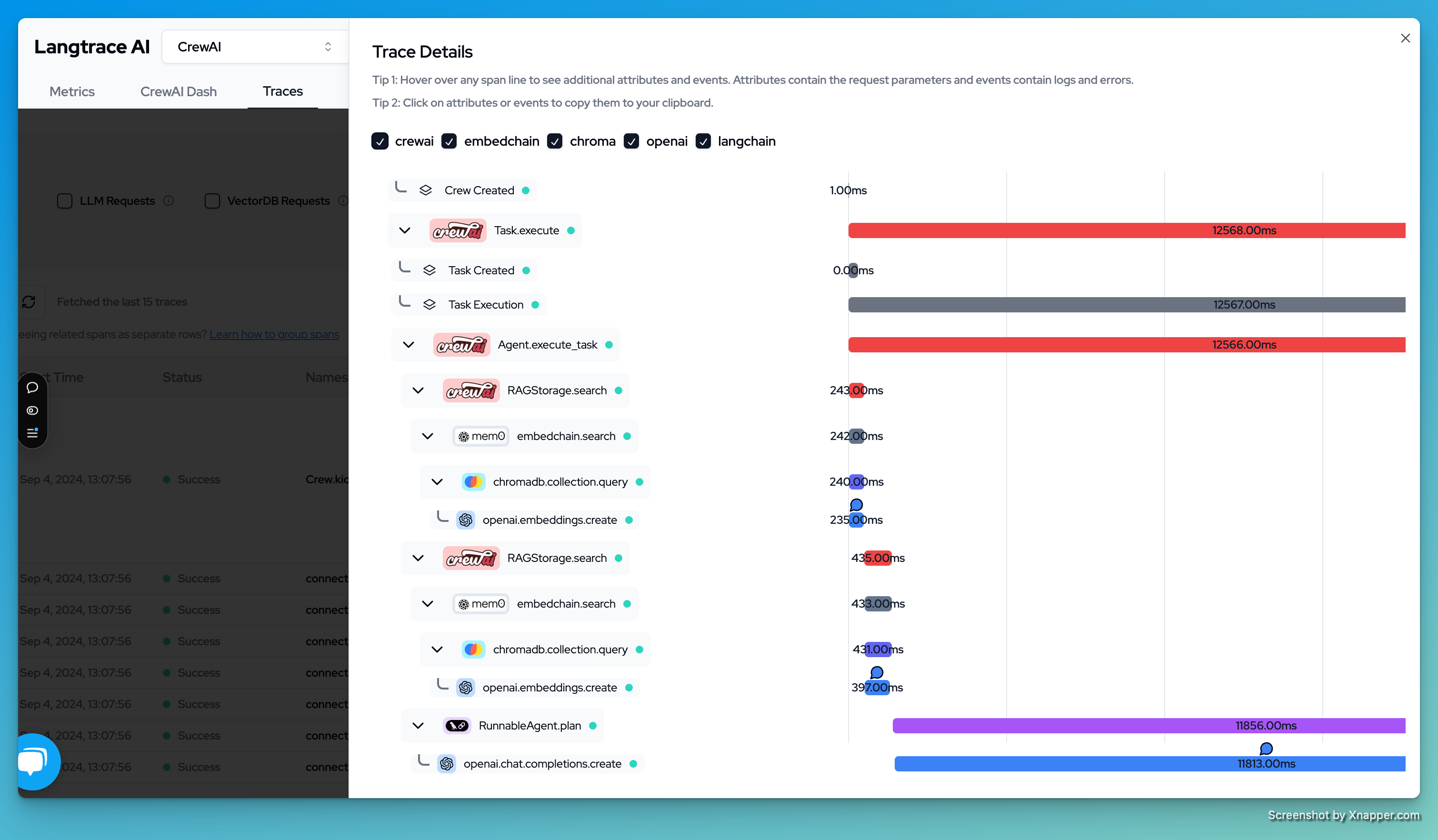

Langtrace is open source observability software that lets you capture, debug, and analyze traces and metrics from all your applications that use LLM APIs, vector databases, and LLM-based frameworks.

- 📊 OpenTelemetry Support: Built on OTEL standards for comprehensive tracing

- 🔄 Real-time Monitoring: Track LLM API calls, vector operations, and framework usage

- 🎯 Performance Insights: Analyze latency, costs, and usage patterns

- 🔍 Debug Tools: Trace and debug your LLM application workflows

- 📈 Analytics: Get detailed metrics and visualizations

- 🏠 Self-hosting Option: Deploy on your own infrastructure

Install the SDK:

    # For TypeScript/JavaScript
    npm i @langtrase/typescript-sdk

    # For Python
    pip install langtrace-python-sdk

Initialize in your code:

    // TypeScript
    import * as Langtrace from '@langtrase/typescript-sdk'

    Langtrace.init({ api_key: '<your_api_key>' }) // Get your API key at langtrace.ai

    # Python
    from langtrace_python_sdk import langtrace

    langtrace.init(api_key='<your_api_key>') # Get your API key at langtrace.ai

For detailed setup instructions, see Getting Started.
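To keep the key out of source code, it can be read from the environment instead of being hard-coded. The sketch below assumes the key is exported as LANGTRACE_API_KEY, the variable the SDK reads when `init()` is called without arguments. The helper names `resolve_api_key` and `init_langtrace` are hypothetical and introduced here only for illustration; they are not part of the SDK.

```python
import os


def resolve_api_key(env=None):
    """Return the Langtrace API key from the environment, or raise if it is missing.

    Hypothetical helper for this example; not an SDK function.
    """
    env = os.environ if env is None else env
    key = env.get("LANGTRACE_API_KEY", "").strip()
    if not key:
        raise RuntimeError("LANGTRACE_API_KEY is not set")
    return key


def init_langtrace(env=None):
    """Initialize Langtrace with the key taken from the environment."""
    # Imported lazily so this module still loads where the SDK is not installed.
    from langtrace_python_sdk import langtrace

    langtrace.init(api_key=resolve_api_key(env))
```

Call `init_langtrace()` at the top of your entry point, before importing any LLM libraries, so a missing key fails fast with a clear message.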

The traces generated by Langtrace adhere to OpenTelemetry (OTEL) standards. We are developing semantic conventions for the traces generated by this project. You can check out the current definitions in this repository. Note: This is ongoing work; we encourage you to get involved and welcome your feedback.

To use the managed SaaS version of Langtrace, follow the steps below:

1. Sign up by going to this link.

2. Create a new project after signing up. Projects are containers for storing traces and metrics generated by your application. If you have only one application, one project is enough.

3. Generate an API key from inside the project.

4. In your application, install the Langtrace SDK and initialize it with the API key you generated in step 3.

5. The code for installing and setting up the SDK is shown below:

TypeScript:

    npm i @langtrase/typescript-sdk

    import * as Langtrace from '@langtrase/typescript-sdk' // Must precede any llm module imports

    Langtrace.init({ api_key: '<your_api_key>' })

OR

    import * as Langtrace from "@langtrase/typescript-sdk"; // Must precede any llm module imports

    Langtrace.init(); // LANGTRACE_API_KEY as an ENVIRONMENT variable

Python:

    pip install langtrace-python-sdk

    from langtrace_python_sdk import langtrace

    langtrace.init(api_key='<your_api_key>')

OR

    from langtrace_python_sdk import langtrace

    langtrace.init() # LANGTRACE_API_KEY as an ENVIRONMENT variable

To run Langtrace locally, you have to run three services:

- Next.js app

- Postgres database

- Clickhouse database

Important

Check out our documentation for various deployment options and configurations.

Requirements:

- Docker

- Docker Compose

Feel free to modify the .env file to suit your needs.

    docker compose up

The application will be available at http://localhost:3000.
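The services can take a moment to come up after `docker compose up`. The following is a minimal sketch of a readiness check that polls the address above until the app responds. The function names, retry count, and delay are assumptions chosen for illustration; Langtrace itself does not ship this helper.

```python
import http.client
import time
import urllib.error
import urllib.request


def is_up(url, timeout=2.0):
    """Return True if an HTTP request to `url` gets any response from the server."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, even if with an error status.
        return True
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, or a malformed response: not ready yet.
        return False


def wait_until_ready(url="http://localhost:3000", attempts=30, delay=2.0,
                     probe=is_up, sleep=time.sleep):
    """Poll `url` until `probe` succeeds or `attempts` run out; return True on success."""
    for attempt in range(attempts):
        if probe(url):
            return True
        if attempt < attempts - 1:
            sleep(delay)
    return False
```

`probe` and `sleep` are injectable so the loop can be exercised without a running server; in normal use, `wait_until_ready()` with the defaults is enough.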

To delete the containers and volumes:

    docker compose down -v

The -v flag deletes the volumes.

Langtrace collects basic, non-sensitive usage data from self-hosted instances by default, which is sent to a central server (via PostHog).

The following telemetry data is collected by us:

- Project name and type

- Team name

This data helps us to:

- Understand how the platform is being used to improve key features.

- Monitor overall usage for internal analysis and reporting.

No sensitive information is gathered, and the data is not shared with third parties.

If you prefer to disable telemetry, you can do so by setting TELEMETRY_ENABLED=false in your configuration.
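A minimal sketch of applying that setting, assuming the configuration in question is the .env file used by the Docker Compose setup (the text above does not say which file, so treat this as an assumption). `set_env_value` and `disable_telemetry` are hypothetical helpers written for this example.

```python
from pathlib import Path


def set_env_value(text, key, value):
    """Return dotenv-style `text` with `key` set to `value`, replacing an existing entry."""
    lines = text.splitlines()
    replaced = False
    for i, line in enumerate(lines):
        is_comment = line.lstrip().startswith("#")
        name = line.split("=", 1)[0].strip()
        if not is_comment and "=" in line and name == key:
            lines[i] = f"{key}={value}"
            replaced = True
    if not replaced:
        # Key not present (or only present in a comment): append it.
        lines.append(f"{key}={value}")
    return "\n".join(lines) + "\n"


def disable_telemetry(env_path=".env"):
    """Write TELEMETRY_ENABLED=false into the given env file, creating it if needed."""
    path = Path(env_path)
    current = path.read_text() if path.exists() else ""
    path.write_text(set_env_value(current, "TELEMETRY_ENABLED", "false"))
```

Editing the file by hand works just as well; the function form is mainly useful in provisioning scripts. The running services will most likely need a restart to pick up the change.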

Langtrace automatically captures traces from the following vendors and frameworks:

| Provider | TypeScript SDK | Python SDK |
|---|---|---|
| OpenAI | ✅ | ✅ |
| Anthropic | ✅ | ✅ |
| Azure OpenAI | ✅ | ✅ |
| Cohere | ✅ | ✅ |
| DeepSeek | ✅ | ✅ |
| xAI | ✅ | ✅ |
| Groq | ✅ | ✅ |
| Perplexity | ✅ | ✅ |
| Gemini | ✅ | ✅ |
| AWS Bedrock | ✅ | ✅ |
| Mistral | ❌ | ✅ |

| Framework | TypeScript SDK | Python SDK |
|---|---|---|
| Langchain | ❌ | ✅ |
| LlamaIndex | ✅ | ✅ |
| Langgraph | ❌ | ✅ |
| LiteLLM | ❌ | ✅ |
| DSPy | ❌ | ✅ |
| CrewAI | ❌ | ✅ |
| Ollama | ❌ | ✅ |
| VertexAI | ✅ | ✅ |
| Vercel AI | ✅ | ❌ |
| GuardrailsAI | ❌ | ✅ |

| Database | TypeScript SDK | Python SDK |
|---|---|---|
| Pinecone | ✅ | ✅ |
| ChromaDB | ✅ | ✅ |
| QDrant | ✅ | ✅ |
| Weaviate | ✅ | ✅ |
| PGVector | ✅ | ✅ (SQLAlchemy) |
| MongoDB | ❌ | ✅ |
| Milvus | ❌ | ✅ |

- To request a feature, head over here and start a discussion.

- To report a bug, head over here and create an issue.

We welcome contributions to this project. To get started, fork this repository and start developing. To get involved, join our Slack workspace.

To report security vulnerabilities, email us at [email protected]. You can read more on security here.

- The Langtrace application (this repository) is licensed under the AGPL 3.0 License. You can read about this license here.

- The Langtrace SDKs are licensed under the Apache 2.0 License. You can read about this license here.

- karthikscale3
- dylanzuber-scale3
- darshit-s3
- rohit-kadhe
- yemiadej
- alizenhom
- obinnascale3
- Cruppelt
- Dnaynu
- jatin9823
- MayuriS24
- NishantRana07
- obinnaokafor
- heysagnik
- dabiras3

1. Can I self host and run Langtrace in my own cloud?
   Yes, you can. Follow the self-hosting setup instructions in our documentation.

2. What is the pricing for Langtrace cloud?
   Currently, we are not charging anything for Langtrace cloud. We are primarily looking for feedback so we can continue to improve the project, and we will inform our users when we decide to monetize it.

3. What is the tech stack of Langtrace?
   Langtrace uses Next.js for the frontend and APIs. It uses Postgres as a metadata store and ClickHouse for storing spans, metrics, logs, and traces.

4. Can I contribute to this project?
   Absolutely! We love developers and welcome contributions. Get involved early by joining our Discord Community.

5. What skillset is required to contribute to this project?
   Programming languages: TypeScript and Python. Framework knowledge: Next.js. Database: Postgres and Prisma ORM. Nice to have: the OpenTelemetry instrumentation framework and experience with distributed tracing.