This project implements the Deep RNN Framework from the paper Deep RNN Framework for Visual Sequential Applications (CVPR 2019).

Please follow the instructions to run the code.

- An auxiliary annotation tool that runs the vertex prediction kernel with our Deep RNN Framework (remarkably enhancing the annotation quality compared with Polygon-RNN) has been released! Try the demo!

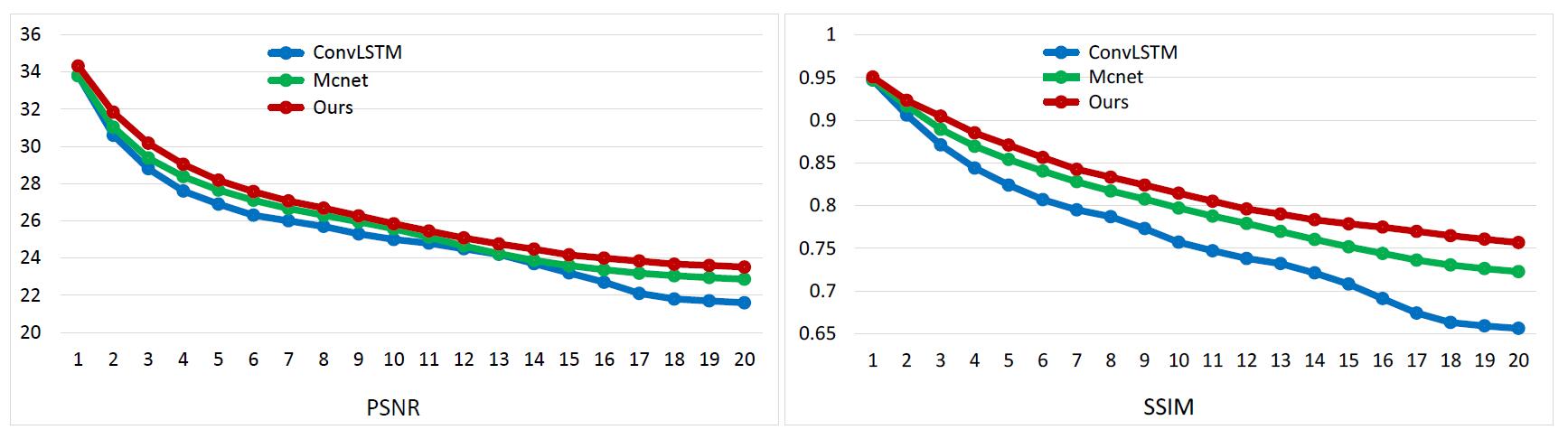

Deep RNN Framework is an RNN framework for high-dimensional sequence problems; in this repository we focus on visual tasks. The Deep RNN Framework achieves more than 11% relative improvement over shallow RNN models on Kinetics, UCF-101, and HMDB-51 for video classification. For auxiliary annotation, replacing the shallow RNN part of Polygon-RNN with our 15-layer deep CBM (alias in code: RBM) improves performance by 14.7%. For video future prediction, our Deep RNN Framework improves the state-of-the-art shallow model's performance by 2.4% on PSNR and SSIM.

Results on backbone supported models:

| Model | UCF-101 Recognition | UCF-101 Anticipation | HMDB-51 Recognition | HMDB-51 Anticipation |
|---|---|---|---|---|
| 1-layer LSTM | 71.1 | 30.6 | 36.0 | 18.8 |
| 15-layer ConvLSTM | 68.9 | 49.6 | 34.2 | 27.6 |
| 1-layer CBM | 65.3 | 28.4 | 34.3 | 16.9 |
| 15-layer CBM | 79.8 | 57.7 | 40.2 | 32.1 |

Action recognition results on standalone RNN models:

| Architecture | Kinetics | UCF-101 | HMDB-51 |
|---|---|---|---|
| Shallow LSTM with Backbone | 53.9 | 86.8 | 49.7 |
| C3D | 56.1 | 79.9 | 49.4 |
| Two-Stream | 62.8 | 93.8 | 64.3 |
| 3D-Fused | 62.3 | 91.5 | 66.5 |
| Deep CBM without Backbone | 60.2 | 91.9 | 61.7 |

- Dependencies:

- Python 2.7

- Pytorch 0.4

- torchvision

- Numpy

- Pillow

- tqdm

- Download UCF-101 and HMDB-51 and organize the image files (frames extracted from the videos) as follows:

    Dataset
    ├── train
    │   ├── action0
    │   │   ├── video0
    │   │   │   ├── frame0
    │   │   │   ├── frame1
    │   │   │   ├── ...
    │   │   ├── video1
    │   │   │   ├── frame0
    │   │   │   ├── frame1
    │   │   │   ├── ...
    │   │   ├── ...
    │   ├── action1
    │   ├── ...
    ├── test
    │   ├── action0
    │   │   ├── video0
    │   │   │   ├── frame0
    │   │   ├── ...
    │   ├── ...

- Run train.py and test.py for training and evaluation, respectively. By default the code runs action recognition; pass "--anticipation" to run action anticipation instead:

    # for action recognition
    python train.py
    python test.py
    # for action anticipation
    python train.py --anticipation
    python test.py --anticipation

- Get our pre-trained models:

- Action Recognition on UCF-101: Google Drive, Baidu Pan

- Action Anticipation on UCF-101: Google Drive, Baidu Pan

- Action Recognition on HMDB-51: Google Drive, Baidu Pan

- Action Anticipation on HMDB-51: Google Drive, Baidu Pan
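As a quick sanity check of the frame layout described above (Dataset / train or test / action / video / frames), the following is a minimal sketch that walks a dataset folder and reports video folders that contain no frames. It is not part of the repository: the function names `list_videos` and `find_empty_videos` are hypothetical, and the sketch assumes that every regular file inside a video folder is a frame image.

```python
import os


def list_videos(dataset_root, split):
    """Return (action, video_dir, frame_count) for every video folder in a split."""
    split_dir = os.path.join(dataset_root, split)
    videos = []
    for action in sorted(os.listdir(split_dir)):
        action_dir = os.path.join(split_dir, action)
        if not os.path.isdir(action_dir):
            continue  # skip stray files next to the action folders
        for video in sorted(os.listdir(action_dir)):
            video_dir = os.path.join(action_dir, video)
            if not os.path.isdir(video_dir):
                continue
            # Assumption: every regular file in a video folder is one frame.
            frames = [name for name in os.listdir(video_dir)
                      if os.path.isfile(os.path.join(video_dir, name))]
            videos.append((action, video_dir, len(frames)))
    return videos


def find_empty_videos(dataset_root, splits=("train", "test")):
    """Return the video folders that contain no frame files."""
    empty = []
    for split in splits:
        for _action, video_dir, frame_count in list_videos(dataset_root, split):
            if frame_count == 0:
                empty.append(video_dir)
    return empty
```

Calling `find_empty_videos("Dataset")` before training should return an empty list if frame extraction succeeded for every video.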

- Dependencies:

- Python 2.7

- Pytorch 0.4

- torchvision

- Numpy

- Pillow

- tqdm

- Download Kinetics-400 from the official website or from the copy provided by facebookresearch/video-nonlocal-net, and organize the image files (frames extracted from the videos) in the same way as for UCF-101 and HMDB-51:

    Dataset
    ├── train_frames
    │   ├── action0
    │   │   ├── video0
    │   │   │   ├── frame0
    ├── test_frames

- Train and evaluate the model with the commands below. For this standalone model, only the action recognition task is provided:

a. Run the following command to train.

    # start from scratch
    python main.py --train
    # start from our pre-trained model
    python main.py --model_path [path_to_model] --model_name [model's name] --resume --train

b. Run the following command to test.

    python main.py --test

- Get our pre-trained models:

- Action Recognition on Kinetics: Google Drive, Baidu Pan

Results on Cityscapes dataset:

| Model | IoU |
|---|---|
| Original Polygon-RNN | 61.4 |
| Residual Polygon-RNN | 62.2 |
| Residual Polygon-RNN + attention + RL | 67.2 |
| Residual Polygon-RNN + attention + RL + EN | 70.2 |
| Polygon-RNN++ | 71.4 |

| Model | # Layers | # params of RNN | IoU |
|---|---|---|---|
| Polyg-LSTM | 2 | 0.47M | 61.4 |
| Polyg-LSTM | 5 | 2.94M | 63.0 |
| Polyg-LSTM | 10 | 7.07M | 59.3 |
| Polyg-LSTM | 15 | 15.71M | 46.7 |
| Polyg-CBM | 2 | 0.20M | 59.9 |
| Polyg-CBM | 5 | 1.13M | 63.1 |
| Polyg-CBM | 10 | 2.68M | 67.1 |
| Polyg-CBM | 15 | 5.85M | 70.4 |
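The 14.7% figure quoted in the introduction appears consistent with a relative (not absolute) IoU gain of the 15-layer Polyg-CBM over the original 2-layer Polygon-RNN. The following worked example is only a sketch of that arithmetic using the values in the tables above; the helper name `relative_improvement` is hypothetical.

```python
def relative_improvement(baseline, improved):
    """Relative gain of `improved` over `baseline`, in percent."""
    return (improved - baseline) / baseline * 100.0


# IoU values copied from the Cityscapes tables above.
polyg_lstm_2_layers = 61.4  # original Polygon-RNN (2-layer Polyg-LSTM)
polyg_cbm_15_layers = 70.4  # 15-layer Polyg-CBM

gain = relative_improvement(polyg_lstm_2_layers, polyg_cbm_15_layers)
print("relative IoU improvement: %.1f%%" % gain)  # prints 14.7%
```

The absolute gain is 9.0 IoU points; dividing by the 61.4 baseline gives the relative figure.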

- Dependencies:

- Python 2.7

- Pytorch 0.4

- torchvision

- Numpy

- Pillow

- Download the data from Cityscapes and organize the image files and annotation json files as follows:

    img
    ├── train
    │   ├── cityname1
    │   │   ├── pic.png
    │   │   ├── ...
    │   ├── cityname2
    │   │   ├── pic.png
    │   │   ├── ...
    ├── val
    │   ├── cityname
    │   │   ├── pic.png
    │   │   ├── ...
    ├── test
    │   ├── cityname
    │   │   ├── pic.png
    │   │   ├── ...
    label
    ├── train
    │   ├── cityname1
    │   │   ├── annotation.json
    │   │   ├── ...
    │   ├── cityname2
    │   │   ├── annotation.json
    │   │   ├── ...
    ├── val
    │   ├── cityname
    │   │   ├── annotation.json
    │   │   ├── ...
    ├── test
    │   ├── cityname
    │   │   ├── annotation.json
    │   │   ├── ...

Each png file and its corresponding json file should have the same name.

- Execute the following commands to create directories for the generated data and the saved models:

    mkdir -p new_img/train new_img/val new_img/test
    mkdir -p new_label/train new_label/val new_label/test
    mkdir save

- Run the following command to generate data for the train, validation, or test split (pass one of train, val, or test to --data):

python generate_data.py --data train/val/test

- Run the following command to train.

python train.py --gpu_id 0 --batch_size 1 --lr 0.0001 --pretrained False

- Run the following command to test.

python test.py --gpu_id 0 --batch_size 128 --model [model_path]

- Get our pre-trained models:

- Deep Polygon-RNN on Cityscapes: Google Drive, Baidu Pan
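Because the data preparation above expects every png image under img to have a json annotation with the same name under label, a small pairing check can catch mismatches before running generate_data.py. The following is a minimal sketch, not part of the repository: the function names `stems` and `unpaired_files` are hypothetical, and it assumes that "same name" means an identical relative path and file name apart from the extension.

```python
import os


def stems(root, extension):
    """Collect paths (relative to `root`, extension removed) of all files
    under `root` that have the given extension, e.g. 'train/cityname1/pic'."""
    found = set()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            base, ext = os.path.splitext(name)
            if ext.lower() == extension:
                rel_dir = os.path.relpath(dirpath, root)
                found.add(os.path.normpath(os.path.join(rel_dir, base)))
    return found


def unpaired_files(img_root="img", label_root="label"):
    """Return (images without a label, labels without an image).

    Assumption: an image and its annotation share the same relative path
    and base name, differing only in the .png / .json extension.
    """
    images = stems(img_root, ".png")
    labels = stems(label_root, ".json")
    return sorted(images - labels), sorted(labels - images)
```

Both returned lists should be empty when the img and label folders are consistent.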

- Quantitative results on KTH:

| Method | Metric | T1 | T2 | T3 | T4 | T5 | T6 | T7 | T8 | T9 | T10 | T11 | T12 | T13 | T14 | T15 | T16 | T17 | T18 | T19 | T20 | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ConvLSTM | PSNR | 33.8 | 30.6 | 28.8 | 27.6 | 26.9 | 26.3 | 26.0 | 25.7 | 25.3 | 25.0 | 24.8 | 24.5 | 24.2 | 23.7 | 23.2 | 22.7 | 22.1 | 21.8 | 21.7 | 21.6 | 25.3 |
| | SSIM | 0.947 | 0.906 | 0.871 | 0.844 | 0.824 | 0.807 | 0.795 | 0.787 | 0.773 | 0.757 | 0.747 | 0.738 | 0.732 | 0.721 | 0.708 | 0.691 | 0.674 | 0.663 | 0.659 | 0.656 | 0.765 |
| MCnet | PSNR | 33.8 | 31.0 | 29.4 | 28.4 | 27.6 | 27.1 | 26.7 | 26.3 | 25.9 | 25.6 | 25.1 | 24.7 | 24.2 | 23.9 | 23.6 | 23.4 | 23.2 | 23.1 | 23.0 | 22.9 | 25.9 |
| | SSIM | 0.947 | 0.917 | 0.889 | 0.869 | 0.854 | 0.840 | 0.828 | 0.817 | 0.808 | 0.797 | 0.788 | 0.799 | 0.770 | 0.760 | 0.752 | 0.744 | 0.736 | 0.730 | 0.726 | 0.723 | 0.804 |
| Ours | PSNR | 34.3 | 31.8 | 30.2 | 29.0 | 28.2 | 27.6 | 27.14 | 26.7 | 26.3 | 25.8 | 25.5 | 25.1 | 24.8 | 24.5 | 24.2 | 24.0 | 23.8 | 23.7 | 23.6 | 23.5 | 26.5 |
| | SSIM | 0.951 | 0.923 | 0.905 | 0.885 | 0.871 | 0.856 | 0.843 | 0.833 | 0.824 | 0.814 | 0.805 | 0.796 | 0.790 | 0.783 | 0.779 | 0.775 | 0.770 | 0.765 | 0.761 | 0.757 | 0.824 |

- Qualitative results on KTH

- Dependencies:

- Python 2.7

- TensorFlow 1.1.0 (pip install --ignore-installed --upgrade https://storage.googleapis.com/tensorflow/linux/gpu/tensorflow_gpu-1.1.0-cp27-none-linux_x86_64.whl)

- Packages: scipy, imageio, pyssim, joblib, Pillow, scikit-image, opencv-python (install with pip or conda)

- FFMPEG: conda install -c menpo ffmpeg=3.1.3

- Downloading KTH dataset

./data/KTH/download.sh

- Training (enable balanced multi-gpu training)

python train_kth_multigpu.py --gpu 0 1 2 3 4 5 6 7 --batch_size 8 --lr 0.0001

- Testing

python test_kth.py --gpu 0 --prefix [checkpoint_folder] --p [checkpoint_index]

- Obtain quantitative and qualitative results

The generated gifs will be located in

./results/images/KTH

The quantitative results will be located in

./results/quantitative/KTH

The quantitative results for each video are stored as dictionaries. The mean results over all test instances at every timestep can be displayed as follows:

import numpy as np

results = np.load('<results_file_name>')

print(results['psnr'].mean(axis=0))

print(results['ssim'].mean(axis=0))
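To relate the per-timestep means to the Avg column of the KTH table above, the following sketch averages the reported PSNR values over the 20 predicted timesteps. It uses only numbers copied from that table and plain Python; the helper name `mean` and the dictionary layout are illustrative and are not the format of the saved results files.

```python
# Per-timestep PSNR values (T1..T20) copied from the KTH table above.
psnr = {
    "MCnet": [33.8, 31.0, 29.4, 28.4, 27.6, 27.1, 26.7, 26.3, 25.9, 25.6,
              25.1, 24.7, 24.2, 23.9, 23.6, 23.4, 23.2, 23.1, 23.0, 22.9],
    "Ours": [34.3, 31.8, 30.2, 29.0, 28.2, 27.6, 27.14, 26.7, 26.3, 25.8,
             25.5, 25.1, 24.8, 24.5, 24.2, 24.0, 23.8, 23.7, 23.6, 23.5],
}


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / float(len(values))


# Average over timesteps, as in the Avg column of the table.
averages = {method: mean(values) for method, values in psnr.items()}
for method in sorted(averages):
    print("%s average PSNR: %.1f" % (method, averages[method]))
```

Rounded to one decimal, the averages agree with the Avg column (25.9 for MCnet and 26.5 for Ours).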

Special thanks to the authors of MCnet for releasing the source code of their ICLR 2017 paper: Decomposing Motion and Content for Natural Video Sequence Prediction.

Please cite this paper in your publications if it helps your research:

@article{pang2018deep,

title={Deep RNN Framework for Visual Sequential Applications},

author={Pang, Bo and Zha, Kaiwen and Cao, Hanwen and Shi, Chen and Lu, Cewu},

journal={arXiv preprint arXiv:1811.09961},

year={2018}

}