This project integrates YOLOv11 for real-time object detection and MiDaS for depth estimation to create an intelligent pedestrian assistance system. The system identifies objects, calculates their depth, and provides real-time audio instructions to guide users safely in their walking environment.

- Trapezoid ROI:
  - Focuses object detection and depth estimation on the walking area, ignoring irrelevant regions like the sky.

- Object Detection & Depth Estimation:
  - Detects objects using YOLOv11 and estimates their distance using MiDaS.

- Interactive Audio Instructions:
  - Provides spoken feedback such as:
    - "Path is clear."
    - "Obstacle ahead, move left."
    - "Immediate obstacle nearby, please stop!"

- Efficient Processing:
  - Frames are resized to 15% of the original size for computational efficiency.

- Output Video:
  - Annotated video with bounding boxes, depth values, and walking area overlays.
  - Audio instructions saved as MP3 files for playback.

- Interactive Audio Assistant:
  - A conversational assistant to answer user queries.

- Google Maps Integration:
  - Dynamic path guidance and navigation.

- Heygen Integration:
  - Exploring enhanced interactivity features using Heygen.
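The frame downscaling and trapezoid ROI listed among the features can be sketched in plain Python as follows. This is a minimal illustration, not the notebook's actual code: the function names and the trapezoid proportions (`top_y`, `top_half_width`) are assumptions chosen for the example, and only the 15% scale factor comes from this README.

```python
from typing import List, Tuple

Point = Tuple[int, int]

SCALE = 0.15  # frames are resized to 15% of the original size (from this README)


def scaled_size(width: int, height: int, scale: float = SCALE) -> Tuple[int, int]:
    """Return the downscaled (width, height), never smaller than 1 pixel."""
    return max(1, round(width * scale)), max(1, round(height * scale))


def trapezoid_roi(width: int, height: int,
                  top_y: float = 0.5, top_half_width: float = 0.15) -> List[Point]:
    """Vertices of a walking-area trapezoid, starting at the bottom-left corner.

    top_y and top_half_width are hypothetical fractions of the frame size.
    """
    cx = width / 2
    top = int(height * top_y)
    return [
        (0, height - 1),                           # bottom-left
        (int(cx - width * top_half_width), top),   # top-left
        (int(cx + width * top_half_width), top),   # top-right
        (width - 1, height - 1),                   # bottom-right
    ]


def inside_roi(point: Point, roi: List[Point]) -> bool:
    """Ray-casting point-in-polygon test (counts edge crossings to the right)."""
    x, y = point
    inside = False
    for i in range(len(roi)):
        (x1, y1), (x2, y2) = roi[i], roi[(i + 1) % len(roi)]
        if (y1 > y) != (y2 > y):  # edge spans the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


if __name__ == "__main__":
    w, h = scaled_size(1920, 1080)
    roi = trapezoid_roi(w, h)
    print(w, h, roi)
    print(inside_roi((w // 2, int(h * 0.75)), roi))  # a point on the path
    print(inside_roi((w // 2, int(h * 0.10)), roi))  # a point in the sky
```

A detection could then be kept only when the centre of its bounding box passes `inside_roi`. With OpenCV, the same vertices could instead be passed to `cv2.fillPoly` to build a pixel mask.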

- Python 3.8+

- Required libraries:
  - `torch`
  - `torchvision`
  - `opencv-python`
  - `numpy`
  - `gTTS`
  - `ultralytics`
  - `Pillow`
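As a quick sanity check before opening the notebook, a short script along these lines can report which of the required libraries are not yet importable. It is a sketch only: the helper name `missing_packages` is hypothetical, and the mapping pairs each pip package name with the module name it is imported under.

```python
from importlib.util import find_spec

# pip package name -> module name used in `import` statements
REQUIRED = {
    "torch": "torch",
    "torchvision": "torchvision",
    "opencv-python": "cv2",
    "numpy": "numpy",
    "gTTS": "gtts",
    "ultralytics": "ultralytics",
    "Pillow": "PIL",
}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose module cannot be found."""
    return [pkg for pkg, module in required.items() if find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    print("Missing:", ", ".join(missing) if missing else "none")
```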

- Clone this repository:

  ```bash
  git clone https://github.com/SheemaMasood381/Virtual-Navigation-Assistance-Yolo11
  cd Virtual-Navigation-Assistance-Yolo11
  ```

- Install the dependencies. To set up the project environment, run:

  ```bash
  pip install -r requirements.txt
  ```

- Place the `yolo11n.pt` file in the root directory of your project.

- Add your video file to the project directory.

- Update the `video_path` variable in the relevant Jupyter Notebook cell to point to your video file.

- Execute the cells in the Jupyter Notebook sequentially.

- The processed video with annotations will be saved as `processed_video.mp4`.

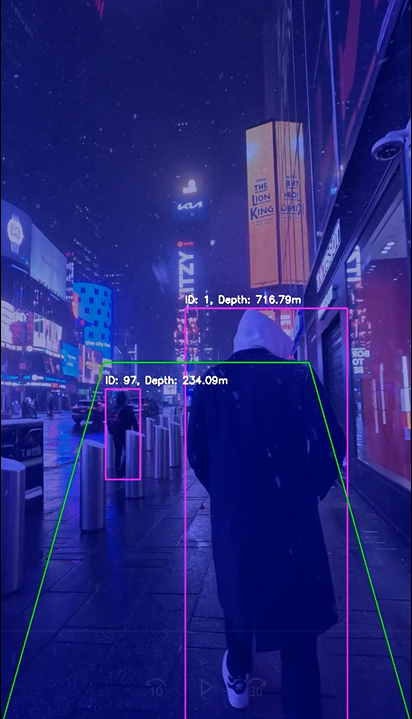

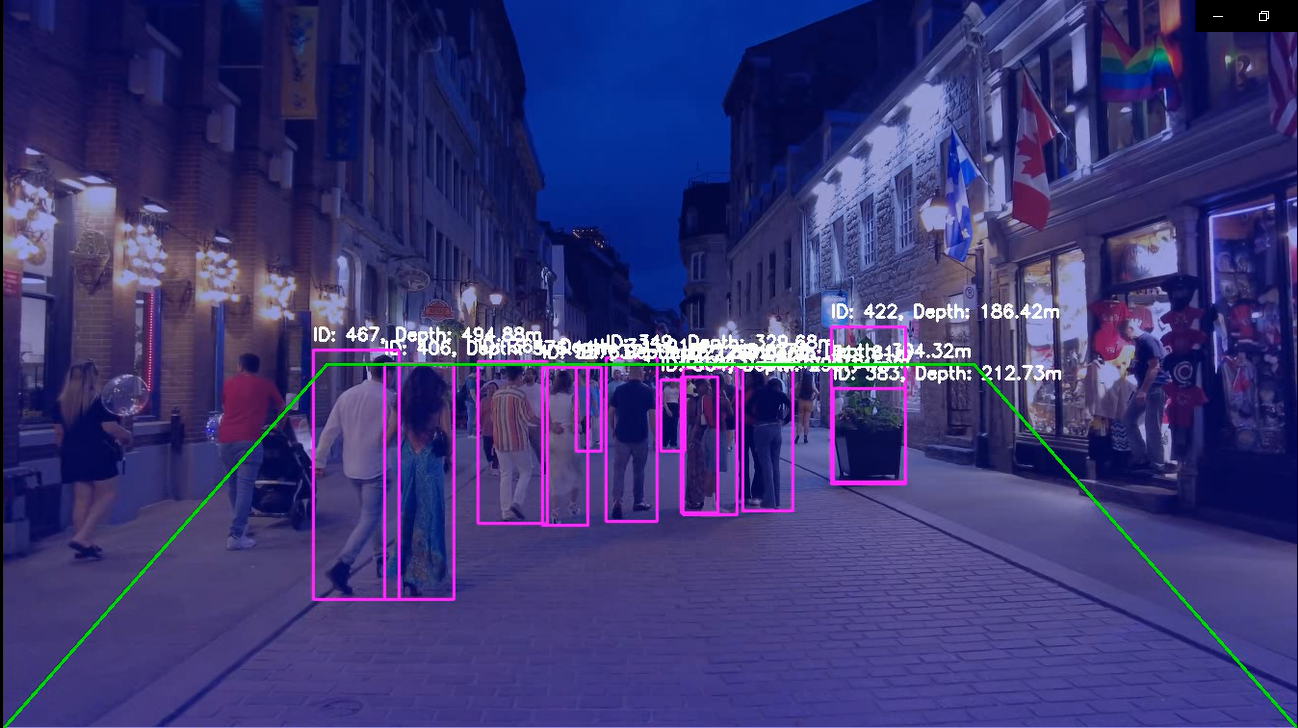

The output video appears as follows.

- Resizes the video to 15% of its original size.

- Applies a trapezoid mask to focus on the walking area.

- Uses YOLOv11 to detect and track objects within the trapezoid region.

- Uses MiDaS to estimate the depth of detected objects.

- Provides actionable instructions such as "Move left" or "Stop."
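The last step above, turning detections and depth into a spoken instruction, might look roughly like the sketch below. It is an illustration under stated assumptions rather than the project's actual logic: the `Detection` class, the two thresholds, and the "move right" variant are hypothetical, and the sketch assumes each detection already carries a distance-like value in which smaller means closer. How MiDaS output is converted to such a value is not specified in this README.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str
    center_x: float  # horizontal centre of the bounding box, in pixels
    distance: float  # distance-like value, smaller means closer (assumed)


STOP_DISTANCE = 1.0  # hypothetical threshold for an immediate obstacle
WARN_DISTANCE = 3.0  # hypothetical threshold for an obstacle ahead


def choose_instruction(detections: List[Detection], frame_width: int) -> str:
    """Pick one instruction based on the nearest detection inside the ROI."""
    if not detections:
        return "Path is clear."
    nearest = min(detections, key=lambda d: d.distance)
    if nearest.distance <= STOP_DISTANCE:
        return "Immediate obstacle nearby, please stop!"
    if nearest.distance <= WARN_DISTANCE:
        # Steer away from the side of the frame the obstacle occupies.
        side = "left" if nearest.center_x >= frame_width / 2 else "right"
        return f"Obstacle ahead, move {side}."
    return "Path is clear."


if __name__ == "__main__":
    frame_width = 288
    print(choose_instruction([], frame_width))
    print(choose_instruction([Detection("person", 200, 2.0)], frame_width))
    print(choose_instruction([Detection("car", 50, 0.5)], frame_width))
```

Reacting only to the nearest object keeps the audio feedback to one message per frame, which matters when each message takes a second or more to play.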

- YOLOv11: For robust object detection.

- MiDaS: For accurate depth estimation.

- gTTS: For generating real-time audio instructions.
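Since the instructions are saved as MP3 files, one way to use gTTS is to synthesize each distinct phrase once and reuse the file afterwards. The sketch below assumes this caching approach. The helper names and the `audio` output directory are hypothetical, and `gTTS(...).save(...)` needs network access because synthesis happens online.

```python
import re
from pathlib import Path


def instruction_filename(text: str) -> str:
    """Derive a stable MP3 file name from the instruction text."""
    slug = re.sub(r"[^a-z0-9]+", "_", text.lower()).strip("_")
    return f"{slug}.mp3"


def save_instruction(text: str, out_dir: str = "audio") -> Path:
    """Synthesize the instruction with gTTS unless the MP3 already exists."""
    from gtts import gTTS  # imported lazily so the helper above works without it

    path = Path(out_dir) / instruction_filename(text)
    if not path.exists():
        path.parent.mkdir(parents=True, exist_ok=True)
        gTTS(text=text, lang="en").save(str(path))
    return path


if __name__ == "__main__":
    for phrase in ("Path is clear.", "Obstacle ahead, move left."):
        print(instruction_filename(phrase))
```

Caching by phrase avoids a network round trip for every frame, since the set of instructions is small and fixed.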

Contributions are welcome! Feel free to fork this repository, create a feature branch, and submit a pull request.

This project is licensed under the MIT License. See the LICENSE file for details.