This work involves creating a Bird's Eye View (BEV) local map in which the position of the vehicle with respect to the road can be viewed from a perspective-transformed top view. BEV is commonly used for environmental perception because it clearly presents the location and scale of objects (in this case, the lanes and the vehicle). The focus is on achieving a high frame rate (i.e., a short cycle time) for the BEV, which allows faster tracking of dynamically changing trajectories. This is also a crucial factor for time-critical applications such as autonomous driving and collision avoidance. The objectives of this work are listed below:

- Creating BEV from four fish-eye cameras.

- 2D vehicle position and heading information.

- Both tasks must be performed in simulation (Unity) and on real hardware (NVIDIA Jetson Xavier).

- Output frame rate of 15-30 FPS.

The pipeline is broadly divided into three components based on the workflow:

- Calibration and homography (runs once on the PC).

- Seamless BEV (runs continuously on the Jetson).

- Extraction of lane information and the vehicle's 2D position (runs continuously on the Jetson).

The project uses the following software and hardware:

- Unity (2021.3.15f1 or later)

- Computing Platform (e.g., NVIDIA Jetson Xavier)

- Four Cameras (e.g., Fish Eye Camera)

- Auxiliary hardware (display, I/O devices, USB interfaces)

- Python 3.8

- OpenCV 4.6.0

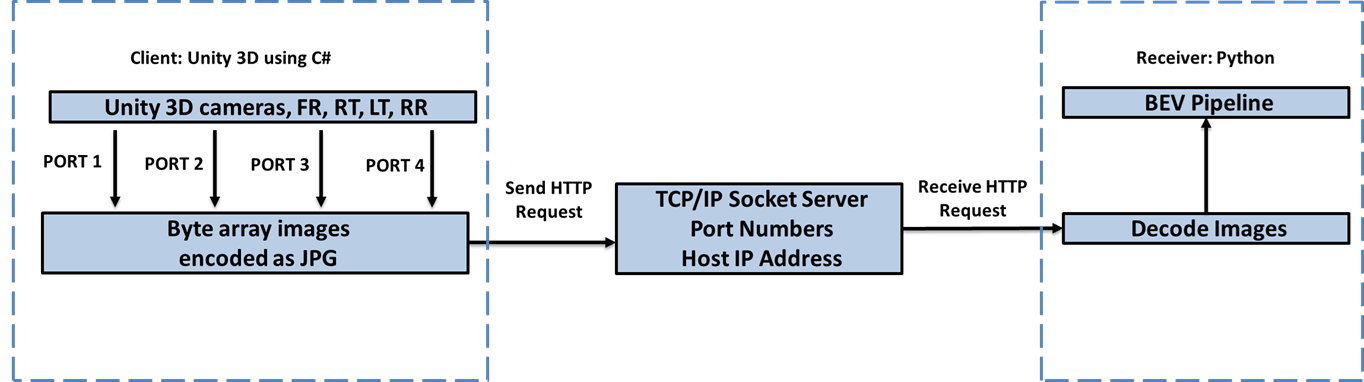

- Connectivity between Unity and Python using TCP/IP

- The first video shows the hardware implementation: the top-left window shows the test bench's heading and relative position from the lane, the center image is the BEV stitched from the four cameras, and the top-right window shows the test setup while it is driving (click on the image to watch the video).

- The second video shows the Unity implementation:

  - First, the Unity project is loaded in Unity and the simulation is started.

  - `Simulation_BEV_code.py` is then executed.

  - The top-left window shows the BEV with the car's heading and relative position from the lane, and the center window shows the Unity simulator as the user drives the car (click on the image to watch the video).

- A BEV was created from the four cameras, and the vehicle was localized by obtaining its position and heading with respect to the lanes.

- The final output is obtained at around 16 FPS.

- Both tasks have been simulated in Unity and implemented on real hardware.
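The README does not describe how position and heading are computed from the lanes. As one possible approach, the sketch below assumes lane-boundary pixels have already been extracted from the BEV (for example by color thresholding), fits a straight line to each boundary, and derives the lateral offset from the lane center and the lane's angle relative to the vehicle's forward axis. The function name, the straight-line fit, and the metres-per-pixel scale are assumptions for illustration.

```python
import numpy as np


def lane_pose(left_pts, right_pts, vehicle_xy, m_per_px):
    """Estimate lateral offset and relative heading from lane pixels in the BEV.

    left_pts, right_pts: (N, 2) arrays of (x, y) pixel coordinates on each lane
        boundary (image y grows downward; the vehicle faces up, toward -y).
    vehicle_xy: (x, y) pixel of the vehicle's reference point in the BEV.
    m_per_px: BEV scale in metres per pixel (assumed known from calibration).
    Returns (offset_m, lane_angle_deg):
        offset_m > 0 when the vehicle is right of the lane center;
        lane_angle_deg > 0 when the lane ahead points to the vehicle's right.
    """
    # Fit x = a*y + b to each boundary (lanes are near-vertical in the BEV).
    a_l, b_l = np.polyfit(left_pts[:, 1], left_pts[:, 0], 1)
    a_r, b_r = np.polyfit(right_pts[:, 1], right_pts[:, 0], 1)
    vx, vy = vehicle_xy
    center_x = 0.5 * ((a_l * vy + b_l) + (a_r * vy + b_r))
    offset_m = (vx - center_x) * m_per_px
    # Moving forward by one pixel (dy = -1) shifts the lane by dx = -a pixels.
    lane_angle_deg = np.degrees(np.arctan(-0.5 * (a_l + a_r)))
    return float(offset_m), float(lane_angle_deg)
```

For example, with vertical lane boundaries at x = 100 and x = 300 and the vehicle at x = 210, a scale of 0.01 m/px gives an offset of 0.1 m to the right and an angle of 0°. A straight-line fit is the simplest choice; curved roads would need a higher-order fit evaluated near the vehicle.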