GAPartNet: Cross-Category Domain-Generalizable Object Perception and Manipulation via Generalizable and Actionable Parts

CVPR 2023 Highlight

This is the official repository of GAPartNet: Cross-Category Domain-Generalizable Object Perception and Manipulation via Generalizable and Actionable Parts.

For more information, please visit our project page.

- 2024/4/4: We reimplemented our interaction algorithm with IsaacGym. See the `manipulation` folder for more details.

- 2024/3/5: We added some example assets in `example_assets` and wrote some usage demos in `demo.ipynb`.

- 2023/6/28: We polished our model with the user-friendly Lightning framework and released detailed training code! Check the `gapartnet` folder for more details!

- 2023/5/21: The GAPartNet Dataset has been released, including Object & Part Assets and Annotations, Rendered PointCloud Data, and our Pre-trained Checkpoint.


To obtain our dataset, please fill out this form and check the Terms&Conditions. Please cite our paper if you use our dataset.

Download our pre-trained checkpoint here! We also release a checkpoint trained on the full GAPartNet dataset, which gives the best performance, here. (Note that the checkpoint included in the dataset is outdated; please use this one instead.)

We release our network and checkpoints; check the gapartnet folder for more details. You can segment parts

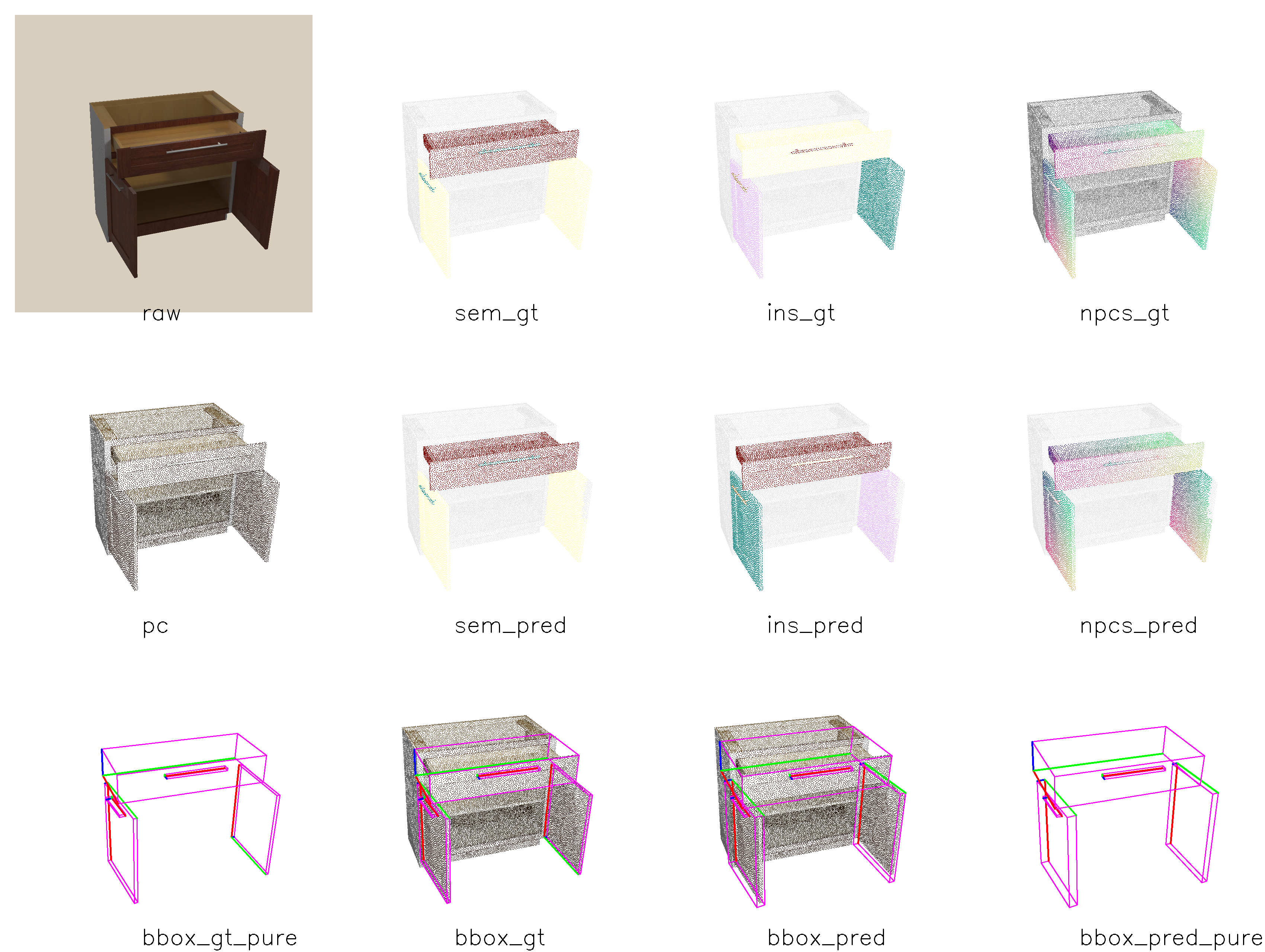

and estimate the pose of it. We also provide visualization code. This is a visualization example:

- Python 3.8

- PyTorch >= 1.11.0

- CUDA >= 11.3

- Open3D with extension (See install guide below)

- epic_ops (See install guide below)

- pointnet2_ops (See install guide below)

- other pip packages

See this repo for more details:

GAPartNet_env: This repo includes Open3D, epic_ops and pointnet2_ops. You can install them by following the instructions in this repo.
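If you want to confirm that your environment matches the requirements listed above before building the extensions, a small check like the one below may help. This is a minimal sketch, not part of the repository: the helper names (`version_tuple`, `at_least`, `check_environment`) are hypothetical, and it only inspects the Python interpreter plus `torch.__version__` and `torch.version.cuda`. Note that `torch.version.cuda` is the CUDA version PyTorch was built with, not necessarily the system toolkit, and the sketch does not verify Open3D, epic_ops, or pointnet2_ops.

```python
import re
import sys
from typing import List, Tuple


def version_tuple(version: str) -> Tuple[int, ...]:
    """Parse the leading numeric part of a version string, e.g. '1.11.0+cu113' -> (1, 11, 0)."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"unparseable version: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def at_least(version: str, minimum: str) -> bool:
    """Return True if `version` >= `minimum`, comparing numeric components."""
    v, m = version_tuple(version), version_tuple(minimum)
    # Pad with zeros so that "11.3" and "11.3.0" compare as equal.
    width = max(len(v), len(m))
    v += (0,) * (width - len(v))
    m += (0,) * (width - len(m))
    return v >= m


def check_environment() -> List[str]:
    """Return human-readable problems with the environment (empty list if none found)."""
    problems: List[str] = []
    if sys.version_info[:2] != (3, 8):
        problems.append(f"Python 3.8 expected, found {sys.version.split()[0]}")
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
        return problems
    if not at_least(torch.__version__, "1.11.0"):
        problems.append(f"PyTorch >= 1.11.0 required, found {torch.__version__}")
    # CUDA version PyTorch was compiled against; None for CPU-only builds.
    cuda = torch.version.cuda
    if cuda is None or not at_least(cuda, "11.3"):
        problems.append(f"CUDA >= 11.3 required, found {cuda}")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```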

See the `gapartnet` folder for more details.

```bash
cd gapartnet
CUDA_VISIBLE_DEVICES=0 \
python train.py test -c gapartnet.yaml \
    --model.init_args.training_schedule "[0,0]" \
    --model.init_args.ckpt ckpt/release.ckpt
```

Notice:

- We provide visualization code. You can change the config in `model.init_args.visualize_cfg` to control:

    - whether to visualize (`visualize`)

    - where to save results (`SAVE_ROOT`)

    - what to visualize (`save_option`), which can include `["raw", "pc", "sem_pred", "sem_gt", "ins_pred", "ins_gt", "npcs_pred", "npcs_gt", "bbox_gt", "bbox_gt_pure", "bbox_pred", "bbox_pred_pure"]`

    - the number of visualization samples (`sample_num`)

- We fixed some bugs in the mAP computation; check the code for more details.
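The visualization options above could be assembled and sanity-checked as in the sketch below. It assumes `visualize_cfg` is a plain mapping with the keys `visualize`, `SAVE_ROOT`, `save_option`, and `sample_num`; the helper `make_visualize_cfg` and its default values are hypothetical, so check `gapartnet.yaml` and the code for the actual layout.

```python
from typing import Any, Dict, Iterable

# The save_option values listed in this README.
ALLOWED_SAVE_OPTIONS = frozenset({
    "raw", "pc", "sem_pred", "sem_gt", "ins_pred", "ins_gt",
    "npcs_pred", "npcs_gt", "bbox_gt", "bbox_gt_pure", "bbox_pred", "bbox_pred_pure",
})


def make_visualize_cfg(
    save_root: str,
    save_option: Iterable[str] = ("pc", "sem_pred", "ins_pred"),  # hypothetical default
    sample_num: int = 10,  # hypothetical default
    visualize: bool = True,
) -> Dict[str, Any]:
    """Build a visualize_cfg-style dict, rejecting unknown save options."""
    options = list(save_option)
    unknown = [opt for opt in options if opt not in ALLOWED_SAVE_OPTIONS]
    if unknown:
        raise ValueError(f"unknown save_option entries: {unknown}")
    if sample_num <= 0:
        raise ValueError("sample_num must be a positive integer")
    return {
        "visualize": visualize,
        "SAVE_ROOT": save_root,
        "save_option": options,
        "sample_num": sample_num,
    }


if __name__ == "__main__":
    # Compare predicted and ground-truth semantic segmentation on 5 samples.
    print(make_visualize_cfg("vis_results", save_option=["sem_pred", "sem_gt"], sample_num=5))
```

Pairing a `*_pred` option with its `*_gt` counterpart, as in the usage line, is one way to compare predictions against ground truth side by side.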

You can run the following command to train the network:

```bash
cd gapartnet
CUDA_VISIBLE_DEVICES=0 \
python train.py fit -c gapartnet.yaml
```

Notice:

- For training, please use a staged schedule: first train the semantic segmentation backbone and head, then add the clustering and scorenet supervision for instance segmentation. You can change the schedule in the config (`model.init_args.training_schedule`). The schedule is a list: the first number indicates the epoch at which clustering and scorenet training starts, and the second number indicates the epoch at which npcsnet training starts. For example, `[5,10]` means that clustering and scorenet training will start at epoch 5, and npcsnet training will start at epoch 10.

- If you want to debug, add `--model.init_args.debug True` to the command and also set `data.init_args.xxx_few_shot` in the config to `True`, where `xxx` is the name of the training and validation sets.

- We also support multi-GPU parallel training. Set `CUDA_VISIBLE_DEVICES` to the GPUs you want to use; e.g., `CUDA_VISIBLE_DEVICES=3,6` means you want to use two GPUs, #3 and #6, for training.
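The two-number training schedule can be read as start epochs for each training stage. The sketch below is a hypothetical illustration of that rule (the function `active_stages` and its dictionary keys are not from the repository), assuming epochs are counted from 0 and a stage stays active from its start epoch onward.

```python
from typing import Dict, Sequence


def active_stages(epoch: int, training_schedule: Sequence[int]) -> Dict[str, bool]:
    """Return which parts of the network receive supervision at `epoch`.

    training_schedule[0]: epoch at which clustering + scorenet training starts.
    training_schedule[1]: epoch at which npcsnet training starts.
    The semantic segmentation backbone and head are trained from the start.
    """
    if len(training_schedule) != 2:
        raise ValueError("training_schedule must have exactly two entries")
    start_instance, start_npcs = training_schedule
    return {
        "semantic_segmentation": True,
        "clustering_and_scorenet": epoch >= start_instance,
        "npcsnet": epoch >= start_npcs,
    }


if __name__ == "__main__":
    # With [5,10]: epochs 0-4 semantic only, 5-9 add instance segmentation, 10+ add npcsnet.
    for epoch in (0, 4, 5, 9, 10):
        print(epoch, active_stages(epoch, [5, 10]))
```

Under this reading, the `"[0,0]"` schedule passed in the test command enables every stage from epoch 0, which is presumably why it is used at evaluation time so that all heads run.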
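`CUDA_VISIBLE_DEVICES` also renumbers the GPUs a process sees: with `CUDA_VISIBLE_DEVICES=3,6`, the process sees two devices, `cuda:0` (physical GPU #3) and `cuda:1` (physical GPU #6). The sketch below illustrates that mapping in plain Python; the helper names `visible_gpus` and `logical_to_physical` are hypothetical, and the code neither queries the hardware nor inspects the training script.

```python
import os
from typing import Dict, List, Mapping, Optional


def visible_gpus(env: Optional[Mapping[str, str]] = None) -> Optional[List[str]]:
    """Return the GPU IDs listed in CUDA_VISIBLE_DEVICES, or None if it is unset.

    IDs are kept as strings because the variable may also hold GPU UUIDs.
    An empty string means no GPU is visible, so an empty list is returned.
    """
    env = os.environ if env is None else env
    value = env.get("CUDA_VISIBLE_DEVICES")
    if value is None:
        return None
    return [token.strip() for token in value.split(",") if token.strip()]


def logical_to_physical(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Map the device names seen inside the process to the listed physical IDs."""
    gpus = visible_gpus(env)
    if gpus is None:
        raise RuntimeError("CUDA_VISIBLE_DEVICES is unset; all GPUs are visible")
    return {f"cuda:{index}": gpu for index, gpu in enumerate(gpus)}


if __name__ == "__main__":
    print(logical_to_physical({"CUDA_VISIBLE_DEVICES": "3,6"}))
```

This is why code inside the training process refers to `cuda:0` and `cuda:1` even when the GPUs selected on the machine are #3 and #6.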

If you find our work useful in your research, please consider citing:

```bibtex
@article{geng2022gapartnet,
  title={GAPartNet: Cross-Category Domain-Generalizable Object Perception and Manipulation via Generalizable and Actionable Parts},
  author={Geng, Haoran and Xu, Helin and Zhao, Chengyang and Xu, Chao and Yi, Li and Huang, Siyuan and Wang, He},
  journal={arXiv preprint arXiv:2211.05272},
  year={2022}
}
```

This work and the dataset are licensed under CC BY-NC 4.0.

If you have any questions, please open a GitHub issue or contact us:

Haoran Geng: [email protected]

Helin Xu: [email protected]

Chengyang Zhao: [email protected]

He Wang: [email protected]