Residual networks implementation using the Keras-1.0 functional API. It works with both the theano and tensorflow backends, and with both 'th' and 'tf' image dim ordering.

- Deep Residual Learning for Image Recognition (the 2015 ImageNet competition winner)

- Identity Mappings in Deep Residual Networks

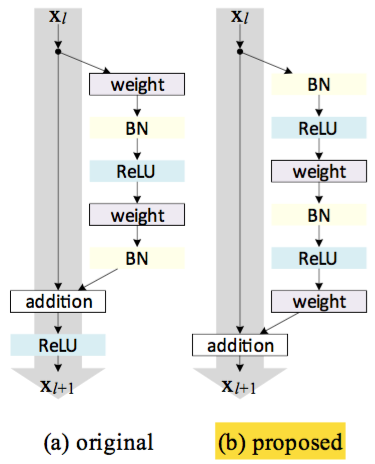

The residual blocks are based on the improved scheme proposed in Identity Mappings in Deep Residual Networks, as shown in figure (b).

Both bottleneck and basic residual blocks are supported. To switch between them, simply provide the corresponding block function.
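As a rough illustration of what "providing the block function" means, the sketch below passes the block type around as an ordinary Python callable. It is not the repository's code: `basic_block`, `bottleneck` and `build_stage` are hypothetical names, and each block is described as a plain list of `(kernel_size, num_filters)` tuples instead of real Keras layers. The layer layouts follow the ResNet paper (two 3x3 convolutions for the basic block; 1x1, 3x3, 1x1 with a 4x wider last convolution for the bottleneck).

```python
# Hypothetical sketch: blocks are described as lists of (kernel_size, num_filters)
# tuples so the example runs without Keras installed.

def basic_block(num_filters):
    """Basic residual block: two 3x3 convolutions."""
    return [(3, num_filters), (3, num_filters)]


def bottleneck(num_filters):
    """Bottleneck residual block: 1x1 -> 3x3 -> 1x1, the last one 4x wider."""
    return [(1, num_filters), (3, num_filters), (1, num_filters * 4)]


def build_stage(block_fn, num_filters, repetitions):
    """Stack `repetitions` blocks of whatever type `block_fn` produces."""
    return [block_fn(num_filters) for _ in range(repetitions)]


# Switching block types only changes the function that is passed in.
stage_basic = build_stage(basic_block, num_filters=64, repetitions=2)
stage_bottleneck = build_stage(bottleneck, num_filters=64, repetitions=3)
```

Because the builder only ever calls `block_fn`, the rest of the network definition does not need to know which block type is in use.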

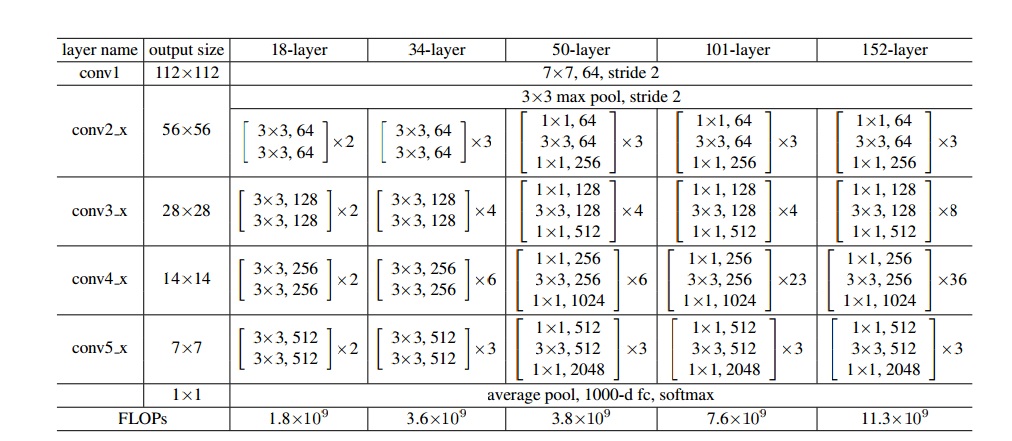

The architecture is based on the 50-layer sample (snippet from the paper).

There are two key aspects to note here:

- conv2_1 has a stride of (1, 1), while the remaining conv layers have a stride of (2, 2) at the beginning of the block. This fact is expressed in the following lines.

- At the end of the first skip connection of a block, there is a mismatch in num_filters, width and height at the merge layer. This is addressed in `_shortcut` by using `conv 1X1` with an appropriate stride. For the remaining cases, the input is directly merged with the residual block as identity.
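The two points above can be sketched in plain Python, without Keras, to make the shape bookkeeping explicit. This is a minimal illustration under stated assumptions, not the repository's implementation: `block_strides` and `shortcut_plan` are hypothetical helpers, shapes are given as `(height, width, channels)` (i.e. 'tf' ordering), and the "plan" is a dictionary describing what the shortcut would need to do.

```python
# Hypothetical helpers; shapes are (height, width, channels), i.e. 'tf' ordering.

def block_strides(stage_index, repetitions):
    """Strides of the first conv in each block of a stage.

    stage_index 0 stands for conv2_x, which keeps stride (1, 1) throughout;
    later stages downsample with stride (2, 2) in their first block only.
    """
    strides = []
    for block_index in range(repetitions):
        if block_index == 0 and stage_index > 0:
            strides.append((2, 2))
        else:
            strides.append((1, 1))
    return strides


def shortcut_plan(input_shape, residual_shape):
    """Decide how the input must be transformed before the merge layer."""
    stride_h = int(round(input_shape[0] / residual_shape[0]))
    stride_w = int(round(input_shape[1] / residual_shape[1]))
    equal_channels = input_shape[2] == residual_shape[2]

    # A 1x1 convolution fixes any mismatch in width, height or num_filters.
    if stride_h > 1 or stride_w > 1 or not equal_channels:
        return {
            "op": "conv1x1",
            "num_filters": residual_shape[2],
            "strides": (stride_h, stride_w),
        }
    # Shapes already agree, so the input is merged as identity.
    return {"op": "identity"}


first_block = shortcut_plan((56, 56, 256), (28, 28, 512))
later_block = shortcut_plan((28, 28, 512), (28, 28, 512))
```

With these example shapes, `first_block` describes a 1x1 convolution with 512 filters and stride (2, 2), while `later_block` is a plain identity merge.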