PyTorch implementation of the following paper:

Junke Wang1,2, Dongdong Chen3, YiWeng Xie1,2, Chong Luo4, Xiyang Dai3, Lu Yuan3, Zuxuan Wu1,2†, Yu-Gang Jiang1,2†

1Shanghai Key Lab of Intell. Info. Processing, School of CS, Fudan University.

2Shanghai Collaborative Innovation Center on Intelligent Visual Computing.

3Microsoft Cloud + AI, 4Microsoft Research Asia.

† denotes corresponding authors.

- 🔥 We have upgraded ChatVideo by integrating a more powerful tracking model (SAM2) and a more powerful captioning model (LLaVA-NeXT).

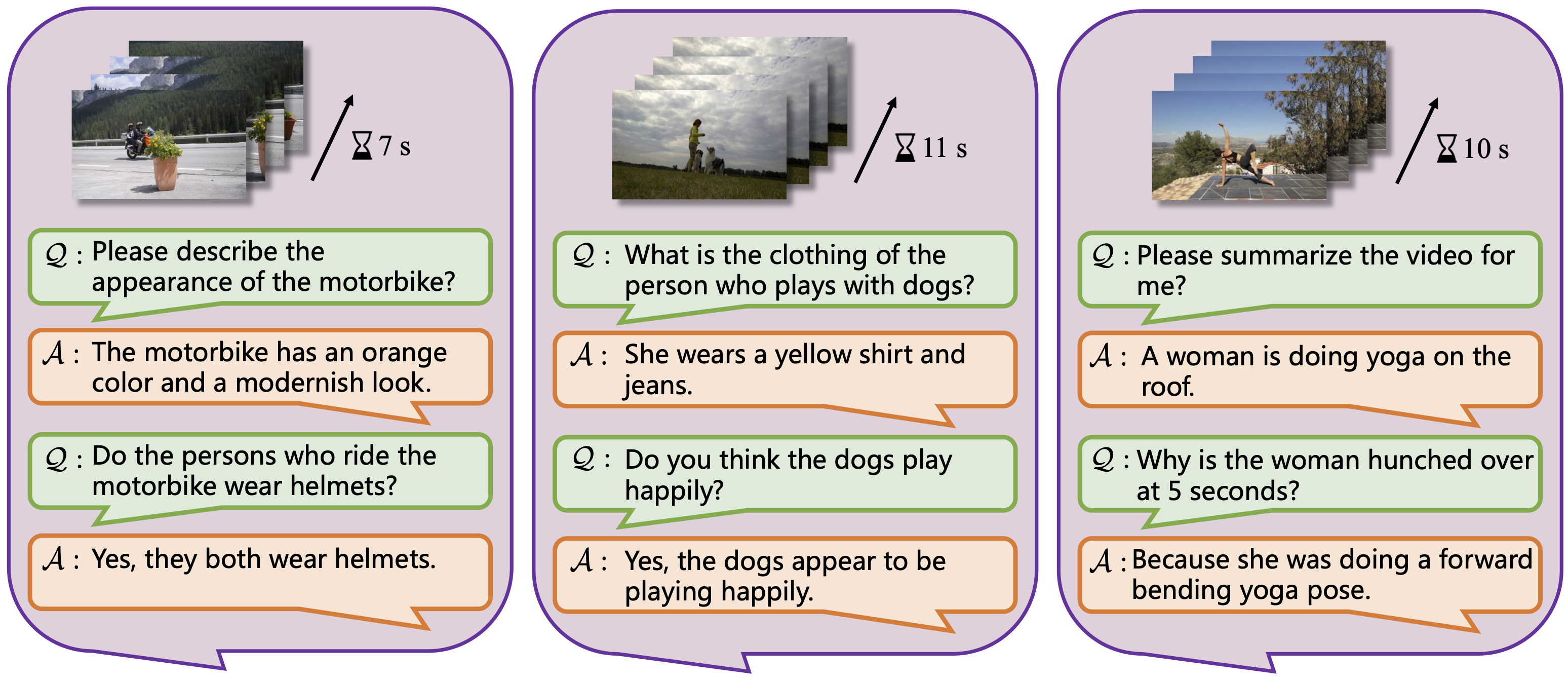

- 🚀 ChatVideo lets ChatGPT watch videos for you. For the first time, it enables instance-level understanding of videos by detecting, tracking, and captioning tracklets.

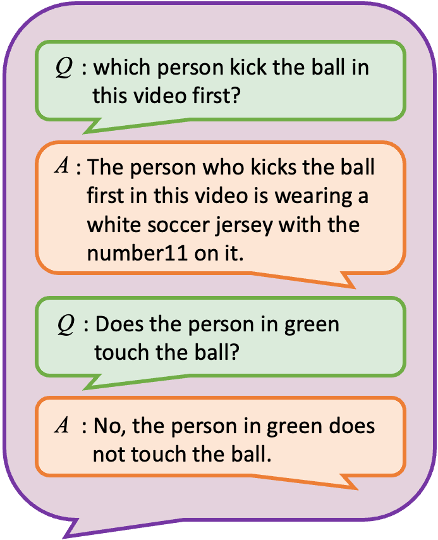

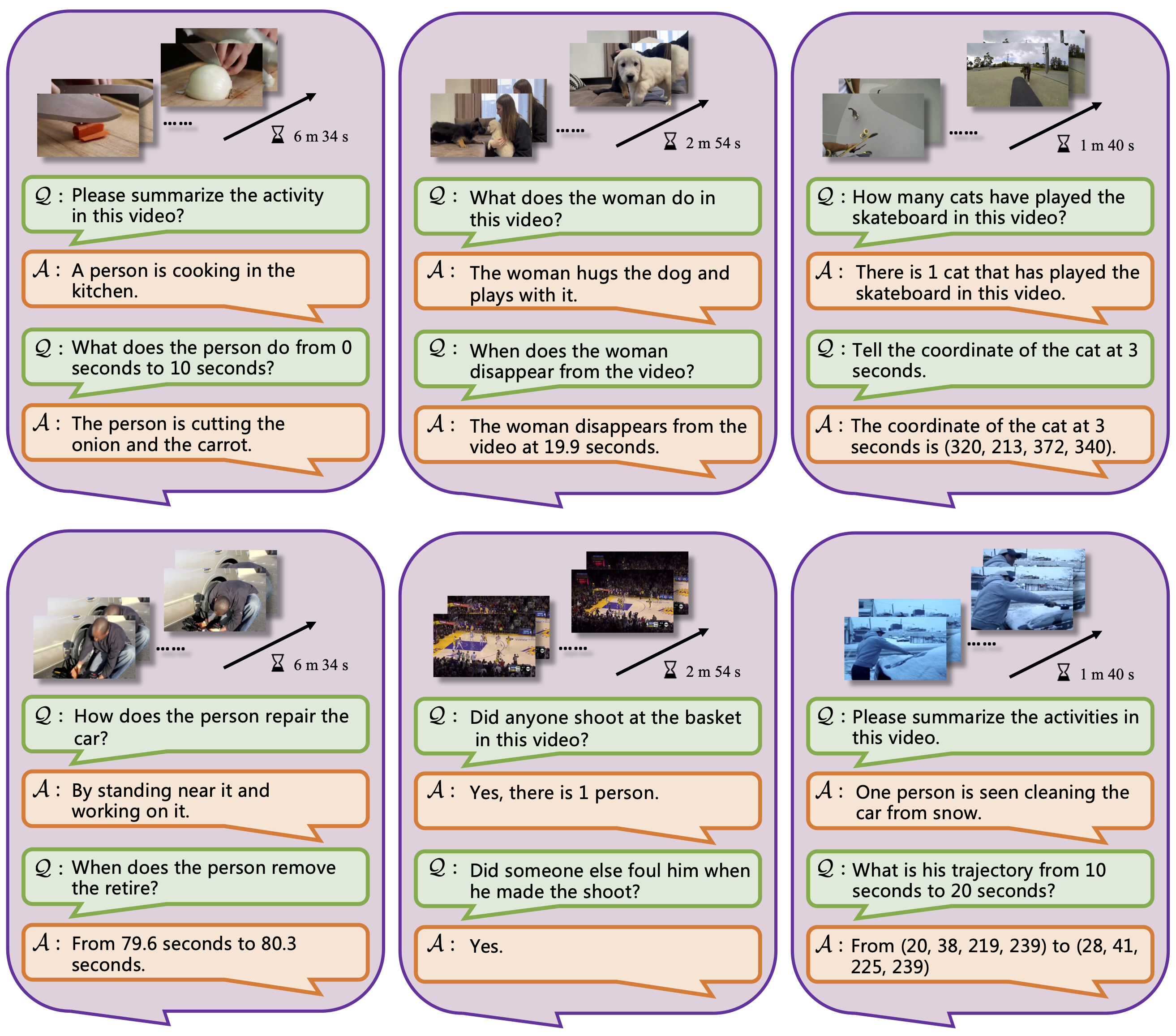

ChatVideo for Appearance Understanding.

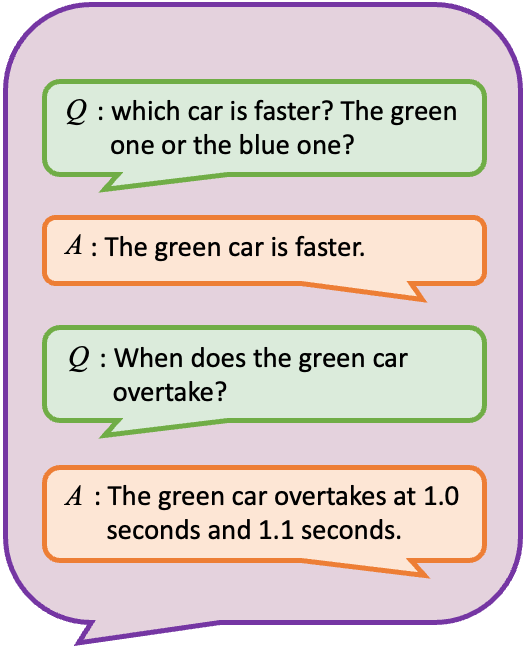

ChatVideo for Motion Understanding.

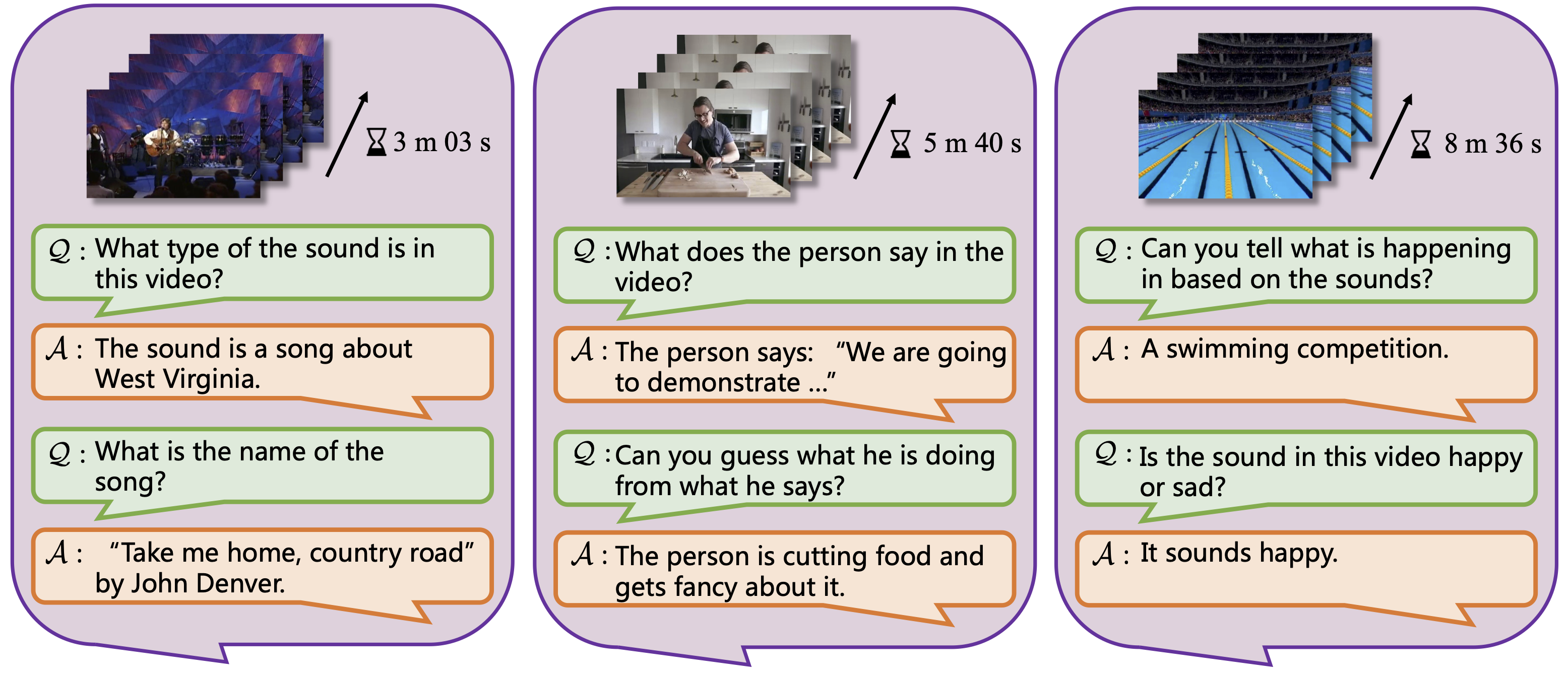

ChatVideo for Audio Understanding.
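To make the detect, track, and caption idea more concrete, here is a minimal sketch of how per-instance tracklets could be stored and turned into text for a chat model. It is an illustration only, not the repository's actual data model: the `Tracklet` class, its fields, and `tracklets_to_prompt` are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class Tracklet:
    """Hypothetical record for one tracked instance (not the repo's real schema)."""
    track_id: int
    category: str          # label produced by the detector
    start_frame: int       # first frame where the instance is tracked
    end_frame: int         # last frame where the instance is tracked
    appearance: str = ""   # caption describing how the instance looks
    motion: str = ""       # caption describing how the instance moves


def tracklets_to_prompt(tracklets, fps=30.0):
    """Render tracklets as plain text, ordered by the time they first appear."""
    lines = []
    for t in sorted(tracklets, key=lambda item: item.start_frame):
        start, end = t.start_frame / fps, t.end_frame / fps
        lines.append(
            f"[{t.track_id}] {t.category}, {start:.1f}s-{end:.1f}s"
            f" | appearance: {t.appearance} | motion: {t.motion}"
        )
    return "\n".join(lines)


if __name__ == "__main__":
    demo = [
        Tracklet(2, "person", 30, 120, "wearing a red jacket", "walks left to right"),
        Tracklet(1, "dog", 0, 50, "small and brown", "runs toward the camera"),
    ]
    print(tracklets_to_prompt(demo, fps=10.0))
```

Keeping each instance as a small text-friendly record is what would let a language model answer questions about a specific object rather than the video as a whole.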

git clone https://github.com/yiwengxie/Chat-Video.git

cd Chat-Video

First, create and activate the conda environment:

conda create --name chat python=3.10

conda activate chat

# Install PyTorch:

conda install pytorch==2.3.1 torchvision==0.18.1 torchaudio==2.3.1 pytorch-cuda=12.1 -c pytorch -c nvidia
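Before installing the remaining components, it can help to confirm that PyTorch was installed with CUDA support. The snippet below is a small sanity check, not part of the repository; the function name `describe_torch_install` is made up for this example, and it only uses standard PyTorch attributes.

```python
import importlib.util


def describe_torch_install():
    """Return a short report about the local PyTorch install, or its absence."""
    if importlib.util.find_spec("torch") is None:
        return {"installed": False}

    import torch  # imported lazily so the check also runs without PyTorch

    return {
        "installed": True,
        "torch_version": torch.__version__,
        "cuda_build": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    for key, value in describe_torch_install().items():
        print(f"{key}: {value}")
```

With the command above, one would expect the reported version to start with `2.3.1` and the CUDA build to be `12.1`; if `cuda_available` is false, the GPU driver or the conda channel selection is worth checking first.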

# Grounding DINO and SAM2 (paths are relative to the repository root)

cd projects/GroundedSAM2/

pip install -e .

pip install --no-build-isolation -e grounding_dino

# LLaVA-NeXT (run from the repository root)

cd projects/LLaVA_NeXT/

pip install -e ".[train]"

# Install other dependencies:

pip install -r requirements.txt

cd projects/GroundedSAM2/checkpoints

bash download_ckpts.sh

cd ../gdino_checkpoints

bash download_ckpts.sh
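After both download scripts finish, a quick check that the two checkpoint directories actually contain weight files can save a failed first run. The sketch below assumes it is run from the repository root and that the weights use a `.pt` or `.pth` suffix; that suffix list and the helper name `find_weights` are assumptions for this example, so adjust them to match what the scripts download.

```python
from pathlib import Path

# Directories used by the download steps, relative to the repository root.
CHECKPOINT_DIRS = [
    Path("projects/GroundedSAM2/checkpoints"),
    Path("projects/GroundedSAM2/gdino_checkpoints"),
]

# Assumption: weight files end in one of these suffixes.
WEIGHT_SUFFIXES = {".pt", ".pth"}


def find_weights(directory, suffixes=WEIGHT_SUFFIXES):
    """Return the sorted weight files directly inside `directory` (empty if missing)."""
    directory = Path(directory)
    if not directory.is_dir():
        return []
    return sorted(
        p for p in directory.iterdir() if p.is_file() and p.suffix in suffixes
    )


if __name__ == "__main__":
    for ckpt_dir in CHECKPOINT_DIRS:
        weights = find_weights(ckpt_dir)
        status = "ok" if weights else "MISSING"
        print(f"[{status}] {ckpt_dir}: {[w.name for w in weights]}")
```

A `MISSING` line usually means the corresponding `download_ckpts.sh` was interrupted or run from a different directory.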

This project is released under the MIT license. Please see the LICENSE file for more information.

We thank the authors of the following open-source projects: SAM2, Grounded-SAM2, LLaVA-NeXT, Whisper, UNINEXT, and BLIP2.

If you find this repository helpful, please consider citing:

@article{wang2023chatvideo,

title={ChatVideo: A Tracklet-centric Multimodal and Versatile Video Understanding System},

author={Wang, Junke and Chen, Dongdong and Luo, Chong and Dai, Xiyang and Yuan, Lu and Wu, Zuxuan and Jiang, Yu-Gang},

journal={arXiv preprint arXiv:2304.14407},

year={2023}

}

@inproceedings{wang2022omnivl,

title={Omnivl: One foundation model for image-language and video-language tasks},

author={Wang, Junke and Chen, Dongdong and Wu, Zuxuan and Luo, Chong and Zhou, Luowei and Zhao, Yucheng and Xie, Yujia and Liu, Ce and Jiang, Yu-Gang and Yuan, Lu},

booktitle={NeurIPS},

year={2022}

}

@article{wang2023omnitracker,

title={Omnitracker: Unifying object tracking by tracking-with-detection},

author={Wang, Junke and Chen, Dongdong and Wu, Zuxuan and Luo, Chong and Dai, Xiyang and Yuan, Lu and Jiang, Yu-Gang},

journal={arXiv preprint arXiv:2303.12079},

year={2023}

}

@inproceedings{wang2024omnivid,

title={Omnivid: A generative framework for universal video understanding},

author={Wang, Junke and Chen, Dongdong and Luo, Chong and He, Bo and Yuan, Lu and Wu, Zuxuan and Jiang, Yu-Gang},

booktitle={CVPR},

year={2024}

}