This repository contains the source code for Data-driven Model Predictive Control (DMRPC).

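LSTM training log. Always train with double-precision tensors (`torch.DoubleTensor`, i.e. float64); otherwise, precision problems appear after normalization.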

| Training iterations | Validation error | Training error (vs. first-principles model) |
|---|---|---|
| 600 | 0.00000024 | 5.94e-5 |
| 700 | 0.00000027 | 7.28e-5 |
| 800 | 0.00000017 | 7.28e-5 |
| 900 | 0.00000015 | 3.375e-5 |
| 1000 | 0.00000010 | 3.017e-5 |
| 1100 | 0.00000015 | 3.017e-5 |
| 1200 | 0.00000016 | 2.948e-5 |
| 1300 | 0.00000009 | 2.013e-5 |
| 1400 | 0.00000010 | 2.103e-5 |
| 1500 | 0.00000007 | 1.154e-5 |
| 1600 | 0.00000006 | 1.160e-5 |
| 1700 | 0.00000010 | 1.835e-5 |
| 1800 | 0.00000004 | 1.1212e-5 |
| 1900 | 0.00000011 | 2.16e-5 |
| 2000 | 0.00000005 | 1.64e-5 |
| 2100 | 0.00000008 | 2.546e-5 |

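The final results are similar across the different numbers of training iterations; the main influencing factor is the normalized values. In PyTorch, if float32 is the default precision and gradients are computed by forward differences, then after normalization the value with the forward-difference term is identical to the value without it. Therefore, make sure float64 is used as the default data precision from now on.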
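The float32 problem described above can be reproduced without PyTorch. The sketch below is a hypothetical illustration, not code from this repository: it rounds values to float32 with Python's `struct` module, uses min-max normalization, and picks an arbitrary state value of 400 with range [300, 500] and a forward-difference step `h = 1e-5`. None of these numbers come from the training runs in the table.

```python
import struct


def to_f32(x: float) -> float:
    """Round a Python float (IEEE-754 double) to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def to_f64(x: float) -> float:
    """Python floats are already float64; kept for symmetry with to_f32."""
    return float(x)


def normalize(x: float, x_min: float, x_max: float) -> float:
    """Min-max normalization to [0, 1]."""
    return (x - x_min) / (x_max - x_min)


def forward_diff_of_normalized(x, h, x_min, x_max, cast):
    """Return (norm(x + h) - norm(x), slope), storing every value via `cast`."""
    n0 = cast(normalize(cast(x), x_min, x_max))
    n1 = cast(normalize(cast(x + h), x_min, x_max))  # input with the forward-difference term
    return n1 - n0, (n1 - n0) / h


# Hypothetical values: state near 400, normalization range [300, 500].
x, h, x_min, x_max = 400.0, 1e-5, 300.0, 500.0
diff32, slope32 = forward_diff_of_normalized(x, h, x_min, x_max, to_f32)
diff64, slope64 = forward_diff_of_normalized(x, h, x_min, x_max, to_f64)
print(diff32, slope32)  # 0.0 0.0 -> the perturbation is lost in float32
print(slope64)          # close to 1 / (x_max - x_min) = 0.005
```

In float32 the spacing between representable numbers near 400 is about 3e-5, so `400 + 1e-5` rounds back to 400 and both normalized values coincide. In PyTorch, the corresponding remedy is to call `torch.set_default_dtype(torch.float64)` before building the model and tensors, or to convert an existing model with `model.double()`.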

$$
\begin{array}{ll}
\mathcal{J} = & \min\limits_{u \in S(\Delta)} \displaystyle\int_{t_k}^{t_{k+N}} l(\tilde{x}(t), u(t))\, dt \\
\text{s.t.} & \dot{\tilde{x}}(t) = f_{nn}(\tilde{x}(t), u(t)) \\
& u(t) \in U, \quad \forall t \in [t_k, t_{k+N}) \\
& \tilde{x}(t_k) = \bar{x}(t_k) \\
& V(\tilde{x}(t)) \leq \rho_e, \quad \forall t \in [t_k, t_{k+N}), \quad \text{if } \bar{x}(t_k) \in \Omega_{\rho_e} \\
& \dot{V}(\bar{x}(t_k), u) \leq \dot{V}(\bar{x}(t_k), \phi(\bar{x}(t_k))), \quad \text{if } \bar{x}(t_k) \in \Omega_{\rho} \setminus \Omega_{\rho_e}
\end{array}
$$

where $l(\tilde{x}(t), u(t)) = \tilde{x}(t)^T Q \tilde{x}(t) + u(t)^T R u(t)$ is the stage cost and $Q$ and $R$ are weighting matrices.
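To make the structure of the LMPC problem concrete, the sketch below evaluates the objective and the Lyapunov-based constraints for one candidate input sequence. It is a hypothetical illustration, not the repository's implementation: it assumes a scalar state, a stand-in model in place of the trained network $f_{nn}$, explicit Euler integration, an input held constant over each sampling period $\Delta$ (the usual meaning of $S(\Delta)$ in LMPC), and a quadratic Lyapunov function $V(x) = p x^2$. The function names and the parameters `p`, `q`, `r`, and `substeps` are illustrative; `q` and `r` are scalar stand-ins for $Q$ and $R$. An optimizer would then optimize `lmpc_cost` over `u_seq` subject to `lyapunov_ok`.

```python
from typing import Callable, List, Sequence

# Hypothetical scalar stand-ins: in the repository, f_nn is the trained LSTM model.
Model = Callable[[float, float], float]   # f_nn(x, u) -> dx/dt
Controller = Callable[[float], float]     # phi(x) -> u


def simulate(f_nn: Model, x0: float, u_seq: Sequence[float],
             delta: float, substeps: int = 10) -> List[float]:
    """Explicit-Euler prediction of x~(t); each u in u_seq is held for one period delta."""
    h = delta / substeps
    xs = [x0]
    for u in u_seq:
        for _ in range(substeps):
            xs.append(xs[-1] + h * f_nn(xs[-1], u))
    return xs


def lmpc_cost(f_nn: Model, x0: float, u_seq: Sequence[float], delta: float,
              q: float, r: float, substeps: int = 10) -> float:
    """Left Riemann sum of l = q*x^2 + r*u^2 over [t_k, t_{k+N})."""
    h = delta / substeps
    xs = simulate(f_nn, x0, u_seq, delta, substeps)
    us = [u for u in u_seq for _ in range(substeps)]  # input applied on each Euler step
    # zip stops at len(us), so the final state x~(t_{k+N}) is not included.
    return sum(h * (q * x * x + r * u * u) for x, u in zip(xs, us))


def lyapunov_ok(f_nn: Model, phi: Controller, x0: float, u_seq: Sequence[float],
                delta: float, p: float, rho: float, rho_e: float,
                substeps: int = 10) -> bool:
    """Check the two LMPC stability constraints for the hypothetical V(x) = p*x^2."""
    def V(x: float) -> float:
        return p * x * x

    def V_dot(x: float, u: float) -> float:
        return 2.0 * p * x * f_nn(x, u)  # (dV/dx) * f_nn(x, u)

    if V(x0) <= rho_e:
        # x(t_k) in Omega_rho_e: predicted states must stay in Omega_rho_e
        # (checked only at the Euler grid points of [t_k, t_{k+N})).
        xs = simulate(f_nn, x0, u_seq, delta, substeps)[:-1]
        return all(V(x) <= rho_e for x in xs)
    if V(x0) <= rho:
        # x(t_k) in Omega_rho \ Omega_rho_e: the first input must decrease V
        # at least as fast as the Lyapunov-based controller phi.
        return V_dot(x0, u_seq[0]) <= V_dot(x0, phi(x0))
    return False  # outside Omega_rho: not covered by the formulation, treated as infeasible


# Usage with a linear stand-in model dx/dt = -x + u and phi(x) = -x.
def f(x: float, u: float) -> float:
    return -x + u


def phi(x: float) -> float:
    return -x


print(lyapunov_ok(f, phi, 1.0, [-1.0, -0.5, 0.0], 0.1, p=1.0, rho=4.0, rho_e=0.5))
print(lmpc_cost(f, 1.0, [-1.0, -0.5, 0.0], 0.1, q=1.0, r=0.1))
```

Splitting the check into two branches mirrors the two conditional constraints: inside $\Omega_{\rho_e}$ the whole prediction is bounded, while in $\Omega_{\rho} \setminus \Omega_{\rho_e}$ only the first applied input is compared against $\phi$.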

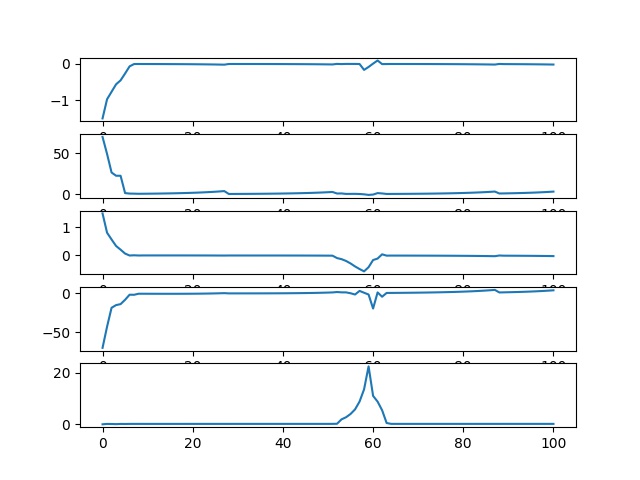

To test that the LMPC framework works in these experiments

Attack starts from

Attacks start from

This attack is difficult to detect during the first few steps.

Surge attacks initially behave like min-max attacks, maximizing the disruptive impact for a short period of time, and are then reduced to a lower value. In our case, the duration of the initial surge, in terms of sampling times, is selected as

where
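The surge profile described above can be sketched as a sequence of sensor biases. This is a hypothetical sketch, not the attack model used in the experiments: it assumes the attack is an additive bias on the measured state, and the names `surge_bias`, `attacked_measurements`, `attack_start`, `surge_steps`, `surge_value`, and `residual_value` are illustrative placeholders rather than the values selected in this work.

```python
from typing import List, Sequence


def surge_bias(k: int, attack_start: int, surge_steps: int,
               surge_value: float, residual_value: float) -> float:
    """Hypothetical additive bias on the measurement at sampling step k."""
    if k < attack_start:
        return 0.0               # no attack yet
    if k < attack_start + surge_steps:
        return surge_value       # initial surge, similar to a min-max attack
    return residual_value        # reduced bias, harder to detect


def attacked_measurements(y: Sequence[float], attack_start: int, surge_steps: int,
                          surge_value: float, residual_value: float) -> List[float]:
    """Apply the surge profile to a sequence of clean measurements."""
    return [yk + surge_bias(k, attack_start, surge_steps, surge_value, residual_value)
            for k, yk in enumerate(y)]


# Illustrative numbers only: attack at step 2, 3-step surge, then a smaller bias.
print(attacked_measurements([1.0] * 8, attack_start=2, surge_steps=3,
                            surge_value=0.5, residual_value=0.25))
# → [1.0, 1.0, 1.5, 1.5, 1.5, 1.25, 1.25, 1.25]
```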