🚩🚩🚩 This is the PyTorch implementation of PanoFormer (PanoFormer: Panorama Transformer for Indoor 360° Depth Estimation, ECCV 2022).

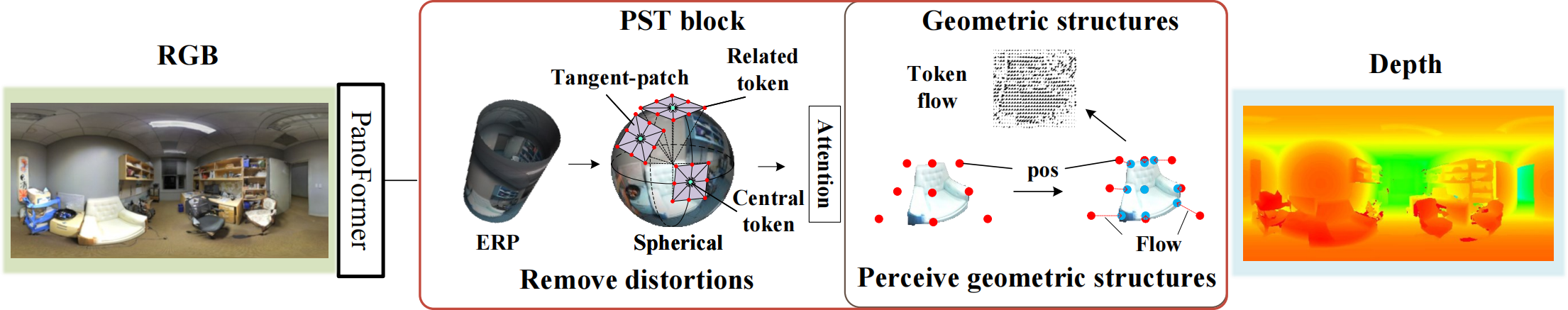

The motivation of PanoFormer.

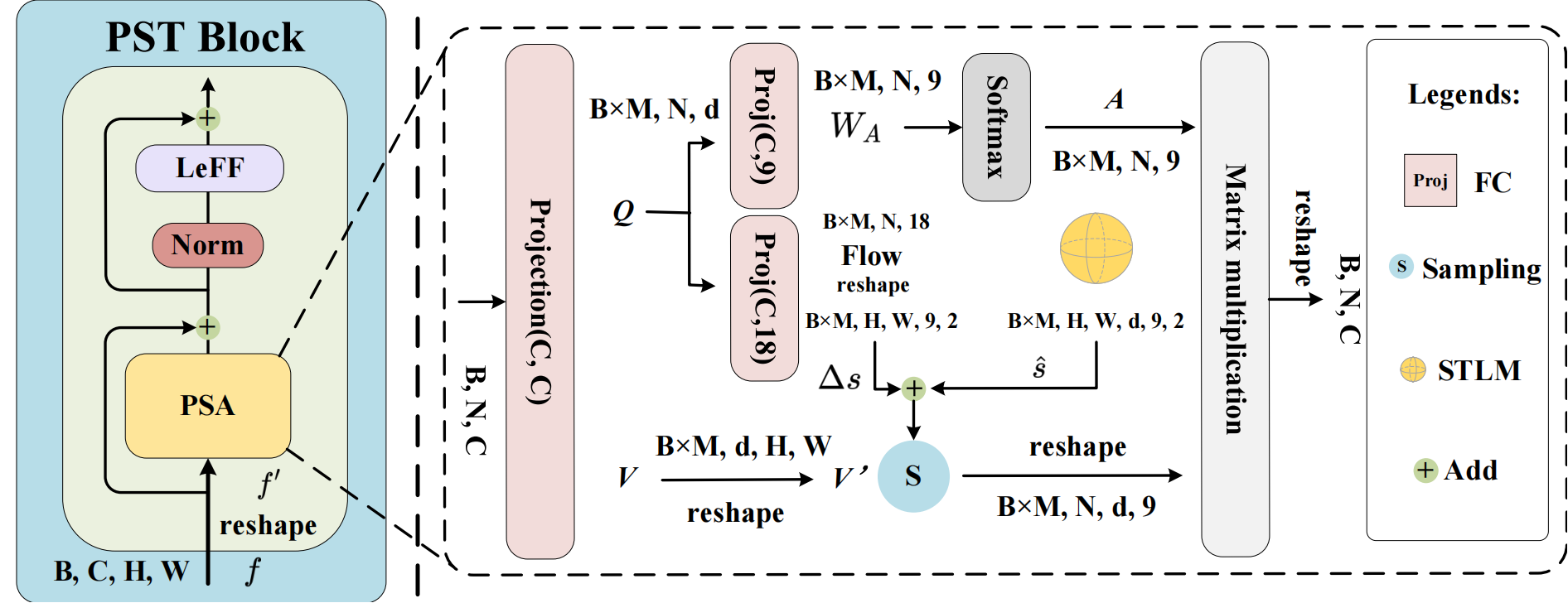

Framework of PanoFormer.

STLM

PSA

Download and prepare the datasets from their official webpages: Stanford2D3D, Matterport3D, and 3D60. Unfortunately, PanoSunCG is no longer available. For Matterport3D, please follow the processing strategy in UniFuse.

😥Attention

The versions of Stanford2D3D and Matterport3D contained in 3D60 have a problem: the processed Matterport3D and Stanford2D3D images leak depth information through pixel brightness. This was pointed out by our reviewers, and we list it here to alert subsequent researchers.
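One hypothetical way to check a dataset for this kind of leak is to measure how strongly pixel brightness tracks depth. The sketch below is our own diagnostic, not part of the PanoFormer code; the function name, the BT.601 luma weights, and the `max_depth` validity range are all assumptions. It computes the Pearson correlation between luminance and depth over valid pixels, where a magnitude close to 1 suggests brightness encodes depth.

```python
import numpy as np


def brightness_depth_correlation(rgb, depth, max_depth=10.0):
    """Pearson correlation between pixel luminance and depth (hypothetical diagnostic).

    rgb:   (H, W, 3) array of pixel values
    depth: (H, W) array of depths in meters
    """
    # Collapse RGB to a single brightness channel using ITU-R BT.601 luma weights.
    luma = rgb[..., 0] * 0.299 + rgb[..., 1] * 0.587 + rgb[..., 2] * 0.114
    # Only compare pixels with usable ground truth (assumed validity range).
    valid = (depth > 0) & (depth < max_depth)
    return float(np.corrcoef(luma[valid].ravel(), depth[valid].ravel())[0, 1])
```

For a normal photograph the value should be small in magnitude; values near -1 or 1 across many images would be consistent with the leak described above.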

❤️Recommended datasets

Stanford2D3D, Pano3D (with a solid baseline), and Structured3D

For calculating MAE and MRE, please refer to SliceNet. For the other metrics, please refer to UniFuse.
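For orientation, the metrics referenced above can be sketched with NumPy as follows. This is a minimal sketch, not the SliceNet or UniFuse code: the validity range `[min_depth, max_depth]`, clipping the prediction to that range, and per-pixel averaging are assumptions, and the official implementations may mask or average differently (for example per image rather than over all pixels). Under these definitions MRE and AbsRel share the same formula.

```python
import numpy as np


def depth_metrics(pred, gt, min_depth=0.1, max_depth=10.0):
    """Common depth-error metrics over valid ground-truth pixels (a sketch)."""
    valid = (gt > min_depth) & (gt < max_depth)  # assumed validity range
    p = np.clip(pred[valid], min_depth, max_depth)  # assumed clipping of predictions
    g = gt[valid]
    diff = p - g
    ratio = np.maximum(p / g, g / p)
    return {
        # SliceNet-style metrics, as we understand them
        "MAE": float(np.mean(np.abs(diff))),
        "MRE": float(np.mean(np.abs(diff) / g)),
        # UniFuse-style metrics
        "AbsRel": float(np.mean(np.abs(diff) / g)),
        "SqRel": float(np.mean(diff ** 2 / g)),
        "RMSE": float(np.sqrt(np.mean(diff ** 2))),
        "RMSElog": float(np.sqrt(np.mean((np.log(p) - np.log(g)) ** 2))),
        # Fraction of pixels whose ratio to ground truth is below 1.25^k
        "delta1": float(np.mean(ratio < 1.25)),
        "delta2": float(np.mean(ratio < 1.25 ** 2)),
        "delta3": float(np.mean(ratio < 1.25 ** 3)),
    }
```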

❤️New metrics

For P-RMSE:

Use the Tool to extract the regions with large distortion (the top and bottom faces in the cubemap projection format), and then compute the standard RMSE on these regions.
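As an illustration of which pixels P-RMSE focuses on, the sketch below builds a mask directly on the equirectangular grid instead of resampling to a cubemap as the Tool does. It is an approximation under our own assumptions: a pixel's viewing direction lands on the top or bottom cube face when its vertical component dominates the two horizontal components, and the depth validity range is assumed. Numbers from this sketch may therefore differ from the official P-RMSE.

```python
import numpy as np


def polar_face_mask(height, width):
    """True for equirectangular pixels whose ray hits the top or bottom cube face."""
    v = (np.arange(height) + 0.5) / height
    u = (np.arange(width) + 0.5) / width
    lat = (0.5 - v) * np.pi        # +pi/2 at the top row, -pi/2 at the bottom row
    lon = (u - 0.5) * 2.0 * np.pi  # -pi .. pi across the image
    lat, lon = np.meshgrid(lat, lon, indexing="ij")  # both (H, W)
    x = np.cos(lat) * np.cos(lon)
    y = np.cos(lat) * np.sin(lon)
    z = np.sin(lat)
    # A direction maps to the top/bottom face when |z| is its largest component.
    return np.abs(z) > np.maximum(np.abs(x), np.abs(y))


def p_rmse(pred, gt, min_depth=0.1, max_depth=10.0):
    """Standard RMSE restricted to the polar (top/bottom face) region."""
    mask = polar_face_mask(*gt.shape) & (gt > min_depth) & (gt < max_depth)
    diff = pred[mask] - gt[mask]
    return float(np.sqrt(np.mean(diff ** 2)))
```

Working on the equirectangular grid avoids interpolating depth, but pixels near the poles are over-represented relative to their solid angle, which a cubemap-based evaluation treats differently.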

For LRCE:

We encourage subsequent researchers to make better use of the seamless nature of panoramas.
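The README does not spell out the LRCE formula here. The sketch below assumes LRCE (read as left-right consistency error) compares the depth jump across the panorama's left/right seam in the prediction with the same jump in the ground truth, averaged over rows with valid ground truth. Treat the formula and the function name as assumptions rather than the official definition.

```python
import numpy as np


def lrce(pred, gt):
    """Seam-consistency error for (H, W) equirectangular depth maps (assumed definition)."""
    # Only use rows where both boundary columns have ground truth.
    valid = (gt[:, 0] > 0) & (gt[:, -1] > 0)
    # Depth jump across the 0/360 degree seam: first column minus last column.
    d_pred = pred[valid, 0] - pred[valid, -1]
    d_gt = gt[valid, 0] - gt[valid, -1]
    return float(np.mean(np.abs(d_gt - d_pred)))
```

A prediction that reproduces the true seam gap scores 0, even if its absolute depths are off, so this metric complements rather than replaces RMSE.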

The project is built with PyTorch 1.7.1, Python 3.8, and CUDA 10.1 on an NVIDIA GeForce RTX 3090. Install the package dependencies with:

```shell
pip install -r requirements.txt
```

Please download the pretrained model (used to initialize training) from Model_pretrain, and place it in the directory structure below:

```
|-- PanoFormer
    |-- tmp
        |-- panodepth
            |-- train
            |-- val
            |-- models
                |-- weights_pretrain
```
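If you prefer to create these folders from a script, a minimal sketch with `pathlib` follows. The helper name `make_log_dirs` is hypothetical, and the nesting (`weights_pretrain` inside `models`, with `train`, `val`, and `models` inside `panodepth`) is our reading of the tree above.

```python
from pathlib import Path


def make_log_dirs(root="PanoFormer"):
    """Create tmp/panodepth/{train,val,models/weights_pretrain} under root (hypothetical helper)."""
    base = Path(root) / "tmp" / "panodepth"
    for sub in ("train", "val", "models/weights_pretrain"):
        # parents=True builds intermediate folders; exist_ok=True makes reruns harmless.
        (base / sub).mkdir(parents=True, exist_ok=True)
    # Return the folder where the downloaded pretrained weights should go.
    return base / "models" / "weights_pretrain"
```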

Then start training with:

```shell
python train.py
```

Your datasets and splits should be organized as below:

SPLITS

```
|-- PanoFormer
    |-- network
    |-- splitsm3d
    |   |-- matterport3d_train.txt
    |   |-- matterport3d_val.txt
    |   |-- matterport3d_test.txt
    |-- splitss2d3d
    |   |-- stanford2d3d_train.txt
    |   |-- stanford2d3d_val.txt
    |   |-- stanford2d3d_test.txt
    |-- ...
    |-- train.py
    |-- trainer.py
    |-- metric.py
    |-- ...
```

DATASETS

```
|-- datasets
    |-- Matterport3D
    |   |-- 17DRP5sb8fy
    |   |   |-- pano_depth
    |   |   |-- pano_skybox_color
    |   |   |-- ...
    |-- Stanford2D3D
    |   |-- area_1
    |   |   |-- pano
    |   |   |   |-- depth
    |   |   |   |-- rgb
    |   |   |   |-- ...
    |-- 3D60
    |   |-- Matterport3D
    |   |-- Stanford2D3D
    |   |   |-- area_1
    |   |   |   |-- xxx_color_xxx.png
    |   |   |   |-- xxx_depth_xxx.exr
    |   |-- SunCG
    |-- ...
```
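To sanity-check a 3D60 folder, the sketch below pairs each color image with its depth file. It assumes only the naming pattern shown in the tree (`xxx_color_xxx.png` next to `xxx_depth_xxx.exr` in the same folder); the helper name `pair_3d60` is hypothetical and this is not how the repository's data loader necessarily works.

```python
from pathlib import Path


def pair_3d60(area_dir):
    """Return (color, depth) path pairs for *_color_*.png files that have a matching .exr."""
    pairs = []
    for color in sorted(Path(area_dir).glob("*_color_*.png")):
        # Swap the first "_color_" for "_depth_" and the .png suffix for .exr.
        depth = color.with_name(color.name.replace("_color_", "_depth_", 1)).with_suffix(".exr")
        if depth.exists():
            pairs.append((color, depth))
    return pairs
```

Color images without a matching depth file are skipped, so comparing `len(pair_3d60(...))` with the number of color images is a quick way to spot missing downloads.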

We thank the authors of the projects below:

UniFuse, Uformer, SphereNet, DeformableAtt

If you find our work useful, please consider citing:

```bibtex
@inproceedings{shen2022panoformer,
  title={PanoFormer: Panorama Transformer for Indoor 360$^{\circ}$ Depth Estimation},
  author={Shen, Zhijie and Lin, Chunyu and Liao, Kang and Nie, Lang and Zheng, Zishuo and Zhao, Yao},
  booktitle={European Conference on Computer Vision},
  pages={195--211},
  year={2022},
  organization={Springer}
}

@inproceedings{shen2021distortion,
  title={Distortion-tolerant monocular depth estimation on omnidirectional images using dual-cubemap},
  author={Shen, Zhijie and Lin, Chunyu and Nie, Lang and Liao, Kang and Zhao, Yao},
  booktitle={2021 IEEE International Conference on Multimedia and Expo (ICME)},
  pages={1--6},
  year={2021},
  organization={IEEE}
}
```

Please also consider citing these works:

```bibtex
@article{jiang2021unifuse,
  title={UniFuse: Unidirectional Fusion for 360$^{\circ}$ Panorama Depth Estimation},
  author={Hualie Jiang and Zhe Sheng and Siyu Zhu and Zilong Dong and Rui Huang},
  journal={IEEE Robotics and Automation Letters},
  year={2021},
  publisher={IEEE}
}

@inproceedings{Wang_2022_CVPR,
  title={Uformer: A General U-Shaped Transformer for Image Restoration},
  author={Wang, Zhendong and Cun, Xiaodong and Bao, Jianmin and Zhou, Wengang and Liu, Jianzhuang and Li, Houqiang},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month={June},
  year={2022},
  pages={17683--17693}
}

@article{zhu2020deformable,
  title={Deformable {DETR}: Deformable Transformers for End-to-End Object Detection},
  author={Zhu, Xizhou and Su, Weijie and Lu, Lewei and Li, Bin and Wang, Xiaogang and Dai, Jifeng},
  journal={arXiv preprint arXiv:2010.04159},
  year={2020}
}
```