This is an implementation of GM-SOP (paper, supplemental), created by Zilin Gao and Qilong Wang.

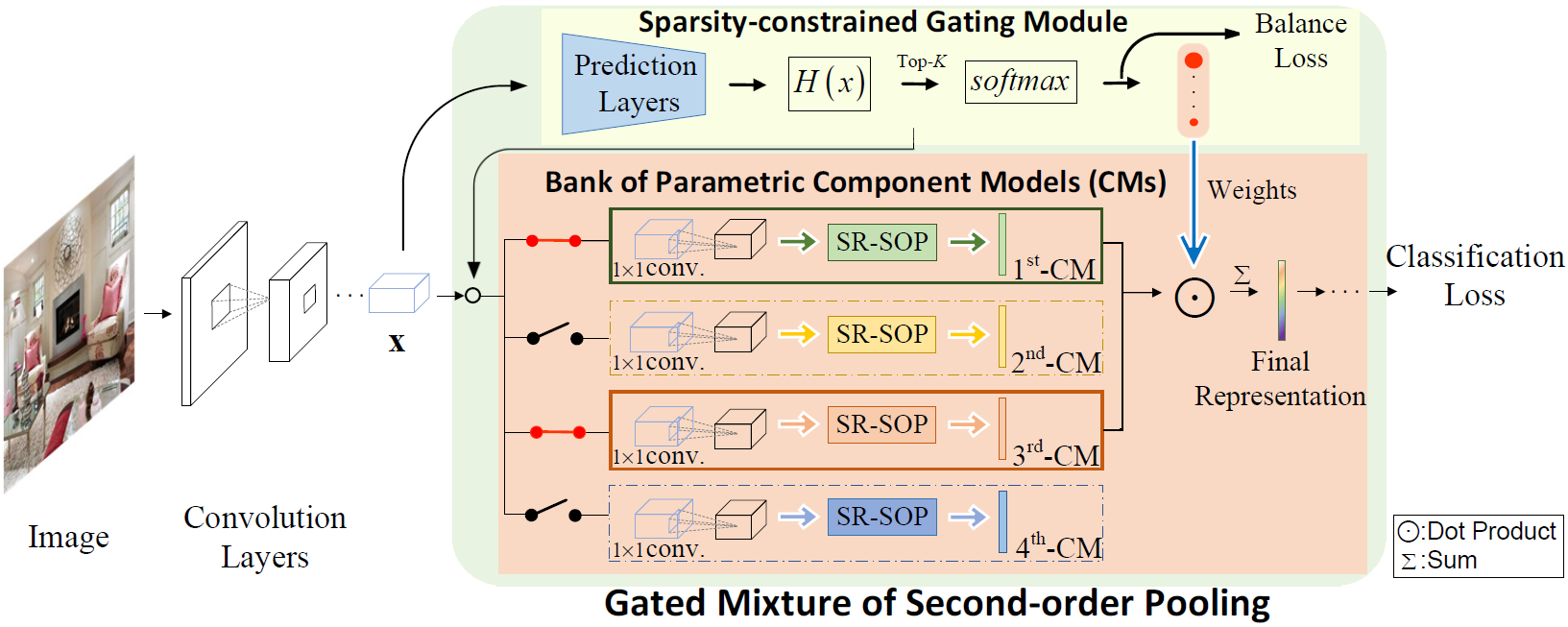

In most existing deep convolutional neural networks (CNNs) for classification, global average (first-order) pooling (GAP) has become a standard module that summarizes the activations of the last convolution layer as the final representation for prediction. Recent research shows that integrating higher-order pooling (HOP) methods clearly improves the performance of deep CNNs. However, both GAP and existing HOP methods assume unimodal distributions, which cannot fully capture the statistics of convolutional activations; this limits the representation ability of deep CNNs, especially for samples with complex contents. To overcome this limitation, the paper proposes a global Gated Mixture of Second-order Pooling (GM-SOP) method to further improve the representation ability of deep CNNs. To this end, we introduce a sparsity-constrained gating mechanism and propose a novel parametric SOP as the component of the mixture model. Given a bank of SOP candidates, our method adaptively chooses the Top-K (K > 1) candidates for each input sample through the sparsity-constrained gating module and performs a weighted sum of the outputs of the K selected candidates as the representation of the sample. The proposed GM-SOP can flexibly accommodate a large number of personalized SOP candidates in an efficient way, leading to richer representations. Deep networks with our GM-SOP can be trained end-to-end and have the potential to characterize complex, multi-modal distributions. The proposed method is evaluated on two large-scale image benchmarks (i.e., downsampled ImageNet-1K and Places365), and experimental results show that our GM-SOP is superior to its counterparts and achieves very competitive performance.
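The Top-K selection and weighted sum described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the MatConvNet implementation in this repository: the function names `gated_mixture` and `second_order_pool` are made up for the example, the softmax over the K selected gate scores is an assumed way to obtain the weights, and plain covariance pooling stands in for the parametric SOP candidates.

```python
import numpy as np


def gated_mixture(gate_scores, candidate_outputs, k):
    """Weighted sum of the top-k candidates for one sample (illustrative only).

    gate_scores: shape (N,), one raw gate score per candidate.
    candidate_outputs: shape (N, D), one representation per candidate.
    """
    gate_scores = np.asarray(gate_scores, dtype=float)
    candidate_outputs = np.asarray(candidate_outputs, dtype=float)
    # Indices of the k largest gate scores (their order does not matter).
    top = np.argpartition(gate_scores, -k)[-k:]
    # Assumption: softmax over the selected scores only, so every other
    # candidate receives exactly zero weight (the sparsity constraint).
    selected = gate_scores[top]
    weights = np.exp(selected - selected.max())
    weights /= weights.sum()
    full_weights = np.zeros_like(gate_scores)
    full_weights[top] = weights
    return full_weights @ candidate_outputs, full_weights


def second_order_pool(features):
    """Plain covariance pooling of (num_positions, channels) activations."""
    centered = features - features.mean(axis=0, keepdims=True)
    return centered.T @ centered / features.shape[0]
```

With N candidates and K selected, only K entries of the returned weight vector are non-zero, so in a real implementation only K candidates would need to be evaluated per sample.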

@InProceedings{Wang_2018_NeurIPS,
author = {Wang, Qilong and Gao, Zilin and Xie, Jiangtao and Zuo, Wangmeng and Li, Peihua},
title = {Global Gated Mixture of Second-order Pooling for Improving Deep Convolutional Neural Networks},
journal = {Neural Information Processing Systems (NeurIPS)},
year = {2018}
}

We evaluated our method on two large-scale datasets:

| Dataset | Image Size | Training Set | Validation Set | Classes | Download |
|---|---|---|---|---|---|
| Downsampled ImageNet-1K* | 64x64 | 1.28M | 50K | 1000 | 13G: Google Drive / Baidu Yun |
| Downsampled Places-365** | 100x100 | 1.8M | 182K | 365 | 45G |

*The work [arxiv] provides a downsampled version of the ImageNet-1K dataset. In that work, each image in ImageNet (both training and validation sets) is downsampled with the box sampling method to 64x64, resulting in a downsampled ImageNet-1K dataset with the same number of samples at a lower resolution. As described there, downsampled ImageNet-1K might represent a viable alternative to the CIFAR datasets while dealing with more complex data and classes.

Based on the above work, we prepared a copy of downsampled ImageNet-1K in .mat format for public use. Specifically, for each part of the original downsampled ImageNet-1K dataset file, we use the unpickle function in a Python environment followed by scipy.io.savemat to convert it into .mat format, and finally concatenate all parts into one full .mat file.
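A minimal sketch of that conversion might look as follows. It rests on assumptions that are not confirmed here: each part is taken to be a pickle file holding a dict with `data` and `labels` entries, and the helper names `merge_parts` and `convert_to_mat` are hypothetical. For brevity the sketch merges the parts in memory and calls `scipy.io.savemat` once, whereas the procedure above converts each part first and concatenates afterwards.

```python
import pickle

import numpy as np


def unpickle(path):
    """Load one pickled part of the dataset into a Python dict."""
    with open(path, "rb") as f:
        return pickle.load(f)


def merge_parts(parts):
    """Concatenate the per-part arrays into one data/labels pair.

    Assumption: every part is a dict with a 'data' array of shape
    (num_images, num_values) and a 'labels' sequence of the same length.
    """
    data = np.concatenate([np.asarray(p["data"]) for p in parts], axis=0)
    labels = np.concatenate([np.asarray(p["labels"]) for p in parts], axis=0)
    return {"data": data, "labels": labels}


def convert_to_mat(part_paths, out_path):
    """Unpickle every part, merge them, and write one .mat file."""
    # Imported here so the helpers above can be used without SciPy installed.
    from scipy.io import savemat

    merged = merge_parts([unpickle(p) for p in part_paths])
    savemat(out_path, merged)
```

Merging in memory needs enough RAM to hold the whole dataset at once, which may not be practical for the full download; converting part by part keeps the peak memory lower.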

Downsampled ImageNet-1K MD5 code: fe50ac93f74744b970b3102e14e69768
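One way to check a download against the published MD5 code is sketched below using Python's standard `hashlib` module. The helper names are hypothetical, and the file is read in chunks so that a multi-gigabyte .mat file does not have to fit in memory.

```python
import hashlib


def md5_of_file(path, chunk_size=1 << 20):
    """Return the hexadecimal MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_md5(path, expected):
    """Compare a file's digest with a published value, ignoring case."""
    return md5_of_file(path) == expected.strip().lower()
```

For example, `matches_md5(path_to_download, "fe50ac93f74744b970b3102e14e69768")` should return `True` for an intact copy of the dataset file, where `path_to_download` is wherever you saved it.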

**We downsample all images to 100x100 using the imresize function in MATLAB with bicubic interpolation.

- Toolkit: MatConvNet 1.0-beta25
- MATLAB: R2016b
- CUDA: 9.2
- GPU: single GTX 1080Ti
- System: Ubuntu 16.04
- RAM: 32G

Tips: Because the whole dataset is loaded into RAM when the code runs, the workstation MUST have at least as much free RAM as the dataset occupies. Downsampled ImageNet takes more than 13G, so we use a machine equipped with 32G of RAM for our experiments. For the same reason, if you want to run with multiple GPUs, the RAM should provide dataset_space x GPU_num of free space. If RAM is insufficient, you can instead store the data as image files on disk and read them from disk for each mini-batch (as most image-reading pipelines do).
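The dataset_space x GPU_num rule of thumb can be written down as a tiny helper for planning a run. The function names are made up for illustration, and the numbers in the usage note simply reuse the 13G dataset and 32G machine mentioned above.

```python
def required_ram_gb(dataset_gb, num_gpus=1):
    """Free RAM suggested by the dataset_space x GPU_num rule of thumb."""
    if dataset_gb <= 0 or num_gpus < 1:
        raise ValueError("dataset_gb must be positive and num_gpus at least 1")
    return dataset_gb * num_gpus


def fits_in_ram(dataset_gb, num_gpus, free_ram_gb):
    """True when the free RAM covers dataset_space x GPU_num."""
    return free_ram_gb >= required_ram_gb(dataset_gb, num_gpus)
```

With a 13G dataset, one GPU calls for 13G of free RAM and two GPUs for 26G, which a 32G machine can cover only if little else is running; three GPUs would call for 39G and no longer fit.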

├── examples
│   ├── GM
│   │   ├── cnn_imagenet64.m
│   │   ├── cnn_imagenet64_init_resnet.m
│   │   ├── cnn_init_WRN_GM.m
│   │   ├── cnn_init_WRN_baseline.m
│   │   ├── cnn_init_resnet_GM.m
│   │   └── ....
│   └── cnn_train_GM_dag.m
├── matlab
│   ├── +dagnn
│   │   ├── Balance_loss.m
│   │   ├── CM_out.m
│   │   ├── H_x.m
│   │   ├── gating.m
│   │   └── ...
│   └── ...
└── ...

- The code MUST be compiled by executing matlab/vl_compilenn.m; please see here for details. The main function is examples/GM/cnn_imagenet64.m.
- Because reading the data takes a long time (more than 1 min), we provide a tiny FAKE data .mat file, examples/GM/imdb.mat, as the default setting for quick debugging. To train a model, please download the full dataset provided above and point opts.imdbPath in examples/GM/cnn_imagenet64.m to the dataset file.

| Network | Param. | Dim. | Top-1 error / Top-5 error (%) | Model |
|---|---|---|---|---|
| ResNet-18 | 0.9M | 128 | 52.17/27.09 | Google Drive / Baidu Yun |
| ResNet-18-SR-SOP | 9.0M | 8256 | 40.56/19.08 | Google Drive / Baidu Yun |
| GM-GAP-16-8 + ResNet-18 | 2.3M | 512 | 42.25/19.46 | Google Drive / Baidu Yun |
| GM-GAP-16-8 + WRN-36-2 | 8.7M | 512 | 35.98/14.62 | Google Drive / Baidu Yun |
| GM-SOP-16-8 + ResNet-18 | 10.3M | 8256 | 38.48/17.38 | Google Drive / Baidu Yun |
| GM-SOP-16-8 + WRN-36-2 | 15.7M | 8256 | 32.71/12.44 | Google Drive / Baidu Yun |

Some models are trained with a batch size of 150 instead of the 256 used in the paper, resulting in a 0.1~0.4% performance gap relative to the results reported in the paper.
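The Dim. column can be sanity-checked with a little arithmetic. The sketch below assumes, although the table does not state it, that the 8256-dimensional representations are the upper triangle (diagonal included) of a symmetric 128 x 128 second-order matrix; the function name is made up for illustration.

```python
def upper_triangle_size(channels):
    """Number of distinct entries in a symmetric channels x channels matrix."""
    return channels * (channels + 1) // 2
```

Under that assumption, `upper_triangle_size(128)` gives 128 x 129 / 2 = 8256, which matches the dimension listed for the SOP-based models.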

- MD5 code:
  - ResNet-18: cf9bcf22e416773052358870f7786e05
  - ResNet-18-SR-SOP: b4c60d7955d93e6145071a5157e2a2af
  - GM-GAP-16-8 + ResNet-18: f80738566ffe9cabb7a1e88ea6c79dcf
  - GM-GAP-16-8 + WRN-36-2: ae26d1ccf77a568ceccaa878acc4d230
  - GM-SOP-16-8 + ResNet-18: 6f0db9de2cbe233278ba8acca67a6f78
  - GM-SOP-16-8 + WRN-36-2: d89ea02e557d087622eb1ea617d516be

- We thank the authors of MPN-COV and its fast version iSQRT-COV for their work and the accompanying code.
- We would like to thank the MatConvNet team for developing the MatConvNet toolbox.

If you have any suggestions or questions, you can leave a message here or contact us directly: [email protected]. Thanks for your attention!