This repository implements source-contrastive and language-contrastive decoding, as described in Sennrich et al. (EACL 2024).


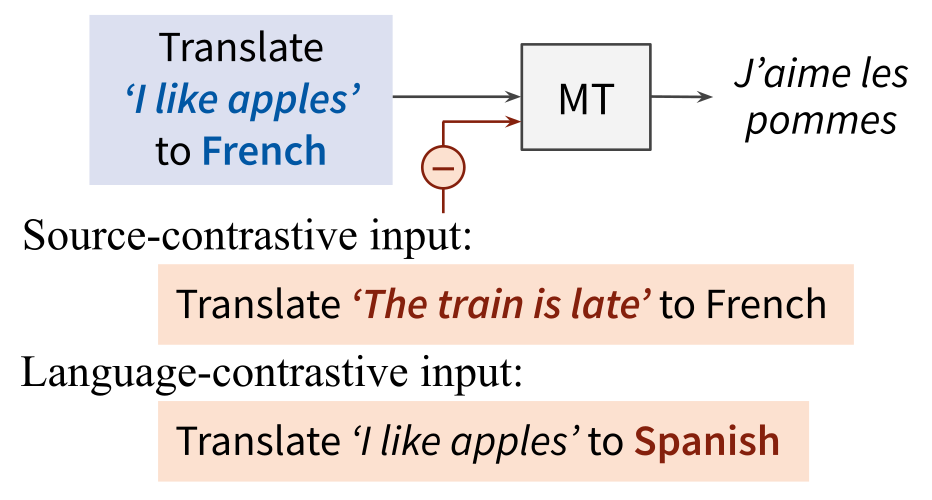

In source-contrastive decoding, we search for a translation that maximizes P(Y|X) - λ·P(Y|X'), where X' is a random source segment. This penalizes hallucinations, i.e. outputs that would be similarly probable given an unrelated source.
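As a minimal sketch of this objective at a single decoding step, the snippet below scores each candidate token with P(y|X) - λ·P(y|X'). The probabilities are made-up toy numbers, and the function name `contrastive_scores` is hypothetical; it is not taken from this repository and only illustrates the idea.

```python
def contrastive_scores(p_true, p_contrast, lam):
    """Per-token score P(y|X) - lam * P(y|X') for one decoding step."""
    return {tok: p_true[tok] - lam * p_contrast.get(tok, 0.0) for tok in p_true}

# Toy next-token distributions (illustrative numbers, not model outputs).
p_given_source = {"the": 0.5, "Haus": 0.4, "Baum": 0.1}   # conditioned on X
p_given_random = {"the": 0.6, "Haus": 0.1, "Baum": 0.3}   # conditioned on random X'

scores = contrastive_scores(p_given_source, p_given_random, lam=0.7)
best = max(scores, key=scores.get)
print(best)
```

In this toy case, "the" is the most probable token given X alone, but it is just as likely under the unrelated source, so the contrastive score prefers "Haus", which actually depends on X.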


In language-contrastive decoding, we search for a translation that maximizes P(Y|X,l_y) - λ·P(Y|X,l_y'), where l_y is the language indicator for the desired target language and l_y' is the indicator for some undesired language (such as English or the source language). This penalizes off-target translations.
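The effect of this objective can be illustrated at the sequence level. The sketch below reranks a fixed list of candidate outputs, which stands in for the actual search and is an assumption made for brevity. The probabilities are invented, and `contrastive_score` is a hypothetical helper rather than part of this repository.

```python
def contrastive_score(p_target, p_contrast, lam):
    """Sequence-level score P(Y|X,l_y) - lam * P(Y|X,l_y')."""
    return p_target - lam * p_contrast

# Invented probabilities for two candidate outputs of an English-German system.
# Each tuple is (P(Y | X, l_y = de), P(Y | X, l_y' = en)).
candidates = {
    "Das ist ein Haus.": (0.30, 0.02),
    "This is a house.": (0.35, 0.60),  # off-target: output is in English
}

lam = 0.5
ranked = sorted(
    candidates,
    key=lambda y: contrastive_score(*candidates[y], lam),
    reverse=True,
)
print(ranked[0])
```

Here the English output has the higher plain probability, but it is even more probable under the English language indicator, so the contrastive score ranks the German candidate first.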

Install the dependencies:

pip install -r requirements.txt

Example commands

Source-contrastive decoding with M2M-100 (418M) on Asturian–Croatian, with λ_src=0.7:

python -m scripts.run --model_path m2m100_418M --language_pairs ast-hr --source_contrastive --source_weight -0.7

Source-contrastive and language-contrastive decoding with SMaLL-100 on Pashto–Asturian, with 2 random source segments, λ_src=0.7, λ_lang=0.1, and English and Pashto as contrastive target languages:

python -m scripts.run --model_path small100 --language_pairs ps-ast --source_contrastive 2 --source_weight -0.7 --language_contrastive en ps --language_weight -0.1

Language-contrastive decoding with Llama 2 Chat (7B) on English–German, with λ_lang=0.5 and English as contrastive target language, using prompting with a one-shot example:

python -m scripts.run --model_path llama-2-7b-chat --language_pairs en-de --language_contrastive en --language_weight -0.5 --oneshot
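Note that in the commands above, the weights are passed as the negated λ values (for example, λ_src=0.7 becomes `--source_weight -0.7`). The following sketch assembles such a command line from λ values. `build_command` is a hypothetical convenience helper, not part of this repository, and it only uses the flags shown in the examples above.

```python
import shlex

def build_command(model_path, language_pair, lam_src=None, num_random_sources=None,
                  lam_lang=None, contrastive_langs=(), oneshot=False):
    """Assemble a scripts.run command line from lambda values (hypothetical helper)."""
    args = ["python", "-m", "scripts.run",
            "--model_path", model_path,
            "--language_pairs", language_pair]
    if lam_src is not None:
        args.append("--source_contrastive")
        if num_random_sources is not None:
            args.append(str(num_random_sources))
        # The example commands pass the weight as the negated lambda.
        args += ["--source_weight", str(-lam_src)]
    if lam_lang is not None:
        args += ["--language_contrastive", *contrastive_langs]
        args += ["--language_weight", str(-lam_lang)]
    if oneshot:
        args.append("--oneshot")
    return shlex.join(args)

cmd = build_command("small100", "ps-ast", lam_src=0.7, num_random_sources=2,
                    lam_lang=0.1, contrastive_langs=("en", "ps"))
print(cmd)
```

With these arguments, the helper reproduces the SMaLL-100 example command for Pashto–Asturian.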

This repository automatically downloads FLORES-101 and uses its devtest section for evaluation.

Multiple models are implemented:

- M2M-100 (418M). Use `--model_path m2m100_418M`
- SMaLL-100. Use `--model_path small100`
- Llama 2 7B Chat. Use `--model_path llama-2-7b-chat` or `llama-2-13b-chat`

ChrF2:

sacrebleu ref.txt < output.txt --metrics chrf

spBLEU:

sacrebleu ref.txt < output.txt --tokenize flores101

@inproceedings{sennrich-etal-2024-mitigating,

title={Mitigating Hallucinations and Off-target Machine Translation with Source-Contrastive and Language-Contrastive Decoding},

author={Rico Sennrich and Jannis Vamvas and Alireza Mohammadshahi},

booktitle={18th Conference of the European Chapter of the Association for Computational Linguistics},

year={2024}

}