I've implemented the following in the notebook:

- Recorded data from the simulator and stored it on disk.

- Loaded data in the notebook from the recorded data folder.

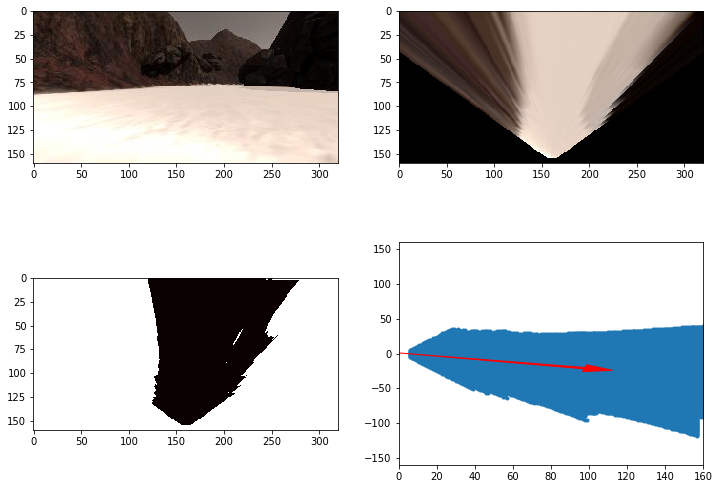

- Implemented thresholding in the `color_thresh` function so that the returned image holds a different integer value for each pixel class: navigable, obstacle, or gold sample.

- An example thresholded image is given below. Orange areas are blocked, the white area is the sample, and the black area is navigable.

- Applied coordinate transformations as done in the exercises

- Sample image from notebook

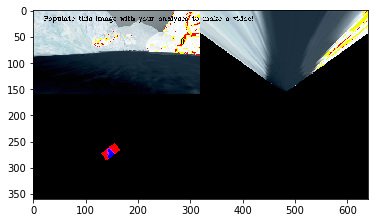
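
The thresholding step described above could look roughly like the sketch below. It is an illustration, not the notebook's actual code: the function name `color_thresh_labels`, the label integers, and every threshold value (including the gold range) are assumptions chosen for the example.

```python
import numpy as np

# Hypothetical label values; the integers used in the notebook may differ.
NAVIGABLE, OBSTACLE, SAMPLE = 0, 1, 2

def color_thresh_labels(img, nav_thresh=(160, 160, 160),
                        gold_lo=(110, 110, 0), gold_hi=(255, 255, 50)):
    """Label each pixel of an RGB image as navigable, obstacle, or sample.

    All thresholds are assumed values for illustration only.
    """
    r, g, b = img[:, :, 0], img[:, :, 1], img[:, :, 2]

    # Start by marking everything as an obstacle.
    labels = np.full(img.shape[:2], OBSTACLE, dtype=np.uint8)

    # Bright pixels in all three channels are treated as navigable ground.
    nav = (r > nav_thresh[0]) & (g > nav_thresh[1]) & (b > nav_thresh[2])
    labels[nav] = NAVIGABLE

    # Pixels inside the (assumed) yellow range are treated as gold samples.
    gold = ((r >= gold_lo[0]) & (r <= gold_hi[0]) &
            (g >= gold_lo[1]) & (g <= gold_hi[1]) &
            (b >= gold_lo[2]) & (b <= gold_hi[2]))
    labels[gold] = SAMPLE
    return labels
```

Returning a single integer-labelled array keeps the three classes mutually exclusive, which makes it simple to split them into separate masks later with comparisons such as `labels == SAMPLE`.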

- Changed the `process_image` function to load image data from each row of recorded data.

- Processed each image to create a thresholded image.

- Transformed coordinates and updated the world map so that gold sample, obstacle, and navigable areas are written to the corresponding R/G/B channels.

- Sample output from process_image

- Uploaded video to YouTube [https://www.youtube.com/watch?v=8iebrRtWKGc]

- Added the `process_image` code and coordinate transformation code to `perception.py`.

- Updated the world map only when the roll and pitch are close to 0 or 360 degrees, that is, when the rover is nearly level.

- Stored nav angles/dists and gold angles/dists for use in decision making.
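
As a minimal sketch of the two perception details above: a levelness check on roll and pitch, and a conversion of rover-centric pixel coordinates into distances and angles. The helper name `is_level` and the tolerance `tol` are assumptions for illustration; the write-up does not state the tolerance actually used.

```python
import numpy as np

def is_level(roll, pitch, tol=1.0):
    """Return True when roll and pitch (degrees) are within `tol` of 0/360.

    `tol` is an assumed tolerance, not a value taken from the project.
    """
    def near_zero(angle):
        a = angle % 360.0
        # Angular distance to 0, accounting for wrap-around at 360.
        return min(a, 360.0 - a) <= tol
    return near_zero(roll) and near_zero(pitch)

def to_polar_coords(x_pixel, y_pixel):
    """Convert rover-centric pixel coordinates to (distances, angles in radians)."""
    dists = np.sqrt(x_pixel ** 2 + y_pixel ** 2)
    angles = np.arctan2(y_pixel, x_pixel)
    return dists, angles
```

Skipping the map update when `is_level` is false avoids writing pixels whose perspective transform is distorted by a tilted camera.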

- I have added the following checks to the decision tree:

- Check for 'Stuck'

- Added the ability to check whether the rover is stuck.

- If it is stuck, the rover stops, turns, and moves away from the obstacle.
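
One possible way to detect the stuck condition is to time how long the rover has been applying throttle without moving. The sketch below assumes that approach; the function name `update_stuck` and the values of `vel_eps` and `limit` are hypothetical and may not match what the project uses.

```python
def update_stuck(throttle, vel, stuck_time, dt, vel_eps=0.05, limit=3.0):
    """Return (new_stuck_time, is_stuck).

    Accumulates time while throttle is applied but speed stays near zero.
    `vel_eps` (m/s) and `limit` (seconds) are assumed values.
    """
    if throttle > 0 and abs(vel) < vel_eps:
        stuck_time += dt
    else:
        # Any real movement (or no throttle) resets the timer.
        stuck_time = 0.0
    return stuck_time, stuck_time >= limit
```

The caller would keep `stuck_time` between frames and switch to the stop-and-turn behaviour once the second return value becomes true.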

- Stay close to left wall

- Always calculate the maximum navigable distance in the [35, 40] degree range relative to the rover.

- If the distance is less than the safe distance, turn right.

- If the distance is optimal, stay roughly straight.

- If it is too far, steer to find the left wall again.
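
The left-wall rule above can be sketched as follows. This assumes angles are in radians with positive values to the rover's left, and that a positive steering value turns left. The names `left_wall_steer`, `safe_dist`, `far_dist`, and `turn`, and their values, are hypothetical, as is the choice to turn right when no navigable pixels fall in the sector.

```python
import numpy as np

def left_wall_steer(nav_dists, nav_angles, safe_dist=20.0, far_dist=40.0,
                    turn=10.0):
    """Return a steering angle in degrees (positive = left, assumed).

    Looks at navigable pixels between 35 and 40 degrees and compares the
    farthest one against two assumed distance thresholds.
    """
    angles_deg = np.degrees(nav_angles)
    sector = (angles_deg >= 35.0) & (angles_deg <= 40.0)

    if not sector.any():
        # Assumption: no navigable ground in the sector means the wall is close.
        return -turn

    max_dist = nav_dists[sector].max()
    if max_dist < safe_dist:
        return -turn   # too close to the wall: turn right
    if max_dist > far_dist:
        return turn    # wall is far away: steer left to find it
    return 0.0         # distance is in the comfortable band: go straight
```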

- Pickup gold

- Whenever gold is seen, the rover locks onto it.

- It reduces speed and starts approaching the sample.

- It stops when it is near the sample and waits until pickup.

- When done, it resumes normal navigation.
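
A rough sketch of the gold pickup behaviour, assuming the stored gold angles are in radians and that steering is limited to ±15 degrees. The function name `gold_command`, the `near_sample` flag as an input, and the speed and throttle values are illustrative assumptions rather than the project's actual code.

```python
import numpy as np

def gold_command(gold_dists, gold_angles, vel, near_sample, approach_vel=0.5):
    """Return (throttle, brake, steer), or None when no gold is in view.

    `approach_vel` and the 0.1 throttle are assumed values.
    """
    if near_sample:
        # Close enough: brake fully and wait for the pickup to finish.
        return 0.0, 1.0, 0.0
    if len(gold_dists) == 0:
        # Nothing to lock onto; the caller resumes normal navigation.
        return None

    # Steer toward the mean direction of the gold pixels, within limits.
    steer = float(np.clip(np.degrees(np.mean(gold_angles)), -15.0, 15.0))
    # Creep forward: apply throttle only while below the approach speed.
    throttle = 0.1 if vel < approach_vel else 0.0
    return throttle, 0.0, steer
```

Returning `None` when no sample is visible lets the rest of the decision tree (wall following, stuck handling) run unchanged.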