L'AMBRE is a tool to measure the grammatical well-formedness of texts generated by NLG systems. It analyzes the dependency parses of the text using morpho-syntactic rules and returns a well-formedness score. The tool relies on the Surface-Syntactic Universal Dependencies (SUD) project both for extracting rules and for parsing, and is therefore applicable across languages. See our EMNLP 2021 paper for more details.
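
To make the idea concrete, here is a toy Python sketch that checks one agreement rule over a dependency parse and turns the results into a score between 0 and 1. Everything in it is an assumption for illustration: the `Token` class, the single subject-verb `Number` agreement rule (using the SUD `subj` label), and the aggregation formula. L'AMBRE's actual rule sets and score are defined in the paper and will differ.

```python
from dataclasses import dataclass, field


@dataclass
class Token:
    """One word in a (hypothetical, simplified) dependency parse."""
    idx: int        # 1-based position in the sentence
    form: str       # surface form
    upos: str       # universal part-of-speech tag
    head: int       # index of the head token (0 = root)
    deprel: str     # dependency relation label, e.g. "subj" in SUD
    feats: dict = field(default_factory=dict)  # morphological features


def agreement_check(sentence, deprel, feature):
    """Toy rule: a dependent attached via `deprel` must match its head on
    `feature` when both tokens carry it. Returns (applicable, violated)."""
    by_idx = {tok.idx: tok for tok in sentence}
    applicable = violated = 0
    for tok in sentence:
        if tok.deprel != deprel or tok.head == 0:
            continue
        head = by_idx[tok.head]
        if feature in tok.feats and feature in head.feats:
            applicable += 1
            if tok.feats[feature] != head.feats[feature]:
                violated += 1
    return applicable, violated


def document_score(counts):
    """Combine per-sentence (applicable, violated) counts into a [0, 1] score,
    assuming score = 1 - violations / applicable checks (1.0 if nothing applies)."""
    applicable = sum(a for a, _ in counts)
    violated = sum(v for _, v in counts)
    return 1.0 if applicable == 0 else 1.0 - violated / applicable


if __name__ == "__main__":
    # "кошки спит" (cats sleeps): plural subject, singular verb -> violation
    bad = [Token(1, "кошки", "NOUN", 2, "subj", {"Number": "Plur"}),
           Token(2, "спит", "VERB", 0, "root", {"Number": "Sing"})]
    # "кошка спит" (the cat sleeps): both singular -> no violation
    good = [Token(1, "кошка", "NOUN", 2, "subj", {"Number": "Sing"}),
            Token(2, "спит", "VERB", 0, "root", {"Number": "Sing"})]
    counts = [agreement_check(s, "subj", "Number") for s in (bad, good)]
    print(counts)                  # [(1, 1), (1, 0)]
    print(document_score(counts))  # 0.5
```

Counting applicable checks, not tokens, means a sentence with no agreement contexts neither helps nor hurts the score in this sketch.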

    python -m pip install lambre

For a given input text file, lambre computes a morpho-syntactic well-formedness score in the range [0, 1]. The following method first downloads the parsers and rule sets for the specified language and then computes the document-level score. See the output folder (out) for error visualizations.

    >>> import lambre
    >>> with open("data/txt/ru.txt", "r") as rf:
    ...     data = rf.readlines()
    >>> lambre.score("ru", data)
    0.9962

L'AMBRE can also be used from the command line. See lambre --help for more options.

    lambre ru data/txt/ru.txt

lambre currently supports two rule sets: chaudhary-etal-2021 (see Chaudhary et al., 2020, 2021) and pratapa-etal-2021 (see Pratapa et al., 2021). The former is the default; a different rule set can be selected with the --rule-set option.
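
If you want to score the same file under both rule sets, for example to compare them, a small Python wrapper can build the command lines for you. `lambre_command` is a hypothetical helper, not part of lambre, and it assumes that `--rule-set` takes the rule-set name as its value and may follow the positional language and file arguments; check `lambre --help` before relying on it.

```python
# Rule-set names as listed above; the first one is lambre's default.
RULE_SETS = ("chaudhary-etal-2021", "pratapa-etal-2021")


def lambre_command(lang, path, rule_set=RULE_SETS[0]):
    """Build, but do not run, a lambre command line (hypothetical helper).

    Assumes --rule-set takes the rule-set name as its value and may follow
    the positional language and file arguments.
    """
    if rule_set not in RULE_SETS:
        raise ValueError(f"unknown rule set: {rule_set!r}")
    return ["lambre", lang, path, "--rule-set", rule_set]


if __name__ == "__main__":
    for rule_set in RULE_SETS:
        cmd = lambre_command("ru", "data/txt/ru.txt", rule_set)
        print(" ".join(cmd))
        # To actually run it: subprocess.run(cmd, check=True)
```

Keeping the command as a list (rather than one string) lets you pass it straight to `subprocess.run` without shell quoting issues in file paths.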

Along with the overall L'AMBRE score, we write the erroneous sentences to the output folder out/errors. We provide two visualizations: (i) plain text (errors.txt) and (ii) HTML (errors/*.html). For the plain-text visualization, we use the ipymarkup tool; for the HTML visualizations, we use brat and Universal Dependencies.
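
After a run, a short script can collect the generated reports, for example to open the HTML files in a browser. This sketch assumes only that the reports end in .txt and .html somewhere under out; `list_error_reports` is a hypothetical helper, not part of lambre, and it may also pick up other .txt files if lambre writes any there.

```python
from pathlib import Path


def list_error_reports(out_dir="out"):
    """Return sorted plain-text and HTML report paths found under `out_dir`.

    Searches recursively, so it does not depend on the exact folder layout.
    """
    root = Path(out_dir)
    return {
        "text": sorted(root.rglob("*.txt")),
        "html": sorted(root.rglob("*.html")),
    }


if __name__ == "__main__":
    reports = list_error_reports("out")
    print(len(reports["text"]), "text file(s),", len(reports["html"]), "HTML file(s)")
```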

Below is a sample run on 1000 example Hindi sentences from the Samanantar corpus.

    >>> import lambre
    >>> with open("examples/hi_sents_1k.txt", "r") as rf:
    ...     data = rf.readlines()
    >>> lambre.score("hi", data)
    0.8821

A few erroneous sentences from this corpus (as detected by L'AMBRE):

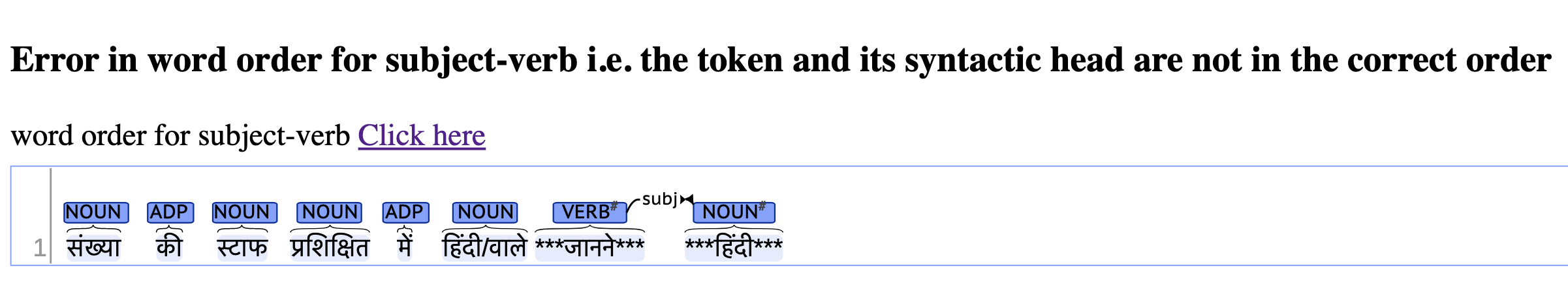

Input sentence: संख्या की स्टाफ प्रशिक्षित में हिंदी/वाले जानने हिंदी

(Stenography Hindi in trained persons of No.)

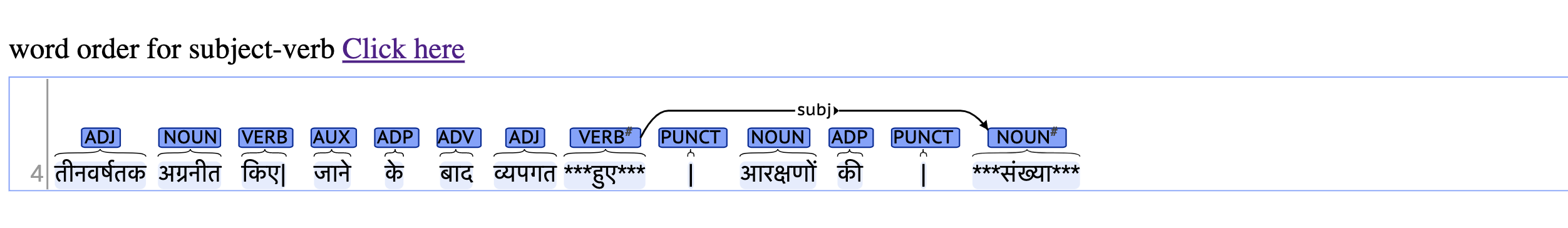

Input sentence: तीनवर्षतक अग्रनीत किए| जाने के बाद व्यपगत हुए| आरक्षणों की| संख्या

(of after forward No. reservations lapsed carrying for 3 years)

The images above visualize the word-order errors detected in these two sentences. We also generate separate files for agreement and case-marking errors (see examples/ for the full HTML outputs).

We provide SUD parsers trained with the Stanza toolkit. See Section 4 of our paper for more details.

We currently support the following languages. lambre automatically downloads the necessary language-specific resources (when available).

| Language | Code | Language | Code | Language | Code | Language | Code |
|---|---|---|---|---|---|---|---|
| Catalan | ca | Spanish | es | Italian | it | Russian | ru |
| Czech | cs | Estonian | et | Latvian | lv | Slovenian | sl |
| Danish | da | Persian | fa | Dutch | nl | Swedish | sv |
| German | de | French | fr | Polish | pl | Ukrainian | uk |
| Greek | el | Hindi | hi | Portuguese | pt | Urdu | ur |
| English | en | Indonesian | id | Romanian | ro | | |
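
When scoring outputs for many languages in a batch, it can help to validate language codes up front, before any download starts. The dictionary below simply transcribes the table above; `check_language` is a hypothetical helper, not part of lambre, which may report unsupported languages in its own way (and newer releases may support more languages).

```python
# Transcription of the supported-language table (may lag behind new releases).
SUPPORTED_LANGS = {
    "ca": "Catalan", "cs": "Czech", "da": "Danish", "de": "German",
    "el": "Greek", "en": "English", "es": "Spanish", "et": "Estonian",
    "fa": "Persian", "fr": "French", "hi": "Hindi", "id": "Indonesian",
    "it": "Italian", "lv": "Latvian", "nl": "Dutch", "pl": "Polish",
    "pt": "Portuguese", "ro": "Romanian", "ru": "Russian", "sl": "Slovenian",
    "sv": "Swedish", "uk": "Ukrainian", "ur": "Urdu",
}


def check_language(code):
    """Return the language name for `code`, or raise if it is not in the table."""
    if code not in SUPPORTED_LANGS:
        raise ValueError(
            f"{code!r} is not supported; choose from {sorted(SUPPORTED_LANGS)}"
        )
    return SUPPORTED_LANGS[code]


if __name__ == "__main__":
    for code in ("hi", "ru"):
        print(code, check_language(code))
```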

To manually download rules or parsers for a given language:

    >>> import lambre
    >>> lambre.download("ru")  # Russian

If you find this toolkit helpful in your research, please consider citing our paper:

    @inproceedings{pratapa-etal-2021-evaluating,
        title = "Evaluating the Morphosyntactic Well-formedness of Generated Texts",
        author = "Pratapa, Adithya and
          Anastasopoulos, Antonios and
          Rijhwani, Shruti and
          Chaudhary, Aditi and
          Mortensen, David R. and
          Neubig, Graham and
          Tsvetkov, Yulia",
        booktitle = "Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing",
        month = nov,
        year = "2021",
        address = "Online and Punta Cana, Dominican Republic",
        publisher = "Association for Computational Linguistics",
        url = "https://aclanthology.org/2021.emnlp-main.570",
        pages = "7131--7150",
    }

We also encourage you to cite the original works for the chaudhary-etal-2021 rule set: Chaudhary et al., 2020 and Chaudhary et al., 2021.

L'AMBRE is available under the MIT License. The code for training parsers is adapted from Stanza, which is available under the Apache License, Version 2.0.

For any issues, questions, or requests, please use the GitHub issue tracker.