AniPortrait: Audio-Driven Synthesis of Photorealistic Portrait Animations

Huawei Wei, Zejun Yang, Zhisheng Wang

Tencent Games Zhiji, Tencent

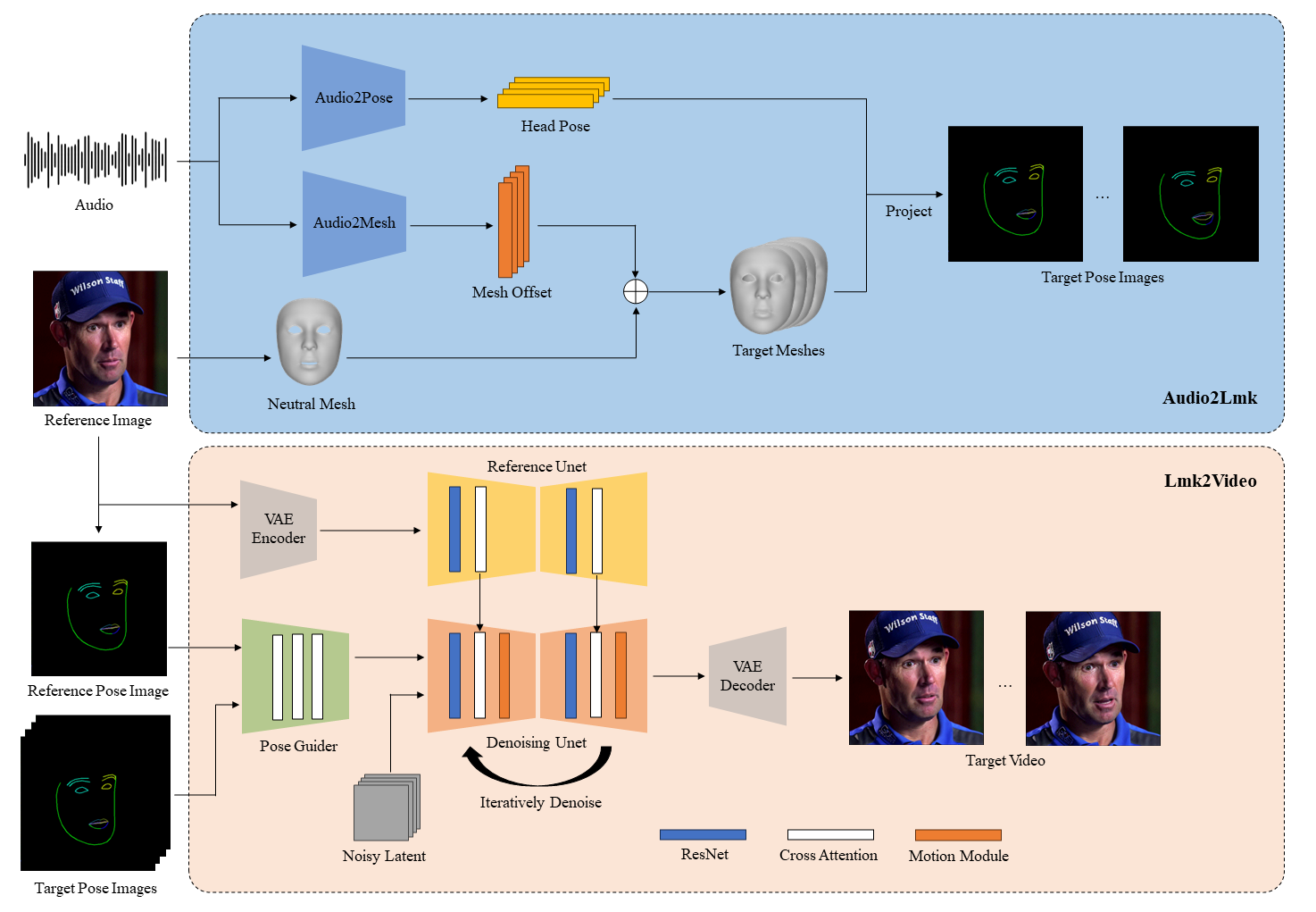

Here we propose AniPortrait, a novel framework for generating high-quality animation driven by audio and a reference portrait image. You can also provide a video to perform face reenactment.

- Our paper is now available on arXiv.
- Updated the code to generate pose_temp.npy for head pose control.
- We will release the pre-trained audio2pose weights for audio2video after further optimization. In the meantime, you can choose a head pose template in ./configs/inference/head_pose_temp as a substitute.

Demo videos: cxk.mp4, solo.mp4, Aragaki.mp4, num18.mp4, jijin.mp4, kara.mp4, lyl.mp4, zl.mp4

We recommend Python >= 3.10 and CUDA 11.7. Then build the environment as follows:

pip install -r requirements.txt

All the weights should be placed under the ./pretrained_weights directory. You can download the weights manually as follows:

- Download our trained weights, which include five parts: denoising_unet.pth, reference_unet.pth, pose_guider.pth, motion_module.pth and audio2mesh.pt.
- Download the pretrained weights of the base models and other components: stable-diffusion-v1-5, sd-vae-ft-mse, image_encoder and wav2vec2-base-960h.

Finally, these weights should be organized as follows:

./pretrained_weights/

|-- image_encoder

| |-- config.json

| `-- pytorch_model.bin

|-- sd-vae-ft-mse

| |-- config.json

| |-- diffusion_pytorch_model.bin

| `-- diffusion_pytorch_model.safetensors

|-- stable-diffusion-v1-5

| |-- feature_extractor

| | `-- preprocessor_config.json

| |-- model_index.json

| |-- unet

| | |-- config.json

| | `-- diffusion_pytorch_model.bin

| `-- v1-inference.yaml

|-- wav2vec2-base-960h

| |-- config.json

| |-- feature_extractor_config.json

| |-- preprocessor_config.json

| |-- pytorch_model.bin

| |-- README.md

| |-- special_tokens_map.json

| |-- tokenizer_config.json

| `-- vocab.json

|-- audio2mesh.pt

|-- denoising_unet.pth

|-- motion_module.pth

|-- pose_guider.pth

`-- reference_unet.pth
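Before running inference, it can help to confirm that the layout above is complete. The following is a minimal sketch, not part of the repository, that checks each file from the tree above relative to ./pretrained_weights and returns the ones that are missing; the helper name `missing_weights` is hypothetical.

```python
from pathlib import Path

# Files expected under the weights root, copied from the directory tree above.
EXPECTED_FILES = [
    "image_encoder/config.json",
    "image_encoder/pytorch_model.bin",
    "sd-vae-ft-mse/config.json",
    "sd-vae-ft-mse/diffusion_pytorch_model.bin",
    "sd-vae-ft-mse/diffusion_pytorch_model.safetensors",
    "stable-diffusion-v1-5/feature_extractor/preprocessor_config.json",
    "stable-diffusion-v1-5/model_index.json",
    "stable-diffusion-v1-5/unet/config.json",
    "stable-diffusion-v1-5/unet/diffusion_pytorch_model.bin",
    "stable-diffusion-v1-5/v1-inference.yaml",
    "wav2vec2-base-960h/config.json",
    "wav2vec2-base-960h/feature_extractor_config.json",
    "wav2vec2-base-960h/preprocessor_config.json",
    "wav2vec2-base-960h/pytorch_model.bin",
    "wav2vec2-base-960h/README.md",
    "wav2vec2-base-960h/special_tokens_map.json",
    "wav2vec2-base-960h/tokenizer_config.json",
    "wav2vec2-base-960h/vocab.json",
    "audio2mesh.pt",
    "denoising_unet.pth",
    "motion_module.pth",
    "pose_guider.pth",
    "reference_unet.pth",
]


def missing_weights(root="./pretrained_weights"):
    """Return the expected files (in list order) that are not present under `root`."""
    base = Path(root)
    return [rel for rel in EXPECTED_FILES if not (base / rel).is_file()]
```

Calling `print(missing_weights())` from the repository root prints an empty list once every file is in place.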

Note: If you already have some of the pretrained models, such as Stable Diffusion v1.5, you can specify their paths in the config file (e.g. ./configs/prompts/animation.yaml).
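If you prefer to change such a path from a script, the sketch below rewrites one top-level `key: value` line with a plain text substitution, so it needs no YAML library. The key name in the usage example (`pretrained_base_model_path`) is an assumption about the config layout, so check the actual keys in your animation.yaml first; both helper names are hypothetical.

```python
import re
from pathlib import Path


def set_top_level_key(yaml_text, key, value):
    """Replace the value of the first unindented `key: ...` line.

    This is a text-level edit, not a YAML parse: indented (nested) keys are
    ignored, a trailing comment on the matched line is dropped, and the new
    value is written in single quotes (so it must not contain a single quote).
    Raises KeyError if the key is not found.
    """
    pattern = re.compile(rf"^{re.escape(key)}:.*$", re.MULTILINE)
    # A function replacement inserts the text literally, so backslashes in
    # Windows-style paths are not treated as regex escapes.
    new_text, count = pattern.subn(lambda _m: f"{key}: '{value}'", yaml_text, count=1)
    if count == 0:
        raise KeyError(key)
    return new_text


def override_config_path(config_path, key, value):
    """Read a config file, override one top-level path, and write it back."""
    path = Path(config_path)
    path.write_text(set_top_level_key(path.read_text(), key, value))
```

For example, `override_config_path("./configs/prompts/animation.yaml", "pretrained_base_model_path", "/data/models/stable-diffusion-v1-5")` would point the assumed base model key at an existing local copy. Editing the file by hand works just as well; the script form is mainly useful when switching between several machines.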

Here are the CLI commands for running the inference scripts:

For pose-driven animation, run:

python -m scripts.pose2vid --config ./configs/prompts/animation.yaml -W 512 -H 512 -L 64

You can follow the format of animation.yaml to add your own reference images or pose videos. To convert a raw video into a pose video (keypoint sequence), run the following command:

python -m scripts.vid2pose --video_path pose_video_path.mp4

For face reenactment, run:

python -m scripts.vid2vid --config ./configs/prompts/animation_facereenac.yaml -W 512 -H 512 -L 64

Add the source face videos and reference images in animation_facereenac.yaml.
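To convert a whole folder of raw videos into pose videos, one option is to call the vid2pose command once per file. The sketch below does this with Python's subprocess module. It reuses only the `--video_path` flag shown above, assumes the videos are lowercase .mp4 files in a single directory, and must be run from the repository root so that `-m scripts.vid2pose` resolves; where the pose videos are written is decided by scripts.vid2pose itself. The helper names are hypothetical.

```python
import subprocess
import sys
from pathlib import Path


def vid2pose_commands(video_dir):
    """Build one `python -m scripts.vid2pose` command per .mp4 file, sorted by name."""
    videos = sorted(Path(video_dir).glob("*.mp4"))
    return [
        [sys.executable, "-m", "scripts.vid2pose", "--video_path", str(video)]
        for video in videos
    ]


def convert_all(video_dir):
    """Run vid2pose on every video; raises on the first failing conversion."""
    for cmd in vid2pose_commands(video_dir):
        print("Running:", " ".join(cmd))
        subprocess.run(cmd, check=True)
```

For example, `convert_all("./raw_videos")` processes every .mp4 file in a hypothetical ./raw_videos folder. Running the conversions sequentially keeps GPU memory use the same as a single manual run.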

For audio-driven animation, run:

python -m scripts.audio2vid --config ./configs/prompts/animation_audio.yaml -W 512 -H 512 -L 64

Add the audio files and reference images in animation_audio.yaml.

You can use this command to generate a pose_temp.npy for head pose control:

python -m scripts.generate_ref_pose --ref_video ./configs/inference/head_pose_temp/pose_ref_video.mp4 --save_path ./configs/inference/head_pose_temp/pose.npy

For training, first extract keypoints from the raw videos and write the training JSON file (here is an example of processing VFHQ):

python -m scripts.preprocess_dataset --input_dir VFHQ_PATH --output_dir SAVE_PATH --training_json JSON_PATH

Then update these lines in the training config file:

data:
  json_path: JSON_PATH

Run stage 1 training:

accelerate launch train_stage_1.py --config ./configs/train/stage1.yaml

For stage 2, put the pretrained motion module weights mm_sd_v15_v2.ckpt under ./pretrained_weights.
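Stage 2 needs the directory and step of a stage 1 checkpoint. The sketch below finds the highest step in a checkpoint directory so it can be copied into stage1_ckpt_step. It assumes stage 1 writes files named like denoising_unet-30000.pth; that naming is an assumption about the training script, not something stated in this README, so list the directory and adjust the hypothetical `CKPT_PATTERN` if your files differ.

```python
import re
from pathlib import Path

# ASSUMPTION: stage 1 checkpoints are saved as "denoising_unet-<step>.pth".
# Adjust this pattern to match the files your run actually wrote.
CKPT_PATTERN = re.compile(r"^denoising_unet-(\d+)\.pth$")


def latest_stage1_step(ckpt_dir):
    """Return the highest training step among matching checkpoint files, or None."""
    steps = []
    for path in Path(ckpt_dir).iterdir():
        match = CKPT_PATTERN.match(path.name)
        if match and path.is_file():
            steps.append(int(match.group(1)))
    # Steps are compared as integers, so 30000 beats 9000 even though
    # "9000" sorts after "30000" as a string.
    return max(steps) if steps else None
```

For example, `latest_stage1_step("./exp_output/stage1")` returns the value to use for stage1_ckpt_step, or None if no matching file was found.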

Specify the stage1 training weights in the config file stage2.yaml, for example:

stage1_ckpt_dir: './exp_output/stage1'
stage1_ckpt_step: 30000

Run stage 2 training:

accelerate launch train_stage_2.py --config ./configs/train/stage2.yaml

We first thank the authors of EMO. We would also like to thank the contributors to the Moore-AnimateAnyone, magic-animate, AnimateDiff and Open-AnimateAnyone repositories for their open research and exploration.

@misc{wei2024aniportrait,

title={AniPortrait: Audio-Driven Synthesis of Photorealistic Portrait Animations},

author={Huawei Wei and Zejun Yang and Zhisheng Wang},

year={2024},

eprint={2403.17694},

archivePrefix={arXiv},

primaryClass={cs.CV}

}