- Project Description

- Tools

- Architecture

- Key Insights

- Folder Navigation

- Getting Started Guide

- Setting up GCP

- Running the code

- DBT

- Visualization

- Contribution

This project is part of the Data Engineering Zoomcamp for 2024 Cohort. This project contains an end-to-end data pipeline written in Python.

Data

The project used the data from Components, which consists of 28,000 product reviews from PCMag, CNET, Tech Radar and Trusted Reviews, covering the period from the beginning of 2013 to the end of 2020. The data is approximately 212 MB in size. The dataset comprises various attributes such as product score, article URL, text, title, author, date, and product category when applicable.

Disclaimer: The datasets used for this project are relatively smalls datasets. The main idea of this project wasn't to deal with a big dataset at this point, but rater build a pipeline that can fetch, organize and transform present data. But above all, to answer some questions that arose during the formulation of the problem.

Pipeline description:

- Pipeline fetches the data from online source

- It then conducts a basic transformation on the dataset and uploads it to Google Cloud Storage

- The data is then loaded from GCS, fully tranformed and then upload to BigQuery in the form of tables

- The tables are created using partitioning and clustering for better query performace

Steps 1-3 are orchestrated in Mage

Goal The examination aims to discern patterns within product reviews across diverse publications. It's valuable to ascertain which brands receive higher average scores in these reviews. Additionally, we seek to track the evolving popularity of product categories over time and to identify key authors contributing to tech reviews.

-

Cloud:

-

Terraform (IaC) - Employed for provisioning GCP services such as Buckets and BigQuery Tables

-

Data Ingestion (batch):

- Mage - Employed for workflow orchestration. Utilized for fetching data via API, transforming the data, and subsequently converting the data to parquet files and loading it into GCP buckets, followed by integration with BigQuery

-

Data Lake:

- Google Cloud Storage - Used for storing parquet files

-

Data Warehousing:

- Google BigQuery - Data was stored in tables, with a new table created by implementing partitioning and clustering on the original data to enhance query performance

-

Data Transformations and Processing:

- dbt - Utilized as an analytical platform for crafting data models using SQL sourced from BigQuery. The process involved the creation of multiple new tables, facilitating the discovery of novel insights

-

Dashboarding:

- Google Looker Studio -Employed for data visualization, facilitating the extraction of key insights through visual representation.

Check out the interactive dashboard HERE.

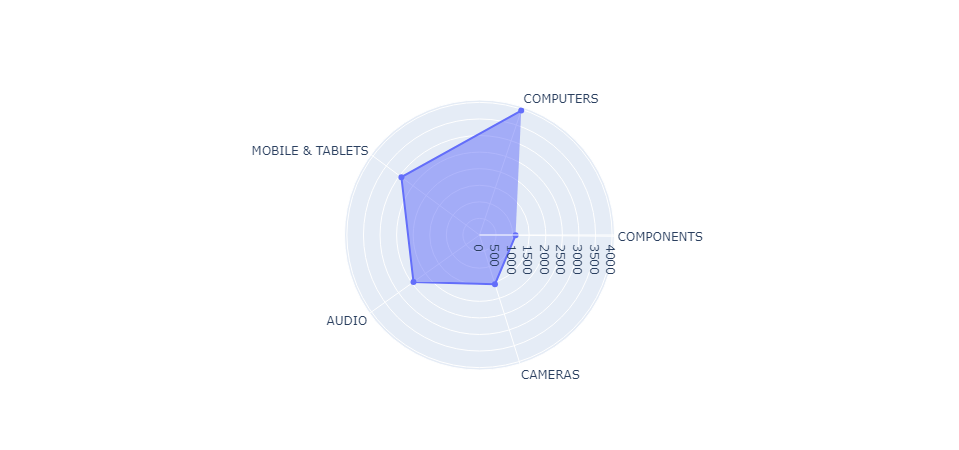

After analyzing data from the year 2013 to 2020, it can be said that:

-

The average product review score across all four publications exceeded 7 on a scale of 1 to 10

-

Computers, including laptops, PCs, and monitors, garnered the highest number of reviews

-

Dyson, Nvidia, OnePlus, Denon, Sigma, and Apple products emerged as top-reviewed items

- tech_reviews_exploration.ipynb

Notebook with Python script for data exploration.

- tech_reviews_queries.sql

SQL queries for create tables in BigQuery before dbt use.

- tech-review.zip

The zip file contains the dataset used for analysis

Sub folders and files for the whole dbt project.

Various images of mage pipelines and dbt models, among other things

Dashboard resulting from the design in looker studio and access link.

Sub folders and files for the whole mage project.

- main.tf

Main configuration file for Terraform, defining the infrastructure as code to provision (or destroy) all the necessary resources in the cloud.

- variables.tf

File containing variable definitions used in the main Terraform file (main.tf), facilitating code customization and reuse.

Before running the code you need to follow the steps below

To set up Google Cloud Platform (GCP), follow these steps:

-

Create a GCP Account: Sign up for a GCP account here. Note that even though there's a trial option, you'll still need a credit card for signup.

-

Create a New Project: Once logged in, navigate to the GCP Console and create a new project. Give it a suitable name and make a note of the project ID.

-

Create a service account:

-

In the left sidebar, click on "IAM & Admin" and then "Service accounts."

-

Click "Create service account" at the top.

-

Enter a name and optional description for your service account.

-

Select roles like BigQuery Admin, Storage Admin, and Compute Admin for this project.

-

Click "Create" to make the service account.

-

After creation, download the private key file. This file is used to authenticate requests to GCP services.

-

Click on the service account to view its details, then navigate to the "Keys" tab and click "Add Key." Choose "JSON" as the key type and click "Create" to download the private key file.

-

Store this JSON key file and rename it to mage-zoomcamp-key.json, placing it in the root level of Mage directory of your project.

-

-

Enable BigQuery API: Ensure that the BigQuery API is enabled by going to the BigQuery API and enabling it here

To run the code on macOS/Linux/WSL, follow these steps:

-

Clone Repository: Clone the repository to your local machine.

-

Navigate to Terraform Directory: Use the terminal to navigate into the terraform directory. Here, we use Terraform to create Google Cloud resources. If necessary, modify the region to your local region in the

variables.tffile, and update theproject IDto match the one created in GCP. -

Initialize Terraform: Run

terraform initto prepare your working directory containing congiguration files and install plugins for required providers. -

Plan Changes: Run

terraform planto show changes required by the current configuration. -

Apply Changes: Execute

terraform applyto create or update infrastructure based on the Terraform configuration. -

Destroy Infrastructure: If needed, run

terraform destroyto remove previously-created infrastructure (use with caution). -

Navigate to Mage Directory:

cdinto the mage directory. -

Build Docker Container: Run

docker-compose buildto build the Docker container. -

Start Docker Container: Execute

docker-compose upto start the Docker container. -

Access Mage Repository: You've initialized a Mage repository named tech-reviews. Access it at http://localhost:6789 in your browser.

This repository should have the following structure:

.

├── mage_data

│ └── tech-reviews

├── tech-reviews

│ ├── __pycache__

│ ├── charts

│ ├── custom

│ ├── data_exporters

│ ├── data_loaders

│ ├── dbt

│ ├── extensions

│ ├── interactions

│ ├── pipelines

│ ├── scratchpads

│ ├── transformers

│ ├── utils

│ ├── __init__.py

│ ├── io_config.yaml

│ ├── metadata.yaml

│ └── requirements.txt

├── .gitignore

├── .env

├── docker-compose.yml

├── Dockerfile

└── requirements.txt

-

Go to the browser, find pipelines, you will see two pipelines, namely

api_gcsandgcs_to_bigquery. You can run the pipelines sequentially. -

Utilize the 'tech_reviews_queries.sql' file located within the './code' directory to generate supplementary tables in BigQuery, incorporating partitioning and clustering for enhanced data efficiency

Once the process is completed, you should possess a parquet file stored in Google Cloud Bucket Storage, alongside a transformed table within BigQuery. Your pipeline should resemble the following structure.

|

|

The objective is to convert the data stored in the Data Warehouse (Google BigQuery) into Analytical Views through the development of a dbt project. At this juncture, you should have accomplished the following:

- Established a functional warehouse (BigQuery)

- Implemented a series of operational pipelines for ingesting the project dataset (Mage)

- The datasets from

./dataingested into GCS in a .parquet format

-

Register for a free developer account on dbt cloud by accessing this link.

-

Utilize the provided instructions to establish a connection with your BigQuery instance.

-

For comprehensive guidelines, refer to the detailed instructions available here.

The transformation lineage is documented within the ./dbt/models directory, and look like the following

-

Log in to Google looker studio with your Google account.

-

Connect your dataset using the BigQuery Connector.

-

Choose your project name and then select the dataset. This action will navigate you to the dashboard page.

-

Proceed to create your visualizations and share them as needed.

Contributions to the pipeline are welcome! Please fork the repository, make your changes, and submit a pull request for review.