This is the companion repo to my LinkedIn Learning courses on Hadoop and Spark.

- Learning Hadoop (link) - mostly uses GCP Dataproc to run workloads with Hadoop and associated libraries (e.g. Hive, Pig, Spark)

- Cloud Hadoop: Scaling Apache Spark (link) - uses GCP Dataproc, AWS EMR, or Databricks on AWS

- Azure Databricks Spark Essential Training (link) - uses Azure Databricks to scale Apache Spark workloads

- Set up a Hadoop/Spark cloud cluster on GCP Dataproc or AWS EMR

  - see the `setup-hadoop` folder in this repo for instructions/scripts

- Set up a Hadoop/Spark dev environment

  - use Eclipse Che (an online IDE) or a local IDE

  - select your language (e.g. Python, Scala)

- Create a GCS bucket for input/output job data

  - see the `example_datasets` folder in this repo for sample data files

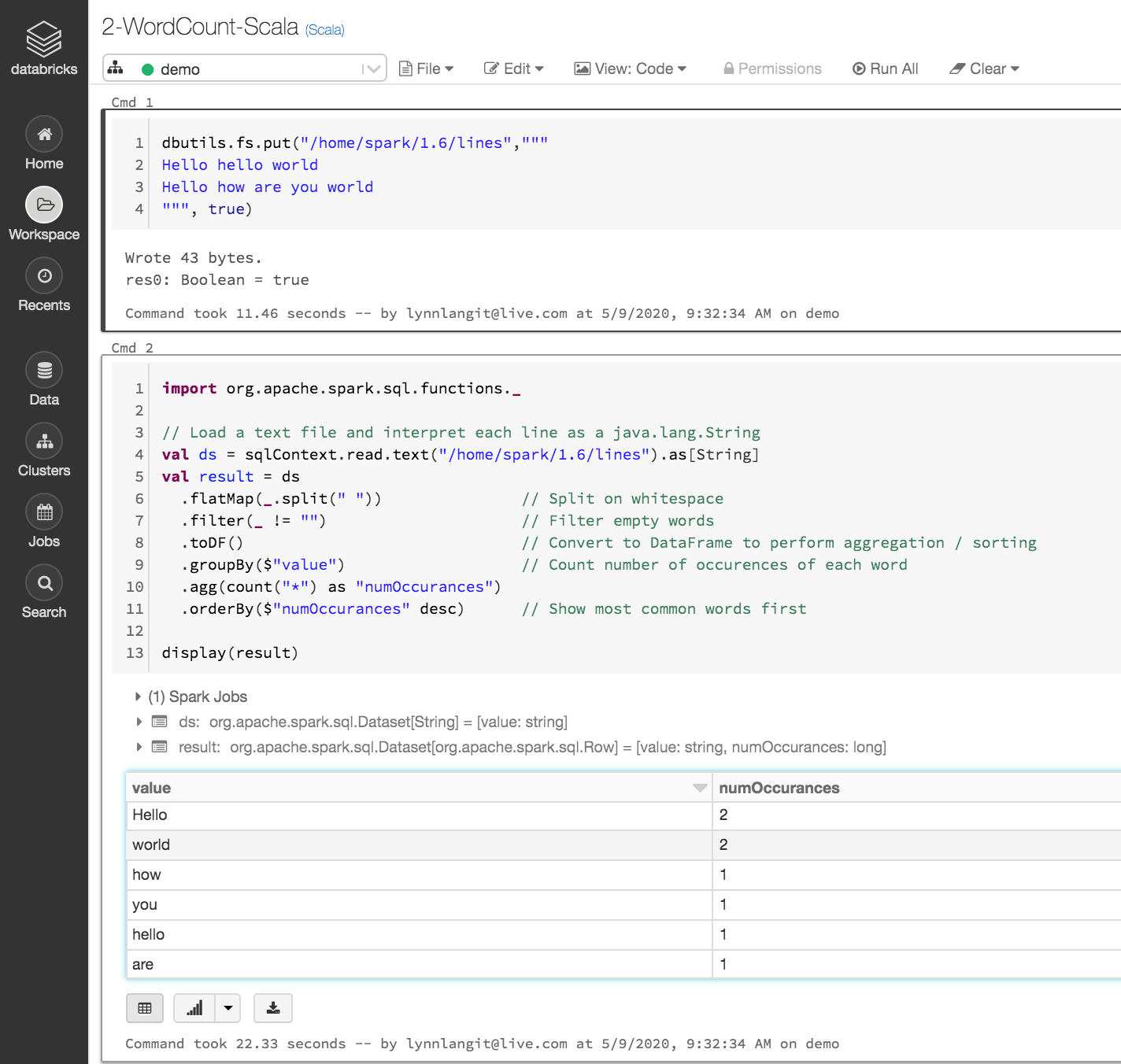

- Use Databricks Community Edition (managed, hosted Apache Spark) - example shown below

  - uses Databricks (Jupyter-style) notebooks to connect to a small, managed Spark cluster

  - available in AWS or Azure editions; easier to try out on AWS

  - sign up for a free trial (link)

EXAMPLES from `org.apache.hadoop_or_spark.examples` (link for Spark examples)

- Run a Hadoop WordCount job with Java (jar file)

- Run a Hadoop and/or Spark CalculatePi (digits) script with PySpark or other libraries

- Run using the Cloudera shared demo environment

  - at https://demo.gethue.com/ - log in with user `demo`, password `demo`
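
The two example jobs listed above follow well-known patterns. The block below is a minimal local sketch in plain Python. It needs no Hadoop or Spark, and it is not the code the courses run. It mimics the map, shuffle, and reduce phases of a WordCount job, and the Monte Carlo sampling idea behind a CalculatePi job (the same idea Spark's bundled Pi example uses). The function names (`map_words`, `shuffle`, `reduce_counts`, `word_count`, `estimate_pi`) are hypothetical illustrations and are not part of `org.apache.hadoop_or_spark.examples`.

```python
import random
from collections import defaultdict
from itertools import chain


# --- WordCount sketch (hypothetical helpers): map -> shuffle -> reduce in one process ---

def map_words(line):
    """Map phase: emit a (word, 1) pair for each whitespace-separated token.
    Tokens are case-sensitive, so "the" and "The" are counted separately."""
    return [(word, 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle/sort phase: group emitted values by key, ordered by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_counts(groups):
    """Reduce phase: sum the 1s emitted for each word."""
    return {word: sum(ones) for word, ones in groups.items()}


def word_count(lines):
    """Run the three phases over an iterable of text lines."""
    pairs = chain.from_iterable(map_words(line) for line in lines)
    return reduce_counts(shuffle(pairs))


# --- CalculatePi sketch (hypothetical helper): Monte Carlo sampling ---

def estimate_pi(num_samples, seed=42):
    """Estimate pi as 4 * (fraction of random points in the unit square
    that land inside the quarter circle x^2 + y^2 <= 1)."""
    rng = random.Random(seed)  # seeded so results are reproducible
    inside = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / num_samples


if __name__ == "__main__":
    print(word_count(["the quick brown fox", "the lazy dog", "The end"]))
    print(estimate_pi(100_000))
```

On a real cluster, Hadoop runs the map and reduce phases across many nodes and performs the shuffle over the network. In PySpark, the same WordCount is usually written with `flatMap`, `map`, and `reduceByKey` on an RDD, and a Pi job spreads the sampling loop across partitions before summing the hits.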

There are ~10 courses on Hadoop/Spark topics on LinkedIn Learning; see the graphic below.