This project forecasts Sea Surface Temperature (SST) using a big data pipeline and machine learning models. It draws on hourly ERA5 reanalysis data from the Climate Data Store (CDS) and applies two forecasting models: Facebook's Prophet and the Random Forest model from Apache Spark's MLlib library. The project shows how big data technologies can be integrated, and how the resulting system scales as the volume of incoming data grows. That scalability is critical for dynamic environmental forecasting.

- Scalability: Demonstrate how our big data solution scales with increased data inflow.

- Comparative Analysis: Provide a comparative study of the two forecasting models used.

- Significance: Highlight the importance of forecasting SST in the context of global climate monitoring and its wider impacts.
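The README does not say which metrics the comparative study uses. The sketch below assumes both models are scored against the same held-out observations using mean absolute error (MAE) and root mean squared error (RMSE). The helper names are hypothetical, and the numbers in the usage block are toy values, not project results.

```python
import math
from typing import Dict, Sequence


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error between observed and forecast SST values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean squared error; penalises large misses more heavily than MAE."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and the same length")
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def compare_models(actual: Sequence[float],
                   forecasts: Dict[str, Sequence[float]]) -> Dict[str, Dict[str, float]]:
    """Score each model's forecast against the same observations (hypothetical helper)."""
    return {name: {"mae": mae(actual, pred), "rmse": rmse(actual, pred)}
            for name, pred in forecasts.items()}


if __name__ == "__main__":
    # Toy values in kelvin, for illustration only (not project data).
    observed = [300.0, 301.0, 302.0]
    print(compare_models(observed, {
        "prophet": [300.5, 301.0, 301.5],
        "random_forest": [299.0, 301.0, 303.0],
    }))
```

Reporting both metrics is useful because RMSE grows faster than MAE when a model makes occasional large errors.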

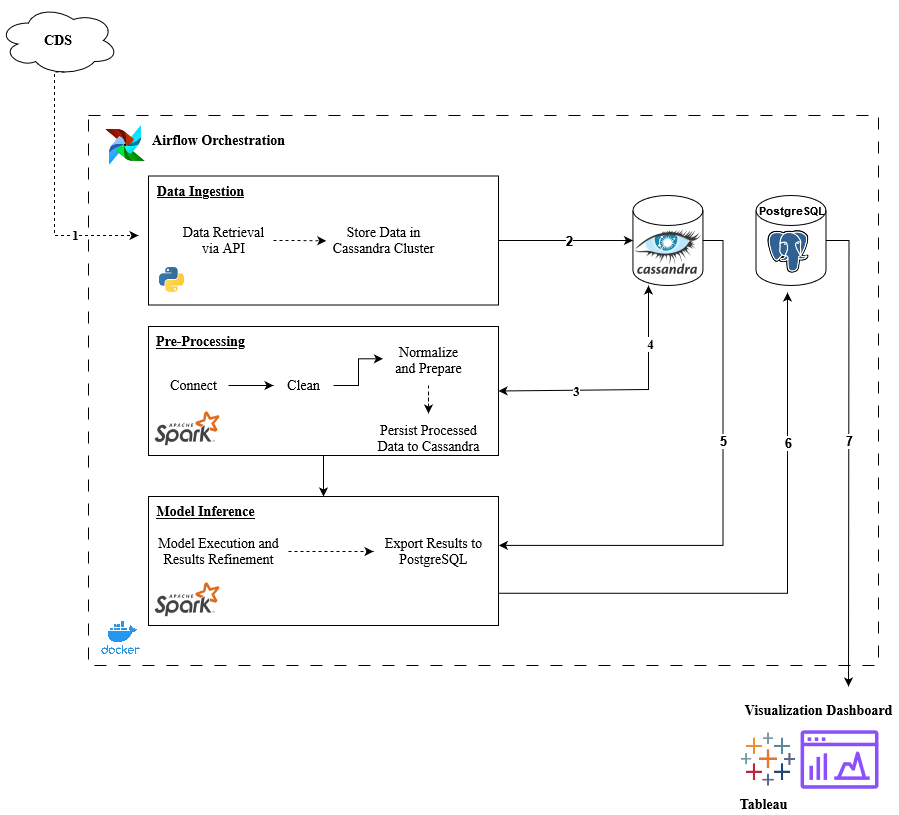

The project's architecture is built on several big data tools:

- Apache Airflow: Manages the workflow automation.

- Apache Spark: Processes data and runs ML models.

- Apache Cassandra: Serves as the primary database for storing incoming and processed data.

- PostgreSQL: Stores processed data for reporting and visualization.

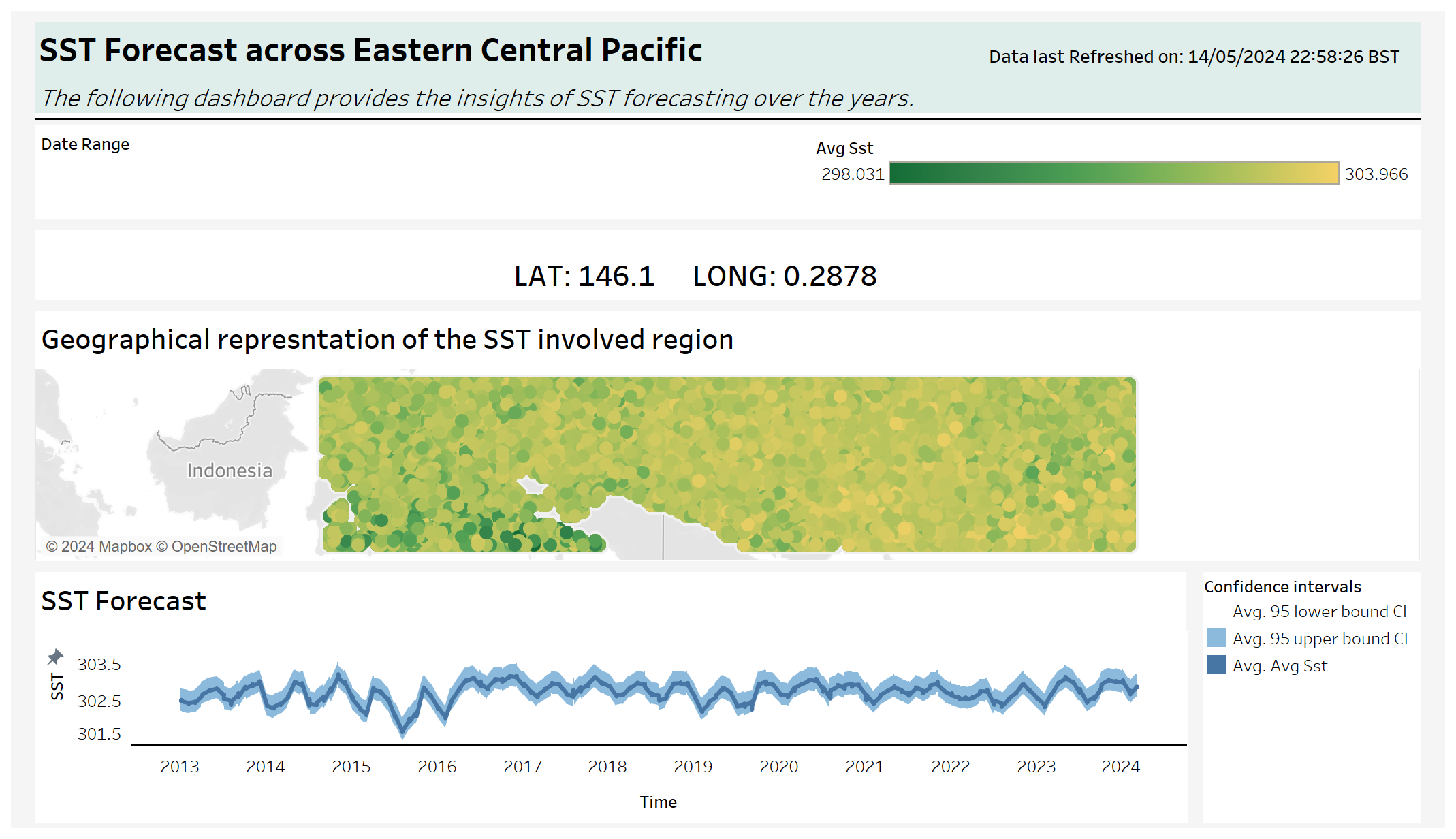

- Tableau: Used for visualizing the forecasting results.

- Data Ingestion: Triggered by Apache Airflow, a Docker service pulls data from the CDS cloud through the CDS API and writes it to Cassandra.

- Data Processing: Also triggered by Airflow, the Spark data-processing service processes the data and prepares it for modeling.

- Model Inference: Within the same Spark data-processing service, the Facebook Prophet model forecasts SST with a 95% confidence interval.

- Data Storage and Visualization: After modeling, the results are written to a PostgreSQL database. A Tableau dashboard connected to this database refreshes with the new data for visualization.
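Prophet builds its uncertainty interval by simulation. Its default interval is 80%, and a 95% interval is requested with the `interval_width=0.95` constructor argument. The bounds appear in the `yhat_lower` and `yhat_upper` output columns. The README does not show how the project configures this.

To illustrate what a 95% band means, the sketch below swaps in a simpler technique that is not Prophet's method: a normal approximation that places the band at ±1.96 standard deviations of past residuals around each point forecast. The helper name is hypothetical, and the numbers are toy values.

```python
import statistics
from typing import List, Sequence, Tuple

Z_95 = 1.959964  # two-sided 95% quantile of the standard normal distribution


def normal_interval(point_forecasts: Sequence[float],
                    residuals: Sequence[float],
                    z: float = Z_95) -> List[Tuple[float, float, float]]:
    """Return (lower, forecast, upper) for each forecast step.

    Hypothetical helper. Assumes past residuals are roughly normal,
    zero-mean and constant-variance, which Prophet itself does not require.
    """
    if len(residuals) < 2:
        raise ValueError("need at least two residuals to estimate spread")
    sigma = statistics.stdev(residuals)  # sample standard deviation
    half_width = z * sigma
    return [(f - half_width, f, f + half_width) for f in point_forecasts]


if __name__ == "__main__":
    # Toy numbers in kelvin, for illustration only (not project output).
    for lower, forecast, upper in normal_interval([301.2, 301.4], [-0.2, 0.1, 0.3, -0.2]):
        print(f"{lower:.2f} <= {forecast:.2f} <= {upper:.2f}")
```

This fixed-width band ignores growing uncertainty further into the forecast horizon. Prophet's simulation-based interval accounts for that, so its bands typically widen with the horizon.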

Make sure Docker and Docker Compose are installed on your machine. You also need CDS API credentials and the required configuration for Apache Airflow, Apache Spark, Cassandra, PostgreSQL, and Tableau.
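Before running the setup steps, you can confirm that the command-line tools they use are on your `PATH`. The sketch below assumes the standalone `docker-compose` binary that the commands in this README call. It only checks for tools; it does not verify CDS credentials or service configuration. The names `REQUIRED_TOOLS` and `check_tools` are hypothetical.

```python
import shutil
from typing import Dict, Iterable

# Tools used by the setup steps. "docker-compose" is the standalone Compose
# binary; some newer Docker installs ship only the "docker compose" plugin.
REQUIRED_TOOLS = ("docker", "docker-compose", "git")


def check_tools(tools: Iterable[str] = REQUIRED_TOOLS) -> Dict[str, bool]:
    """Map each tool name to whether it is found on PATH (hypothetical helper)."""
    return {tool: shutil.which(tool) is not None for tool in tools}


if __name__ == "__main__":
    missing = [name for name, found in check_tools().items() if not found]
    if missing:
        print("Missing prerequisites:", ", ".join(missing))
    else:
        print("All command-line prerequisites found.")
```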

- Clone the Repository:

  `git clone <repository-url>`

- Navigate to the Deployment Directory:

  `cd path/to/deployment/`

- Build and Run the Docker Compose Services:

  `docker-compose up --build -d`

This starts all the required services, including the Airflow dashboard, which is available at http://localhost:8080.
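The services can take a while to start after `docker-compose up --build -d` returns. The sketch below polls Airflow until it reports healthy.

It assumes the project runs Airflow 2.x. In that version, the webserver's `/health` endpoint returns a JSON report with `metadatabase` and `scheduler` status entries. The project's Airflow version is not stated, so treat the endpoint and its response shape as assumptions. The helper names are hypothetical.

```python
import json
import time
import urllib.request
from typing import Any, Mapping

# Assumption: Airflow 2.x webserver, which serves a JSON health report at /health.
HEALTH_URL = "http://localhost:8080/health"


def is_healthy(report: Mapping[str, Any]) -> bool:
    """True when both the metadata database and the scheduler report 'healthy'."""
    return all(
        report.get(component, {}).get("status") == "healthy"
        for component in ("metadatabase", "scheduler")
    )


def wait_for_airflow(url: str = HEALTH_URL, attempts: int = 30, delay: float = 5.0) -> bool:
    """Poll the health endpoint until Airflow is ready or the attempts run out."""
    for attempt in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=5) as response:
                if is_healthy(json.load(response)):
                    return True
        except (OSError, ValueError):
            pass  # webserver not reachable yet (URLError is an OSError) or body not JSON
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

With the default settings, `wait_for_airflow()` checks every 5 seconds, up to 30 times, and returns `True` once the dashboard is ready to accept the DAG trigger.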

- Download Tableau Desktop: Go to the Tableau Desktop download page and install Tableau on your system.

- Connect to the PostgreSQL Database:

  - Open Tableau and go to Connect > To a server > PostgreSQL.

- Download and Install the PostgreSQL Driver:

  - Tableau requires a specific driver to connect to PostgreSQL. Download the Java 8 JDBC driver from here.

  - Driver Version Compatibility: Make sure your Tableau Desktop version is between 2021.1 and 2024.1.2.

- Set Up the Driver:

  - Copy the downloaded `.jar` file to the following directory: `C:\Program Files\Tableau\Drivers`. You may need to create this directory if it does not exist.

- Restart Tableau:

  - After installing the driver, restart Tableau for the changes to take effect.

- Sign In to PostgreSQL:

  - Navigate to Connect > PostgreSQL and sign in using your credentials.

  - Credentials:

    - Server: `localhost`

    - Port: `5432`

    - Database: `<database_name>`

    - Authentication: Username and password

    - Username: `<username>`

    - Password: `<password>`

- Loading the .twbx File:

  - Download the `.twbx` file from this link.

  - Open the `.twbx` file in Tableau Desktop.
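If Tableau fails to connect, it can help to test the same credentials from another client. The sketch below assembles a standard PostgreSQL connection URI (`postgresql://user:password@host:port/dbname`) from the values in the credentials list. It percent-encodes the user name, password, and database name so that characters such as `@` or `:` do not break the URI.

The resulting string can be passed to `psql` or to a libpq-based driver such as psycopg2. The helper name `postgres_uri` is hypothetical, and the placeholders stay placeholders, so substitute your own values.

```python
from urllib.parse import quote


def postgres_uri(username: str, password: str, database: str,
                 host: str = "localhost", port: int = 5432) -> str:
    """Build a libpq-style connection URI (hypothetical helper).

    User name, password and database name are percent-encoded so that
    reserved characters such as '@', ':' or '/' survive inside the URI.
    """
    user = quote(username, safe="")
    secret = quote(password, safe="")
    db = quote(database, safe="")
    return f"postgresql://{user}:{secret}@{host}:{port}/{db}"


if __name__ == "__main__":
    # Placeholders from the credentials list; replace them with real values.
    print(postgres_uri("<username>", "<password>", "<database_name>"))
```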

Initiating the Data Pipeline with Airflow

- Accessing Airflow: Open a web browser and navigate to http://localhost:8080 to access the Airflow dashboard.

- Running the Data Pipeline: In the Airflow dashboard, locate and select the DAG for the data pipeline, then trigger it to start the pipeline. Monitor progress in the dashboard to make sure each task completes successfully.

- Viewing the Results: Once the data pipeline has run successfully, Tableau, already connected to the updated PostgreSQL database, will automatically refresh the dashboard. Open your Tableau dashboard to view the latest outputs and insights derived from the forecasted SST data.

This sequence ensures a streamlined operation from data processing to visualization, providing up-to-date results on the dashboard for immediate analysis and decision-making.
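This README does not list the DAG's actual task IDs. The sketch below uses hypothetical task names that mirror the workflow stages: ingestion, processing, model inference, and storage. It uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to show the dependency order that the triggered DAG enforces. It models the dependencies only and is not an Airflow DAG definition.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical task names; each task maps to the set of tasks it depends on.
PIPELINE: Dict[str, Set[str]] = {
    "ingest_cds_to_cassandra": set(),
    "spark_process_data": {"ingest_cds_to_cassandra"},
    "prophet_forecast": {"spark_process_data"},
    "load_to_postgres": {"prophet_forecast"},
}


def execution_order(dag: Dict[str, Set[str]]) -> List[str]:
    """Return one valid run order; raises graphlib.CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dag).static_order())


if __name__ == "__main__":
    print(" -> ".join(execution_order(PIPELINE)))
```

In an actual Airflow DAG, the same chain would be declared between task objects with the `>>` operator. Airflow then starts each task only after its upstream task succeeds.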

Authors: Sudarsaan Azhagu Sundaram, Preethi Jayakumar, Nidhi Saini, Tushara Rudresh Murthy, Girija Suresh Dahibhate, Sohail Amantulla Patel

This project is licensed under the Creative Commons Attribution-NonCommercial 4.0 International License, which restricts its use to academic and other non-commercial purposes. See the LICENSE file for details.