Code for the blog post: Data Engineering Best Practices - #1. Data flow & Code

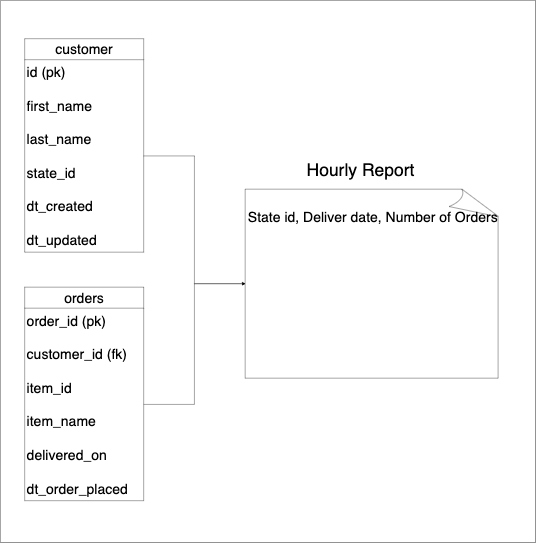

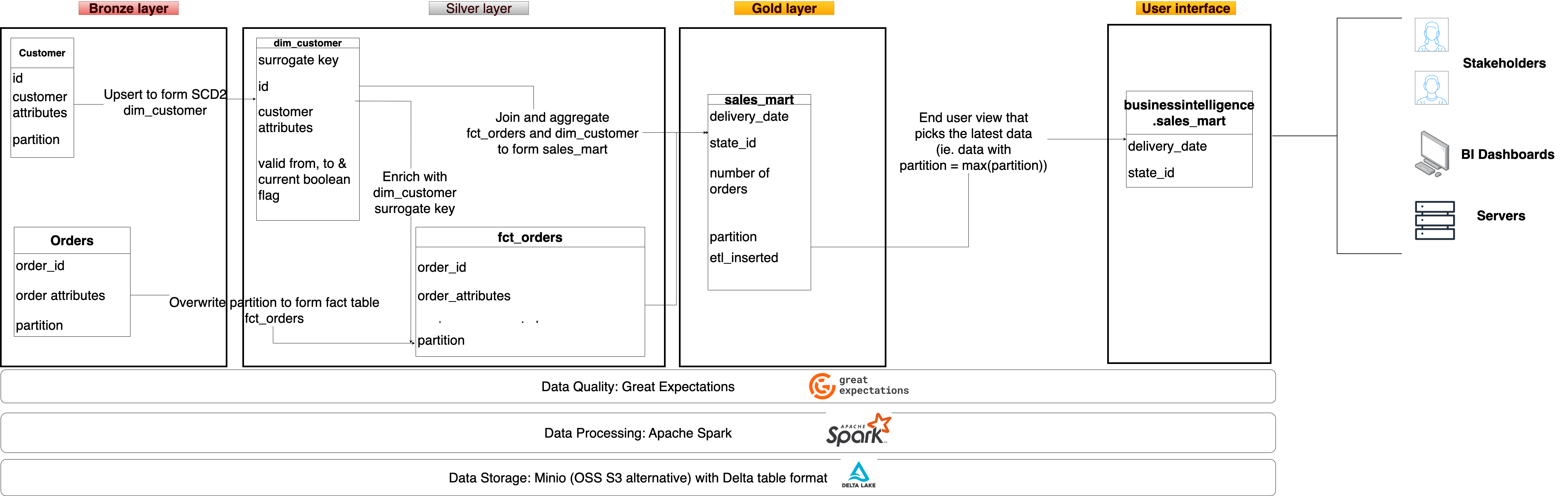

Assume we are extracting customer and order information from upstream sources and creating a daily report of the number of orders.
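To make this data flow concrete, here is a minimal pure-Python sketch of the daily report. It is not the repository's implementation. It assumes hypothetical record shapes (`Customer` with `customer_id`, `Order` with `order_id`, `customer_id` and `order_date`). Dropping orders that reference an unknown customer is also an assumption, included to show why the customer extract matters to the report.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


# Hypothetical record shapes; the real tables use their own schemas.
@dataclass(frozen=True)
class Customer:
    customer_id: int
    name: str


@dataclass(frozen=True)
class Order:
    order_id: int
    customer_id: int
    order_date: date


def daily_order_counts(customers: list[Customer], orders: list[Order]) -> dict[date, int]:
    """Count orders per day, keeping only orders whose customer is known."""
    known_customers = {c.customer_id for c in customers}
    # Assumption: orders referencing a customer missing from the extract are dropped.
    valid_orders = (o for o in orders if o.customer_id in known_customers)
    counts = Counter(o.order_date for o in valid_orders)
    # Return days in chronological order for a readable report.
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    customers = [Customer(1, "Ana"), Customer(2, "Ben")]
    orders = [
        Order(100, 1, date(2023, 1, 1)),
        Order(101, 2, date(2023, 1, 1)),
        Order(102, 1, date(2023, 1, 2)),
        Order(103, 99, date(2023, 1, 2)),  # unknown customer, filtered out
    ]
    for day, n in daily_order_counts(customers, orders).items():
        print(day.isoformat(), n)
```

Running it prints one line per day with its order count. The unknown-customer order on 2023-01-02 is excluded.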

If you'd like to code along, you'll need the following prerequisites:

- git version >= 2.37.1

- Docker version >= 20.10.17 and Docker Compose v2 version >= v2.10.2. Make sure that Docker is running using `docker ps`.

- pgcli

Run the following commands in a terminal. If you are using Windows, use WSL to set up Ubuntu and run the commands from the Ubuntu terminal.

```bash
git clone https://github.com/josephmachado/data_engineering_best_practices.git
cd data_engineering_best_practices
make up # Spin up containers
make ddl # Create tables & views
make ci # Run checks & tests
make etl # Run etl
make spark-sh # Spark shell to check created tables
```

```scala
spark.sql("select partition from adventureworks.sales_mart group by 1").show() // should be the number of times you ran `make etl`
spark.sql("select count(*) from businessintelligence.sales_mart").show() // 59
spark.sql("select count(*) from adventureworks.dim_customer").show() // 1000 * num of etl runs
spark.sql("select count(*) from adventureworks.fct_orders").show() // 10000 * num of etl runs
```

You can see the results of DQ checks using `make meta`:

```sql
select * from ge_validations_store limit 1;
exit
```

Use `make down` to spin down containers.
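This README shows only where DQ results are stored (`ge_validations_store`), not which checks the pipeline runs. As an illustration only, here is a minimal Python sketch of three common checks on an orders extract: a not-null key, a unique key, and referential integrity against customers. The dictionary records and the field names `order_id` and `customer_id` are hypothetical. The function returns a pass/fail flag per check instead of raising, so a caller can decide whether to block publishing the report.

```python
def run_dq_checks(customers: list[dict], orders: list[dict]) -> dict[str, bool]:
    """Run simple data-quality checks on hypothetical order records.

    The field names "order_id" and "customer_id" are assumptions for
    illustration, not the repository's schema.
    """
    customer_ids = {c["customer_id"] for c in customers}
    return {
        # Every order must have a non-null order_id.
        "order_id_not_null": all(o.get("order_id") is not None for o in orders),
        # order_id values must be unique across the extract.
        "order_id_unique": len({o.get("order_id") for o in orders}) == len(orders),
        # Every order must reference a customer present in the customer extract.
        "customer_exists": all(o.get("customer_id") in customer_ids for o in orders),
    }


if __name__ == "__main__":
    customers = [{"customer_id": 1}, {"customer_id": 2}]
    orders = [
        {"order_id": 10, "customer_id": 1},
        {"order_id": 11, "customer_id": 3},  # customer 3 does not exist
    ]
    for check, passed in run_dq_checks(customers, orders).items():
        print(f"{check}: {'PASS' if passed else 'FAIL'}")
```

With the sample data, the two key checks pass and `customer_exists` fails because of the order that references customer 3.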