This repository exists to capture ideas and experiments designed to grow Bisq, monitor their progress, and offer feedback.

Notable links:

- issues and projects in this repository for ways to contribute
- this handbook for a comprehensive overview of tactics, strategies, and lessons from past efforts
- this wiki article for an overview of the growth function, how it’s structured, and how to contribute

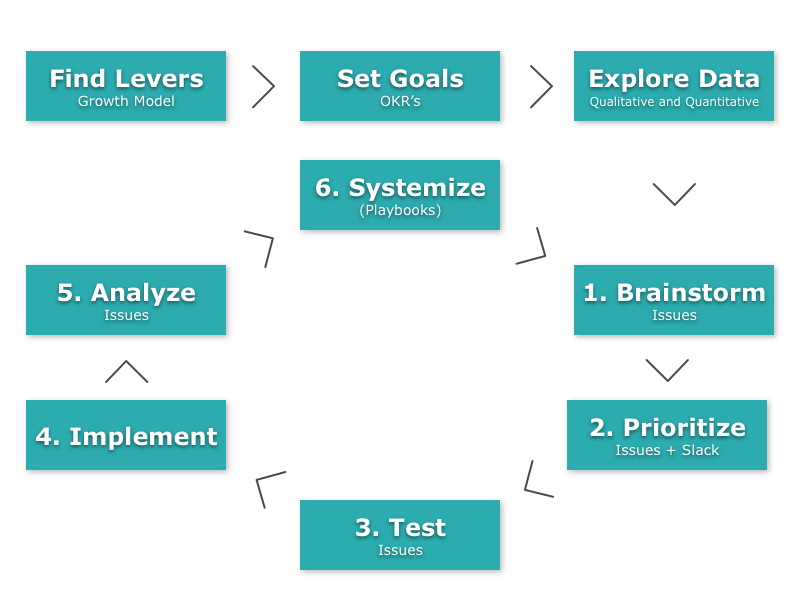

Below are the principles and processes we find helpful for continuously exploring ways to grow, inspired by the work of Brian Balfour, Sean Ellis, and many others.

Constantly find new ways to learn about our users, product, and channels for communication. Feed that learning into this growth process to improve it.

Momentum is powerful. Establish a cadence for experimentation and feedback in order to fight through failures and get to successes. Find a rhythm that works.

Individual contributors decide what they work on to achieve their OKRs (Objectives and Key Results).

The process shown in the diagram above can be broken into two major parts:

- High-level planning, seen on top, and
- Continuous daily/weekly actions, seen in the circle on the bottom.

This planning process should happen roughly every 2–3 months. The purpose is to "zoom out" and reconsider what’s most important now. How have the last few months gone? What has changed during that time, and how should our goals change to adapt?

The goal is to find the highest impact area that we can focus on right now given limited resources.

To evaluate each goal, think about the following key points:

- Baseline
- Ceiling
- Sensitivity over time

In setting goals, focus on OKRs (Objectives and Key Results), and keep to the following principles:

- Focus on inputs, not outputs. OKRs should never be set on outputs, always on inputs.
- Keep OKRs in your face. Display OKRs in a place where they cannot be forgotten by those who are accountable for them. They should be visible to anyone and everyone, and should be reviewed daily and weekly.
- Keep OKRs concrete. Objectives are expressed as qualitative goals, e.g. "Make virality a meaningful channel". Key Results are expressed as quantitative outcomes, e.g. "Grow viral/weekly active users by 1.5%". The timeframe for OKRs should be kept between 30 and 90 days.

Note: Goals should change mid-OKR only very rarely.

- Qualitative. Example sources of qualitative data include user surveys, one-on-one conversations, user videos, forum discussions, arbitration tickets, and more.
- Quantitative. Example sources of quantitative data include Google Analytics, our own markets API, and other data sources we can access. The goal is to answer open questions about our users, products, and channels. Break these data down into smaller pieces.

Important: We should always be on the lookout for new ways to understand and better serve our current and potential users, but the prime directive is that individual user privacy must never be compromised by our efforts.

- Capture. Never stop putting new ideas into the growth backlog.
- Focus. Focus on input parameters, not on output parameters.
- Observe. How are others doing it? Look outside of your immediate product space. Walk through it together.
- Question. Brainstorm and ask why, e.g.: What is… What if… What about… How do we do more of…
- Associate. Connect the dots between unrelated things, e.g.: What if our activation process was like closing a deal?

Prioritize by considering the following key parameters:

- Probability. Low: 20%, Medium: 50%, High: 80%
- Impact. This comes from your prediction. Take into account long-lasting effects vs. one-hit wonders.
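One way to combine the two parameters above is to rank backlog ideas by probability multiplied by predicted impact. The sketch below assumes that scoring rule, which this document does not prescribe; the `Idea` class, the idea names, and the impact numbers are hypothetical and only illustrate the mechanics.

```python
from dataclasses import dataclass

# Probability levels taken from the list above.
PROBABILITY = {"low": 0.2, "medium": 0.5, "high": 0.8}


@dataclass
class Idea:
    name: str         # hypothetical backlog entry title
    probability: str  # "low", "medium", or "high"
    impact: float     # predicted change in the target metric, in any consistent unit


def expected_impact(idea: Idea) -> float:
    """Assumed scoring rule: probability of success times predicted impact."""
    return PROBABILITY[idea.probability] * idea.impact


def prioritize(ideas: list[Idea]) -> list[Idea]:
    """Return ideas sorted from highest to lowest expected impact."""
    return sorted(ideas, key=expected_impact, reverse=True)


# Illustrative numbers only, not real Bisq data.
backlog = [
    Idea("Idea A", "high", 100.0),
    Idea("Idea B", "low", 500.0),
    Idea("Idea C", "medium", 150.0),
]

for idea in prioritize(backlog):
    print(f"{idea.name}: {expected_impact(idea):.1f}")
```

With these made-up numbers, the low-probability "Idea B" still ranks first because its predicted impact is large. A plain product like this does not separate long-lasting effects from one-hit wonders, so that judgment still has to go into the impact estimate itself.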

Create a hypothesis, e.g.:

If successful, [VARIABLE] will increase by [IMPACT], because [ASSUMPTIONS].
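As a small illustration, the template above can be filled in programmatically so that no part of the hypothesis is left blank. This is only a sketch: the `render_hypothesis` helper is hypothetical, and the example assumption text is made up for demonstration.

```python
HYPOTHESIS_TEMPLATE = (
    "If successful, {variable} will increase by {impact}, because {assumptions}."
)


def render_hypothesis(variable: str, impact: str, assumptions: str) -> str:
    """Fill in the hypothesis template, rejecting empty fields."""
    fields = {"variable": variable, "impact": impact, "assumptions": assumptions}
    missing = [name for name, value in fields.items() if not value.strip()]
    if missing:
        raise ValueError(f"hypothesis is incomplete, missing: {', '.join(missing)}")
    return HYPOTHESIS_TEMPLATE.format(**fields)


example = render_hypothesis(
    variable="viral/weekly active users",
    impact="1.5%",
    assumptions="a referral prompt makes sharing easier",  # made-up assumption
)
print(example)
```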

Look at:

- Quantitative data. Previous experiments, surrounding data, funnel data
- Qualitative data. Surveys, forum, arbitration tickets, user testing recordings
- Secondary sources. Networking, blogs, competitor observation, case studies

Create an experiment issue:

See the experiment issue template and other experiment issues for guidance and inspiration.

What do we really need to do to test our assumption?

- Results. Was the experiment a success or a failure? Be prepared for a lot of failures in order to get to the successes.
- Impact. How close were you to your prediction(s)? Whether or not the experiment was a success, what results or effects did it produce?
- Cause. The most important question you can ask is: why did you see the result that you did?

Update and close the GitHub issue as soon as you’ve finished analyzing.
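The "Impact" question above, how close the result was to the prediction, can be made concrete with a simple ratio. The sketch below is one possible way to record it; the `ExperimentResult` class and the numbers are hypothetical, and no success threshold is assumed because this document does not define one.

```python
from dataclasses import dataclass


@dataclass
class ExperimentResult:
    predicted_impact: float  # impact stated in the hypothesis
    actual_impact: float     # impact measured after the experiment
    cause: str               # your explanation of why the result occurred


def prediction_ratio(result: ExperimentResult) -> float:
    """Actual impact as a fraction of predicted impact (1.0 means an exact prediction)."""
    if result.predicted_impact == 0:
        raise ValueError("predicted impact must be non-zero")
    return result.actual_impact / result.predicted_impact


def summarize(result: ExperimentResult) -> str:
    """One-line summary suitable for pasting into the experiment issue."""
    ratio = prediction_ratio(result)
    return f"Achieved {ratio:.0%} of predicted impact. Cause: {result.cause}"


# Illustrative numbers only.
result = ExperimentResult(predicted_impact=2.0, actual_impact=1.0, cause="to be analyzed")
print(summarize(result))
```

Recording the ratio alongside the cause for every experiment makes it easier to see, over time, whether predictions are systematically too optimistic.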