Fine-tuning a Stable Diffusion model on a limited amount of VRAM using a ControlNet. A dataset of Pokemon pictures with BLIP captions was modified to fine-tune Stable Diffusion 1.5. Sketch-like masks are generated from the original training set, and the goal is to teach the original model to take scribbles (together with text prompts) as conditioning for the generation of new Pokemon.

You will need:

- python (see pyproject.toml for full version)
- Git
- Make
- a .secrets file with the required secrets and credentials
- load environment variables from .env
- A GPU with more than 8 GB of VRAM
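The VRAM requirement can be checked before starting a training run. The snippet below is a minimal sketch, not part of this repository: it assumes an NVIDIA GPU with `nvidia-smi` on the PATH, and the function names and the 8 GB threshold constant are illustrative.

```python
import subprocess
from typing import List

# Assumption: "more than 8 GB" is read as more than 8 * 1024 MiB.
MIN_VRAM_MIB = 8 * 1024


def parse_vram_mib(nvidia_smi_output: str) -> List[int]:
    """Parse one total-memory value (in MiB) per line, one line per GPU."""
    return [int(line.strip()) for line in nvidia_smi_output.splitlines() if line.strip()]


def query_vram_mib() -> List[int]:
    """Ask nvidia-smi for the total memory of every visible GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_vram_mib(result.stdout)


def has_enough_vram(vram_mib: List[int], minimum: int = MIN_VRAM_MIB) -> bool:
    """True if at least one GPU reports strictly more than the minimum."""
    return any(total > minimum for total in vram_mib)


if __name__ == "__main__":
    try:
        totals = query_vram_mib()
    except (FileNotFoundError, subprocess.CalledProcessError):
        totals = []  # nvidia-smi missing or failed: treat as no usable GPU
    print("GPUs (MiB):", totals, "| enough VRAM:", has_enough_vram(totals))
```

Drivers may report slightly less than the nominal card size, so a card sold as 8 GB will usually fail this strict check, which matches the "more than 8 GB" wording.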

Clone this repository (requires git ssh keys)

git clone --recursive [email protected]:caetas/FineTune_SD.git

cd FineTune_SD

Install dependencies

conda create -y -n python3.9 python=3.9

conda activate python3.9

or, to create the environment from the provided environment.yml file

conda env create -f environment.yml

conda activate python3.9

Then set up the virtual environment using the Makefile recipe

(finetune_sd) $ make setup-all

The dataset can be downloaded from the following link. The .parquet file should be moved to the data/raw directory.
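Before running the preparation step, it can help to confirm that the file landed in the right place. This is a small sketch using only the standard library; the helper name is hypothetical and the only thing taken from this README is the data/raw location.

```python
from pathlib import Path
from typing import List


def find_parquet_files(raw_dir: str = "data/raw") -> List[Path]:
    """Return the .parquet files found directly inside raw_dir, sorted by name."""
    directory = Path(raw_dir)
    if not directory.is_dir():
        return []
    return sorted(directory.glob("*.parquet"))


if __name__ == "__main__":
    files = find_parquet_files()
    if files:
        print("Found dataset file(s):", ", ".join(str(f) for f in files))
    else:
        print("No .parquet file found in data/raw; download the dataset first.")
```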

To process the images and generate the masks, please run the following command:

python prepare_dataset.py

The processed dataset will be stored in the data/processed folder.
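To give an idea of what a sketch-like mask is, here is a deliberately simple illustration in pure Python. It is not the method used by prepare_dataset.py (which is not shown here and most likely relies on an image library); it only assumes that a mask is a white-on-black line drawing marking where pixel intensity changes sharply. The function name and threshold are illustrative.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels in 0..255, stored row by row


def sketch_mask(gray: Image, threshold: int = 40) -> Image:
    """Return a white-on-black mask marking strong intensity changes."""
    height, width = len(gray), len(gray[0])
    mask = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Forward differences; pixels on the last row/column compare with themselves.
            right = gray[y][min(x + 1, width - 1)]
            below = gray[min(y + 1, height - 1)][x]
            gradient = abs(right - gray[y][x]) + abs(below - gray[y][x])
            if gradient >= threshold:
                mask[y][x] = 255
    return mask


if __name__ == "__main__":
    # Toy 4x4 image: dark left half, bright right half.
    toy = [[0, 0, 255, 255] for _ in range(4)]
    for row in sketch_mask(toy):
        print(row)  # each row is [0, 255, 0, 0]: a vertical line at the boundary
```

Each training example would then pair the original picture and its caption with a mask of this kind as the conditioning image.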

Run the following commands:

chmod +x control_execute.sh

bash train_script.sh

NOTE: You can skip the first command after the first execution.
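The contents of train_script.sh are not reproduced here. As a rough sketch of what a low-VRAM launch can look like, the snippet below assembles a command for the ControlNet training example from the Diffusers repository (train_controlnet.py), using memory-saving flags that script accepts. The model id, paths, and numeric values are placeholders, and whether this project uses the same flags is an assumption.

```python
import shlex
from typing import List


def build_train_command(
    base_model: str,
    data_dir: str,
    output_dir: str,
    batch_size: int = 1,
    grad_accum: int = 4,
) -> List[str]:
    """Assemble an `accelerate launch` command with memory-saving options."""
    return [
        "accelerate", "launch", "train_controlnet.py",
        f"--pretrained_model_name_or_path={base_model}",
        f"--train_data_dir={data_dir}",
        f"--output_dir={output_dir}",
        "--resolution=512",
        # Small batches plus accumulation keep peak memory low.
        f"--train_batch_size={batch_size}",
        f"--gradient_accumulation_steps={grad_accum}",
        # Trade extra compute for lower activation memory.
        "--gradient_checkpointing",
        # 8-bit optimizer states (requires the bitsandbytes package).
        "--use_8bit_adam",
        "--set_grads_to_none",
        "--mixed_precision=fp16",
    ]


if __name__ == "__main__":
    command = build_train_command(
        base_model="path/or/hub-id/of/stable-diffusion-1.5",  # placeholder
        data_dir="data/processed",
        output_dir="models/controlnet",  # hypothetical output folder
    )
    print(shlex.join(command))
```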

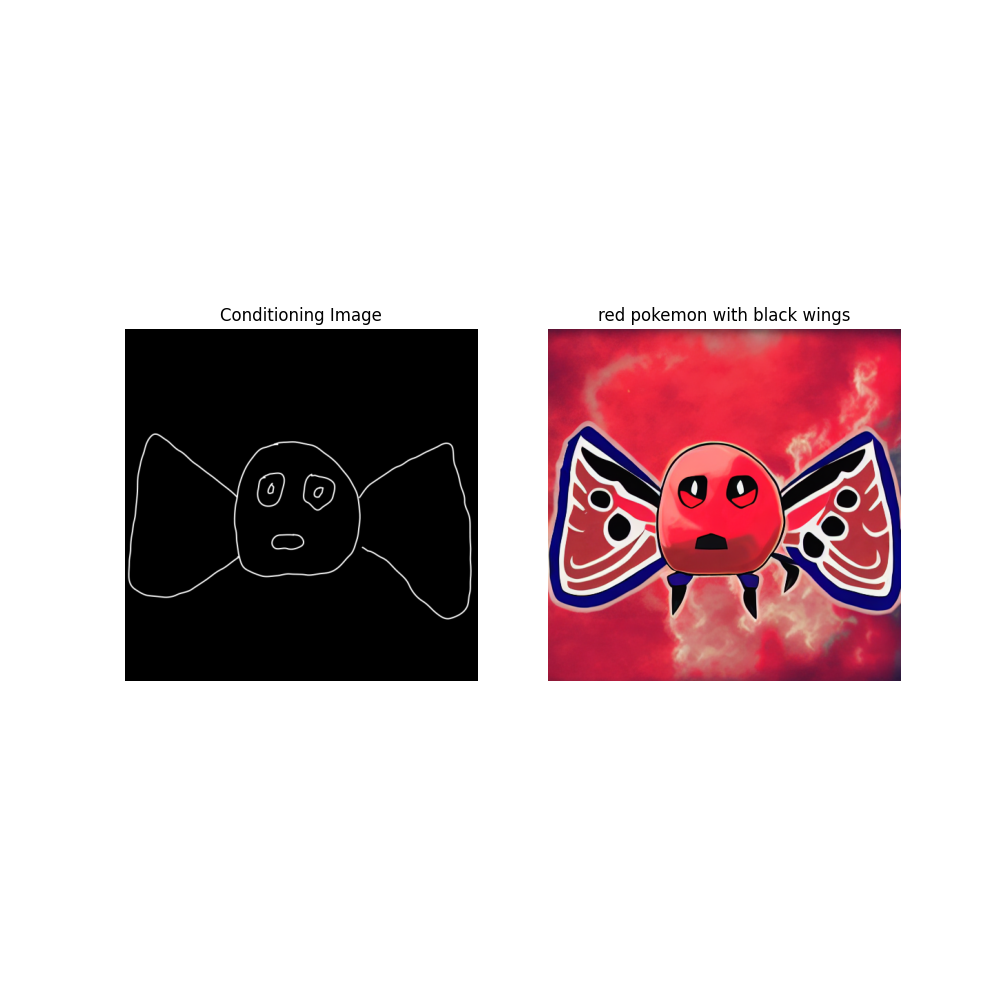

Although my input sketch is very rudimentary, the trained network can follow the provided textual and visual instructions to generate a new (very ugly) Pokemon.

You can check more examples in the reports/figures folder.

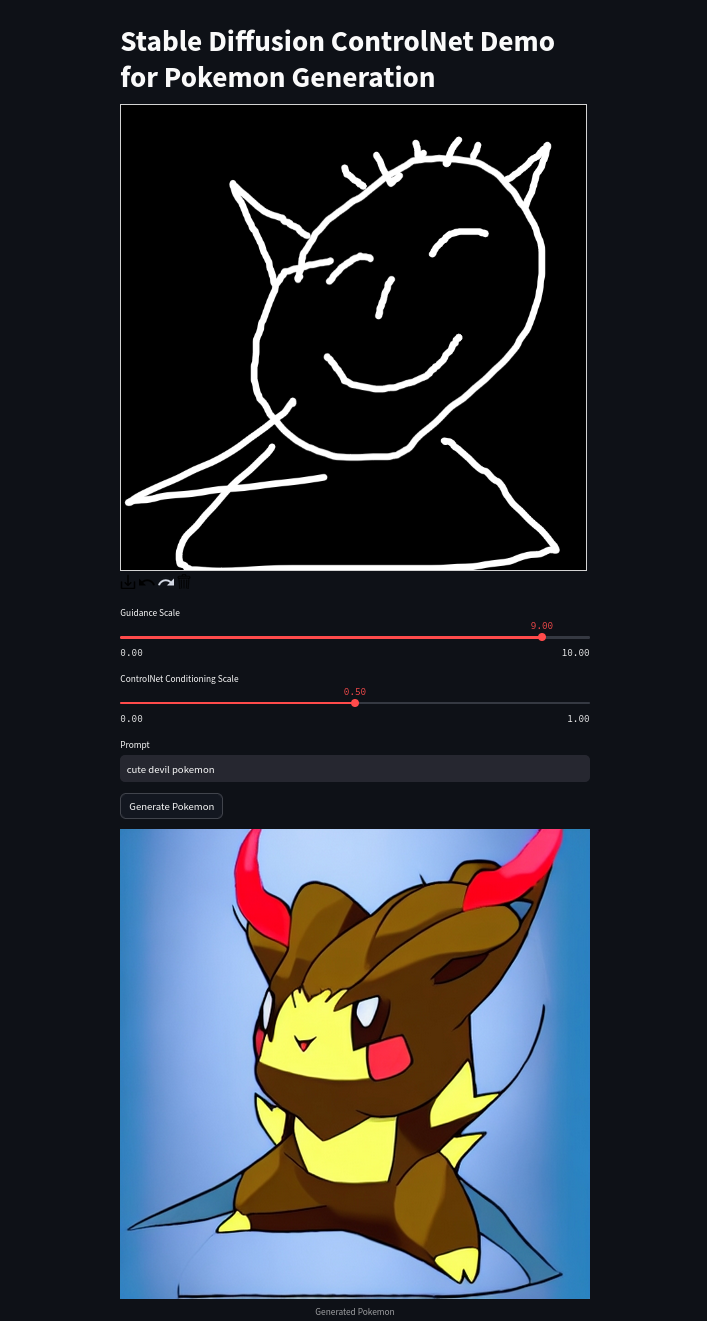

With a Streamlit app, you can draw your own sketch of a Pokemon and ask the trained ControlNet to generate an image based on your sketch and a prompt. The influence of the prompt and of the ControlNet can be adjusted with sliders.
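In the Diffusers ControlNet pipeline, two call arguments play the roles described above: `guidance_scale` weights the text prompt and `controlnet_conditioning_scale` weights the sketch. The following is a minimal sketch of how two slider values could be passed through; that app.py maps its sliders this way is an assumption, and the function names, value ranges, and model paths are illustrative.

```python
from typing import Any, Dict


def build_generation_kwargs(prompt: str, prompt_weight: float, sketch_weight: float) -> Dict[str, Any]:
    """Map two slider values onto Diffusers pipeline call arguments."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {
        "prompt": prompt,
        # Higher values push the image closer to the text prompt.
        "guidance_scale": max(1.0, float(prompt_weight)),
        # Multiplier applied to the ControlNet outputs; clamped to an illustrative 0..2 range.
        "controlnet_conditioning_scale": min(max(float(sketch_weight), 0.0), 2.0),
    }


def generate(sketch, controlnet_path: str, base_model: str, **slider_values):
    """Run one generation; heavy imports stay local so the helper above works without a GPU stack."""
    import torch
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline

    controlnet = ControlNetModel.from_pretrained(controlnet_path, torch_dtype=torch.float16)
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        base_model, controlnet=controlnet, torch_dtype=torch.float16
    )
    pipe.enable_model_cpu_offload()  # lowers VRAM use; requires the accelerate package
    kwargs = build_generation_kwargs(**slider_values)
    return pipe(image=sketch, **kwargs).images[0]
```

A call such as `generate(sketch_image, "path/to/trained/controlnet", "path/to/base/model", prompt="a green bird pokemon", prompt_weight=7.5, sketch_weight=1.0)` would return a PIL image; both paths are placeholders.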

streamlit run app.py

The interface should look like this:

Full documentation is available here: docs/.

For more information on the Diffusers library implementation of ControlNet, you can visit the original tutorials.

This code was adapted from the aforementioned tutorial and from the training scripts contained in the Diffusers Repository, more precisely in the examples folder.

See the Developer guidelines for more information.

Contributions of any kind are welcome. Please read CONTRIBUTING.md for details and the process for submitting pull requests to us.

See the Changelog for more information.

Thank you for improving the security of the project. Please see the Security Policy for more information.

No license has been specified for this project.

See LICENSE for more details.

If you publish work that uses Fine-Tuning Stable Diffusion, please cite Fine-Tuning Stable Diffusion as follows:

@misc{FineTune_SD,
  author = {None},
  title = {Fine-Tuning a Stable Diffusion model on a limited amount of VRAM.},
  year = {2023},
}