[ECCV 24] AdaCLIP: Adapting CLIP with Hybrid Learnable Prompts for Zero-Shot Anomaly Detection.

by Yunkang Cao, Jiangning Zhang, Luca Frittoli, Yuqi Cheng, Weiming Shen, Giacomo Boracchi

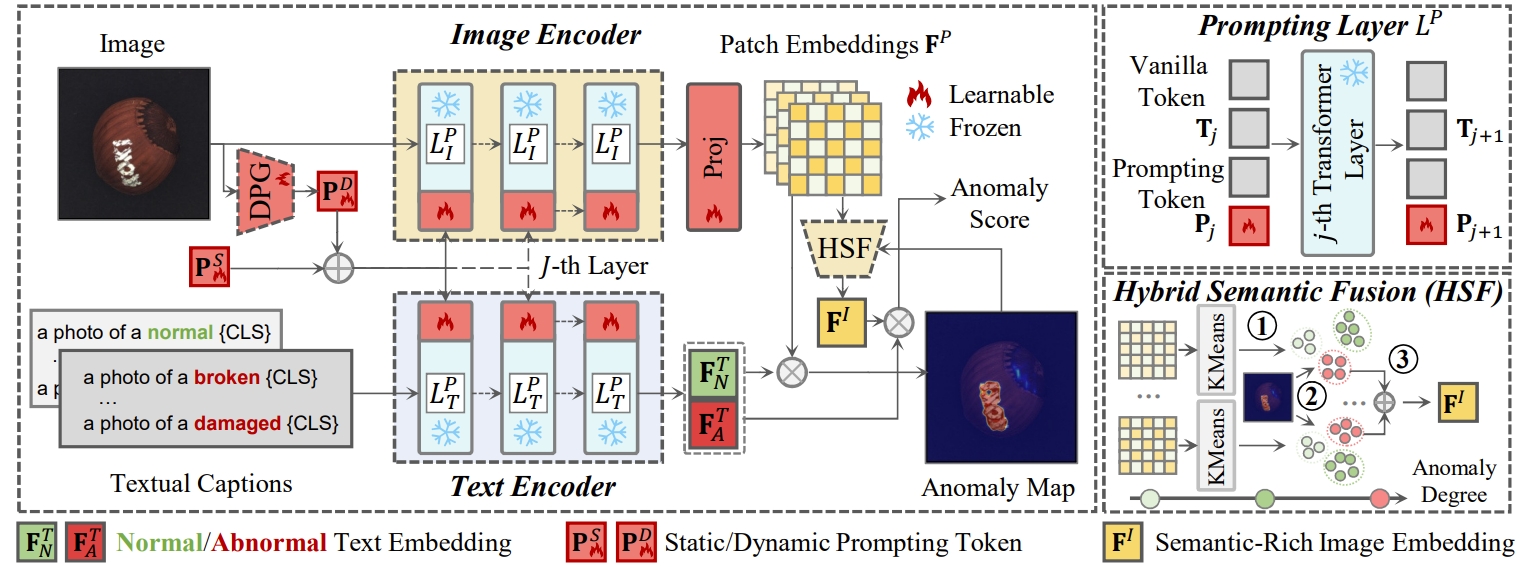

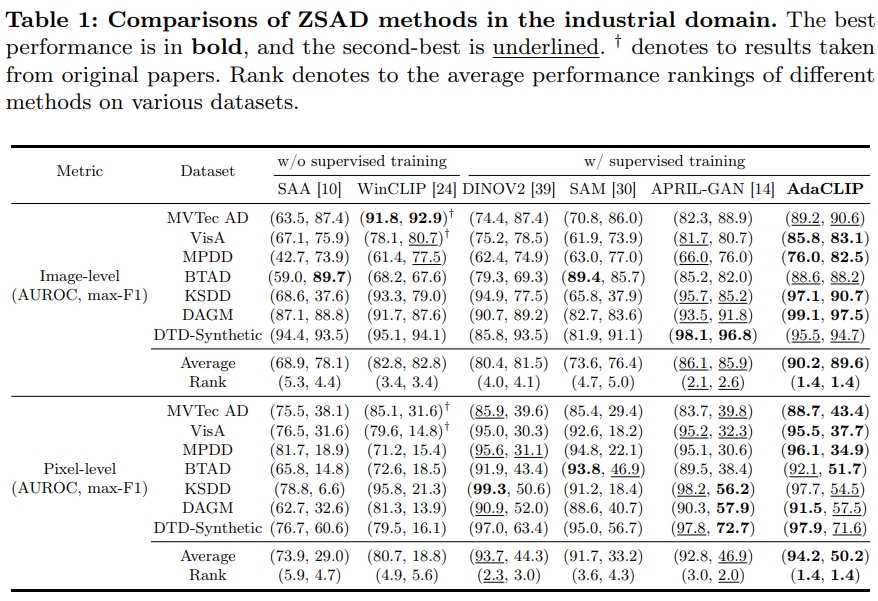

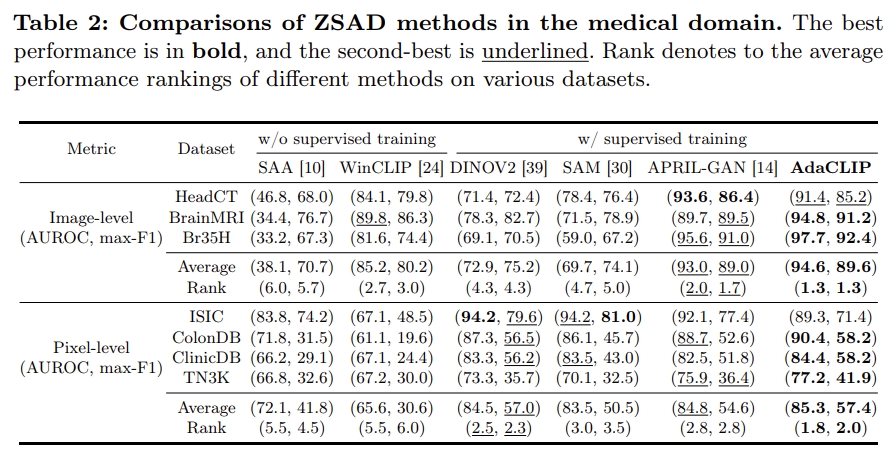

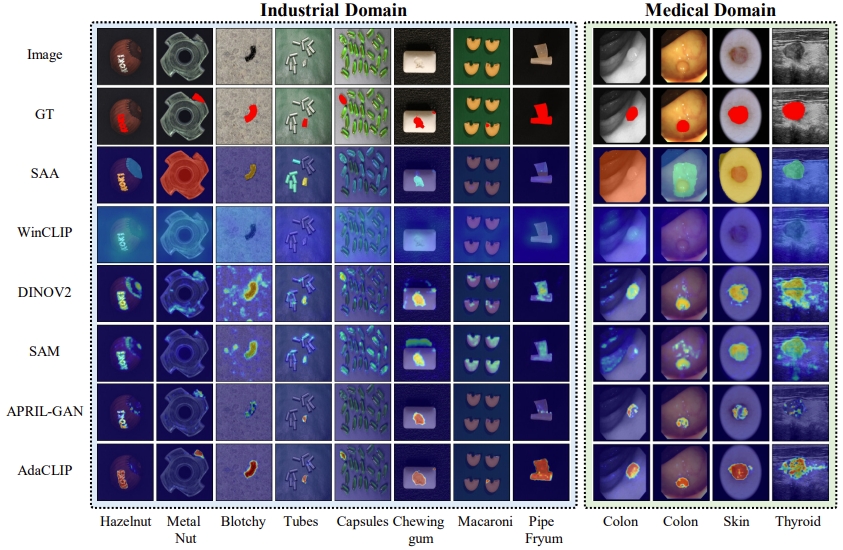

Zero-shot anomaly detection (ZSAD) targets the identification of anomalies within images from arbitrary novel categories. This study introduces AdaCLIP for the ZSAD task, leveraging a pre-trained vision-language model (VLM), CLIP. AdaCLIP incorporates learnable prompts into CLIP and optimizes them through training on auxiliary annotated anomaly detection data. Two types of learnable prompts are proposed: *static* and *dynamic*. Static prompts are shared across all images, serving to preliminarily adapt CLIP for ZSAD. In contrast, dynamic prompts are generated for each test image, providing CLIP with dynamic adaptation capabilities. The combination of static and dynamic prompts is referred to as hybrid prompts, and yields enhanced ZSAD performance. Extensive experiments conducted across 14 real-world anomaly detection datasets from industrial and medical domains indicate that AdaCLIP outperforms other ZSAD methods and can generalize better to different categories and even domains. Finally, our analysis highlights the importance of diverse auxiliary data and optimized prompts for enhanced generalization capacity.
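As a rough illustration of the static/dynamic distinction described above, here is a toy sketch in plain Python. It is not the repository's implementation: the function names, the linear projection, and the choice to combine the two prompts by element-wise addition are all assumptions made for illustration, and in the real model these values would be learned rather than fixed.

```python
def dynamic_prompt(image_feature, projection):
    """Map one image's feature vector to a prompt vector (matrix-vector product).

    `projection` is a hypothetical weight matrix given as a list of rows.
    """
    return [sum(w * x for w, x in zip(row, image_feature)) for row in projection]


def hybrid_prompt(static_prompt, image_feature, projection):
    """Combine the shared static prompt with an image-specific dynamic prompt.

    Element-wise addition is an assumption of this sketch; the text above only
    says that the two prompt types are combined.
    """
    dyn = dynamic_prompt(image_feature, projection)
    return [s + d for s, d in zip(static_prompt, dyn)]


if __name__ == "__main__":
    static = [1.0, 2.0]                    # shared across all images
    projection = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical weights
    for feature in ([0.5, -1.0], [0.0, 0.0]):
        # The static part stays the same; the dynamic part changes per image.
        print(hybrid_prompt(static, feature, projection))
```

The point of the sketch is only that `static` is identical for every image while the dynamic term is recomputed from each image's features.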

- The description of the training set in our paper is inaccurate. By default, we train on MVTec AD & ColonDB; for evaluation on MVTec AD & ColonDB, we train on VisA & ClinicDB.

To set up the AdaCLIP environment, follow one of the methods below:

- Clone this repo:

git clone https://github.com/caoyunkang/AdaCLIP.git && cd AdaCLIP

- You can use our provided installation script for an automated setup:

sh install.sh

- If you prefer to construct the experimental environment manually, follow these steps:

conda create -n AdaCLIP python=3.9.5 -y

conda activate AdaCLIP

pip install torch==1.10.1+cu111 torchvision==0.11.2+cu111 torchaudio==0.10.1 -f https://download.pytorch.org/whl/cu111/torch_stable.html

pip install tqdm tensorboard setuptools==58.0.4 opencv-python scikit-image scikit-learn matplotlib seaborn ftfy regex numpy==1.26.4

pip install gradio # Optional, for app

- Remember to update the dataset root in config.py according to your preference:

DATA_ROOT = '../datasets' # Original setting

Please download our processed visual anomaly detection datasets to your DATA_ROOT as needed.

Note: some links are still being processed.

| Dataset | Google Drive | Baidu Drive | Task |

|---|---|---|---|

| MVTec AD | Google Drive | Baidu Drive | Anomaly Detection & Localization |

| VisA | Google Drive | Baidu Drive | Anomaly Detection & Localization |

| MPDD | Google Drive | Baidu Drive | Anomaly Detection & Localization |

| BTAD | Google Drive | Baidu Drive | Anomaly Detection & Localization |

| KSDD | Google Drive | Baidu Drive | Anomaly Detection & Localization |

| DAGM | Google Drive | Baidu Drive | Anomaly Detection & Localization |

| DTD-Synthetic | Google Drive | Baidu Drive | Anomaly Detection & Localization |

| Dataset | Google Drive | Baidu Drive | Task |

|---|---|---|---|

| HeadCT | Google Drive | Baidu Drive | Anomaly Detection |

| BrainMRI | Google Drive | Baidu Drive | Anomaly Detection |

| Br35H | Google Drive | Baidu Drive | Anomaly Detection |

| ISIC | Google Drive | Baidu Drive | Anomaly Localization |

| ColonDB | Google Drive | Baidu Drive | Anomaly Localization |

| ClinicDB | Google Drive | Baidu Drive | Anomaly Localization |

| TN3K | Google Drive | Baidu Drive | Anomaly Localization |
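After downloading, it can help to confirm that the folders you expect actually sit under your DATA_ROOT before starting a long run. The following is a minimal sketch, not part of the repository; the function name and the folder names passed to it are placeholders, so adjust them to however the archives unpack on your machine.

```python
from pathlib import Path


def find_missing_datasets(data_root, expected_dirs):
    """Return the names in expected_dirs that are not directories under data_root."""
    root = Path(data_root)
    return [name for name in expected_dirs if not (root / name).is_dir()]


if __name__ == "__main__":
    # Hypothetical folder names; the real ones depend on the downloaded archives.
    missing = find_missing_datasets("../datasets", ["mvtec", "visa"])
    if missing:
        print("Missing dataset folders:", ", ".join(missing))
    else:
        print("All expected dataset folders found.")
```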

To use your custom dataset, follow these steps:

- Refer to the instructions in ./data_preprocess to generate the JSON file for your dataset.

- Use ./dataset/base_dataset.py to construct your own dataset.
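To give a feel for what generating such a JSON file might involve, here is a hedged sketch. The directory layout (`<root>/<class>/normal` and `<root>/<class>/anomaly`), the record fields (`img_path`, `cls_name`, `anomaly`), and the `meta.json` file name are assumptions made for illustration only; the scripts in ./data_preprocess define the format the code actually expects, so treat them as the reference.

```python
import json
from pathlib import Path


def build_metadata(dataset_root):
    """Collect one record per PNG under <root>/<class>/<normal|anomaly>/.

    The layout and field names are hypothetical, not the repository's schema.
    """
    root = Path(dataset_root)
    records = []
    for class_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        for label_name, label in (("normal", 0), ("anomaly", 1)):
            label_dir = class_dir / label_name
            if not label_dir.is_dir():
                continue  # this class has no images with this label
            for img in sorted(label_dir.glob("*.png")):
                records.append({
                    "img_path": img.relative_to(root).as_posix(),
                    "cls_name": class_dir.name,
                    "anomaly": label,
                })
    return records


def write_metadata(dataset_root, out_name="meta.json"):
    """Write the records as JSON inside the dataset root and return the path."""
    out_path = Path(dataset_root) / out_name
    out_path.write_text(json.dumps(build_metadata(dataset_root), indent=2))
    return out_path
```

Paths are stored relative to the dataset root so the file stays valid if the dataset folder is moved.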

We offer various pre-trained weights on different auxiliary datasets.

Please download the pre-trained weights to ./weights.

| Pre-trained Datasets | Google Drive | Baidu Drive |

|---|---|---|

| MVTec AD & ClinicDB | Google Drive | Baidu Drive |

| VisA & ColonDB | Google Drive | Baidu Drive |

| All Datasets Mentioned Above | Google Drive | Baidu Drive |

By default, we use MVTec AD & ColonDB for training and VisA for validation:

CUDA_VISIBLE_DEVICES=0 python train.py --save_fig True --training_data mvtec colondb --testing_data visa

Alternatively, for evaluation on MVTec AD & ColonDB, we use VisA & ClinicDB for training and MVTec AD for validation:

CUDA_VISIBLE_DEVICES=0 python train.py --save_fig True --training_data visa clinicdb --testing_data mvtec

Because training uses half precision (FP16), it can occasionally be unstable. We recommend running the training process several times and keeping the model that performs best on the validation set as the final model.
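Picking the best of several runs can be done by hand, but a small helper makes the choice explicit. The sketch below assumes you have recorded a validation score for each run yourself; the `ckpt` and `auroc` keys, the checkpoint paths, and the numbers are placeholders, not outputs of train.py or real results.

```python
def select_best_run(runs, metric="auroc"):
    """Return the run (a dict) with the highest value for the given validation metric."""
    if not runs:
        raise ValueError("no runs to choose from")
    return max(runs, key=lambda run: run[metric])


if __name__ == "__main__":
    # Placeholder values for illustration only.
    runs = [
        {"ckpt": "run1.pth", "auroc": 0.50},
        {"ckpt": "run2.pth", "auroc": 0.70},
        {"ckpt": "run3.pth", "auroc": 0.60},
    ]
    print("Best checkpoint:", select_best_run(runs)["ckpt"])
```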

To construct a robust ZSAD model for demonstration, we also train our AdaCLIP on all AD datasets mentioned above:

CUDA_VISIBLE_DEVICES=0 python train.py --save_fig True \

--training_data \

br35h brain_mri btad clinicdb colondb \

dagm dtd headct isic mpdd mvtec sdd tn3k visa \

--testing_data mvtec

Manually select the models that perform best on the validation set and place them in the weights/ directory. Then, run the following testing script:

sh test.sh

If you want to test on a single image, you can refer to test_single_image.sh:

CUDA_VISIBLE_DEVICES=0 python test.py --testing_model image --ckt_path weights/pretrained_all.pth --save_fig True \

--image_path asset/img.png --class_name candle --save_name test.png

Due to differences in the versions used, the reported performance may differ slightly from the detection performance obtained with the provided pre-trained weights. Some categories may show higher performance while others may show lower.

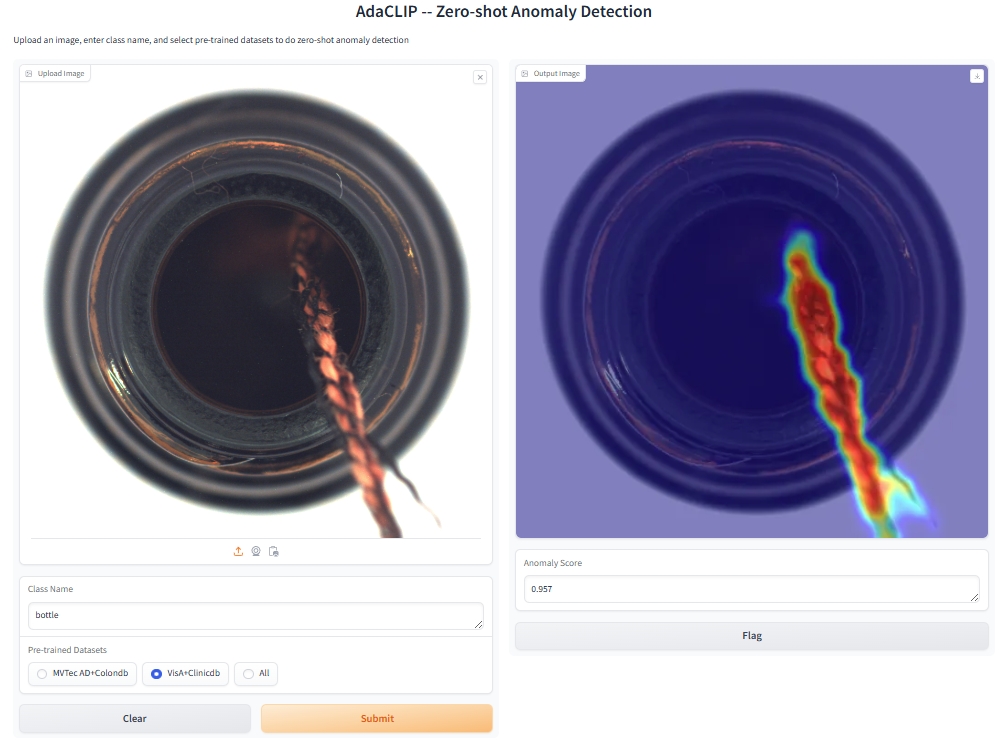

To run the demo application, use the following command:

python app.py

Or visit our Online Demo for a quick start. The three pre-trained weights mentioned are available there. Feel free to test them with your own data!

Please note that we currently do not have a GPU environment for our Hugging Face Space, so inference for a single image may take approximately 50 seconds.

Our work is largely inspired by the following projects. Thanks for their admirable contributions.

If you find this project helpful for your research, please consider citing the following BibTeX entry.

@inproceedings{AdaCLIP,

title={AdaCLIP: Adapting CLIP with Hybrid Learnable Prompts for Zero-Shot Anomaly Detection},

author={Cao, Yunkang and Zhang, Jiangning and Frittoli, Luca and Cheng, Yuqi and Shen, Weiming and Boracchi, Giacomo},

booktitle={European Conference on Computer Vision},

year={2024}

}