Small businesses need to centralize data from many sources and analyze it. The solution has to be affordable, because they cannot bear high infrastructure costs, and accessible, because they cannot dedicate a technical team to maintaining it.

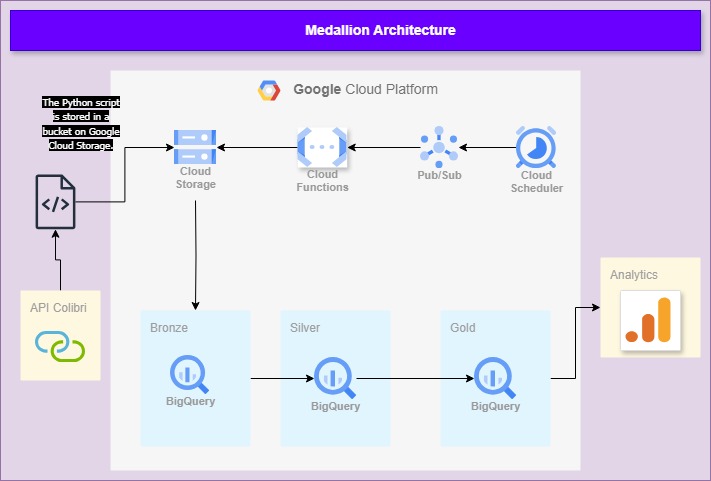

My application is a data pipeline that automates the collection, processing, and analysis of restaurant data. It implements a data lakehouse architecture on Google Cloud Platform (GCP) using Python and SQL scripts, Cloud Functions, and Cloud Scheduler. The data is stored in BigQuery in the layers of the medallion architecture (bronze, silver, and gold) for further analysis. Power BI is used to build interactive dashboards and reports that support decision-making.

- Data Extraction and Processing: A Python program collects the data via API, then transforms and cleans it. This program is deployed in Google Cloud Functions.

- Scheduling: Google Cloud Scheduler and Pub/Sub trigger the Python program in Cloud Functions, so that it runs at the specified times.

- Data Storage: Processed data is stored in BigQuery, structured in bronze, silver, and gold layers for efficient management.

- Data Analysis: The data can be accessed and analyzed in Power BI, enabling insightful business decisions.
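The components above can be sketched as a single entry point. This is a minimal sketch, not the repository's actual code: it assumes a Pub/Sub-triggered background function, where the event carries a base64-encoded `data` field. The names `fetch_records`, `clean_records`, `run_pipeline`, and the `run_date` message field are hypothetical, and the stubbed rows stand in for the real API response.

```python
import base64
import json
from datetime import datetime, timezone


def fetch_records(run_date):
    """Hypothetical stand-in for the API call; returns raw rows for one day."""
    return [
        {"order_id": "1", "total": " 25.50 ", "date": run_date},
        {"order_id": "2", "total": None, "date": run_date},
    ]


def clean_records(rows):
    """Drop rows without a total and cast the remaining totals to float."""
    cleaned = []
    for row in rows:
        if row["total"] is None:
            continue
        cleaned.append({**row, "total": float(row["total"].strip())})
    return cleaned


def run_pipeline(event, context=None):
    """Entry point in the shape of a Pub/Sub-triggered background function."""
    payload = {}
    if event.get("data"):
        # Pub/Sub delivers the message body base64-encoded.
        payload = json.loads(base64.b64decode(event["data"]).decode("utf-8"))
    # Fall back to today's date (UTC) when the message does not name one.
    run_date = payload.get("run_date") or datetime.now(timezone.utc).date().isoformat()
    rows = clean_records(fetch_records(run_date))
    # A real deployment would load `rows` into the bronze layer in BigQuery here.
    return {"run_date": run_date, "rows": rows}
```

In this arrangement, Cloud Scheduler would publish a message to a Pub/Sub topic on a cron schedule, and the function subscribed to that topic would run once per message.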

For a detailed step-by-step guide on how to deploy this pipeline, please refer to my article on Medium.

To learn how to create layers in BigQuery, read this article.
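As a rough illustration of what the layers amount to, the sketch below builds the BigQuery SQL for one dataset per layer and for promoting a table from one layer to the next. It assumes one dataset per layer named `bronze`, `silver`, and `gold`; the helper names, the `US` location, and the deduplicating `SELECT DISTINCT` are assumptions for illustration, not the transformations used in this project.

```python
LAYERS = ("bronze", "silver", "gold")


def layer_ddl(project_id, location="US"):
    """Build one CREATE SCHEMA statement per medallion layer."""
    return [
        f"CREATE SCHEMA IF NOT EXISTS `{project_id}.{layer}` "
        f"OPTIONS (location = '{location}');"
        for layer in LAYERS
    ]


def promote_sql(project_id, table, source="bronze", target="silver"):
    """Build a statement that rebuilds `table` in `target` from `source`."""
    return (
        f"CREATE OR REPLACE TABLE `{project_id}.{target}.{table}` AS\n"
        f"SELECT DISTINCT * FROM `{project_id}.{source}.{table}`;"
    )


if __name__ == "__main__":
    # Print the statements so they can be pasted into the BigQuery console.
    for statement in layer_ddl("my-project"):
        print(statement)
    print(promote_sql("my-project", "orders"))
```

The generated statements can be run in the BigQuery console or saved as scheduled queries; `my-project` and `orders` are placeholders.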

For guidance on how to securely store passwords in Secret Manager, check out this article.
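For orientation, reading a stored password back at runtime might look like the sketch below. It assumes the `google-cloud-secret-manager` client library is installed and that the function's service account is allowed to access the secret; the helper names and any project or secret IDs passed to them are hypothetical.

```python
def secret_version_path(project_id, secret_id, version="latest"):
    """Build the resource name Secret Manager expects for a secret version."""
    return f"projects/{project_id}/secrets/{secret_id}/versions/{version}"


def read_secret(project_id, secret_id, version="latest"):
    """Fetch a secret's payload as text."""
    # Imported lazily so this module still loads where the library is absent.
    from google.cloud import secretmanager

    client = secretmanager.SecretManagerServiceClient()
    response = client.access_secret_version(
        request={"name": secret_version_path(project_id, secret_id, version)}
    )
    return response.payload.data.decode("utf-8")
```

Reading the password this way keeps it out of the source code and out of the function's environment variables.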

To understand how to read data from the Colibri API, refer to this article.

If you need to review specific parts of the code, I’ve documented them separately. You can find the documentation here:

Thank you very much for your attention. If you'd like to adapt this for your own project, feel free to do so. To get started, you can fork the repository by clicking the "Fork" button at the top right of the GitHub page. If you found this useful, please consider giving it a star on GitHub!