First things first! You can install the SDK with pip as follows:

    pip install observers

Or, if you want to use other LLM providers through AISuite or LiteLLM, install the matching extra:

    pip install observers[aisuite] # or observers[litellm]

To observe document information, use our Docling integration:

    pip install observers[docling]

For OpenTelemetry, install the following:

    pip install observers[opentelemetry]

We differentiate between observers and stores. Observers wrap generative AI APIs (like OpenAI or llama-index) and track their interactions. Stores are classes that sync these observations to different storage backends (like DuckDB or Hugging Face datasets).
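To make the observer/store split concrete, here is a minimal, library-independent sketch of the pattern. `Record`, `InMemoryStore`, `observe`, and `fake_llm` are hypothetical names used only for illustration; they are not the SDK's classes or schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List, Optional, Protocol


@dataclass
class Record:
    """One observed interaction (hypothetical shape, not the SDK's schema)."""

    model: str
    messages: List[Dict[str, str]]
    response: Any = None
    error: Optional[str] = None
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class Store(Protocol):
    """Anything that can persist a record counts as a store."""

    def add(self, record: Record) -> None: ...


class InMemoryStore:
    """Toy storage backend that keeps records in a list."""

    def __init__(self) -> None:
        self.records: List[Record] = []

    def add(self, record: Record) -> None:
        self.records.append(record)


def observe(call: Callable[..., Any], store: Store) -> Callable[..., Any]:
    """Wrap a chat-style callable so every call is written to the store."""

    def wrapped(*, model: str, messages: List[Dict[str, str]], **kwargs: Any) -> Any:
        record = Record(model=model, messages=messages)
        try:
            record.response = call(model=model, messages=messages, **kwargs)
            return record.response
        except Exception as exc:
            record.error = str(exc)
            raise
        finally:
            # Runs on success and on failure, so errors are recorded too.
            store.add(record)

    return wrapped


def fake_llm(*, model: str, messages: List[Dict[str, str]], **kwargs: Any) -> str:
    """Stand-in for a real provider call."""
    return f"echo: {messages[-1]['content']}"


store = InMemoryStore()
chat = observe(fake_llm, store)
reply = chat(model="demo-model", messages=[{"role": "user", "content": "Tell me a joke."}])
```

The observer never needs to know where records end up, which is why the same wrapper can be paired with different storage backends.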

To get started, you can run the code below. It sends requests to a HF serverless endpoint and logs the interactions into a Hub dataset, using the default store DatasetsStore. The dataset will be pushed to your personal workspace (http://hf.co/{your_username}). To learn how to configure stores, go to the next section.

    from observers.observers import wrap_openai
    from observers.stores import DuckDBStore
    from openai import OpenAI

    store = DuckDBStore()

    openai_client = OpenAI()
    client = wrap_openai(openai_client, store=store)

    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": "Tell me a joke."}],
    )

- OpenAI and every other LLM provider that implements the OpenAI API message format

- AISuite, an LLM router by Andrew Ng that maps to many LLM API providers through a uniform interface.

- LiteLLM, a library that lets you use many different LLM APIs through a uniform interface.

- Docling, which parses documents and exports them to the desired format with ease and speed. This observer lets you wrap Docling and push popular document formats (PDF, DOCX, PPTX, XLSX, Images, HTML, AsciiDoc & Markdown) to the different stores.

The wrap_openai function allows you to wrap any OpenAI-compliant LLM provider. Take a look at the Ollama example for more details.
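As a rough sketch of what wrapping an OpenAI-compatible provider can look like, the snippet below points the OpenAI client at a local Ollama server. It assumes Ollama is running with its OpenAI-compatible endpoint at `http://localhost:11434/v1`, that the `llama3.1` model has been pulled, and that the wrapped client returns the provider's response unchanged. The helper names `make_ollama_client` and `tell_joke` are hypothetical.

```python
def make_ollama_client(base_url: str = "http://localhost:11434/v1"):
    """Return an observed OpenAI client that talks to a local Ollama server."""
    # Imported lazily so this module loads even where the packages are absent.
    from observers.observers import wrap_openai
    from observers.stores import DuckDBStore
    from openai import OpenAI

    # Ollama ignores the API key, but the OpenAI client needs a non-empty value.
    openai_client = OpenAI(base_url=base_url, api_key="ollama")
    return wrap_openai(openai_client, store=DuckDBStore())


def tell_joke(client, model: str = "llama3.1") -> str:
    """Send one chat request through the given client and return the reply text."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": "Tell me a joke."}],
    )
    return response.choices[0].message.content
```

With a server running, `tell_joke(make_ollama_client())` would send the request and record it in the DuckDB store.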

| Store | Example | Annotate | Local | Free | UI filters | SQL filters |
|---|---|---|---|---|---|---|
| Hugging Face Datasets | example | ❌ | ❌ | ✅ | ✅ | ✅ |
| DuckDB | example | ❌ | ✅ | ✅ | ❌ | ✅ |
| Argilla | example | ✅ | ❌ | ✅ | ✅ | ❌ |
| OpenTelemetry | example | ︖* | ︖* | ︖* | ︖* | ︖* |
| Honeycomb | example | ✅ | ❌ | ✅ | ✅ | ✅ |

\* For the OpenTelemetry store, these features depend on the provider you use.

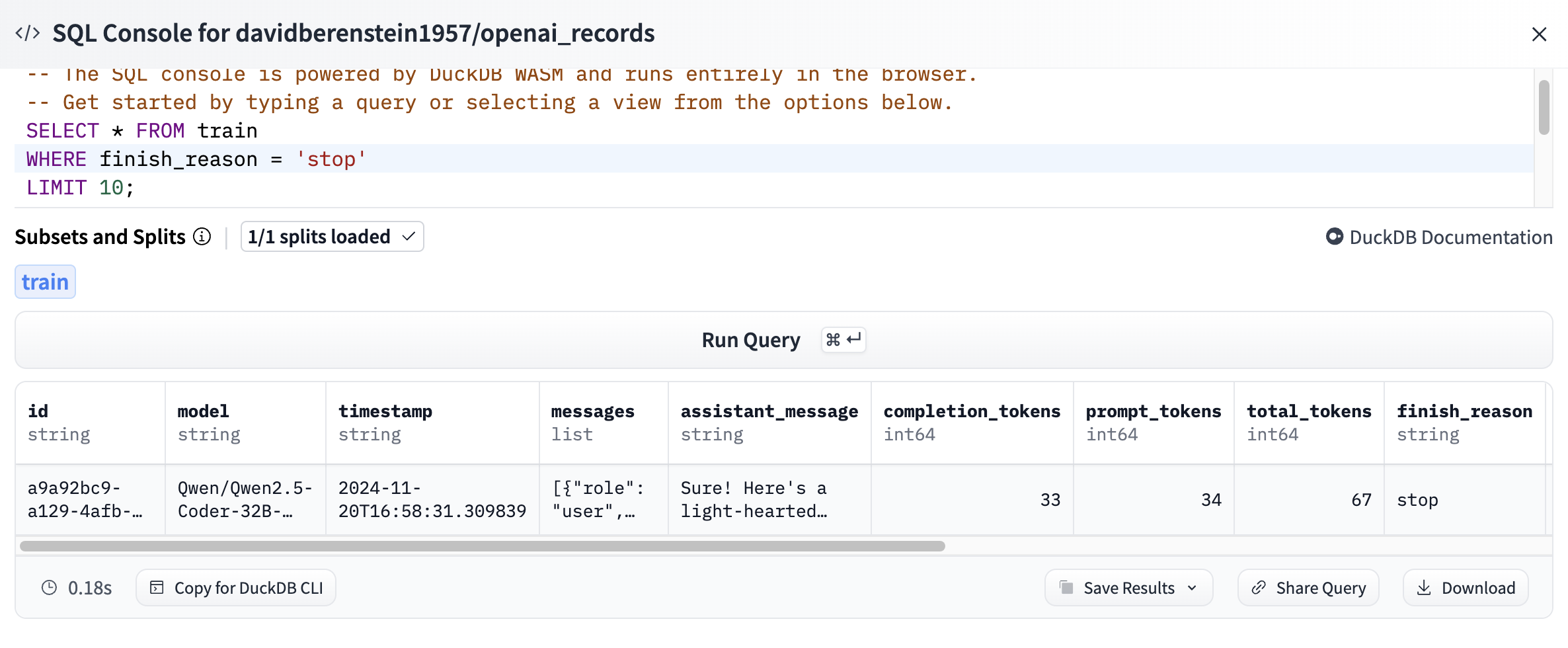

To view and query Hugging Face Datasets, you can use the Hugging Face Datasets Viewer. You can find example datasets on the Hugging Face Hub. From the viewer, you can query the dataset using SQL or using your own UI. Take a look at the example for more details.

The default store is DuckDB and can be viewed and queried using the DuckDB CLI. Take a look at the example for more details.
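The store can also be read from Python. The following is a minimal sketch, assuming the `duckdb` Python package is installed and that the store file is `store.db` with an `openai_records` table containing `id`, `model`, `timestamp`, and `error` columns, as in the CLI session in this section. `build_query` and `fetch_records` are hypothetical helper names.

```python
def build_query(table: str = "openai_records", limit: int = 10) -> str:
    """Build a SELECT over a few of the columns visible in the CLI output."""
    # Table names cannot be bound as parameters, so validate before interpolating.
    if not table.isidentifier():
        raise ValueError(f"unexpected table name: {table!r}")
    return (
        'SELECT id, model, "timestamp", error '
        f'FROM {table} ORDER BY "timestamp" DESC LIMIT {int(limit)}'
    )


def fetch_records(db_path: str = "store.db", limit: int = 10):
    """Return the most recent records from the DuckDB store as a list of tuples."""
    import duckdb  # lazy import; requires `pip install duckdb`

    # Open read-only so inspecting the store cannot modify it.
    con = duckdb.connect(db_path, read_only=True)
    try:
        return con.execute(build_query(limit=limit)).fetchall()
    finally:
        con.close()
```

Opening the file read-only is a deliberate choice here: it keeps an inspection script from altering the recorded observations.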

    > duckdb store.db
    > from openai_records limit 10;
    ┌──────────────────────┬──────────────────────┬──────────────────────┬──────────────────────┬───┬─────────┬──────────────────────┬───────────┐
    │ id │ model │ timestamp │ messages │ … │ error │ raw_response │ synced_at │
    │ varchar │ varchar │ timestamp │ struct("role" varc… │ │ varchar │ json │ timestamp │
    ├──────────────────────┼──────────────────────┼──────────────────────┼──────────────────────┼───┼─────────┼──────────────────────┼───────────┤
    │ 89cb15f1-d902-4586… │ Qwen/Qwen2.5-Coder… │ 2024-11-19 17:12:3… │ [{'role': user, 'c… │ … │ │ {"id": "", "choice… │ │
    │ 415dd081-5000-4d1a… │ Qwen/Qwen2.5-Coder… │ 2024-11-19 17:28:5… │ [{'role': user, 'c… │ … │ │ {"id": "", "choice… │ │
    │ chatcmpl-926 │ llama3.1 │ 2024-11-19 17:31:5… │ [{'role': user, 'c… │ … │ │ {"id": "chatcmpl-9… │ │
    ├──────────────────────┴──────────────────────┴──────────────────────┴──────────────────────┴───┴─────────┴──────────────────────┴───────────┤
    │ 3 rows 16 columns (7 shown) │
    └────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┘

The Argilla Store allows you to sync your observations to Argilla. To use it, you first need to create a free Argilla deployment on Hugging Face. Take a look at the example for more details.

The OpenTelemetry "Store" allows you to sync your observations to any provider that supports OpenTelemetry! Examples are provided for Honeycomb, but any provider that supplies OpenTelemetry compatible environment variables should Just Work®, and your queries will be executed as usual in your provider, against trace data coming from Observers.
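As an illustration of what "OpenTelemetry compatible environment variables" typically means, the sketch below sets the standard OTLP exporter variables for Honeycomb from Python. The variable names come from the OpenTelemetry specification and the `x-honeycomb-team` header from Honeycomb's ingestion API; which of them Observers actually reads is an assumption here, and `otel_env`, the service name, and the API key value are placeholders.

```python
import os
from typing import Dict


def otel_env(endpoint: str, api_key: str, service_name: str = "observers-demo") -> Dict[str, str]:
    """Build standard OTLP exporter settings (hypothetical helper)."""
    return {
        # Where the OTLP exporter sends trace data.
        "OTEL_EXPORTER_OTLP_ENDPOINT": endpoint,
        # Honeycomb authenticates through this header; other providers differ.
        "OTEL_EXPORTER_OTLP_HEADERS": f"x-honeycomb-team={api_key}",
        # Name under which the traces are grouped in the provider.
        "OTEL_SERVICE_NAME": service_name,
    }


# Placeholder key: replace with a real one, ideally loaded from a secret store.
env = otel_env("https://api.honeycomb.io", api_key="YOUR_API_KEY")
os.environ.update(env)
```

Setting these in the shell before launching the process works equally well and keeps credentials out of source code.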

See CONTRIBUTING.md