This repo hosts the official implementation of our paper "General Facial Representation Learning in a Visual-Linguistic Manner".

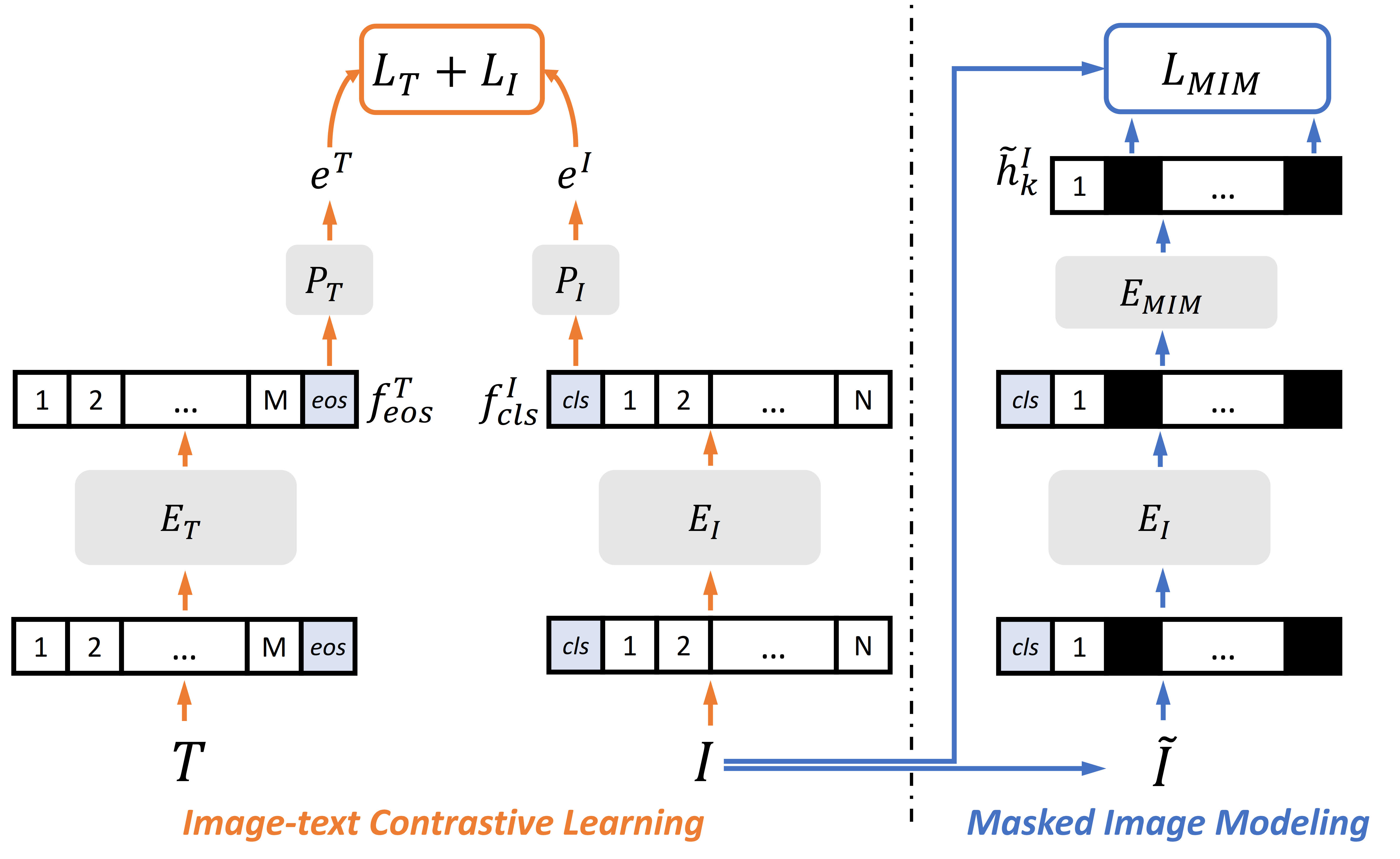

FaRL provides powerful pre-trained transformer backbones for face analysis tasks. Its pre-training combines image-text contrastive learning with masked image modeling.

After pre-training, the image encoder can be used for various downstream face tasks.
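To illustrate the image-text contrastive half of the objective, here is a minimal pure-Python sketch of a CLIP-style symmetric InfoNCE loss. It is a generic textbook formulation, not FaRL's training code: the fixed temperature of 0.07 and the toy embeddings are assumptions, and the masked image modeling half is not shown.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def log_softmax_at(logits: Vector, index: int) -> float:
    """Numerically stable log-softmax of `logits`, evaluated at `index`."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return logits[index] - log_sum


def image_text_contrastive_loss(
    image_emb: List[Vector], text_emb: List[Vector], temperature: float = 0.07
) -> float:
    """Symmetric InfoNCE: (image i, text i) is the positive pair, all others are negatives.

    The temperature value is an assumption (a common CLIP-style default).
    """
    imgs = [l2_normalize(v) for v in image_emb]
    txts = [l2_normalize(v) for v in text_emb]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and text j, divided by the temperature
    logits = [
        [sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    # Image-to-text: each row should assign most probability to its own caption.
    loss_i2t = -sum(log_softmax_at(logits[i], i) for i in range(n)) / n
    # Text-to-image: each column should assign most probability to its own image.
    cols = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2i = -sum(log_softmax_at(cols[j], j) for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2
```

Matched image/text pairs give a loss close to zero, while a batch whose captions are swapped gives a large loss; minimizing this pulls each face image toward its own caption in the shared embedding space.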

We offer the following pre-trained transformer backbones.

| Model Name | Data | Epochs | Link |
|---|---|---|---|
| FaRL-Base-Patch16-LAIONFace20M-ep16 (used in paper) | LAION Face 20M | 16 | BLOB |
| FaRL-Base-Patch16-LAIONFace20M-ep64 | LAION Face 20M | 64 | BLOB |
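Assuming "Patch16" in the model names follows the usual ViT convention of splitting the image into non-overlapping 16×16 patches, the input resolution determines how many patch tokens the encoder processes. The helper below is an illustrative sketch of that arithmetic; `num_patch_tokens` is a hypothetical name, and any extra class token is not counted.

```python
def num_patch_tokens(input_resolution: int, patch_size: int = 16) -> int:
    """Patch tokens for a square image split into non-overlapping patches.

    Assumes "Patch16" means 16x16 patches (ViT naming convention).
    """
    if input_resolution % patch_size != 0:
        raise ValueError("input_resolution must be divisible by patch_size")
    side = input_resolution // patch_size  # patches along one edge
    return side * side


if __name__ == "__main__":
    for res in (224, 448):
        print(res, num_patch_tokens(res))
```

Going from 224 to 448 quadruples the token count (196 to 784), which is one reason higher-resolution fine-tuning costs noticeably more GPU memory.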

We run all downstream training on 8 NVIDIA GPUs (32 GB). Our code supports other GPU configurations, but we cannot guarantee the same performance on them. Before setting up, install these packages:

Then, install the remaining dependencies with `pip install -r ./requirement.txt`.

Please refer to `./DS_DATA.md` to prepare the training and testing data for downstream tasks.

Download the pre-trained backbones into `./blob/checkpoint/`.
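The config files reference backbones as `BLOB('checkpoint/<name>.pth')` and the launch command passes `--blob_root ./blob`, so a quick pre-flight check can confirm the downloads landed where training will look. This sketch assumes `BLOB(...)` resolves relative to `--blob_root`, and assumes the ep64 file name follows the same pattern as the ep16 one; `checkpoint_path` and `missing_checkpoints` are hypothetical helpers, not part of the repo.

```python
from pathlib import Path
from typing import List

# Expected checkpoint file names. The ep16 name appears in the config files;
# the ep64 name is assumed to follow the same pattern.
CHECKPOINTS = [
    "FaRL-Base-Patch16-LAIONFace20M-ep16.pth",
    "FaRL-Base-Patch16-LAIONFace20M-ep64.pth",
]


def checkpoint_path(blob_root: str, name: str) -> Path:
    """Assumed mapping of BLOB('checkpoint/<name>') onto --blob_root."""
    return Path(blob_root) / "checkpoint" / name


def missing_checkpoints(blob_root: str = "./blob") -> List[Path]:
    """Return the expected checkpoint paths that are not present on disk."""
    paths = [checkpoint_path(blob_root, n) for n in CHECKPOINTS]
    return [p for p in paths if not p.is_file()]


if __name__ == "__main__":
    for p in missing_checkpoints():
        print(f"missing: {p}")
```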

Now you can launch downstream training and evaluation with the following command template:

```shell
python -m blueprint.run \
  farl/experiments/{task}/{train_config_file}.yaml \
  --exp_name farl --blob_root ./blob
```
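If you prefer to drive runs from Python (for example, to sweep over several configs), the template can be filled in programmatically. This is a sketch with hypothetical helper names (`build_command`, `launch`); it only assembles the documented argument list and hands it to `subprocess`, and it assumes it is run from the repo root with `python` on the PATH.

```python
import shlex
import subprocess
from typing import List


def build_command(task: str, train_config_file: str,
                  exp_name: str = "farl", blob_root: str = "./blob") -> List[str]:
    """Fill in the command template above and return it as an argv list."""
    config = f"farl/experiments/{task}/{train_config_file}.yaml"
    return ["python", "-m", "blueprint.run", config,
            "--exp_name", exp_name, "--blob_root", blob_root]


def launch(task: str, train_config_file: str) -> int:
    """Run one training/evaluation job and return its exit code."""
    return subprocess.run(build_command(task, train_config_file), check=False).returncode


if __name__ == "__main__":
    # Print the command instead of running it, so this is safe to try.
    cmd = build_command("face_parsing", "train_lapa_farl-b-ep16_448_refinebb")
    print(shlex.join(cmd))
```

Passing an argument list (rather than one shell string) avoids quoting issues if a config path ever contains spaces.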

The repo includes config files under `./farl/experiments/` for fine-tuning on face parsing and face alignment.

For example, to launch face parsing training on LaPa by fine-tuning our FaRL-Base-Patch16-LAIONFace20M-ep16 backbone, run:

```shell
python -m blueprint.run \
  farl/experiments/face_parsing/train_lapa_farl-b-ep16_448_refinebb.yaml \
  --exp_name farl --blob_root ./blob
```

Similarly, to launch face alignment training on 300W by fine-tuning our FaRL-Base-Patch16-LAIONFace20M-ep16 backbone, run:

```shell
python -m blueprint.run \
  farl/experiments/face_alignment/train_ibug300w_farl-b-ep16_448_refinebb.yaml \
  --exp_name farl --blob_root ./blob
```
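The config file names in this README appear to encode their main settings: in `train_lapa_farl-b-ep16_448_refinebb.yaml`, `lapa` is the dataset, `b` the backbone size (base), `ep16` the pre-training epochs, `448` the input resolution, and `refinebb` the `refine_backbone` optimizer. This pattern is inferred from the file names, not documented; the `freezebb` suffix and the `l`/`h` size letters below are guesses, and `parse_config_name` is a hypothetical helper. The regex also accepts the `-448` variant seen in the CelebAMask-HQ config name.

```python
import re
from dataclasses import dataclass

# Inferred naming pattern. "freezebb" and the "l"/"h" size letters are assumptions.
_PATTERN = re.compile(
    r"^train_(?P<dataset>[a-z0-9]+)_farl-(?P<size>[blh])-ep(?P<epochs>\d+)"
    r"[-_](?P<res>\d+)_(?P<mode>refinebb|freezebb)\.yaml$"
)
_SIZES = {"b": "base", "l": "large", "h": "huge"}
_MODES = {"refinebb": "refine_backbone", "freezebb": "freeze_backbone"}


@dataclass
class ConfigName:
    dataset: str
    model_type: str
    pretrain_epochs: int
    input_resolution: int
    optimizer_name: str


def parse_config_name(filename: str) -> ConfigName:
    """Split a config file name into the settings it appears to encode."""
    m = _PATTERN.match(filename)
    if m is None:
        raise ValueError(f"unrecognized config name: {filename}")
    return ConfigName(
        dataset=m["dataset"],
        model_type=_SIZES[m["size"]],
        pretrain_epochs=int(m["epochs"]),
        input_resolution=int(m["res"]),
        optimizer_name=_MODES[m["mode"]],
    )
```

This can be handy when naming your own configs consistently, since the parsed fields line up with the `model_type`, `input_resolution`, and `optimizer_name` keys in the config files.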

You can also create your own config files for training and evaluation. For example, you can customize a face parsing task on CelebAMask-HQ by editing the values below (remember to remove the comments before running):

```yaml
package: farl.experiments.face_parsing

class: blueprint.ml.DistributedGPURun

local_run:
  $PARSE('./trainers/celebm_farl.yaml',
    cfg_file=FILE,
    train_data_ratio=None, # The data ratio used for training. None means using 100% of the training data; 0.1 means using only 10%.
    batch_size=5, # The local batch size on each GPU.
    model_type='base', # The size of the pre-trained backbone. Supports 'base', 'large' or 'huge'.
    model_path=BLOB('checkpoint/FaRL-Base-Patch16-LAIONFace20M-ep16.pth'), # The path to the pre-trained backbone.
    input_resolution=448, # The input image resolution, e.g. 224, 448.
    head_channel=768, # The number of channels in the head.
    optimizer_name='refine_backbone', # The optimization method. Should be 'refine_backbone' or 'freeze_backbone'.
    enable_amp=False) # Whether to enable float16 in downstream training.
```

The following table shows the performance of our FaRL-Base-Patch16-LAIONFace20M-ep16 backbone (pre-trained for 16 epochs), both as reported in the paper (Paper) and as reproduced with this repo (Rep). The small differences between the two are due to code refactoring.

| Name | Task | Benchmark | Metric | Score (Paper/Rep) | Logs (Paper/Rep) |
|---|---|---|---|---|---|
| face_parsing/train_celebm_farl-b-ep16-448_refinebb.yaml | Face Parsing | CelebAMask-HQ | F1-mean ⇑ | 89.56/89.65 | Paper, Rep |
| face_parsing/train_lapa_farl-b-ep16_448_refinebb.yaml | Face Parsing | LaPa | F1-mean ⇑ | 93.88/93.86 | Paper, Rep |
| face_alignment/train_aflw19_farl-b-ep16_448_refinebb.yaml | Face Alignment | AFLW-19 (Full) | NME_diag ⇓ | 0.943/0.943 | Paper, Rep |
| face_alignment/train_ibug300w_farl-b-ep16_448_refinebb.yaml | Face Alignment | 300W (Full) | NME_inter-ocular ⇓ | 2.93/2.92 | Paper, Rep |
| face_alignment/train_wflw_farl-b-ep16_448_refinebb.yaml | Face Alignment | WFLW (Full) | NME_inter-ocular ⇓ | 3.96/3.98 | Paper, Rep |
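The tables report F1-mean for face parsing (higher is better, ⇑) and normalized mean error (NME) for face alignment (lower is better, ⇓). The sketch below shows the usual definitions of both; it is not this repo's evaluation code. Which classes enter the F1 mean, and which distance normalizes NME (inter-ocular for 300W and WFLW, a box diagonal for AFLW-19, judging by the metric names), are benchmark-specific, so they are parameters here. The reported scores appear to be scaled by 100.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def f1_mean(pred: Sequence[int], gt: Sequence[int], class_ids: Sequence[int]) -> float:
    """Average per-class F1 over flattened label maps (pixel counts pooled over the set)."""
    tp: Dict[int, int] = {c: 0 for c in class_ids}
    fp: Dict[int, int] = {c: 0 for c in class_ids}
    fn: Dict[int, int] = {c: 0 for c in class_ids}
    for p, g in zip(pred, gt):
        if p == g:
            if p in tp:
                tp[p] += 1
        else:
            if p in fp:
                fp[p] += 1  # pixel labeled p, but ground truth is a different class
            if g in fn:
                fn[g] += 1  # pixel of class g that the prediction missed
    scores = []
    for c in class_ids:
        denom = 2 * tp[c] + fp[c] + fn[c]
        if denom:  # skip classes absent from both prediction and ground truth
            scores.append(2 * tp[c] / denom)  # F1 = 2TP / (2TP + FP + FN)
    return sum(scores) / len(scores) if scores else 0.0


def nme(pred: List[Point], gt: List[Point], norm_dist: float) -> float:
    """Mean point-to-point landmark error divided by a normalization distance."""
    errors = [math.dist(p, q) for p, q in zip(pred, gt)]
    return sum(errors) / len(errors) / norm_dist


def inter_ocular_distance(gt: List[Point], left_corner: int, right_corner: int) -> float:
    """Distance between two eye-corner landmarks; the indices depend on the annotation scheme."""
    return math.dist(gt[left_corner], gt[right_corner])


def box_diagonal(width: float, height: float) -> float:
    """Diagonal length of a face bounding box (assumed normalizer for NME_diag)."""
    return math.hypot(width, height)
```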

Below we also report results for our new FaRL-Base-Patch16-LAIONFace20M-ep64 backbone, which is pre-trained for 64 epochs instead of 16 and shows further improvements on most tasks.

| Name | Task | Benchmark | Metric | Score | Logs |
|---|---|---|---|---|---|
| face_parsing/train_celebm_farl-b-ep64-448_refinebb.yaml | Face Parsing | CelebAMask-HQ | F1-mean ⇑ | 89.57 | Rep |
| face_parsing/train_lapa_farl-b-ep64_448_refinebb.yaml | Face Parsing | LaPa | F1-mean ⇑ | 94.04 | Rep |
| face_alignment/train_aflw19_farl-b-ep64_448_refinebb.yaml | Face Alignment | AFLW-19 (Full) | NME_diag ⇓ | 0.938 | Rep |
| face_alignment/train_ibug300w_farl-b-ep64_448_refinebb.yaml | Face Alignment | 300W (Full) | NME_inter-ocular ⇓ | 2.88 | Rep |
| face_alignment/train_wflw_farl-b-ep64_448_refinebb.yaml | Face Alignment | WFLW (Full) | NME_inter-ocular ⇓ | 3.88 | Rep |
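To make "most tasks" concrete, the snippet below compares the ep64 scores against the reproduced (Rep) ep16 scores from the two tables, taking each metric's direction (⇑ or ⇓) into account. The numbers are copied from the tables; the helper name `improved` is just for illustration.

```python
# (benchmark, higher_is_better, ep16 Rep score, ep64 score), copied from the tables.
RESULTS = [
    ("CelebAMask-HQ", True, 89.65, 89.57),
    ("LaPa", True, 93.86, 94.04),
    ("AFLW-19 (Full)", False, 0.943, 0.938),
    ("300W (Full)", False, 2.92, 2.88),
    ("WFLW (Full)", False, 3.98, 3.88),
]


def improved(higher_is_better: bool, before: float, after: float) -> bool:
    """True if `after` is better than `before` under the metric's direction."""
    return after > before if higher_is_better else after < before


if __name__ == "__main__":
    wins = [name for name, hib, before, after in RESULTS if improved(hib, before, after)]
    print(f"{len(wins)}/{len(RESULTS)} benchmarks improved: {', '.join(wins)}")
```

This prints `4/5 benchmarks improved: LaPa, AFLW-19 (Full), 300W (Full), WFLW (Full)`. CelebAMask-HQ is the exception: 89.57 is slightly below the ep16 Rep score (89.65) but slightly above the ep16 Paper score (89.56).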

For help or issues concerning the code and the released models, feel free to submit a GitHub issue, or contact Hao Yang ([email protected]).

If you find our work helpful, please consider citing:

```bibtex
@article{zheng2021farl,
  title={General Facial Representation Learning in a Visual-Linguistic Manner},
  author={Zheng, Yinglin and Yang, Hao and Zhang, Ting and Bao, Jianmin and Chen, Dongdong and Huang, Yangyu and Yuan, Lu and Chen, Dong and Zeng, Ming and Wen, Fang},
  journal={arXiv preprint arXiv:2112.03109},
  year={2021}
}
```

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos is subject to those third parties' policies.