Hungarian algorithm + Kalman filter multitarget tracker implementation.
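In a tracker of this kind, two steps run on every frame. A Kalman filter predicts where each existing track should be, and the Hungarian algorithm then assigns the new detections to those predictions at minimum total cost. The sketch below covers only the assignment step. It assumes the cost is the plain Euclidean distance between predicted track centers and detection centers. `Point2`, `distanceCost` and `hungarian` are hypothetical names, not this project's API, and the project's own cost function may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Point2 { double x, y; };

// Hypothetical cost: cost[i][j] = Euclidean distance between predicted track i and detection j.
std::vector<std::vector<double>> distanceCost(const std::vector<Point2>& tracks,
                                              const std::vector<Point2>& detections) {
    std::vector<std::vector<double>> cost(tracks.size(), std::vector<double>(detections.size()));
    for (std::size_t i = 0; i < tracks.size(); ++i)
        for (std::size_t j = 0; j < detections.size(); ++j)
            cost[i][j] = std::hypot(tracks[i].x - detections[j].x, tracks[i].y - detections[j].y);
    return cost;
}

// Minimum-cost assignment (Hungarian / Kuhn-Munkres with dual potentials, O(N^3)).
// Returns result[i] = detection index assigned to track i, or -1 if track i stays unmatched.
std::vector<int> hungarian(const std::vector<std::vector<double>>& cost) {
    const int rows = static_cast<int>(cost.size());
    const int cols = rows ? static_cast<int>(cost[0].size()) : 0;
    const int n = std::max(rows, cols);
    // Pad to a square matrix with zero-cost dummy rows/columns.
    std::vector<std::vector<double>> a(n, std::vector<double>(n, 0.0));
    for (int i = 0; i < rows; ++i) {
        assert(static_cast<int>(cost[i].size()) == cols && "cost matrix must be rectangular");
        for (int j = 0; j < cols; ++j) a[i][j] = cost[i][j];
    }
    const double INF = std::numeric_limits<double>::infinity();
    std::vector<double> u(n + 1, 0.0), v(n + 1, 0.0);  // row and column potentials
    std::vector<int> p(n + 1, 0), way(n + 1, 0);       // p[j]: row matched to column j (1-based)
    for (int i = 1; i <= n; ++i) {
        p[0] = i;
        int j0 = 0;
        std::vector<double> minv(n + 1, INF);
        std::vector<bool> used(n + 1, false);
        do {  // grow the alternating tree until a free column is reached
            used[j0] = true;
            const int i0 = p[j0];
            double delta = INF;
            int j1 = 0;
            for (int j = 1; j <= n; ++j) {
                if (used[j]) continue;
                const double cur = a[i0 - 1][j - 1] - u[i0] - v[j];
                if (cur < minv[j]) { minv[j] = cur; way[j] = j0; }
                if (minv[j] < delta) { delta = minv[j]; j1 = j; }
            }
            for (int j = 0; j <= n; ++j) {  // shift potentials by the smallest reduced cost
                if (used[j]) { u[p[j]] += delta; v[j] -= delta; }
                else minv[j] -= delta;
            }
            j0 = j1;
        } while (p[j0] != 0);
        do {  // flip the augmenting path back to the root
            const int j1 = way[j0];
            p[j0] = p[j1];
            j0 = j1;
        } while (j0 != 0);
    }
    std::vector<int> result(rows, -1);
    for (int j = 1; j <= n; ++j)
        if (p[j] >= 1 && p[j] <= rows && j <= cols) result[p[j] - 1] = j - 1;
    return result;
}
```

Padding to a square matrix with zero-cost dummies lets the same routine handle more tracks than detections, or the reverse: any track matched to a dummy column comes back as `-1`. A practical tracker would also reject pairs whose distance exceeds a gating threshold; that step is left out here.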

- MobileNet SSD detection and tracking on low-resolution, low-quality video from a car DVR:

- Mouse tracking:

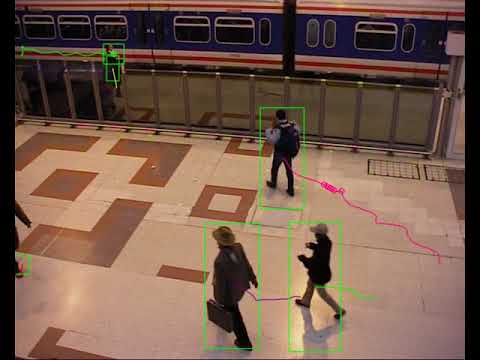

- Motion Detection and tracking:

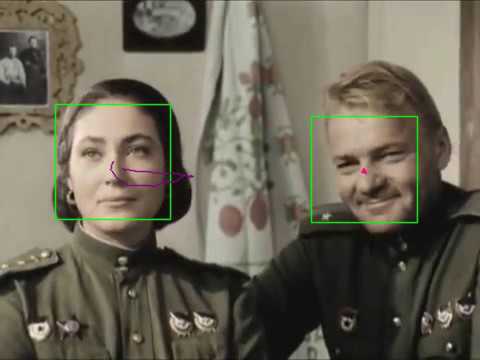

- Multiple face tracking:

- Simple abandoned object detector:

- Background subtraction: built-in Vibe, SuBSENSE and LOBSTER; MOG2 from OpenCV; MOG, GMG and CNT from opencv_contrib

- Foreground segmentation: contours

- Matching: Hungarian algorithm or an algorithm based on weighted bipartite graphs

- Tracking: linear or Unscented Kalman filter, either for the object's center or for its coordinates and size

- Optional local tracker (Lucas-Kanade optical flow) to smooth trajectories

- Tracking of lost objects and collision resolution: built-in DAT or STAPLE; KCF, MIL, MedianFlow, GOTURN, MOSSE or CSRT from opencv_contrib

- Haar face detector from OpenCV

- HOG and C4 pedestrian detectors

- MobileNet SSD detector with inference from OpenCV and models from chuanqi305/MobileNet-SSD

- YOLO and Tiny YOLO detectors from https://pjreddie.com/darknet/yolo/ (inference from opencv_dnn or from https://github.com/AlexeyAB/darknet )

- Simple abandoned object detector
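The feature list mentions a linear Kalman filter for the object's center. The sketch below illustrates that idea under a constant-velocity assumption, with x and y filtered independently and a piecewise-constant white-noise acceleration model. `Vec2`, `Kalman1D`, `CenterKalman`, the initial velocity variance of 100 and the noise parameters `q`/`r` are hypothetical choices; the project's filter, state layout and tuning may differ.

```cpp
#include <cassert>

struct Vec2 { double x, y; };

// Constant-velocity Kalman filter for one axis. State: [position, velocity].
struct Kalman1D {
    double pos, vel;       // state estimate
    double p00, p01, p11;  // covariance P (symmetric, so p10 == p01)
    double q;              // process noise: variance of the piecewise-constant acceleration
    double r;              // measurement noise variance

    // Initialise from the first measurement; velocity starts at 0 with a wide prior (assumed 100).
    Kalman1D(double z0, double q_, double r_)
        : pos(z0), vel(0.0), p00(r_), p01(0.0), p11(100.0), q(q_), r(r_) {}

    // x <- F x,  P <- F P F^T + Q,  with F = [[1, dt], [0, 1]].
    double predict(double dt) {
        assert(dt > 0.0);
        const double dt2 = dt * dt;
        pos += vel * dt;
        // p00 uses the old p01/p11 and p01 uses the old p11, hence this order.
        p00 += 2.0 * dt * p01 + dt2 * p11 + q * dt2 * dt2 / 4.0;
        p01 += dt * p11 + q * dt2 * dt / 2.0;
        p11 += q * dt2;
        return pos;
    }

    // Correct with a position measurement z (H = [1, 0]).
    void update(double z) {
        const double s = p00 + r;                 // innovation variance
        const double k0 = p00 / s, k1 = p01 / s;  // Kalman gain K = P H^T / s
        const double innovation = z - pos;
        pos += k0 * innovation;
        vel += k1 * innovation;
        // P <- (I - K H) P; p11 must use the old p01, so it is updated first.
        p11 -= k1 * p01;
        p01 *= 1.0 - k0;
        p00 *= 1.0 - k0;
    }
};

// Object center tracked with two independent per-axis filters.
struct CenterKalman {
    Kalman1D kx, ky;
    CenterKalman(Vec2 first, double q, double r) : kx(first.x, q, r), ky(first.y, q, r) {}
    Vec2 predict(double dt) { return {kx.predict(dt), ky.predict(dt)}; }
    void update(Vec2 z) { kx.update(z.x); ky.update(z.y); }
};
```

Each frame, a track calls `predict(dt)`, is matched against the detections, and calls `update(center)` if it was matched. Splitting the state into two 1D filters matches a single 4-state filter only when the x and y noise are uncorrelated. The "coordinates and size" option would add width and height (and their rates) to the state. OpenCV's `cv::KalmanFilter` implements the general matrix form.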

Build:

- Download the project sources

- Install CMake

- Install OpenCV (https://github.com/opencv/opencv) and OpenCV contrib (https://github.com/opencv/opencv_contrib) repositories

- Configure the project's CMakeLists.txt and set OpenCV_DIR.

- If opencv_contrib is not installed, disable the options USE_OCV_BGFG=OFF, USE_OCV_KCF=OFF and USE_OCV_UKF=OFF

- If you want to use the native Darknet YOLO detector with CUDA + cuDNN, set BUILD_YOLO_LIB=ON

- Go to the build directory and run make

Usage:

./MultitargetTracker <path to movie file> [--example]=<number of example 0..6> [--start_frame]=<start a video from this position> [--end_frame]=<play a video to this position> [--end_delay]=<delay in milliseconds after video ending> [--out]=<name of result video file> [--show_logs]=<show logs> [--gpu]=<use OpenCL>

./MultitargetTracker ../data/atrium.avi -e=1 -o=../data/atrium_motion.avi

Press:

* 'm' to toggle between play and pause. While the video is paused, press any key to get the next frame.

* Esc to exit the video

Params:

1. Movie file, for example ../data/atrium.avi

2. [Optional] Example number: 0 - MouseTracking, 1 - MotionDetector, 2 - FaceDetector, 3 - PedestrianDetector, 4 - MobileNet SSD detector, 5 - YOLO OpenCV detector, 6 - YOLO Darknet detector

-e=0 or --example=1

3. [Optional] Frame number at which to start playback

-sf=0 or --start_frame=1500

4. [Optional] Frame number at which to stop playback (0 plays to the end of the file)

-ef=0 or --end_frame=200

5. [Optional] Delay in milliseconds after video ending

-ed=0 or --end_delay=1000

6. [Optional] Name of result video file

-o=out.avi or --out=result.mp4

7. [Optional] Show tracker logs in the terminal

-sl=1 or --show_logs=0

8. [Optional] Use built-in OpenCL

-g=1 or --gpu=0
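All of the parameters above use either a `-key=value` or a `--key=value` form. The following minimal parser for that form is only an illustration. `parseArgs`, `ParsedArgs` and the `kAliases` table are hypothetical, and this is not the program's own argument handling.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical short -> long alias table built from the parameter list above.
const std::map<std::string, std::string> kAliases = {
    {"e", "example"}, {"sf", "start_frame"}, {"ef", "end_frame"}, {"ed", "end_delay"},
    {"o", "out"},     {"sl", "show_logs"},   {"g", "gpu"}};

struct ParsedArgs {
    std::string movie;                           // first positional argument
    std::map<std::string, std::string> options;  // long option name -> raw value
};

// Parses "-key=value" / "--key=value"; everything after the first '=' is the value.
// An option without '=' is stored with an empty value.
ParsedArgs parseArgs(const std::vector<std::string>& argv) {
    assert(!argv.empty() && "argv[0] is expected to be the program name");
    ParsedArgs result;
    for (std::size_t i = 1; i < argv.size(); ++i) {
        const std::string& arg = argv[i];
        if (arg.empty() || arg[0] != '-') {  // positional argument
            if (result.movie.empty()) result.movie = arg;
            continue;
        }
        const std::size_t dashes = (arg.size() > 1 && arg[1] == '-') ? 2 : 1;
        const std::size_t eq = arg.find('=');
        std::string key = (eq == std::string::npos) ? arg.substr(dashes)
                                                    : arg.substr(dashes, eq - dashes);
        const std::string value = (eq == std::string::npos) ? "" : arg.substr(eq + 1);
        const auto alias = kAliases.find(key);
        if (alias != kAliases.end()) key = alias->second;  // map short names to long ones
        result.options[key] = value;
    }
    return result;
}
```

For example, `../data/atrium.avi -e=1 -o=../data/atrium_motion.avi` yields the movie `../data/atrium.avi`, `example = "1"` and `out = "../data/atrium_motion.avi"`. In this sketch a doubled `=` (`--start_frame==1500`) would leave `=1500` as the value.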

Third-party libraries:

- OpenCV (and contrib): https://github.com/opencv/opencv and https://github.com/opencv/opencv_contrib

- Vibe: https://github.com/BelBES/VIBE

- SuBSENSE and LOBSTER: https://github.com/ethereon/subsense

- GTL: https://github.com/rdmpage/graph-template-library

- MWBM: https://github.com/rdmpage/maximum-weighted-bipartite-matching

- Pedestrians detector: https://github.com/sturkmen72/C4-Real-time-pedestrian-detection

- Non Maximum Suppression: https://github.com/Nuzhny007/Non-Maximum-Suppression

- MobileNet SSD models: https://github.com/chuanqi305/MobileNet-SSD

- YOLO models: https://pjreddie.com/darknet/yolo/

- Darknet inference: https://github.com/AlexeyAB/darknet

- GOTURN models: https://github.com/opencv/opencv_extra/tree/c4219d5eb3105ed8e634278fad312a1a8d2c182d/testdata/tracking

- DAT tracker: https://github.com/foolwood/DAT

- STAPLE tracker: https://github.com/xuduo35/STAPLE

GNU GPLv3: http://www.gnu.org/licenses/gpl-3.0.txt