This repository contains the code for the paper ScribeAgent: Towards Specialized Web Agents Using Production-Scale Workflow Data. We use a proprietary dataset of public Scribes to fine-tune LLMs into web agents. Check out our blog post and stay tuned for future releases!

Table of contents:

- Code

  - Data preprocessing

  - Fine-tuning open-source LLMs

  - Mind2Web and WebArena benchmark evaluation

- Data

  - Example workflows collected from Scribe and the processed data

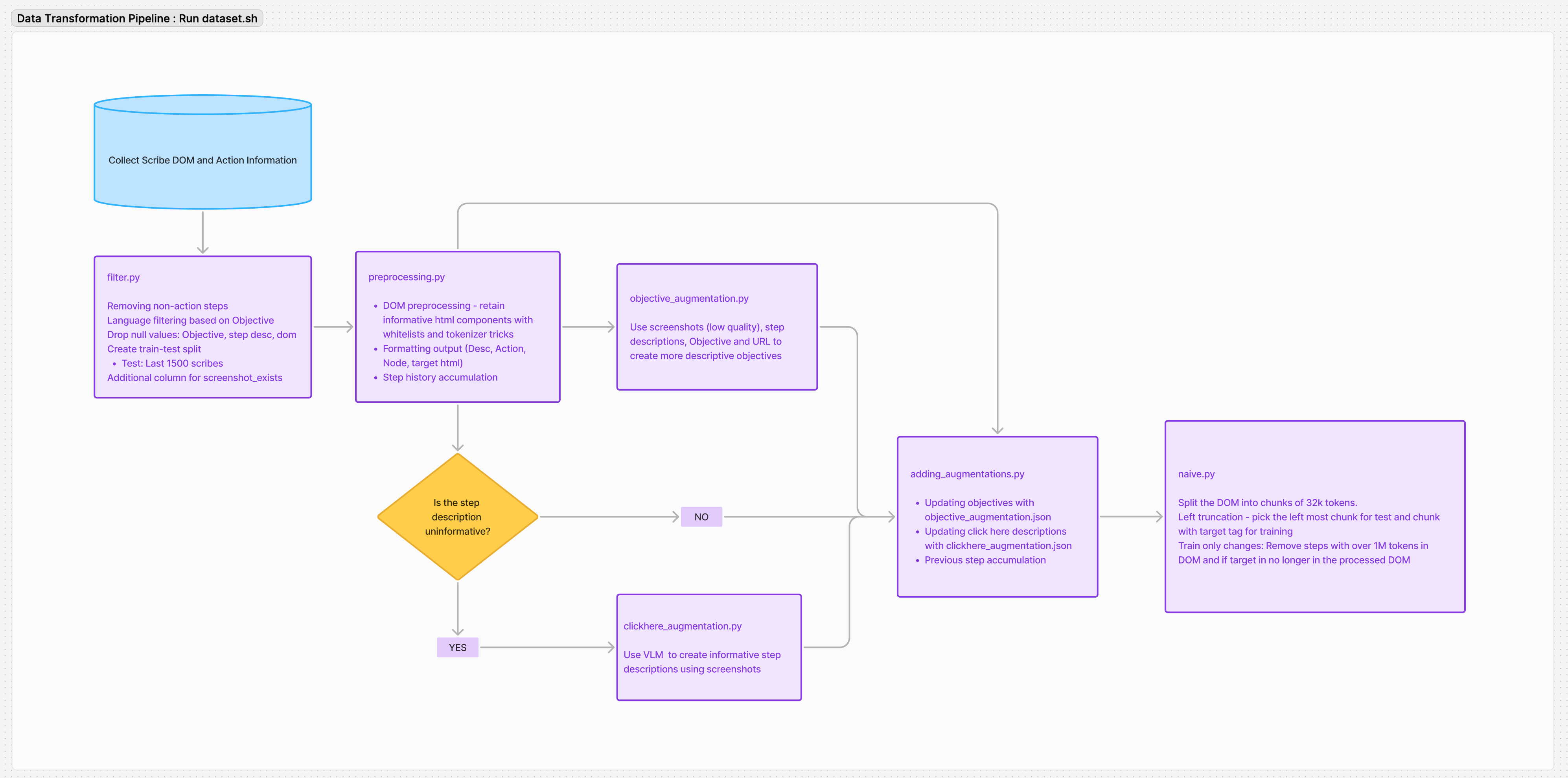

For ScribeAgent, we consider an observation space consisting of the user objective, the current web page's URL and HTML-DOM, and the previous actions. The preprocessing folder details the data preprocessing pipeline, including DOM pruning and input-output formatting. For more information on how data moves through the pipeline, check out the Data Transformation Flowchart and Section 3.2.2 of our paper.
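To make the observation space concrete, here is a minimal sketch of how the four components could be joined into one text prompt. The `Observation` class, the `build_prompt` function, and the field labels in the output are hypothetical names chosen for illustration; the actual prompt template is produced by the preprocessing scripts and may look different.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Observation:
    """One step's input: the four components of the observation space."""
    objective: str
    url: str
    dom: str  # pruned HTML-DOM of the current page
    previous_actions: List[str] = field(default_factory=list)


def build_prompt(obs: Observation) -> str:
    """Join the observation fields into a single prompt (hypothetical layout)."""
    # Number the earlier actions so the model can see their order.
    history = "\n".join(
        f"{i}. {action}" for i, action in enumerate(obs.previous_actions, start=1)
    ) or "None"
    return (
        f"Objective: {obs.objective}\n"
        f"URL: {obs.url}\n"
        f"Observation:\n{obs.dom}\n"
        f"Previous actions:\n{history}\n"
    )
```

Calling `build_prompt(Observation("Log in", "https://example.com", "<button>Login</button>"))` yields a prompt whose last section reads `None`, since no action has been taken yet.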

- `dataset.sh`: Bash script that sequentially runs all the preprocessing files needed to recreate the final train and test files.

- `example_workflow.csv`: Demonstrates how a scribe is given as input to the preprocessing script.

- `preprocessing.py`: Performs DOM preprocessing and target formatting.

- `naive.py`: Most HTML DOMs are bigger than our model's context window. We perform naive truncation at the tag level, left-truncating the DOMs to fit within the context window.

- `filter.py`: Filters out null values and non-English scribes, then splits the dataset into train and test sets.

- `circle_all.py`: Creates screenshots with circled targets using OpenCV. This requires `xy_mapping.csv` from Snowflake.

- `clickhere_augmentation.py`: Creates the action_id to augmented step description mapping using circled screenshots.

- `objective_augmentation.py`: Creates the workflow_id to augmented objective description mapping using screenshots.

- `adding_augmentations.py`: Adds the objective and step description augmentations and generates the prompts (model input).

- `GPT_augmentation_file`: Contains files used for augmenting step and objective descriptions. We save these files to avoid re-running GPT calls.

  - `train/test_objectives_clean.json`: Mapping from workflow_id to augmented objectives.

  - `train/test_step_desc.json`: Mapping from action_id to augmented step descriptions for click here actions.

- `data`: Place to store all data.

  - `screenshot`: Stores screenshots (`action_id.jpeg`).

  - `circled_ss`: Stores screenshots with circled targets (`action_id_circled.jpeg`).

  - `xy_position.csv`: Stores circle coordinates.
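The tag-level truncation described for naive.py can be illustrated with a short sketch. This is not the code in naive.py: the function name `truncate_dom`, the `keep` parameter, and the character-count default for `cost` are assumptions made for this example. Because the description does not spell out which end of the DOM is dropped, the sketch lets the caller choose. In practice `cost` would count tokens with the model's tokenizer.

```python
import re
from typing import Callable, List

# Split the DOM into whole tags and the text between them, so that a cut
# never lands in the middle of a tag. A stray "<" is kept as its own piece.
_TAG_OR_TEXT = re.compile(r"<[^>]*>|[^<]+|<")


def truncate_dom(dom: str, budget: int, keep: str = "head",
                 cost: Callable[[str], int] = len) -> str:
    """Keep whole pieces from one end of the DOM until the budget is used up."""
    if keep not in ("head", "tail"):
        raise ValueError("keep must be 'head' or 'tail'")
    pieces: List[str] = _TAG_OR_TEXT.findall(dom)
    if keep == "tail":
        pieces.reverse()  # walk from the end of the document instead
    kept: List[str] = []
    used = 0
    for piece in pieces:
        piece_cost = cost(piece)
        if used + piece_cost > budget:
            break  # stop at the first piece that does not fit
        kept.append(piece)
        used += piece_cost
    if keep == "tail":
        kept.reverse()  # restore document order
    return "".join(kept)
```

The result is generally not well-formed HTML, since closing (or opening) tags on the dropped side are lost; that is the trade-off of a naive cut compared with structure-aware pruning.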

Set the environment variables HF_TOKEN and OPENAI_APIKEY, and install the required libraries.

pip install -r requirements.txt

export HF_TOKEN=<your hf token>

export OPENAI_APIKEY=<your openai key>

Then:

chmod +x dataset.sh

./dataset.sh

We use LoRA to fine-tune open-source LLMs for ScribeAgent. The fine-tuning folder contains the code for fine-tuning and inference, implemented using Hugging Face and PEFT.

- `collator.py`: Implements a custom collator for instruction fine-tuning.

- `finetuning.py`: Contains the LoRA fine-tuning code, which uses the Hugging Face Trainer and the PEFT library.

- `inference.py`: Performs inference using vLLM with FP8 quantization.

- `prepare_model.py`: Handles loading and merging of adapter weights to optimize inference speed.

- `requirements.txt`: Lists the Python dependencies required for fine-tuning and inference.

- `run.sh`: Specifies and passes all the necessary arguments to run fine-tuning and inference.
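For readers unfamiliar with instruction fine-tuning collators, the sketch below shows one common pattern: pad each batch to a common length and mask the prompt and padding positions in the labels so that the loss is computed only on the response tokens. This is an assumption about what such a collator typically does, not a description of collator.py; the `collate` function and the `prompt_ids` / `response_ids` keys are hypothetical. It uses plain Python lists so that it runs without any dependencies.

```python
from typing import Dict, List

# Label value that PyTorch's CrossEntropyLoss ignores by default.
IGNORE_INDEX = -100


def collate(examples: List[Dict[str, List[int]]],
            pad_token_id: int) -> Dict[str, List[List[int]]]:
    """Right-pad a batch and mask prompt and padding positions in the labels."""
    max_len = max(len(e["prompt_ids"]) + len(e["response_ids"]) for e in examples)
    batch: Dict[str, List[List[int]]] = {
        "input_ids": [], "attention_mask": [], "labels": [],
    }
    for e in examples:
        prompt, response = e["prompt_ids"], e["response_ids"]
        ids = prompt + response
        pad = max_len - len(ids)
        batch["input_ids"].append(ids + [pad_token_id] * pad)
        batch["attention_mask"].append([1] * len(ids) + [0] * pad)
        # Only response tokens contribute to the loss.
        batch["labels"].append(
            [IGNORE_INDEX] * len(prompt) + response + [IGNORE_INDEX] * pad
        )
    return batch
```

In a real training loop the three lists would be converted to tensors before being passed to the model.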

Set the environment variable HF_TOKEN and install the required libraries.

pip install -r requirements.txt

export HF_TOKEN=<your hf token>

Then:

chmod +x run.sh

./run.sh

We also include the code to adapt ScribeAgent, which takes HTML as input and outputs actions in HTML format, to public benchmarks.

- Mind2Web: Follow the original GitHub repository to set up the environment. Then, check out this page for detailed instructions to run the evaluation.

- WebArena: Check out this page for detailed instructions to run the evaluation.

If you find this repository useful, please consider citing our paper:

@misc{scribeagent,

title={ScribeAgent: Towards Specialized Web Agents Using Production-Scale Workflow Data},

author={Junhong Shen and Atishay Jain and Zedian Xiao and Ishan Amlekar and Mouad Hadji and Aaron Podolny and Ameet Talwalkar},

year={2024},

eprint={2411.15004},

archivePrefix={arXiv},

primaryClass={cs.CL},

}