Table of Contents

- Python Fundamentals
  - Getting Started With Python 3
  - Strings and Collections
  - Modularity
    - Creating, Running, and Importing a Module
    - Defining Functions and Returning Values
    - Distinguishing Between Module Import and Module Execution
    - The Python Execution Model
    - Main Functions and Command Line Arguments
    - Sparse is better than Dense
    - Documenting Using Docstrings
    - Documenting With Comments
    - The Whole Shebang
  - Objects
  - Collections
  - Handling Exceptions
  - Iterables
  - Classes
  - Files and Resource Management
  - Shipping Working and Maintainable Code

My course notes from the Python Fundamentals course on Pluralsight.

int

Signed, arbitrary-precision integer, e.g. 42. Usually written in decimal, but it can also be written in binary with the 0b prefix, octal with the 0o prefix, or hexadecimal with the 0x prefix. The int constructor converts other numeric types to int. For example, int(3.5) returns 3. It truncates toward zero rather than rounding, so int(-3.5) returns -3. Strings can also be converted: int("496") returns 496.

float

64-bit floating point numbers, implemented as IEEE-754 double-precision floating point numbers with 53 bits of binary precision. That is around 15 to 16 significant decimal digits.

Any literal number containing a decimal point or the letter E (or e) is interpreted as a float.

Scientific notation is supported. For example, 3e8 is 3 x 10 to the power of 8, and 1.616e-35 is 1.616 x 10 to the power of -35.

The float constructor converts other types to float. For example, float(7) returns 7.0 and float("1.618") returns 1.618.

You can also create "not a number" and positive/negative infinity: float("nan"), float("inf"), float("-inf").

Any calculation involving int and float is promoted to float, 3.0 + 1 is 4.0.
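The short sketch below exercises the int and float behaviour described above. The particular values are arbitrary examples chosen for illustration.

```python
# Integer literals in other bases produce ordinary ints
assert 0b101 == 5    # binary
assert 0o17 == 15    # octal
assert 0x1f == 31    # hexadecimal

# The int constructor truncates toward zero rather than rounding
print(int(3.5))      # 3
print(int(-3.5))     # -3
print(int("496"))    # 496

# Scientific notation and special float values
print(3e8)                           # 300000000.0
print(float("inf") > 1e308)          # True
print(float("nan") == float("nan"))  # False: nan is not equal even to itself

# Mixing int and float promotes the result to float
result = 3.0 + 1
print(result, type(result))  # 4.0 <class 'float'>
```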

None

The null object. It is the sole value of NoneType and is used to represent the absence of a value. The REPL does not display None when an expression evaluates to it.

None can be assigned to a variable: a = None. To test whether a variable refers to None, use a is None, which returns True.

bool

Boolean logical value, either True or False.

The bool constructor converts other types to bool. For integers, 0 is falsy and all other int values are truthy: bool(0) returns False.

Floats behave the same way: bool(0.0) is False, and any other float is truthy.

An empty list is falsy (bool([]) is False). A populated list is truthy (bool([1, 3, 5]) is True).

An empty string is falsy (bool("") is False). Any non-empty string is truthy.
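The sketch below shows the truthiness rules side by side. It converts a handful of sample values with bool(), which is the same conversion an if or while condition applies. The list of sample values is an arbitrary choice.

```python
values = [0, 42, 0.0, -1.5, [], [1, 3, 5], "", "eggs", None]

# bool() applies the same conversion that if and while use on their conditions
for value in values:
    print(repr(value), "->", bool(value))

# Collect the falsy values: zero numbers, empty containers, empty strings and None
falsy = []
for value in values:
    if not value:
        falsy.append(value)

print(falsy)  # [0, 0.0, [], '', None]
```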

Two objects are equivalent if one could be used in place of the other.

== value equality / equivalence

!= value inequality / inequivalence

< less than

> greater than

<= less than or equal to

>= greater than or equal to

if expr:

    print("expr is True")

The condition expr is converted to bool as if by the bool() constructor. So the following condition evaluates to True, because a non-empty string is truthy:

if "eggs":

    print("Yes please!")

Optional else clause:

h = 42

if h > 50:

    print("Greater than 50")

else:

    print("50 or less")

Rather than nesting an if block inside an else block, use the elif keyword. This follows the Zen of Python: "flat is better than nested".
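Here is a minimal sketch of a flat if/elif/else chain. The describe function and the threshold of 20 are hypothetical; they exist only to give the chain three branches.

```python
def describe(h):
    """Classify h with a flat if/elif/else chain rather than nested blocks."""
    if h > 50:
        return "Greater than 50"
    elif h < 20:
        return "Less than 20"
    else:
        return "Between 20 and 50"


print(describe(42))  # Between 20 and 50
print(describe(7))   # Less than 20
```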

The while statement is terminated by a colon because it introduces a new block. As with if, expr is converted to bool as if by the bool() constructor.

while expr:

    print("loop while expr is True")

c = 5

# equivalent would be: while c:, but it's more idiomatic Python to be explicit, as below:

while c != 0:

    print(c)

    c -= 1

The break keyword terminates the innermost loop and transfers execution to the first statement after the loop.

while True:

    if expr:

        break

print("Go here on break")

The str data type: immutable sequences of Unicode codepoints. Codepoints are roughly like characters, though not strictly equivalent.

Once a string is constructed, its contents cannot be modified.

Literal strings are delimited by either single or double quotes, but must be consistent within a single string:

'This is a string'

"This is also a string"

Adjacent string literals are concatenated into a single string:

>>> "first" "second"

'firstsecond'

Newline options:

Multiline strings are delimited by three quote characters (either double or single quotes):

>>> """This is

... a multiline

... string"""

'This is\na multiline\nstring'

Escape sequences

Embed the newline control character directly in the string:

>>> m = 'This string\nspans multiple\nlines'

Note that Python 3 has universal newlines, so there is no need to write the Windows carriage-return sequence \r\n. When text is written in text mode (for example, to a file), \n is translated to the native newline sequence of whatever platform the code is running on.

Raw Strings

"What you see is what you get": raw strings avoid ugly escape sequences. This is especially useful with Windows paths.

To use one, prefix the string literal with r:

>>> path = r'C:\Users\Merlin\Documents\Spells'

>>> path

'C:\\Users\\Merlin\\Documents\\Spells'

Strings are sequence types, which means they support the common operations for querying sequences.

Individual characters can be accessed using square brackets with a zero-based index:

>>> s = 'parrot'

>>> s[4]

'o'

>>> type(s[4])

<class 'str'>

There is no character type separate from the string type. Characters are simply one-element strings.

String objects support many operations; type help(str) in the REPL to see them. For example:

>>> c = 'oslo'

>>> c.capitalize()

'Oslo'

Unicode

Python strings are Unicode. Unicode characters can be used directly in string literals, because the default source encoding is UTF-8.

The bytes type: immutable sequences of bytes. It is used for raw binary data and for fixed-width single-byte character encodings such as ASCII.

Bytes literals: b'data' or b"data".

Bytes support many of the same operations as strings, such as indexing and splitting.

To convert between strings and bytes, you must know the encoding used to represent the string's Unicode codepoints as bytes.

encode: str to bytes; decode: bytes to str.
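The sketch below converts between str and bytes with an explicit encoding. It assumes UTF-8. The Norwegian sample sentence is arbitrary; it was chosen because each of its non-ASCII letters needs more than one byte.

```python
text = "Vi er så glad for å høre og lære om Python!"

# str -> bytes: encode with an explicit encoding
data = text.encode("utf-8")

# Each of the four non-ASCII letters (å, å, ø, æ) takes two bytes in UTF-8
print(len(text), len(data))

# bytes -> str: decode with the same encoding that was used to encode
roundtrip = data.decode("utf-8")
print(roundtrip == text)  # True

# bytes support many str-like operations, such as splitting and indexing
print(b"some bytes".split())  # [b'some', b'bytes']
print(b"data"[0])             # 100: indexing bytes gives an int
```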

The list type: a mutable sequence of objects. List literals are delimited by square brackets: [a, b, c, d].

Items can be retrieved with square brackets and zero-based index:

>>> a = ["apple", "orange", "pear"]

>>> a[1]

'orange'

Elements can be replaced by assigning to a specific element: a[1] = 7.

Lists can contain objects of different types.

To create an empty list: b = []. To add an item: b.append(1.6).

The list constructor can create a list from another collection, such as a string:

>>> list("abcd")

['a', 'b', 'c', 'd']

The dict type: mutable mappings of keys to values.

Dict literals are delimited by curly braces and contain comma-separated key-value pairs: {k1: v1, k2: v2}.

Items can be retrieved and assigned by key using square brackets:

>>> d = {'alice': '123', 'bob': '456'}

>>> d['alice']

'123'

>>> d['bob'] = 999

If you assign to a key that doesn't exist yet, a new entry is added.

Older Python versions did not store entries in any particular order. Since Python 3.7, dictionaries preserve insertion order, so a current interpreter may print entries in a different order from some outputs shown in these notes.

To create an empty dictionary: e = {}.

A for loop visits each item in an iterable series. The general form is:

for item in iterable:

    ...body...

cities = ["London", "New York", "Paris"]

for city in cities:

    print(city)

You can also iterate over a dictionary, which yields its keys:

colors = {'crimson': 0xdc143c, 'coral': 0xff7f50}

for color in colors:

    print(color, colors[color])

Outputs:

coral 16744272

crimson 14423100

A module such as words.py can be imported into the REPL. Omit the file extension:

>>> import words

... program output...

When a module is imported, the code in it is executed immediately. For more control over when code runs, and to allow reuse, move the code into a function.

Functions are defined with the def keyword, followed by the function name and the arguments in parentheses.

def square(x):

    return x * x

# invoke square

square(5) # 25

Functions don't need to return a value, and they can produce side effects. You can return early from a function by using the return keyword with no parameter; the function then returns None.

When all the code in the module is organized into functions, importing it doesn't execute anything right away (besides defining the functions):

>>> import words

>>> words.fetch_words()

You can also import just a specific function:

>>> from words import fetch_words

>>> fetch_words()

Notice that running the module from the OS command line (python words.py) now does nothing. All that executing it does is define a function and then exit.

To make a module that has useful functions and can also be run as a script, use special attributes, which are delimited by double underscores.

__name__ evaluates to "__main__" or to the actual module name, depending on how the enclosing module is being used. It is used to detect whether the module is being run as a script or imported into another module or the REPL.

For example, add print(__name__) as the last line of the module. When the module is imported into the REPL, it prints the module name, for example words_special.

Note that if the module is imported a second time in the same session, the print statement does NOT run again. Module code is executed only once, on first import; the module object is then cached in sys.modules and reused.

Running the script from the OS command line, python words_special.py, prints __main__.

A module can use this behaviour to detect how it's being used.

def <some_function>() is not a declaration; it's a statement. When it is executed in sequence with the rest of the top-level module code, it creates a function object and binds it to the function's name.

When modules are imported or run, all of their top-level statements are executed. This is how the functions in the module namespace get defined.

Every .py file is a Python module. Modules can be written for convenient import, for convenient execution, or, using the if __name__ == '__main__' idiom, for both import and execution.

Python module - Convenient import, with an API.

Python script - Convenient execution from the command line.

Python program - Possibly composed of many modules.

Recommend making even simple scripts importable, to make development and testing easier.

This refactor supports testing the individual functions, as well as the whole program, from the REPL. Note that multiple functions can be imported from a module with a single import statement:

>>> from words_cli import (fetch_words, print_items)

>>> print_items(fetch_words())

You can also import everything from a module. This is not generally recommended, because you can't control what gets imported, which can cause namespace collisions:

>>> from words_cli import *

>>> fetch_words()

To accept command line arguments, use the argv attribute of the sys module, which is a list of strings:

import sys

...

def main():

    url = sys.argv[1]

    words = fetch_words(url)

    print_items(words)

The issue with the above is that main() can no longer be tested from the REPL, because sys.argv[1] does not have a useful value in that environment.

The solution is to make url a parameter of the main function, and to pass in sys.argv[1] from the if __name__ == '__main__' check.
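The sketch below shows the refactored module layout. It assumes a simplified, hypothetical fetch_words that splits a string it is given instead of downloading a URL, so the example runs offline. The course's real fetch_words reads from a URL; the point here is only the shape of main and the __name__ check. The file name words_sketch.py is also hypothetical, and main returns the words purely for convenience.

```python
"""Sketch of a module that is both importable and runnable as a script."""
import sys


def fetch_words(text):
    """Hypothetical offline stand-in: split text into words instead of fetching a URL."""
    return text.split()


def print_items(items):
    """Print each item on its own line."""
    for item in items:
        print(item)


def main(source):
    """Entry point that takes its input as a parameter, so it can be called from the REPL."""
    words = fetch_words(source)
    print_items(words)
    return words


if __name__ == '__main__':
    # sys.argv[0] is the script name; guard so running without an argument does not crash
    if len(sys.argv) > 1:
        main(sys.argv[1])
    else:
        print("usage: python words_sketch.py TEXT")
```

From the REPL, the same function can now be exercised directly, for example with main("It was the best of times").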

For more sophisticated command line parsing, use argparse from the standard library, or third-party options such as docopt.

Top-level functions are separated by two blank lines. This is a convention for modern Python (a PEP 8 recommendation).

API documentation in Python uses docstrings: literal strings that occur as the first statement in a named block, such as a function or module.

Use triple-quoted strings even for a single line, because they can easily be expanded later to add more detail. The recommended format is the one from Google's Python Style Guide. It can be machine-parsed to generate HTML API docs while remaining human-readable.

Begin with a short description of the function, followed by a list of its arguments, and then the return value.
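The sketch below shows a Google-style docstring with that layout. The body is omitted because only the docstring matters here. The argument and return descriptions are assumptions about what the course's fetch_words does.

```python
def fetch_words(url):
    """Fetch a list of words from a URL.

    Args:
        url: The URL of a UTF-8 text document.

    Returns:
        A list of strings containing the words from the document.
    """
    # Body omitted in this sketch: only the docstring layout is being shown
    raise NotImplementedError


# The docstring is stored on the function object; help(fetch_words) displays it
print(fetch_words.__doc__.splitlines()[0])  # Fetch a list of words from a URL.
```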

Once a docstring has been added, it can be accessed via help in the REPL:

>>> from words_cli import fetch_words

>>> help(fetch_words)

Module docstrings are placed at the beginning of the module, before any statements. You can then request help on the module as a whole:

>>> import words_cli

>>> help(words_cli)

Docstrings are generally recommended, since they explain how to consume your code. The code itself should be clean enough not to need additional explanation of how it works, but sometimes comments are needed. For example:

if __name__ == '__main__':

    main(sys.argv[1]) # The 0th arg is the module filename

To allow the program to be run as a shell script, add a shebang line at the beginning of the file. It tells the OS which interpreter to use to run the program: #!/usr/bin/env python3

Some objects, such as ints, are immutable. For example, after a = 500 and then a = 1000, a refers to a new int object, 1000; the original object is not changed. If no live references to the int 500 object remain, the garbage collector will eventually reclaim it.

The is operator, a is b, tests whether two variables reference the same object. It is also used to compare with None: a is None.

The assignment operator only binds objects to names. It never copies an object by value.

Python doesn't really have variables, only named references to objects. References behave like labels which support retrieving objects.

Value equality vs. identity: "value" refers to equivalent contents, whereas "identity" refers to the same object.

Value comparison can be controlled programmatically (for example, by defining the __eq__ method on a class).
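The small sketch below contrasts value equality (==) with identity (is). The list contents are arbitrary.

```python
p = [4, 7, 11]
q = [4, 7, 11]
r = p                 # binds a second name to the same list object

print(p == q)         # True: equivalent contents
print(p is q)         # False: two distinct list objects
print(p is r)         # True: the same object

r.append(18)          # a change made through one name is visible through the other
print(p)              # [4, 7, 11, 18]
print(p == q)         # False: the contents are no longer equivalent
```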

When an object reference is passed to a function and the function modifies the object, the change is visible outside the function:

m = [1, 2, 3]

def modify(k):

    k.append(4)

modify(m)

# m is now [1, 2, 3, 4]

To avoid side effects, the function itself must be responsible for making a copy of the object and operating on the copy.

Function arguments are passed by object reference: the value of the reference is copied, not the value of the object. No objects are copied.

The return statement also uses pass by object reference.
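The sketch below shows what pass by object reference means in practice: mutating a passed object versus rebinding the parameter name. The function names append_four, rebind, appended_copy and echo are hypothetical.

```python
def append_four(k):
    k.append(4)          # mutates the caller's object through the shared reference


def rebind(k):
    k = [99]             # rebinds the local name only; the caller's object is untouched
    return k


def appended_copy(k):
    k = list(k)          # make a shallow copy first to avoid side effects
    k.append(5)
    return k


def echo(k):
    return k             # return also passes a reference, not a copy


m = [1, 2, 3]
append_four(m)
print(m)                      # [1, 2, 3, 4]

n = rebind(m)
print(m, n)                   # [1, 2, 3, 4] [99]

print(appended_copy(m), m)    # [1, 2, 3, 4, 5] [1, 2, 3, 4]
print(echo(m) is m)           # True
```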

Default arguments: in def function(a, b=value), value is the default value for b.

Parameters with default arguments must come after those without.

def banner(message, border='-'):

    line = border * len(message)

    print(line)

    print(message)

    print(line)

The message string is a positional argument; the border string is a keyword argument with a default value.

Positional arguments are matched in sequence with formal arguments, whereas keyword arguments are matched by name.

This can be called as follows:

banner("foo")

banner("foo", "*")

banner("foo", border="*")

Default argument evaluation: default argument values are evaluated once, when the def statement is evaluated. Like any other object, they can then be modified.
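The sketch below shows why a mutable default causes trouble. add_spam_bad is a hypothetical name for the broken variant.

```python
def add_spam_bad(menu=[]):   # the [] is created once, when def executes
    menu.append('spam')
    return menu


print(add_spam_bad())  # ['spam']
print(add_spam_bad())  # ['spam', 'spam']: the same default list is modified again

# The shared default object is stored on the function itself
print(add_spam_bad.__defaults__)  # (['spam', 'spam'],)
```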

Always use immutable objects for default values; otherwise you will get surprising results. For example, use None rather than []:

def add_spam(menu=None):

    if menu is None:

        menu = []

    menu.append('spam')

    return menu

Python's type system is both dynamic and strong.

Dynamic type system: the type of an object reference isn't resolved until the program is running, and doesn't need to be specified up front when the program is written.

For example, the function below adds two objects without specifying their types. It can be called with any type for which the addition operator is defined, such as int, float, and str. The arguments a and b can reference any type of object.

def add(a, b):

    return a + b

add(5, 7) # 12

add(3.1, 2.4) # 5.5

add("news", "paper") # 'newspaper'

add([1, 6], [21, 107]) # [1, 6, 21, 107]

Strong type system: the language will not implicitly convert objects between types. For example, attempting to add types for which addition is not defined, such as a string and an int, raises an error:

add("The answer is", 42) # TypeError: Can't convert 'int' object to str implicitly (newer Python versions word this message differently)

Object references have no type. Variables are just untyped name bindings to objects, and they can be rebound (reassigned) to objects of different types. But where are those bindings stored?

Python name scopes: scopes are the contexts in which named references can be looked up. There are four scopes, from narrowest to broadest:

- Local - those names defined inside the current function.

- Enclosing - those names defined inside any and all enclosing functions.

- Global - those names defined in the top-level of a module, each module brings with it a new global scope.

- Built-in - those names provided by the Python language through "builtins" module.

Names are looked up in the narrowest relevant scope first, in the order Local, Enclosing, Global, Built-in (LEGB).

Note: scopes do not correspond to source code blocks demarcated by indentation. for loops, if blocks and similar blocks do NOT introduce new nested scopes.
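The sketch below illustrates the LEGB lookup order and the absence of block scope. The names message, outer, inner and loop_scope are hypothetical.

```python
message = "global"            # a name in this module's global scope


def outer():
    message = "enclosing"     # local to outer; an enclosing scope for inner

    def inner():
        return message        # not local to inner, so found in the enclosing scope

    return inner()


def loop_scope():
    for i in range(3):
        pass
    return i                  # still bound after the loop: for does not create a scope


print(outer())        # enclosing
print(message)        # global: outer's assignment created a separate local name
print(loop_scope())   # 2
print(len("abc"))     # 3: len is found in the built-in scope
```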

For example, words_cli.py contains these global names:

- main: bound by the def main(url) statement

- sys: bound when sys is imported

- __name__: provided by the Python runtime

- urlopen: bound from the urllib.request module

- fetch_words: bound by the def fetch_words(url) statement

- print_items: bound by the def print_items(items) statement

Module scope name bindings are usually introduced by import statements and function or class definitions.

Within the fetch_words function, local-scope names include:

- word: bound by the inner for loop

- line_words: bound by assignment

- url: bound by the formal argument

- line: bound by the outer for loop

- story_words: bound by assignment

- story: bound by the with statement

Local names come into existence when they are first bound and live within the function scope until the function completes. At that point the references are destroyed.

Occasionally you need to rebind a global name at module scope, as this simple module demonstrates:

"""Demonstrate scoping."""

count = 0 # global within this module

def show_count():

    print("count = ", count) # looks up count in the local scope first; not found there, so goes up to the global scope

def set_count(c):

    # count = c # count here would be a local variable that shadows the global, not what was intended

    # this modifies the actual global

    global count

    count = c

Everything in Python is an object, including functions and modules.

import words binds the words module object to the name words in the current namespace.

The built-in type function can be used to determine the type of any object. For example, type(words) returns <class 'module'>.

The built-in dir function introspects an object and returns its attributes. For example, dir(words) lists all functions in the module, anything it imported, and special attributes denoted by double underscores.

The type function can be used on any attribute to learn more about it: type(words.fetch_words) returns <class 'function'>.

The tuple type: immutable sequences of arbitrary objects. Once a tuple is created, the objects within it cannot be replaced or removed, and new elements cannot be added.

Tuples use a syntax similar to lists, except they are delimited by parentheses rather than square brackets.

# assignment

t = ("Norway", 4.953, 3)

# access

t[0] # 'Norway'

# built-in len function to get the size of a tuple

len(t) # 3

# tuples are iterable

for item in t:

    print(item) # Norway 4.953 3

# tuples can be concatenated

t + (338.0, 265e9) # ('Norway', 4.953, 3, 338.0, 265000000000.0)

# repetition with the multiplication operator

t * 3 # ('Norway', 4.953, 3, 'Norway', 4.953, 3, 'Norway', 4.953, 3)

# tuples can be nested (because tuples can contain any object, including another tuple)

a = ((220, 284), (1184, 1210), (2620, 2924), (5020, 5564))

# repeated application of the index operator to get to an inner element

a[2][1] # 2924

If you need a single-element tuple, you cannot use h = (391). That is parsed as an integer in parentheses, as used for order of operations.

Instead, use a trailing comma: k = (391,).

To specify an empty tuple: e = ().

Parentheses can often be omitted, which is useful for functions that return multiple values.
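The minmax function used in the next example isn't defined in these notes. The minimal sketch below assumes it returns the smallest and largest items, and shows a multiple-value return with the parentheses omitted:

```python
def minmax(items):
    """Return the smallest and largest items as a tuple (parentheses omitted)."""
    return min(items), max(items)


lower, upper = minmax([83, 33, 84, 32, 85, 31, 86])
print(lower, upper)   # 31 86
```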

Returning multiple values in tuples is often combined with destructuring (also known as tuple unpacking), which unpacks data structures into named references:

>>> lower, upper = minmax([83, 33, 84, 32, 85, 31, 86])

>>> lower # 31

>>> upper # 86

Tuple unpacking works with arbitrarily nested tuples:

>>> (a, (b, (c, d))) = (4, (3, (2, 1)))

>>> a # 4

>>> b # 3

>>> c # 2

>>> d # 1

The Python idiom for swapping two or more variables:

>>> a = 'jelly'

>>> b = 'bean'

>>> a, b = b, a

>>> a # 'bean'

>>> b # 'jelly'

To create a tuple from an existing collection object, such as a list, use the constructor tuple(iterable).

Use the in operator to test for containment:

>>> 5 in (3, 5, 17)

True

>>> 5 not in (3, 5, 17)

False

str is a homogeneous immutable sequence of Unicode codepoints (roughly equivalent to characters).

Use the built-in len function to determine the length of a string.

Concatenation uses the + and += operators.

For joining large numbers of strings, the join method is more efficient than repeated concatenation. It is called on the separator string.

You can also split, passing the separator string as an argument:

>>> colors = ';'.join(['#45ff23', '#2321fa', '#1298a3'])

>>> colors # '#45ff23;#2321fa;#1298a3'

>>> colors.split(';') # ['#45ff23', '#2321fa', '#1298a3']

The Python idiom for concatenating a collection of strings is to join using the empty separator:

>>> ''.join(['high', 'way', 'man']) # 'highwayman'

partition divides a string into three sections around a separator (prefix, separator, suffix) and returns them in a tuple:

>>> "unforgettable".partition("forget") # ('un', 'forget', 'table')

This is commonly used with tuple unpacking:

>>> departure, separator, arrival = "London:Edinburgh".partition(':')

>>> departure # 'London'

>>> separator # ':'

>>> arrival # 'Edinburgh'

Often you're not interested in the separator. The Python convention is to assign it to an underscore:

>>> origin, _, destination = "Seattle-Boston".partition('-')

>>> origin # 'Seattle'

>>> destination # 'Boston'

format supersedes the string interpolation of older Python versions (the % operator), though it doesn't replace it. format can be used on any string containing replacement fields, which are surrounded by curly braces. The objects provided as arguments to format are converted to strings and used to populate the replacement fields. Field names are matched up with the positional arguments to format.

>>> "The age of {0} is {1}".format('Jim', 32) # 'The age of Jim is 32'

A field name may be used more than once:

>>> "The age of {0} is {1}. {0}'s birthday is on {2}".format('Fred', 24, 'October 31')

"The age of Fred is 24. Fred's birthday is on October 31"

If field names are used exactly once and in the same order as the arguments, the names can be omitted:

>>> "Reticulating spline {} of {}.".format(4, 23)

'Reticulating spline 4 of 23.'

Keyword arguments can also be supplied:

>>> "current position {lat} {lng}".format(lat="60N", lng="5E")

'current position 60N 5E'

You can index into sequences with square brackets in a replacement field:

>>> pos = (65.2, 23.1, 82.2)

>>> "Galactic position x={pos[0]} y={pos[1]} z={pos[2]}".format(pos=pos)

'Galactic position x=65.2 y=23.1 z=82.2'

You can also access object attributes:

>>> import math

>>> "Math constants: pi={m.pi}, e={m.e}".format(m=math)

'Math constants: pi=3.141592653589793, e=2.718281828459045'

There is also control over field alignment and floating point formatting:

>>> "Math constants: pi={m.pi:.3f}, e={m.e:.3f}".format(m=math)

'Math constants: pi=3.142, e=2.718'

The range type: a type of sequence used to represent an arithmetic progression of integers. Ranges are created by calling the range constructor; there is no literal form.

Typical usage is to provide just the stop value: range(5) is equivalent to range(0, 5). The stop value is one past the end of the sequence:

for i in range(5):

    print(i) # 0 1 2 3 4

You can also supply a start value: range(5, 10) gives 5 6 7 8 9. A common usage is to pass this to the list constructor: list(range(5, 10)) gives [5, 6, 7, 8, 9].

A step argument controls the interval between successive numbers: list(range(0, 10, 2)) gives [0, 2, 4, 6, 8]. In this case, all three arguments (start, stop, step) must be supplied.

Note: Avoid range() for iterating over lists, Python is not C! Prefer direct iteration over iterable objects such as lists.

If you need a counter, use the enumerate function. It returns an iterable series of pairs, each of which is a tuple. The first element of each pair is the index of the current item, and the second element is the item itself:

>>> t = [6, 372, 8862, 14880]

>>> for p in enumerate(t):

...     print(p)

...

(0, 6)

(1, 372)

(2, 8862)

(3, 14880)

A further improvement is to use tuple unpacking:

>>> for i, v in enumerate(t):

...     print("i = {}, v = {}".format(i, v))

...

i = 0, v = 6

i = 1, v = 372

i = 2, v = 8862

i = 3, v = 14880

Ranges are not widely used in modern Python code, because Python has good iteration primitives.

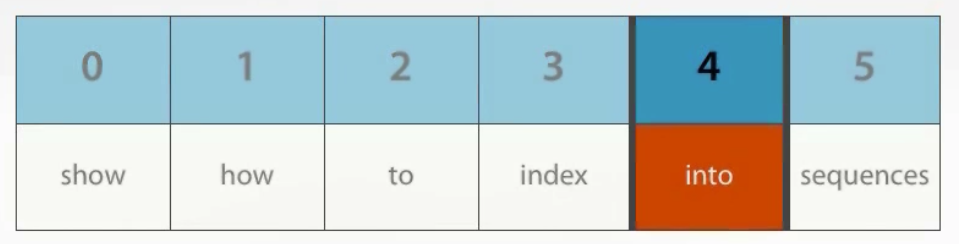

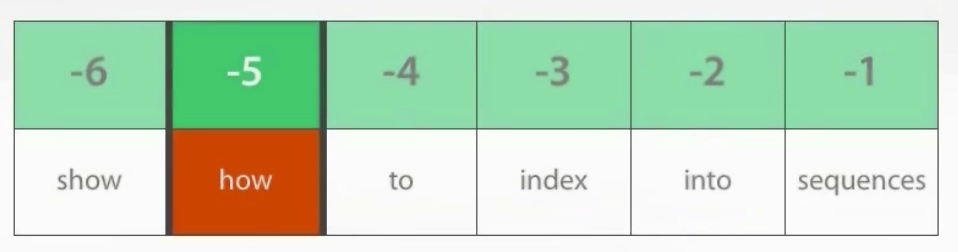

Zero and positive integers index from the beginning:

Lists and other sequences such as tuples can also be indexed from the end, using negative indexes:

>>> s = "show how to index into sequences".split()

>>> s

['show', 'how', 'to', 'index', 'into', 'sequences']

>>> s[4] # extracts 5th element

'into'

>>> s[-5] # extracts 5th element from the end

'how'

>>> s[-1] # last element of sequence, more elegant than s[len(s) - 1]

'sequences'

Note that index -0 is the same as 0, which refers to the first element. This is why negative indexing is 1-based: -1 is the last element.

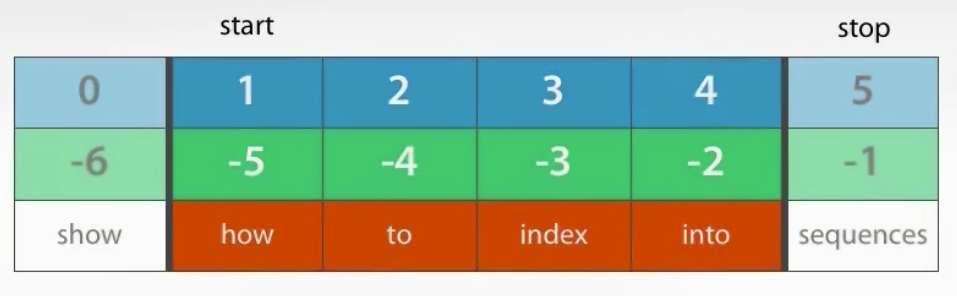

Slicing: a form of extended indexing, used to refer to portions of a list.

slice = seq[start:stop]

The slice range is half-open: the stop index is not included.

>>> s = "show how to index into sequences".split()

>>> s[1:4]

['how', 'to', 'index']

Slicing can be combined with negative indexing. For example, all elements except the first and last:

>>> s[1:-1]

['how', 'to', 'index', 'into']

Start and stop indices are optional. To slice all elements from index 3 to the end of the list: s[3:].

To slice all elements from the beginning of the list up to, but not including, index 3: s[:3].

Half-open ranges give complementary slices: s[:x] + s[x:] == s.

Since start and stop are optional, all elements of a list can be retrieved with full_slice = s[:]. This is an important idiom for copying lists. full_slice has distinct identity from s, but equivalent value. But note that elements within full_slice are references to the same objects that s refers to.

A more obvious way to copy a list is u = s.copy(), or list constructor v = list(s). Constructor is more flexible than copy method because it can accept any iterable source, not just lists.
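The sketch below checks the half-open slice property for every split point of the example list. It also confirms that the three copying techniques produce equal but distinct lists.

```python
s = "show how to index into sequences".split()

# Half-open slices are complementary for every split point x
for x in range(len(s) + 1):
    assert s[:x] + s[x:] == s

# Three ways to copy a list: full slice, copy method, list constructor
copies = [s[:], s.copy(), list(s)]
for c in copies:
    print(c == s, c is s)       # True False: equal value, distinct identity

# Each copy still shares its element objects with s (a shallow copy)
print(copies[0][0] is s[0])     # True

# The constructor also accepts other iterables, such as a tuple
print(list(("a", "b")))         # ['a', 'b']
```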

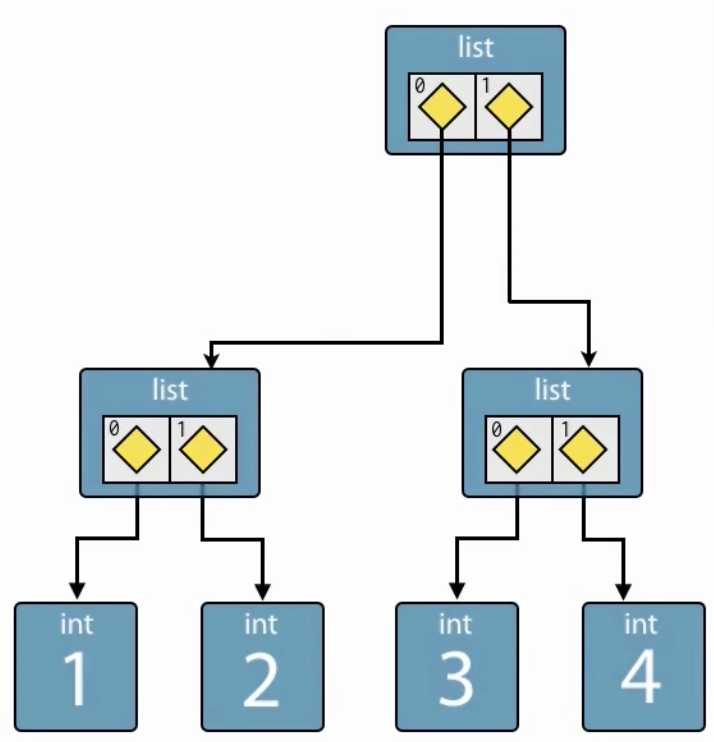

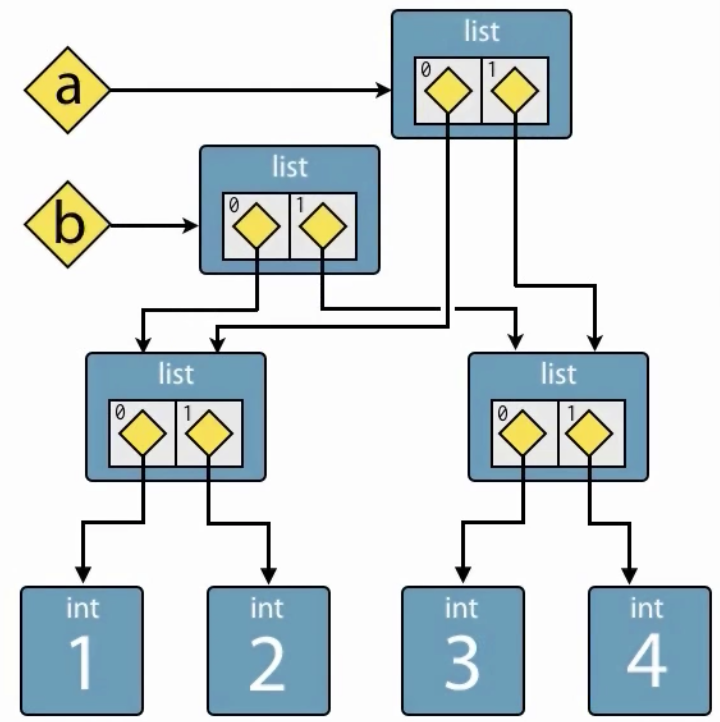

All of the above techniques perform a shallow copy: they create a new list containing the same object references as the original list.

Given a list with nested lists:

>>> a = [[1, 2], [3, 4]]

Now when it's copied:

>>> b = a[:]

>>> a is b

False # lists are distinct objects

>>> a == b

True # lists contain equivalent values

>>> a[0] is b[0]

True # lists refer to the same inner object

Repetition is supported using the multiplication operator:

>>> c = [21, 37]

>>> d = c * 4

>>> d

[21, 37, 21, 37, 21, 37, 21, 37]

Note that repetition repeats the references without copying the values. In other words, it makes shallow copies.
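Because repetition shares references, repeating a list that contains a mutable object can be surprising. The sketch below uses an arbitrary inner list. The list comprehension at the end is one common way to get independent inner lists.

```python
s = [[-1, +1]] * 5        # five references to ONE inner list
s[3].append(7)            # modifying "one" element is visible through every reference
print(s)                  # [[-1, 1, 7], [-1, 1, 7], [-1, 1, 7], [-1, 1, 7], [-1, 1, 7]]
print(s[0] is s[4])       # True

# A list comprehension evaluates [-1, +1] afresh for each position
t = [[-1, +1] for _ in range(5)]
t[3].append(7)
print(t)                  # [[-1, 1], [-1, 1], [-1, 1], [-1, 1, 7], [-1, 1]]
```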

To find an element in a list, use the index method, passing the object to search for. Elements are compared for equivalence (value equality):

>>> w = "the quick brown fox jumps over the lazy dog".split()

>>> w

['the', 'quick', 'brown', 'fox', 'jumps', 'over', 'the', 'lazy', 'dog']

>>> i = w.index('fox')

>>> i

3

>>> w[i]

'fox'

>>> w.index('unicorn') # trying to find something that's not there generates ValueError

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

ValueError: 'unicorn' is not in list

Another way to search is to count occurrences of matching elements:

>>> w.count('the')

2

Use the in operator to test for membership:

>>> 37 in [1, 78, 9, 37, 34, 53]

True

>>> 78 not in [1, 78, 9, 37, 34, 53]

False

Use del to remove elements from a list. It takes a single parameter, a reference to a list element, and removes that element from the list:

>>> u = "jackdaws love my big sphinx of quartz".split()

>>> u

['jackdaws', 'love', 'my', 'big', 'sphinx', 'of', 'quartz']

>>> del u[3]

>>> u

['jackdaws', 'love', 'my', 'sphinx', 'of', 'quartz']

Use the remove method to remove elements by value rather than by position:

>>> u.remove('jackdaws')

>>> u

['love', 'my', 'sphinx', 'of', 'quartz']

This is equivalent to using del together with index:

>>> del u[u.index('quartz')]

>>> u

['love', 'my', 'sphinx', 'of']

Attempting to remove an item that does not exist results in a ValueError.

Use the insert method to add items to a list. It takes the index of the new item and the item itself:

>>> a = "I accidentally the whole universe".split()

>>> a.insert(2, "destroyed")

>>> a

['I', 'accidentally', 'destroyed', 'the', 'whole', 'universe']

Convert the list back to a string by invoking join on a space separator:

>>> ' '.join(a)

'I accidentally destroyed the whole universe'

Concatenating lists with the addition operator produces a new list and leaves the original lists unchanged. The augmented assignment operator += instead modifies the assignee in place.

>>> m = [2, 1, 3]

>>> n = [4, 7, 11]

>>> k = m + n

>>> k

[2, 1, 3, 4, 7, 11]

>>> m

[2, 1, 3]

>>> n

[4, 7, 11]

>>> k += [18, 29, 47]

>>> k

[2, 1, 3, 4, 7, 11, 18, 29, 47]

The extend method does the same as the += operator:

>>> k.extend([76, 129, 199])

>>> k

[2, 1, 3, 4, 7, 11, 18, 29, 47, 76, 129, 199]

Both += and extend work with any iterable series, not just lists.
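The quick sketch below uses a tuple and a range as the iterable sources. Note that plain + is stricter and requires both operands to be lists.

```python
k = [2, 1, 3]
k += (4, 7)              # += accepts a tuple on the right-hand side
k.extend(range(11, 13))  # extend accepts a range, or any other iterable
print(k)                 # [2, 1, 3, 4, 7, 11, 12]

# Plain + is stricter: list + tuple raises TypeError, and k is left unchanged
try:
    k = k + (18, 29)
except TypeError as error:
    print("TypeError:", error)
```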

A list can be reversed in place:

>>> g = [1, 11, 21, 1211, 112111]

>>> g.reverse()

>>> g

[112111, 1211, 21, 11, 1]

And sorted in place:

>>> d = [5, 17, 41, 29, 71, 149, 3299, 7, 13, 67]

>>> d.sort()

>>> d

[5, 7, 13, 17, 29, 41, 67, 71, 149, 3299]

The sort method accepts two optional arguments, reverse and key:

>>> d = [5, 17, 41, 29, 71, 149, 3299, 7, 13, 67]

>>> d.sort(reverse=True)

>>> d

[3299, 149, 71, 67, 41, 29, 17, 13, 7, 5]

The key parameter accepts any callable object, which is used to extract a key from each item.

Items are sorted according to the relative ordering of these keys.

Callable Objects

Functions are callable objects. For example, the built-in len function, which determines the length of a collection, is callable.

It can be used as a key to order words by length. For example:

>>> h = 'not perplexing do handwriting family where I illegibly know doctors'.split()

>>> h

['not', 'perplexing', 'do', 'handwriting', 'family', 'where', 'I', 'illegibly', 'know', 'doctors']

>>> h.sort(key=len)

>>> h

['I', 'do', 'not', 'know', 'where', 'family', 'doctors', 'illegibly', 'perplexing', 'handwriting']

Then, to join the list of words back together into a single string, use the join method with a space separator:

>>> ' '.join(h)

'I do not know where family doctors illegibly perplexing handwriting'

Avoiding Side Effects

Sometimes you want to sort or reverse a list without modifying the original.

Use the built-in functions reversed and sorted. They return a reverse iterator and a new sorted list, respectively.

>>> x = [4, 9, 2, 1]

>>> y = sorted(x)

>>> y

[1, 2, 4, 9]

>>> x

[4, 9, 2, 1]

>>>

>>> p = [9, 3, 1, 0]

>>> q = reversed(p)

>>> q

<list_reverseiterator object at 0x102ac7550>

>>> list(q) # use list constructor to evaluate result of reversed because reversed returns an iterator

[0, 1, 3, 9]

>>> p

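[9, 3, 1, 0]

The dict type: a mapping from unique, immutable keys (e.g. strings, numbers, tuples) to values, which may be mutable. Keys must be unique, but values can be duplicated as long as they're associated with different keys. Since Python 3.7, dictionaries preserve insertion order. The outputs below come from an older version where the order was arbitrary, so a current interpreter may print keys in a different order.

The dict constructor can convert other types to dictionaries, for example by copying an iterable series of key-value pairs stored in tuples: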

>>> names_and_ages = [ ('Alice', 32), ('Bob', 48), ('Charlie', 28), ('Daniel', 33)]

>>> d = dict(names_and_ages)

>>> d

{'Charlie': 28, 'Alice': 32, 'Daniel': 33, 'Bob': 48}

As long as the keys are valid identifiers, a dictionary can even be created directly from keyword arguments:

>>> phonetic = dict(a='alfa', b='bravo', c='charlie', d='delta', e='echo', f='foxtrot')

>>> phonetic

{'b': 'bravo', 'f': 'foxtrot', 'e': 'echo', 'd': 'delta', 'c': 'charlie', 'a': 'alfa'}

Dictionary copying is shallow by default: it copies only references to the key and value objects, not the objects themselves.

There are two ways to copy a dictionary, either using the copy method, or to pass an existing dictionary to the dict constructor.

>>> d = dict(goldenrod=0xDAA520, indigo=0x4B0082, seashell=0xFFF5EE)

>>> d

{'indigo': 4915330, 'goldenrod': 14329120, 'seashell': 16774638}

>>> e = d.copy()

>>> e

{'indigo': 4915330, 'goldenrod': 14329120, 'seashell': 16774638}

>>>

>>> f = dict(e)

>>> f

{'indigo': 4915330, 'goldenrod': 14329120, 'seashell': 16774638}

Update

A dictionary can be extended with definitions from another dictionary using the update method, invoked on the dictionary object to be updated:

>>> g = dict(wheat=0xF5DEB3, khaki=0xF0E68C, crimson=0xDC143D)

>>> f.update(g)

>>> f

{'indigo': 4915330, 'goldenrod': 14329120, 'seashell': 16774638, 'crimson': 14423101, 'wheat': 16113331, 'khaki': 15787660}

If the argument to update contains keys that are already in the target dictionary, the values for those keys are replaced in the target:

>>> stocks = {'GOOG': 891, 'AAPL': 416, 'IBM': 194}

>>> stocks.update({'GOOG': 894, 'YHOO': 25})

>>> stocks

{'AAPL': 416, 'GOOG': 894, 'IBM': 194, 'YHOO': 25}

Dictionaries are iterable using for loops. The dictionary yields the next key on each iteration, and the value can be retrieved by lookup with square bracket notation:

>>> colors = dict(aquamarine='#7FFFD4', burlywood='#DEB887', chartreuse='#7FFF00', cornflower='#6495ED', firebrick='#B22222', honeydew='#F0FFF0', maroon='#B03060', sienna='#A0522D')

>>> for key in colors:

...     print("{key} => {value}".format(key=key, value=colors[key]))

...

cornflower => #6495ED

honeydew => #F0FFF0

maroon => #B03060

firebrick => #B22222

burlywood => #DEB887

aquamarine => #7FFFD4

sienna => #A0522D

chartreuse => #7FFF00

Can also iterate over just the values, using the values dictionary method, which returns an object that provides an iterable view of the dictionary values without copying them. Note there is no efficient way to retrieve the key from a value:

>>> for value in colors.values():

...     print(value)

...

#6495ED

#F0FFF0

#B03060

#B22222

#DEB887

#7FFFD4

#A0522D

#7FFF00

The keys method provides an iterable view of the dictionary keys, but it's not often used because default iteration over a dictionary is already by key:

>>> for key in colors.keys():

...     print(key)

...

burlywood

honeydew

firebrick

aquamarine

chartreuse

sienna

cornflower

maroon

Most useful is to iterate over keys and values at the same time. Each key/value pair in a dictionary is called an item.

Can get an iterable view of the items using the dictionary's items method. When iterated over, the items view yields each key/value pair as a tuple. Tuple unpacking is used in the for statement to access both key and value in one operation:

>>> for key, value in colors.items():

...     print("{key} => {value}".format(key=key, value=value))

...

burlywood => #DEB887

honeydew => #F0FFF0

firebrick => #B22222

aquamarine => #7FFFD4

chartreuse => #7FFF00

sienna => #A0522D

cornflower => #6495ED

maroon => #B03060

Membership

Membership tests use the in and not in operators and work only on keys:

>>> symbols = dict(usd='\u0024', gbp='\u00a3', nzd='\u0024', krw='\u20a9', eur='\u20ac', jpy='\u00a5', nok='kr', ils='\u20aa', hhg='Pu')

>>> symbols

{'gbp': '£', 'eur': '€', 'nok': 'kr', 'ils': '₪', 'usd': '$', 'hhg': 'Pu', 'nzd': '$', 'jpy': '¥', 'krw': '₩'}

>>>

>>> 'nzd' in symbols

True

>>>

>>> 'mkd' in symbols

False

The del keyword is used to remove entries from a dictionary:

>>> z = {'H': 1, 'Tc': 43, 'Xe': 54, 'Un': 137, 'Rf': 104, 'Fm': 100}

>>> z

{'Un': 137, 'Xe': 54, 'Rf': 104, 'Fm': 100, 'Tc': 43, 'H': 1}

>>>

>>> del z['Un']

>>> z

{'Xe': 54, 'Rf': 104, 'Fm': 100, 'Tc': 43, 'H': 1}

Mutability

Dictionary keys are immutable, values can be modified. For example, can use augmented assignment operator to append new elements to one of the entry values:

>>> m = {'H': [1, 2, 3], 'He': [3, 4], 'Li': [6, 7], 'Be': [7, 9, 10], 'B': [10, 11], 'C': [11, 12, 13, 14]}

>>> m['H'] += [4, 5, 6, 7]

>>> m['H']

[1, 2, 3, 4, 5, 6, 7]

The dictionary itself is mutable; for example, new entries can be added after it has been created:

>>> m['N'] = [13, 14, 15]

>>> m

{'C': [11, 12, 13, 14], 'He': [3, 4], 'Be': [7, 9, 10], 'Li': [6, 7], 'N': [13, 14, 15], 'H': [1, 2, 3, 4, 5, 6, 7], 'B': [10, 11]}

The pprint module is part of the Python standard library. It contains a function also named pprint, so bind the function to a different name (here pp) when importing, to avoid confusing it with the module:

>>> from pprint import pprint as pp

>>> pp(m)

{'B': [10, 11],
 'Be': [7, 9, 10],
 'C': [11, 12, 13, 14],
 'H': [1, 2, 3, 4, 5, 6, 7],
 'He': [3, 4],
 'Li': [6, 7],
 'N': [13, 14, 15]}

A set is an unordered collection of unique elements. The collection is mutable (elements can be added to and removed from the set), but the elements themselves must be immutable.

Literal form is similar to dictionary:

>>> p = {6, 28, 496, 8128, 33550336}

>>> p

{33550336, 496, 28, 6, 8128}

>>> type(p)

<class 'set'>

Recall that empty curly braces create an empty dictionary, so to create an empty set, use the set constructor with no arguments:

>>> d = {}

>>> type(d)

<class 'dict'>

>>>

>>> e = set()

>>> e

set()

The set constructor can create a set from any iterable series (such as a list):

>>> s = set([2, 4, 26, 64, 4096, 65536, 262144])

>>> s

{64, 4096, 2, 65536, 4, 262144, 26}

Duplicates are discarded; a common pattern is to use sets to remove duplicate items from a series of objects:

>>> t = [1, 4, 2, 1, 7, 9, 9]

>>> set(t)

{1, 2, 4, 9, 7}

Sets are iterable, but the order is arbitrary:

>>> for x in {1, 2, 4, 8, 16, 32}:

...     print(x)

...

32

1

2

4

8

16

Membership testing uses the in and not in operators:

>>> q = {2, 9, 6, 4}

>>> 3 in q

False

>>> 3 not in q

True

Use the add method to add a single element. Adding an element that already exists has no effect and does not generate an error.

>>> k = {81, 108}

>>> k

{81, 108}

>>> k.add(54)

>>> k

{81, 108, 54}

>>> k.add(108)

>>> k

{81, 108, 54}

Multiple elements can be added at once using the update method, which takes any iterable series, including another set:

>>> k.update([37, 128, 87])

>>> k

{128, 81, 108, 37, 87}

>>> k.update({7, 8})

>>> k

{128, 81, 7, 37, 87, 8, 108}

The remove method removes an element from the set, but raises a KeyError if the element is not a member of the set:

>>> k.remove(81)

>>> k

{128, 7, 37, 87, 8, 108}

>>> k.remove(999)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

KeyError: 999

The discard method also removes an element from the set, but has no effect (and raises no error) if the element is not a member of the set:

>>> k.discard(999)

>>> k

{128, 7, 37, 87, 8, 108}

The copy method performs a shallow copy of the set, copying references, but not the objects they refer to:

>>> j = k.copy()

>>> j

{128, 37, 87, 7, 8, 108}

A set can also be copied by passing it to the set constructor:

>>> m = set(j)

>>> m

{128, 37, 87, 7, 8, 108}

Set Algebra

Powerful operations to compute set unions, differences, and intersections, and to evaluate whether two sets have subset, superset, or disjoint relations.

>>> blue_eyes = {'Olivia', 'Harry', 'Lily', 'Jack', 'Amelia'}

>>> blond_hair = {'Harry', 'Jack', 'Amelia', 'Mia', 'Joshua'}

>>> smell_hcn = {'Harry', 'Amelia'}

>>> taste_ptc = {'Harry', 'Lily', 'Amelia', 'Lola'}

>>> o_blood = {'Mia', 'Joshua', 'Lily', 'Olivia'}

>>> b_blood = {'Amelia', 'Jack'}

>>> a_blood = {'Harry'}

>>> ab_blood = {'Joshua', 'Lola'}

The union method collects all elements that are in either or both sets, for example, to find all people with blue eyes, blond hair, or both:

>>> blue_eyes.union(blond_hair)

{'Lily', 'Joshua', 'Harry', 'Jack', 'Amelia', 'Mia', 'Olivia'}

union is commutative; the operands can be swapped to get equivalent results:

>>> blue_eyes.union(blond_hair) == blond_hair.union(blue_eyes)

True

intersection collects only elements that are present in both sets, for example, to find all people with blue eyes and blond hair:

>>> blue_eyes.intersection(blond_hair)

{'Jack', 'Harry', 'Amelia'}

intersection is also commutative.

The difference method finds all elements in the first set that are not in the second set, for example, to find people with blond hair who don't have blue eyes:

>>> blond_hair.difference(blue_eyes)

{'Joshua', 'Mia'}

difference is not commutative.

symmetric_difference collects all the elements that are in the first set or the second set, but not both, for example, find people who have exclusively blond hair or blue eyes but not both:

>>> blond_hair.symmetric_difference(blue_eyes)

symmetric_difference is commutative.
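The commutativity claims above can be checked directly with the same example data (only blue_eyes and blond_hair are needed). The results are compared as set equalities, since the display order of a set is arbitrary:

```python
blue_eyes = {'Olivia', 'Harry', 'Lily', 'Jack', 'Amelia'}
blond_hair = {'Harry', 'Jack', 'Amelia', 'Mia', 'Joshua'}

# union and intersection: operand order doesn't matter
print(blue_eyes.union(blond_hair) == blond_hair.union(blue_eyes))  # True
print(blue_eyes.intersection(blond_hair) == blond_hair.intersection(blue_eyes))  # True

# difference: operand order matters
print(blond_hair.difference(blue_eyes) == {'Mia', 'Joshua'})   # True
print(blue_eyes.difference(blond_hair) == {'Olivia', 'Lily'})  # True

# symmetric_difference: operand order doesn't matter
sym = blond_hair.symmetric_difference(blue_eyes)
print(sym == blue_eyes.symmetric_difference(blond_hair))  # True
print(sym == {'Olivia', 'Lily', 'Mia', 'Joshua'})         # True
```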

Predicate Methods

Predicate methods provide information about the relationships between sets.

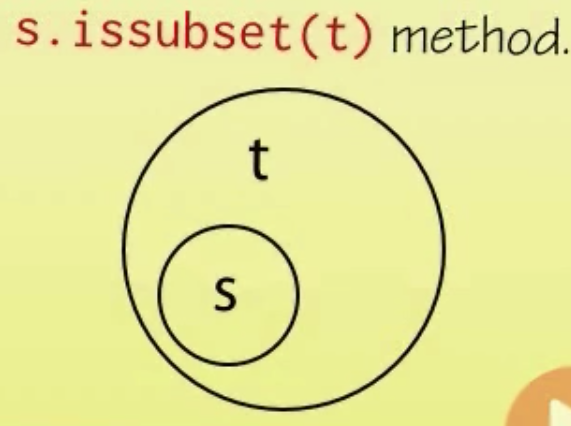

Subset

Check if one set is a setset of another, for example, do all the people that can smell hydrogen cyanide also have blonde hair:

>>> smell_hcn.issubset(blond_hair)

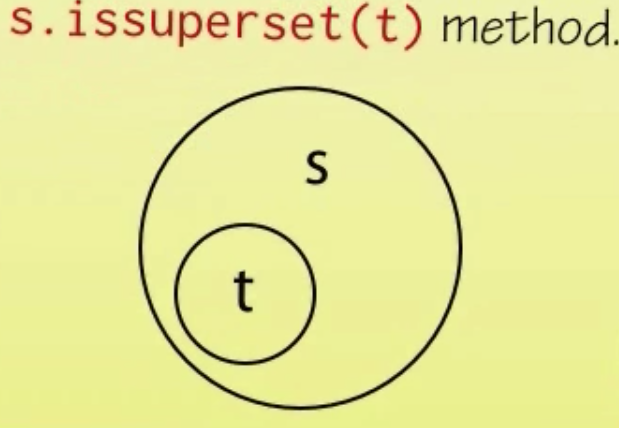

>>> TrueSuperset

For example, can all the people who smell HCN also taste PTC:

>>> taste_ptc.issuperset(smell_hcn)

True

Disjoint

Test that two sets have no members in common.

>>> a_blood.isdisjoint(o_blood)

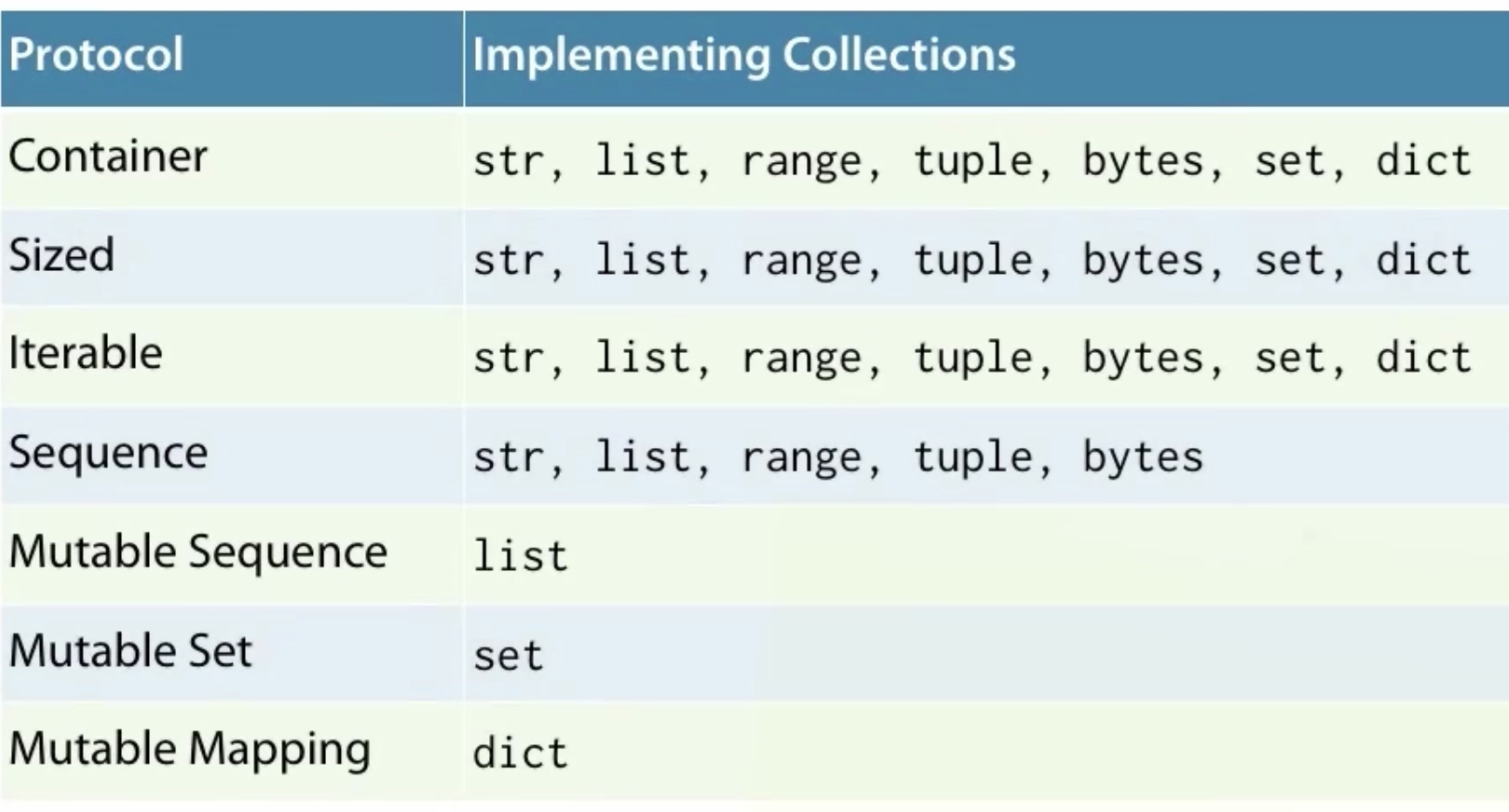

TrueA protocol is a set of operations or methods that a type must support if it is to implement that protocol. Protocol doesn't need to be defined in src (like Java interface).

Container Protocol requires that membership testing is supported using in and not in operators.

Sized Protocol requires that number of elements in collection can be determined by passing the collection to the built-in len(s) function.

Iterable Protocol requires that an iterator can be produced with built-in iter(s) and can be used with for loops:

for item in iterable:
    do_something(item)

Sequence Protocol requires that:

- Elements can be retrieved using square brackets and an integer index: item = seq[index]

- Elements can be found by value: index = seq.index(item)

- Items can be counted: num = seq.count(item)

- A reversed sequence can be produced using the reversed built-in function: r = reversed(seq)
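As a sketch of how a custom type can support these protocols, the hypothetical SortedItems class below subclasses collections.abc.Sequence from the standard library. It defines only __getitem__ and __len__; the abstract base class supplies membership testing, iteration, reversed(), index() and count() as mixin methods:

```python
from collections.abc import Sequence


class SortedItems(Sequence):
    """Hypothetical read-only sequence that keeps its items sorted."""

    def __init__(self, items=()):
        self._items = sorted(items)

    def __getitem__(self, index):
        # Integer indexing is delegated to the underlying list
        return self._items[index]

    def __len__(self):
        return len(self._items)


s = SortedItems([7, 3, 9, 3])
print(3 in s)             # Container protocol: True
print(len(s))             # Sized protocol: 4
print(list(s))            # Iterable protocol: [3, 3, 7, 9]
print(s[0], s.index(9))   # Sequence protocol: 3 3
print(s.count(3))         # 2
print(list(reversed(s)))  # [9, 7, 3, 3]
```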

Mechanism for stopping normal program flow and continuing at some surrounding context or code block. (Python's exception handling is similar to other imperative languages such as C++ or Java).

Raise an exception to interrupt program flow.

Handle an exception to resume control.

Unhandled exceptions will terminate the program.

Exception objects contain information about the exception event such as where it occurred.

Import this module into repl:

>>> from exceptional import convert

Try a normal usage:

>>> convert("33")

33

Try exceptional usage; the int constructor will raise an exception on unexpected input:

>>> convert("hedgehog")

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/Users/dbaron/projects/python-pluralsight/code/exceptional.py", line 6, in convert

x = int(s)

ValueError: invalid literal for int() with base 10: 'hedgehog'

The ValueError displayed in the stack trace is the type of the exception object, and the error message "invalid literal..." is part of the payload of the exception object.

Exception propagates across several levels in the call stack.
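A minimal sketch of propagation, using hypothetical parse, total and report functions: the ValueError raised by int() inside parse passes unhandled through total (and the generator expression it uses) until the try...except in report catches it:

```python
def parse(text):
    # int() raises ValueError for non-numeric input; nothing here handles it
    return int(text)


def total(texts):
    # No handler here either, so the exception passes straight through
    return sum(parse(t) for t in texts)


def report(texts):
    try:
        return "total = {}".format(total(texts))
    except ValueError as e:
        # The exception raised further down the call stack is handled here
        return "could not compute total: {}".format(e)


print(report(["1", "2", "3"]))
# total = 6
print(report(["1", "hedgehog", "3"]))
# could not compute total: invalid literal for int() with base 10: 'hedgehog'
```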

To make the code more robust, handle ValueError using try...except:

def convert(s):
    '''Convert to an integer.'''
    try:
        x = int(s)
    except ValueError:
        x = -1
    return x

try block contains code that could raise an exception.

except block contains code that performs error handling if an exception is raised.

Each try block can have multiple except blocks to handle exceptions of different types:

def convert(s):
    '''Convert to an integer.'''
    try:
        x = int(s)
        print("Conversion succeeded! x =", x)
    except ValueError:
        print("Conversion failed.")
        x = -1
    except TypeError:
        print("Conversion failed.")
        x = -1
    return x

To avoid code duplication, a single except block can accept a tuple of exception types:

def convert(s):
    '''Convert to an integer.'''
    x = -1
    try:
        x = int(s)
        print("Conversion succeeded! x =", x)
    except (ValueError, TypeError):
        print("Conversion failed.")
    return x

It's not useful to catch programming errors such as IndentationError, SyntaxError and NameError; these should be corrected during development. Catching them as exceptions is only useful when developing tools such as an IDE.

If there's no code to handle an exception, it's an error to have an empty block, so instead use pass, a special statement that does nothing:

def convert(s):
    '''Convert to an integer.'''
    x = -1
    try:
        x = int(s)
        print("Conversion succeeded! x =", x)
    except (ValueError, TypeError):
        pass
    return x

The above code can be simplified further with multiple return statements:

def convert(s):
    '''Convert to an integer.'''
    try:
        return int(s)
    except (ValueError, TypeError):
        return -1

Can get a named reference to the exception object using the as clause, to get more details about what went wrong.

Note need to import sys module to print to standard error:

import sys

def convert(s):
    '''Convert to an integer.'''
    try:
        return int(s)
    except (ValueError, TypeError) as e:
        # exception objects can be converted to strings using str constructor
        print("Conversion error: {}".format(str(e)), file=sys.stderr)
        return -1

Rather than returning an error code (which can be ignored by upstream callers, causing problems when the result is used), it's more Pythonic to re-raise the exception object that is currently being handled:

def convert_raise(s):
    '''Convert to an integer.'''
    try:
        return int(s)
    except (ValueError, TypeError) as e:
        print("Conversion error: {}".format(str(e)), file=sys.stderr)
        raise

- Callers need to know what exceptions to expect and when, so they can put appropriate exception handlers in place. Document all exceptions in the docstring.

- Use exceptions that users will anticipate.

- Standard exceptions are usually the best choice. For example, if a function parameter is supplied with an illegal value, raise a ValueError by using it as a constructor, passing in a string error message.
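For example, a hypothetical mean function (not from the course) that documents the exception it raises in its docstring and uses the standard ValueError, constructed with a message, for an illegal argument:

```python
def mean(values):
    """Return the arithmetic mean of a series of numbers.

    Args:
        values: An iterable series of numbers.

    Returns:
        The mean of the values.

    Raises:
        ValueError: If values is empty.
    """
    values = list(values)
    if not values:
        # A standard exception type, constructed with a descriptive message
        raise ValueError("mean() requires at least one value")
    return sum(values) / len(values)


print(mean([1, 2, 3, 4]))  # 2.5
try:
    mean([])
except ValueError as e:
    print(e)  # mean() requires at least one value
```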

Exceptions are part of families of related functions referred to as "protocols".

For example, objects that implement the sequence protocol raise an IndexError exception for indices which are out of range.

Exceptions that a function raises are part of its specification, just like the arguments it accepts, so they should be implemented and documented accordingly.

Recommend to use common/existing exception types when possible, rather than creating your own. Example, IndexError, KeyError, ValueError, TypeError.

IndexError

Raised when an integer index is out of range. For example, indexing past the end of a list:

>>> z = [1, 4, 2]

>>> z[3]

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

IndexError: list index out of range

ValueError

Raised when an object is of the right type but contains an inappropriate value. For example, constructing an int from a non-numeric string:

>>> int("jim")

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

ValueError: invalid literal for int() with base 10: 'jim'

KeyError

Raised when a lookup in a mapping fails. For example, looking up a non-existent key in a dictionary:

>>> codes = dict(gb=44, us=1, no=47, fr=33, es=34)

>>> codes['de']

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

KeyError: 'de'

Do Not Guard Against Type Errors

Guarding against type errors runs against dynamic typing and limits the re-use potential of code. It's generally not worth the trouble; just let it fail.

The two different approaches to dealing with a program that might fail:

- LBYL (Look Before You Leap): check that all preconditions are met in advance of attempting an error-prone operation.

- EAFP (It's Easier to Ask Forgiveness than Permission): blindly hope for the best, but be prepared to deal with the consequences if something goes wrong.

Python favours EAFP because it puts the primary logic of the happy path in its most readable form, with deviations from the normal flow handled separately rather than interspersed with the main flow.

For example, open a file and read some data from it, first the LBYL approach:

import os

p = '/path/to/datafile.dat'

if os.path.exists(p):
    process_file(p)
else:
    print('No such file as {}'.format(p))

Problems: this only checks for existence, but there could be other problems, e.g. the file exists but contains garbage, or the path is a directory. Checking for all of these would complicate the code further.

A more subtle problem is a race condition: it's possible for the file to be deleted by another process after the existence check but before process_file(p) is executed, which means error handling code is still needed for process_file.

EAFP approach is simpler, just attempt the operation without any advance checks, and handle any errors that may result:

import os

p = '/path/to/datafile.dat'

try:
    process_file(p)
except OSError as e:
    print('Could not process file because {}'.format(str(e)))

Use try...finally to run code such as cleanup, regardless of whether an exception occurred or not.

For example, a function that changes cwd, creates a new dir at that location, then restores to the original directory:

import os

def make_at(path, dir_name):
    original_path = os.getcwd()
    os.chdir(path)
    os.mkdir(dir_name)
    os.chdir(original_path)

If os.mkdir fails, the line that restores the original cwd will never run, resulting in an unintended side effect (leaving the process in a different location from where it started).

Use finally to run chdir no matter what happens:

import os

def make_at(path, dir_name):
    original_path = os.getcwd()
    try:
        os.chdir(path)
        os.mkdir(dir_name)
    finally:
        os.chdir(original_path)

Can also combine finally with except. The finally block will run even if an OSError is raised and handled:

import os
import sys

def make_at(path, dir_name):
    original_path = os.getcwd()
    try:
        os.chdir(path)
        os.mkdir(dir_name)
    except OSError as e:
        print(e, file=sys.stderr)
        raise
    finally:
        os.chdir(original_path)

Errors should never pass silently, unless explicitly silenced.
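One way to check that the finally block really restores the working directory is to run make_at twice inside a throwaway directory from the standard tempfile module (the 'reports' directory name is arbitrary). The second call fails because the directory already exists:

```python
import os
import sys
import tempfile


def make_at(path, dir_name):
    original_path = os.getcwd()
    try:
        os.chdir(path)
        os.mkdir(dir_name)
    except OSError as e:
        print(e, file=sys.stderr)
        raise
    finally:
        os.chdir(original_path)


start = os.getcwd()
with tempfile.TemporaryDirectory() as tmp:
    make_at(tmp, 'reports')      # succeeds
    created = os.path.isdir(os.path.join(tmp, 'reports'))
    try:
        make_at(tmp, 'reports')  # fails: the directory already exists
    except FileExistsError:      # a subclass of OSError
        pass
    # In both cases the finally block restored the working directory
    print(os.getcwd() == start)  # True
print(created)                   # True
```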

Detecting a single keypress from Python (such as "press any key") requires operating-system-specific modules. Can't use the built-in input function, because that waits for the user to press the Return key before returning a string.

In Windows use msvcrt (Microsoft Visual C Runtime). On Linux and OSX, use sys, tty, termios.
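A sketch of such a function, assuming the approach described above: try to import msvcrt and fall back to the POSIX modules if that import fails (itself an EAFP use of ImportError). The getkey name is illustrative:

```python
try:
    # Windows: the Microsoft Visual C Runtime module
    import msvcrt

    def getkey():
        """Wait for a single keypress and return it (as bytes on Windows)."""
        return msvcrt.getch()

except ImportError:
    # Linux and macOS: put the terminal into raw mode temporarily
    import sys
    import termios
    import tty

    def getkey():
        """Wait for a single keypress and return it as a str."""
        fd = sys.stdin.fileno()
        original_attributes = termios.tcgetattr(fd)
        try:
            tty.setraw(fd)
            return sys.stdin.read(1)
        finally:
            # Always restore the terminal settings, even if reading fails
            termios.tcsetattr(fd, termios.TCSADRAIN, original_attributes)
```

Call getkey() from an interactive terminal; on POSIX systems, termios.tcgetattr fails if standard input is not a terminal (for example, when input is piped).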

A concise syntax for describing lists, sets or dictionaries in a declarative or functional style. Resulting shorthand is readable and expressive. Should be purely functional, i.e. no side effects.

Comprehension is enclosed in square brackets like a literal list, but contains fragment of declarative code that describe how to construct the elements of the list.

>>> words = "Why sometimes I have believed as many as six impossible things before breakfast".split()

>>> words

['Why', 'sometimes', 'I', 'have', 'believed', 'as', 'many', 'as', 'six', 'impossible', 'things', 'before', 'breakfast']

>>>

>>> my_comprehension = [len(word) for word in words]

>>> my_comprehension

[3, 9, 1, 4, 8, 2, 4, 2, 3, 10, 6, 6, 9]

The new list my_comprehension is formed by binding word to each value in words in turn, and evaluating the length of the word to create a new value.

General form of list comprehensions:

[ expr(item) for item in iterable ]

For each item in the iterable object (on the right), evaluate the expression on the left (which is usually in terms of the item but doesn't have to be), and use the result of that evaluation as the next element in the new list being constructed.

Equivalent code in imperative style, using for loop:

my_imperative = []
for word in words:
    my_imperative.append(len(word))

Note that the source object doesn't have to be a list; it can be any iterable object, such as a tuple.
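A quick check that the two styles produce the same list, and that the source can be any iterable (a tuple, or even a string):

```python
words = "Why sometimes I have believed as many as six impossible things before breakfast".split()

# Declarative: list comprehension
my_comprehension = [len(word) for word in words]

# Imperative: explicit loop with append
my_imperative = []
for word in words:
    my_imperative.append(len(word))

print(my_comprehension == my_imperative)         # True

# The source can be any iterable, e.g. a tuple or a string
print([len(word) for word in tuple(words)][:3])  # [3, 9, 1]
print([c.upper() for c in "abc"])                # ['A', 'B', 'C']
```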

Expression in terms of item can be any python expression.

>>> from math import factorial

>>> f = [len(str(factorial(x))) for x in range(20)]

>>> f

[1, 1, 1, 1, 2, 3, 3, 4, 5, 6, 7, 8, 9, 10, 11, 13, 14, 15, 16, 18]

Set comprehensions use similar syntax to list comprehensions, but with curly braces instead of square brackets:

{ expr(item) for item in iterable }

The factorial example from the list comprehension above contains duplicates; fix this by using a set comprehension:

>>> g = {len(str(factorial(x))) for x in range(20)}

>>> g

{1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 13, 14, 15, 16, 18}

But note that sets are unordered containers, so the results may not be in a meaningful order.

Dictionary comprehensions also use curly braces. They are distinguished from set comprehensions by providing two colon-separated expressions, for the key and the value:

{ key_expr: value_expr for item in iterable }

Example: invert a dictionary to support lookups in the opposite direction.

>>> from pprint import pprint as pp

>>>

>>> country_to_capital = {'United Kingdom': 'London', 'Brazil': 'Brasília', 'Morocco': 'Rabat', 'Sweden': 'Stockholm'}

>>> capital_to_country = {capital: country for country, capital in country_to_capital.items()}

>>> capital_to_country

{'Brasília': 'Brazil', 'London': 'United Kingdom', 'Stockholm': 'Sweden', 'Rabat': 'Morocco'}

Note that dictionary comprehensions do not usually operate directly on dictionary sources, because iterating over a dictionary yields only the keys.

To get access to both keys and values, use dictionary's items() method together with tuple unpacking, as shown above.

If a comprehension produces duplicate keys, later values overwrite earlier values for the same key. For example, map the first letter of each word to the word:

>>> words = ["hi", "hello", "foxtrot", "hotel"]

>>> word_map = {word[0]: word for word in words}

>>> word_map

{'h': 'hotel', 'f': 'foxtrot'}

Comprehension expressions can be as complex as you like, though for readability, complex logic should be extracted into separate functions.

import os

import glob

import pprint

file_sizes = {os.path.realpath(p): os.stat(p).st_size for p in glob.glob('*.py')}

pp = pprint.PrettyPrinter(width=41, compact=True)

pp.pprint(file_sizes)

All three collection comprehension types (list, set, dictionary) support an optional filtering clause to choose which items of the source are evaluated by the expression on the left:

[ expr(item) for item in iterable if predicate(item) ]

from math import sqrt

def is_prime(x):
    if x < 2:
        return False
    for i in range(2, int(sqrt(x)) + 1):
        if x % i == 0:
            return False
    return True

# list all primes less than 100
[x for x in range(101) if is_prime(x)]

The above example demonstrates the is_prime function being used as the filtering clause of a list comprehension.

Note the strange-looking syntax x for x. This is because the values are not being transformed by an expression; the expression in terms of x is x itself.
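To see the filtering clause at work in more than one comprehension type, here is the same is_prime function with a list comprehension and a set comprehension (the last-digit example is made up for illustration):

```python
from math import sqrt


def is_prime(x):
    if x < 2:
        return False
    for i in range(2, int(sqrt(x)) + 1):
        if x % i == 0:
            return False
    return True


# List comprehension with a filter: keeps the source order
primes = [x for x in range(101) if is_prime(x)]
print(primes[:10])  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
print(len(primes))  # 25

# Set comprehension with a filter and a transforming expression:
# the distinct final digits of those primes
last_digits = {x % 10 for x in range(101) if is_prime(x)}
print(sorted(last_digits))  # [1, 2, 3, 5, 7, 9]
```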

Filter predicate and transforming expression can be combined. For example, a dictionary comprehension that maps numbers with exactly 3 divisors to a tuple of those divisors:

prime_square_divisors = {x*x: (1, x, x*x) for x in range(101) if is_prime(x)}

Comprehensions and for loops iterate over the entire sequence by default, but sometimes more fine-grained control is required. Two important concepts to understand with respect to iteration:

Iterable protocol

Allows for passing an iterable object (usually a collection or stream of objects, such as a list) to the built-in iter() function to get an iterator for the iterable object:

iterator = iter(iterable)

Iterator protocol

Iterator objects support the iterator protocol, which requires that the iterator object can be passed to the built-in next() function to fetch the next item:

item = next(iterator)

Example: each call to next moves the iterator through the sequence. If you try to go past the end of the sequence, Python raises an exception of type StopIteration.

>>> seasons = ['Spring', 'Summer', 'Autumn', 'Winter']

>>> iterator = iter(seasons)

>>> next(iterator)

'Spring'

>>> next(iterator)

'Summer'

>>> next(iterator)

'Autumn'

>>> next(iterator)

'Winter'

>>> next(iterator)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

StopIteration

Example use of an iterator to build a utility that returns the first item in a series; it works on any iterable object, such as a list or set:

def first(iterable):
    """Return first item in series or raise a ValueError if series is empty"""
    iterator = iter(iterable)
    try:
        return next(iterator)
    except StopIteration:
        raise ValueError("iterable is empty")

>>> first(['first', 'second', 'third'])

'first'

>>> first({'first', 'second', 'third'})

'first'

>>> first(set())

Traceback (most recent call last):

File "iter-util.py", line 11, in <module>

first(set())

File "iter-util.py", line 7, in first

raise ValueError("iterable is empty")

ValueError: iterable is empty

Higher level constructs such as for loops and comprehensions are built on iterators.
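As a simplified model (not CPython's actual implementation), a for loop behaves roughly like this explicit use of iter(), next() and StopIteration; manual_for is a hypothetical name:

```python
def manual_for(iterable, action):
    """Roughly equivalent to: for item in iterable: action(item)"""
    iterator = iter(iterable)      # iterable protocol
    while True:
        try:
            item = next(iterator)  # iterator protocol
        except StopIteration:
            break                  # the sequence is exhausted
        action(item)


seen = []
manual_for(['Spring', 'Summer', 'Autumn', 'Winter'], seen.append)
print(seen)  # ['Spring', 'Summer', 'Autumn', 'Winter']
```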

A means to describe iterable sequences with code in functions. All generators are iterators.

Sequences are lazily evaluated, the next value in the sequence is computed on demand.

Lazy evaluation means we can model infinite sequences such as data streams from a sensor or an active log file.

Generator functions are composable into pipelines, for natural stream processing.

Generators are defined by any function that contains the yield keyword at least once. They may also contain the return keyword with no arguments. Like all functions, there's an implicit return at the end.

def gen123():
    yield 1
    yield 2
    yield 3

>>> g = gen123()

>>> g

<generator object gen123 at 0x10a37bbf8>

>>>

>>> next(g)

1

>>> next(g)

2

>>> next(g)

3

>>> next(g)

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

StopIteration

>>>

>>> for v in gen123():

...     print(v)

...

1

2

3

In the above example, g is a generator object. Generators are iterators, so you can work with them as such to retrieve successive values from the sequence, or use them anywhere iterators are accepted, such as for loops.

Each call to generator function returns a new generator object, therefore each instance can be advanced independently:

>>> h = gen123()

>>> i = gen123()

>>> h

<generator object gen123 at 0x10a37bc50>

>>> i

<generator object gen123 at 0x10a37bca8>

>>> next(h)

1

>>> next(h)

2

>>> next(i)

1

To understand how and when generators run their code:

>>> def gen246():

...     print("About to yield 2")

...     yield 2

...     print("About to yield 4")

...     yield 4

...     print("About to yield 6")

...     yield 6

...     print("About to return")

...

>>> g = gen246()

>>> next(g)

About to yield 2

2

>>> next(g)

About to yield 4

4

>>> next(g)

About to yield 6

6

>>> next(g)

About to return

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

StopIteration

Note that after calling g = gen246(), the generator object has been created and returned, but none of the code in the generator body has been executed yet.

Then calling next(g) runs the code just up until the first yield statement. Calling next(g) again, the generator resumes execution where it left off and runs until the next yield statement.

After the final yield has been executed, the next call to next(g) causes the function to execute until the return at the end of the function body, at which point StopIteration is raised.

- Generators resume execution

- Can maintain state in local variables

- Complex control flow

- Lazy evaluation

The advantage of the interleaved approach (see the run_pipeline() function in the example) is that distinct needs to do only just enough work to yield the first 3 distinct values, rather than process its entire source list; it pays to be lazy!
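The run_pipeline example itself isn't reproduced in these notes, so the following is only a minimal sketch under that assumption: a hypothetical take generator yields at most count items, a distinct generator skips repeats, and a recording source generator shows how little of the input is actually read:

```python
def take(count, iterable):
    """Yield at most count items from the front of iterable."""
    if count <= 0:
        return
    taken = 0
    for item in iterable:
        yield item
        taken += 1
        if taken == count:
            return


def distinct(iterable):
    """Yield items from iterable, skipping any already seen."""
    seen = set()
    for item in iterable:
        if item in seen:
            continue
        yield item
        seen.add(item)


consumed = []


def source():
    for item in [3, 6, 6, 2, 1, 1, 8, 8, 5]:
        consumed.append(item)  # record how much of the source is read
        yield item


def run_pipeline():
    return list(take(3, distinct(source())))


print(run_pipeline())  # [3, 6, 2]
print(consumed)        # [3, 6, 6, 2]: the rest of the source is never read
```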

- Just in Time Computation: computation is only run when the next result is requested

- Generators can be used to model infinite sequences, no data structure needs to be built to contain the entire sequence.

Example, Lucas series, which starts with 2 and 1. Each value after that is the sum of the preceding two values.

def lucas():
    yield 2
    a = 2
    b = 1
    while True:
        yield b
        # update a and b to hold the new previous two values (uses tuple unpacking)
        a, b = b, a + b

Generator expressions are a cross between comprehensions and generator functions. They use similar syntax to list comprehensions, but result in the creation of a generator object, which produces the specified sequence lazily.

# Note the use of outer parentheses instead of brackets

(expr(item) for item in iterable)

Useful when you want the lazy evaluation of generators with the declarative concision of comprehensions.

For example, this generator expression yields the first million square numbers:

>>> million_squares = (x*x for x in range(1, 1000001))

>>> million_squares

After the above line is executed, none of the million squares has been created. million_squares is a generator object that captures the specification of the sequence.

To force evaluation of the generator, use it to create a list:

>>> list(million_squares)

[1, 4, 9, 16, 25, 36, 49, 64, 81, 100, 121, 144, 169, 196, 225, 256, 289, 324, 361, 400, 441, 484, 529, 576, 625, 676, 729, 784, 841, 900, 961, 1024, 1089, 1156, 1225, 1296, 1369, 1444...

Since a generator object is an iterator, once it has been exhausted by the above call it will yield no more items, i.e. generators are single-use objects:

>>> list(million_squares)

[]

Each time a generator function is invoked, it returns a new generator object. To recreate a generator from a generator expression, the expression must be executed again.

Compute the sum of the first 10 million squares using the built-in sum function, which accepts an iterable series of numbers. A list comprehension would consume around 400 MB of memory, but the generator expression's memory usage is insignificant:

>>> sum(x*x for x in range(1, 10000001))

333333383333335000000

In the example above, no additional parentheses were supplied for the generator expression: the parentheses of the sum call also serve as those of the generator expression. They can be included but are not required.
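To get a feel for the memory difference, compare the size of a list with that of an equivalent generator object using sys.getsizeof. A smaller range is used here, and getsizeof counts only the container itself (not the int objects a list refers to), so the real gap is even larger:

```python
import sys

n = 100_000
squares_list = [x * x for x in range(1, n + 1)]  # every element built now
squares_gen = (x * x for x in range(1, n + 1))   # nothing built yet

# For the list: its array of references, which grows with n.
# For the generator: a small object whose size does not depend on n.
print(sys.getsizeof(squares_list))  # hundreds of kilobytes on CPython
print(sys.getsizeof(squares_gen))   # small, independent of n

# Both describe the same sequence (summing consumes the generator)
print(sum(squares_list) == sum(squares_gen))  # True
```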

Can also use if clause, for example, to compute sum of numbers from 0 to 1000, but only those that are prime:

>>> sum(x for x in range(1001) if is_prime(x))

76127

Python provides some built-in functions for performing common iterator operations, such as enumerate for producing integer indices, and sum for summing numbers.

In addition to built-in functions, the itertools module contains additional functions and generators for processing iterable streams of data.

islice performs lazy list slicing.

count is an open ended range.

>>> from itertools import islice, count

>>>

>>> thousand_primes = islice((x for x in count() if is_prime(x)), 1000)

>>> thousand_primes

<itertools.islice object at 0x10b224e58>

>>> list(thousand_primes)

[2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37, 41, 43, 47, 53, 59, 61, 67, 71, 73, 79, 83, 89, 97, 101, 103, 107, 109, 113, 127, 131, 137, 139, 149, 151, 157, 163, 167, 173, 179, 181...

>>>

>>> sum(islice((x for x in count() if is_prime(x)), 1000))

3682913

Two more useful built-in functions for iterator operations are any and all. They are equivalent to the logical operators or and and respectively, but for iterable series of booleans:

>>> any([False, False, True])

True

>>> all([False, False, True])

False

>>> all([True, True, True])

True

Use any together with a generator expression to determine whether there are any primes in a range:

>>> any(is_prime(x) for x in range(1328, 1361))

False

Check that all the given city names are proper nouns with initial upper case letters:

>>> all(name == name.title() for name in ['London', 'New York', 'Sydney'])

True

The last built-in for iterator operations is zip, used to synchronize iteration over two iterable series. For example, combine two columns of temperature data into pairs of corresponding readings:

>>> sunday = [12, 14, 15, 15, 17, 21, 22, 22, 23, 22, 20, 18]

>>> monday = [13, 14, 14, 14, 16, 20, 21, 22, 22, 21, 19, 17]

>>>

>>> for item in zip(sunday, monday):

...     print(item)

...

(12, 13)

(14, 14)

(15, 14)

(15, 14)

(17, 16)

(21, 20)

(22, 21)

(22, 22)

(23, 22)

(22, 21)

(20, 19)

(18, 17)

zip yields tuples when iterating, so it can be used together with tuple unpacking in a for loop:

>>> for sun, mon in zip(sunday, monday):

...     print("average =", (sun + mon) / 2)

...

average = 12.5

average = 14.0

average = 14.5

average = 14.5

average = 16.5

average = 20.5

average = 21.5

average = 22.0

average = 22.5

average = 21.5

average = 19.5

average = 17.5

zip can accept any number of iterable arguments:

>>> tuesday = [2, 2, 3, 7, 9, 10, 11, 12, 10, 9, 8, 8]

>>> for temps in zip(sunday, monday, tuesday):

...     print("min={:4.1f}, max={:4.1f}, average={:4.1f}".format(min(temps), max(temps), sum(temps) / len(temps)))

To get one long temperature series for all three days, use chain to lazily concatenate the iterables, which is different from simply concatenating several lists into one new list:

>>> from itertools import chain

>>> temperatures = chain(sunday, monday, tuesday)

>>> # check all temps above freezing point without memory impact of data duplication

>>> all(t > 0 for t in temperatures)

True

Putting it all together, demonstrate the composability of generator functions, generator expressions, pure predicate functions and for loops:

>>> for x in (p for p in lucas() if is_prime(p)):

...     print(x)

...

2

3

7

11

29

47

199

521

2207

3571

9349

3010349

54018521

370248451

6643838879

119218851371

5600748293801

688846502588399

...

Classes provide the ability to create custom types, because sometimes Python's built-in types are not enough to solve the problem. Classes define the structure and behaviour of objects. An object's class controls its initialization.

Classes make complex problems tractable, but can also make simple solutions overly complex. Python is object-oriented but doesn't force you to use classes until you need them.

class keyword to define new classes. By convention, class names use CamelCase.

Just like def, class is a statement that can occur anywhere in a program. It binds a class definition to a class name.
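The airtravel module's contents aren't shown in these notes; a minimal sketch, assuming the simplest possible Flight class, is below. Because class is an ordinary statement, running it inside a function (the hypothetical make_class) creates a new class object on every call:

```python
# airtravel.py (hypothetical minimal contents)

class Flight:
    pass


def make_class():
    # class is a statement like def: each execution binds a new class object
    class Local:
        pass
    return Local


f = Flight()
print(type(f) is Flight)             # True
print(make_class() is make_class())  # False
```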

Import the class object in the repl:

>>> from airtravel import Flight

>>> Flight

<class 'airtravel.Flight'>

To use the class, call it just like any other function. The constructor returns a new object, which is assigned here to the name f:

>>> f = Flight()

>>> type(f)

<class 'airtravel.Flight'>

Method: a function defined within a class.

Instance methods: functions which can be called on objects.

self: the first argument to instance methods.

>>> f = Flight()

>>> f.number()

Note that we don't pass f as an argument to the number method, because f.number() is syntactic sugar for Flight.number(f).
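A minimal sketch of that equivalence, assuming a simplified Flight whose number() method just returns a fixed string (the class has no initializer at this stage):

```python
class Flight:
    """Simplified stand-in for airtravel.Flight (assumed for illustration)."""

    def number(self):
        # self is the instance the method was called on
        return "SN060"


f = Flight()
# Calling through the instance passes f as self automatically...
print(f.number())        # SN060
# ...which is the same as calling the function on the class explicitly
print(Flight.number(f))  # SN060
```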

If provided, the __init__() method is called as part of creating a new object. It must be named with double underscores on both sides. The first argument to __init__ is self. The initializer does not return anything; it just modifies the object referred to by self.

__init__ is an initializer, not a constructor. Its purpose is to configure an object that already exists by the time it's called. The constructor is provided by the Python runtime system, which checks for the presence of an initializer and calls it if present.

self is similar to this in Java.

Example initializer:

class Flight:

    def __init__(self, number):
        # assigning to an attribute that doesn't yet exist brings it into being
        self._number = number

    def number(self):
        return self._number

Note the use of _number to avoid a name clash with the number() method: methods are functions, functions are objects, and these function objects are bound to attributes, so an instance attribute called number would hide the method. Also, by convention, implementation details not intended for public use start with an underscore.
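To see why the leading underscore matters, here is a hypothetical variant (BadFlight, not part of the course code) that stores the number in an attribute named number. The instance attribute is found before the method of the same name, so calling it fails:

```python
class BadFlight:

    def __init__(self, number):
        # This instance attribute has the same name as the method below
        self.number = number

    def number(self):
        return self.number


f = BadFlight("SN060")
print(f.number)  # SN060: the string stored on the instance, not the method
try:
    f.number()
except TypeError as e:
    print("TypeError:", e)  # 'str' object is not callable
```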

Arguments passed to constructor will be forwarded to initializer:

>>> f = Flight('SN060')

>>> f.number()

'SN060'

Class invariants: truths about an object that endure for its lifetime. For example, a flight number must always start with a two-letter uppercase airline code followed by a three-digit route number.

Class invariants are established in the __init__ method, which raises an exception if they are not satisfied.
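A minimal sketch of enforcing the flight-number invariant in __init__, assuming the rule stated above (two uppercase letters followed by three digits) and assuming ValueError as the exception type:

```python
class Flight:

    def __init__(self, number):
        # Class invariant: two-letter uppercase airline code + three-digit route number
        airline, route = number[:2], number[2:]
        if not (airline.isalpha() and airline.isupper()):
            raise ValueError("Invalid airline code in {!r}".format(number))
        if not (route.isdigit() and len(route) == 3):
            raise ValueError("Invalid route number in {!r}".format(number))
        self._number = number

    def number(self):
        return self._number


print(Flight("SN060").number())  # SN060
try:
    Flight("sn060")
except ValueError as e:
    print(e)  # Invalid airline code in 'sn060'
```

Because the check happens in the initializer, no Flight object with an invalid number can ever be created.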

Keyword arguments can be used when passing many values to a constructor, to make the code self-documenting:

a = Aircraft("G-EUPT", "Airbus A319", num_rows=22, num_seats_per_row=6)

Law of Demeter: the principle of least knowledge. Only talk to your friends.

An OOP principle: never call methods on objects you receive from other calls.
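A minimal sketch of following the Law of Demeter, assuming simplified Flight and Aircraft classes; the Aircraft.model() method name is an assumption for illustration. Callers ask the flight for its aircraft model instead of reaching through the flight to its aircraft:

```python
class Aircraft:

    def __init__(self, registration, model, num_rows, num_seats_per_row):
        self._registration = registration
        self._model = model
        self._num_rows = num_rows
        self._num_seats_per_row = num_seats_per_row

    def model(self):
        return self._model


class Flight:

    def __init__(self, number, aircraft):
        self._number = number
        self._aircraft = aircraft

    def aircraft_model(self):
        # Delegate to our own "friend" so callers never touch self._aircraft
        return self._aircraft.model()


f = Flight("BA758", Aircraft("G-EUPT", "Airbus A319", num_rows=22, num_seats_per_row=6))
# Preferred: talk only to the flight
print(f.aircraft_model())  # Airbus A319
# Avoided: f._aircraft.model() chains through another object's internals
```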

Now that the Flight class takes an instance of an Aircraft:

>>> f = Flight("BA758", Aircraft("G-EUPT", "Airbus A319", num_rows=22, num_seats_per_row=6))

Note: in the airtravel example, the boarding card printer will be added as a function rather than a class. Don't create a new class unless there's a good reason to.

Polymorphism: using objects of different types through a common interface. This applies to functions as well as to more complex objects.

For example, the make_boarding_cards method does not need to know the concrete card printer type, only the abstract details of its interface, i.e. just the order of its arguments. We could replace console_card_printer with an html_card_printer that accepts the same arguments; this is polymorphism.
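A minimal sketch of swapping printers, using a hypothetical standalone make_boarding_cards function (a simplification of the Flight method) and two hypothetical printers, text_card_printer and html_card_printer, that return strings so the results can be compared. Any callable accepting (passenger, seat, flight_number, aircraft) would work:

```python
def make_boarding_cards(passenger_seats, flight_number, aircraft, card_printer):
    # Relies only on card_printer being callable with these four arguments
    return [card_printer(passenger, seat, flight_number, aircraft)
            for passenger, seat in sorted(passenger_seats)]


def text_card_printer(passenger, seat, flight_number, aircraft):
    return "{} {} {} {}".format(passenger, flight_number, seat, aircraft)


def html_card_printer(passenger, seat, flight_number, aircraft):
    return "<p>{} | {} | {} | {}</p>".format(passenger, flight_number, seat, aircraft)


seats = [("Guido van Rossum", "12A"), ("Bjarne Stroustrup", "15F")]
for printer in (text_card_printer, html_card_printer):
    for card in make_boarding_cards(seats, "BA758", "Airbus A319", printer):
        print(card)
```

Neither printer shares a base class with the other; make_boarding_cards works with both simply because they accept the same arguments.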

Polymorphism in Python is achieved via duck typing: "When I see a bird that walks like a duck, swims like a duck and quacks like a duck, I call that bird a duck" (James Whitcomb Riley). An object's fitness for use is determined at the time of use. Unlike in statically typed languages, an object's suitability for use is not based on inheritance or a type hierarchy, but only on the attributes the object has at the time of use.

class Flight:
    ...

    def make_boarding_cards(self, card_printer):
        for passenger, seat in sorted(self._passenger_seats()):
            card_printer(passenger, seat, self.number(), self.aircraft_model())

    ...

# module level function
def console_card_printer(passenger, seat, flight_number, aircraft):
    output = "| Name: {0}" \
             " Flight: {1}" \
             " Seat: {2}" \
             " Aircraft: {3}" \
             " |".format(passenger, flight_number, seat, aircraft)
    banner = "+" + "-" * (len(output) - 2) + "+"
    border = "|" + " " * (len(output) - 2) + "|"
    lines = [banner, border, output, border, banner]
    card = "\n".join(lines)
    print(card)
    print()

...

# Usage
f = Flight("BA758", Aircraft("G-EUPT", "Airbus A319", num_rows=22, num_seats_per_row=6))
f.allocate_seat("12A", "Guido van Rossum")
f.allocate_seat("15F", "Bjarne Stroustrup")
f.make_boarding_cards(console_card_printer)

Duck typing is the basis for Python's collection protocols.
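As a minimal sketch of duck typing in the collection protocols, the hypothetical SeatMap class below inherits from nothing but object, yet works with len(), in, for loops and sorted() because it provides __len__, __iter__ and __contains__:

```python
class SeatMap:
    """Hypothetical container of allocated seats; no collection base class."""

    def __init__(self, seats):
        self._seats = list(seats)

    def __len__(self):
        return len(self._seats)

    def __iter__(self):
        return iter(self._seats)

    def __contains__(self, seat):
        return seat in self._seats


seats = SeatMap(["15F", "12A"])
print(len(seats))      # 2
print("12A" in seats)  # True
print(sorted(seats))   # ['12A', '15F']
```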

Inheritance: a mechanism whereby one class can be derived from a base class, allowing behaviour to be made more specific in the subclass. In Java, inheritance is required to achieve run-time polymorphism, but not in Python, because Python uses late binding: no attribute lookups or method calls are bound to actual objects until the point at which they are made.

Inheritance is most useful for sharing implementation between classes. The base class is specified in parentheses following the class name:

class Aircraft:

    def num_seats(self):
        rows, row_seats = self.seating_plan()
        return len(rows) * len(row_seats)


class AirbusA319(Aircraft):

    def seating_plan(self):
        return range(1, 23), "ABCDEF"

...

Inheritance creates very tight coupling between classes. Thanks to duck typing, it is not needed as often in Python.
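A minimal sketch of sharing num_seats() through inheritance, assuming a second hypothetical subclass Boeing777 with an illustrative seating plan. Thanks to late binding, the base-class method calls whichever seating_plan() the actual object provides:

```python
class Aircraft:

    def num_seats(self):
        # seating_plan() is looked up on the actual object at call time
        rows, row_seats = self.seating_plan()
        return len(rows) * len(row_seats)


class AirbusA319(Aircraft):

    def seating_plan(self):
        return range(1, 23), "ABCDEF"


class Boeing777(Aircraft):

    def seating_plan(self):
        # Hypothetical layout for illustration (no seat letter F or I)
        return range(1, 56), "ABCDEGHJK"


print(AirbusA319().num_seats())  # 22 rows * 6 seats = 132
print(Boeing777().num_seats())   # 55 rows * 9 seats = 495
```

Calling num_seats() on a plain Aircraft() fails with AttributeError, since the base class never defines seating_plan().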

open() is used to open a file. The most common arguments are:

- file: path to the file (required)
- mode: read/write/append, binary/text (optional, but recommended to specify for clarity)
- encoding: the text encoding to use

At file system level, files contain series of bytes. Python distinguishes between files open in binary and text modes.

Files opened in binary mode return and manipulate their contents as bytes objects without any decoding, reflecting the raw data in the file.

Files opened in text mode treat their contents as text strings of type str: the raw bytes are first decoded using a platform-dependent encoding or the specified encoding. By default, text mode also uses universal newlines, which translate between the newline character \n used in code and the platform-dependent representation in the raw bytes of the file, for example \r\n on Windows.
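A minimal sketch of the difference, using a temporary directory so nothing is left behind (the file name demo.txt is arbitrary). Raw bytes with mixed line endings are written in binary mode, then read back both as bytes and as text. In text mode the UTF-8 bytes are decoded to str and, with the default universal newlines, both \r\n and \n come back as \n:

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as tmpdir:
    path = os.path.join(tmpdir, "demo.txt")

    # Binary mode: write raw bytes exactly as given (é is two bytes in UTF-8)
    f = open(path, mode="wb")
    f.write(b"caf\xc3\xa9\r\nline two\n")
    f.close()

    # Binary mode: read raw bytes back, no decoding or newline translation
    f = open(path, mode="rb")
    raw = f.read()
    f.close()

    # Text mode: bytes are decoded and line endings normalized to \n
    f = open(path, mode="rt", encoding="utf-8")
    text = f.read()
    f.close()

print(raw)         # b'caf\xc3\xa9\r\nline two\n'
print(repr(text))  # 'café\nline two\n'
```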

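If no encoding is specified, open() uses a platform-dependent default (the current locale's preferred encoding), which is not guaranteed to be the same on every system, so it is safest to specify the encoding explicitly. This is separate from sys.getdefaultencoding(), which is the default used by str.encode() and bytes.decode() and is always 'utf-8' in Python 3: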

>>> import sys

>>> sys.getdefaultencoding()

'utf-8'

Using keyword arguments for clarity:

f = open('wasteland.txt', mode='wt', encoding='utf-8')

The first argument is the name of the file to be opened.

The mode argument is a string containing letters with different meanings, for example w for write and t for text.

open() modes

A mode string consists of exactly one of read, write, exclusive-creation or append mode (r/w/x/a), an optional plus (+) modifier, and an optional selector for text or binary mode (t/b, defaulting to text).

| Character | Meaning |
|---|---|
| r | open for reading (default) |
| w | open for writing, truncating the file first |
| x | open for exclusive creation, failing if the file already exists |
| a | open for writing, appending to the end of the file if it exists |
| b | binary mode |
| t | text mode (default) |
| + | open a disk file for updating (reading and writing) |
| U | universal newlines mode (deprecated and removed in Python 3.11; should not be used in new code) |

Typical mode strings: wb (write binary), at (append text), rt (read text).
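A minimal sketch of exclusive-creation mode, assuming a scratch file in a temporary directory: the first open() with mode 'xt' creates the file, while a second attempt on the same path raises FileExistsError instead of truncating it as 'w' would:

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as tmpdir:
    path = os.path.join(tmpdir, "new.txt")

    f = open(path, mode="xt", encoding="utf-8")  # file did not exist: created
    f.write("first\n")
    f.close()

    outcome = "opened"
    try:
        g = open(path, mode="xt", encoding="utf-8")  # file exists now
        g.close()
    except FileExistsError:
        outcome = "refused to overwrite"

    h = open(path, mode="rt", encoding="utf-8")
    content = h.read()  # original content is untouched
    h.close()

print(outcome)         # refused to overwrite
print(repr(content))   # 'first\n'
```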

Object returned from open is a file-like object. Can request help on instances at the repl:

>>> f = open('wasteland.txt', mode='wt', encoding='utf-8')

>>> help(f)

...

| write(self, text, /)

| Write string to stream.

| Returns the number of characters written (which is always equal to

| the length of the string).

...

write() returns the number of code points written to the file, not the number of bytes written after encoding and newline translation. Python's universal newline behaviour translates line endings to the platform's native line endings, which on Windows adds one extra byte per newline compared with Linux, and multi-byte encodings such as UTF-8 can also make the byte count larger. Do not rely on the sum of the values returned by write() being the size of the file in bytes.
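A minimal sketch of the mismatch, assuming a temporary file. newline="\r\n" is passed so that every \n is translated to \r\n on any platform, and "é" takes two bytes in UTF-8, so write() reports 5 characters while the file holds 7 bytes:

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as tmpdir:
    path = os.path.join(tmpdir, "count.txt")
    # newline="\r\n" forces Windows-style line endings regardless of platform
    f = open(path, mode="wt", encoding="utf-8", newline="\r\n")
    chars_written = f.write("café\n")  # counts code points: c, a, f, é, \n
    f.close()
    size_in_bytes = os.path.getsize(path)  # é is 2 bytes, \r\n is 2 bytes

print(chars_written)  # 5
print(size_in_bytes)  # 7
```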

Caller is responsible for providing newline characters:

>>> f.write('What are the roots that clutch, ')

32

>>> f.write('what branches grow\n')

19

>>> f.write('Out of this stony rubbish? ')

27

When finished writing, you must close the file with the close() method:

>>> f.close()

Writes are buffered, so the content is only guaranteed to be visible in the file after close() (or flush()) is called.

To read, use open() with a reading mode string such as rt (read text):

>>> g = open('wasteland.txt', mode='rt', encoding='utf-8')

To read a specific number of characters, pass that number to read() (in text mode, read() counts characters, not bytes):

>>> g.read(32)

'What are the roots that clutch, '

read() returns the text and advances the file pointer to the end of what was read. The return type is str when the file is opened in text mode.

To read all the remaining data, call read() with no arguments:

>>> g.read()

'what branches grow\nOut of this stony rubbish? '

Calling read() after the end of the file has been reached returns an empty string ''.
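Because read() returns '' at the end of the file, it can drive a simple chunked read loop. A minimal sketch, assuming a temporary file and an arbitrary chunk size of 8 characters (the := operator requires Python 3.8+):

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as tmpdir:
    path = os.path.join(tmpdir, "wasteland.txt")
    f = open(path, mode="wt", encoding="utf-8")
    f.write("What are the roots that clutch, what branches grow\n")
    f.close()

    g = open(path, mode="rt", encoding="utf-8")
    chunks = []
    while chunk := g.read(8):  # '' at end of file is falsy and stops the loop
        chunks.append(chunk)
    at_eof = g.read()  # still '' once the end has been reached
    g.close()

print("".join(chunks), end="")
print(repr(at_eof))  # ''
```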

When finished reading a file, remember to close() it.

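While the file is still open, use seek() to move the file pointer, passing in an offset; it returns the new file pointer position. For text files, the offset should be 0 or a value previously returned by tell():

>>> g.seek(0)

0

Using read() on text files is awkward. Python has better tools for reading a file line by line.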

readline() returns one line at a time, terminated by the newline character if one is present in the file. After the end of the file is reached, calling readline() returns an empty string.

>>> g.readline()

'What are the roots that clutch, what branches grow\n'

>>> g.readline()

'Out of this stony rubbish? '

>>> g.readline()

''

>>> g.seek(0)

0

To read all the lines in a file into a list (make sure there's enough memory), use readlines():

>>> g.readlines()

['What are the roots that clutch, what branches grow\n', 'Out of this stony rubbish? ']

>>> g.close()

Use mode a to open a file for writing, appending to the end of the file if it already exists.
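A minimal sketch of append mode, assuming a temporary file: opening with mode 'at' keeps the existing content and writes new text after it, whereas mode 'wt' would have truncated the file first:

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as tmpdir:
    path = os.path.join(tmpdir, "wasteland.txt")

    f = open(path, mode="wt", encoding="utf-8")  # create or truncate
    f.write("What are the roots that clutch, what branches grow\n")
    f.close()

    h = open(path, mode="at", encoding="utf-8")  # append: existing lines kept
    h.write("Out of this stony rubbish?\n")
    h.close()

    g = open(path, mode="rt", encoding="utf-8")
    lines = g.readlines()
    g.close()

print(lines)
```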